Introducing bidirectional streaming for real-time inference on Amazon SageMaker AI (Artificial Intelligence)

We’re introducing bidirectional streaming for Amazon SageMaker AI Inference, which transforms inference from a transactional exchange into a continuous conversation. This post shows you how to build and deploy a container with bidirectional streaming capability to a SageMaker AI endpoint. We also demonstrate how you can bring your own container or use our partner Deepgram’s pre-built models and containers on SageMaker AI to enable the bidirectional streaming feature for real-time inference.

The FBI warns of a surge in account takeover (ATO) fraud schemes and says that cybercriminals impersonating various financial institutions have stolen over $262 million in ATO attacks since the start of the year. […]

Tor has announced improved encryption and security for circuit traffic by replacing the old tor1 relay encryption algorithm with a new design called Counter Galois Onion (CGO). […]

New research has found that organizations in various sensitive sectors, including governments, telecoms, and critical infrastructure, are pasting passwords and credentials into online tools like JSONformatter and CodeBeautify that are used to format and validate code. Cybersecurity company watchTowr Labs said it captured a dataset of over 80,000 files on these sites, uncovering thousands of […]

Microsoft is investigating an Exchange Online service outage that is preventing customers from accessing their mailboxes using the classic Outlook desktop client. […]

State-linked hackers stayed under the radar by using a variety of commercial cloud services for command-and-control communications.

Despite possibly supplanting some young analysts, one Gen Z cybersecurity specialist sees AI helping teach those willing to learn and removing drudge work.

How to Implement Three Use Cases for the New Calendar-Based Time Intelligence (Towards Data Science)

Starting with the September 2025 release of Power BI, Microsoft introduced the new Calendar-based Time Intelligence feature. Let’s see what can be done by implementing three use cases. The future looks very interesting with this new feature.

The post How to Implement Three Use Cases for the New Calendar-Based Time Intelligence appeared first on Towards Data Science.

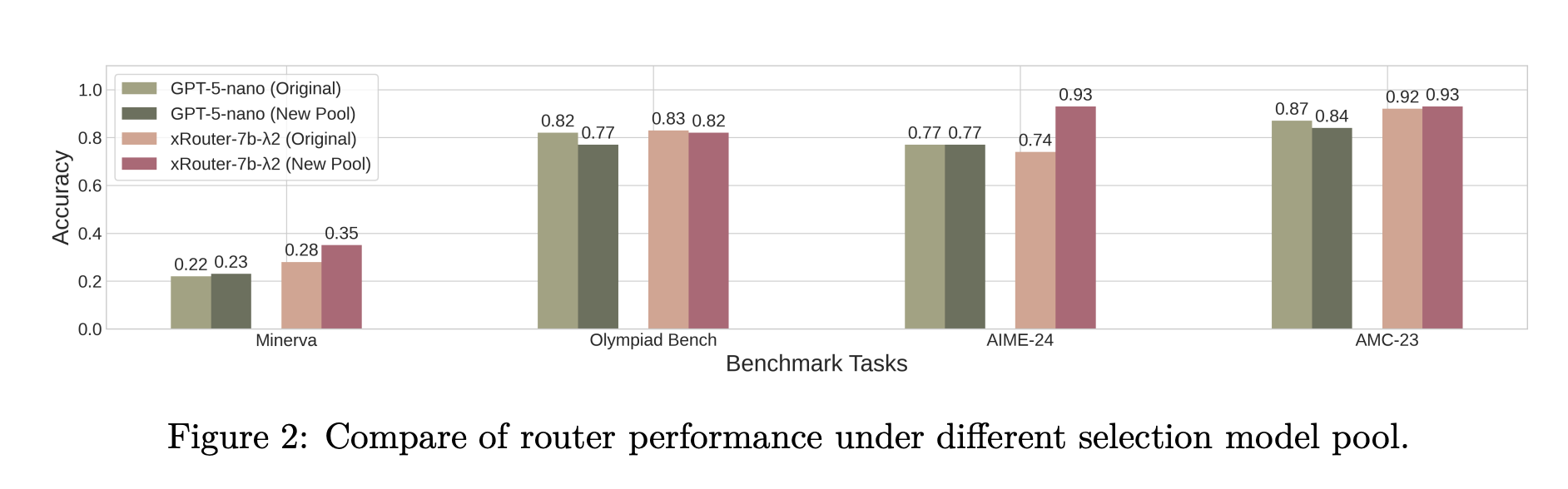

Salesforce AI Research Introduces xRouter: A Reinforcement Learning Router for Cost Aware LLM Orchestration (MarkTechPost)

When your application can call many different LLMs with very different prices and capabilities, who should decide which one answers each request? The Salesforce AI Research team introduces ‘xRouter’, a tool-calling-based routing system that targets this gap with a reinforcement-learning-based router and learns when to answer locally and when to call external models, while […]

The post Salesforce AI Research Introduces xRouter: A Reinforcement Learning Router for Cost Aware LLM Orchestration appeared first on MarkTechPost.

Risk management company Crisis24 has confirmed its OnSolve CodeRED platform suffered a cyberattack that disrupted emergency notification systems used by state and local governments, police departments, and fire agencies across the United States. […]