Are AI Browsers Any Good? A Day with Perplexity’s Comet and OpenAI’s Atlas (KDnuggets)

Understanding the underlying technology helps explain why AI browsers exhibit such uneven performance.

RISAT’s Silent Promise: Decoding Disasters with Synthetic Aperture Radar (Towards Data Science)

The high-resolution physics turning microwave echoes into real-time flood intelligence

The post RISAT’s Silent Promise: Decoding Disasters with Synthetic Aperture Radar appeared first on Towards Data Science.

5 Excel AI Lessons I Learned the Hard Way (KDnuggets)

This article transforms the unwelcome experiences into five comprehensive frameworks that will elevate your Excel-based machine learning work.

It’s the law of unintended consequences: equipping browsers with agentic AI opens the door to an exponential volume of prompt injections.

Microsoft warned users on Tuesday that FIDO2 security keys may prompt them to enter a PIN when signing in after installing Windows updates released since the September 2025 preview update. […]

South Korea’s financial sector has been targeted by what has been described as a sophisticated supply chain attack that led to the deployment of Qilin ransomware. “This operation combined the capabilities of a major Ransomware-as-a-Service (RaaS) group, Qilin, with potential involvement from North Korean state-affiliated actors (Moonstone Sleet), leveraging Managed Service Provider (MSP)

More than half of organizations surveyed aren’t sure they can secure non-human identities (NHIs), underscoring the lag between the rollout of these identities and the tools to protect them.

New Microsoft cloud updates support Indonesia’s long-term AI goals (AI News)

Indonesia’s push into AI-led growth is gaining momentum as more local organisations look for ways to build their own applications, update their systems, and strengthen data oversight. The country now has broader access to cloud and AI tools after Microsoft expanded the services available in the Indonesia Central cloud region, which first went live six

The post New Microsoft cloud updates support Indonesia’s long-term AI goals appeared first on AI News.

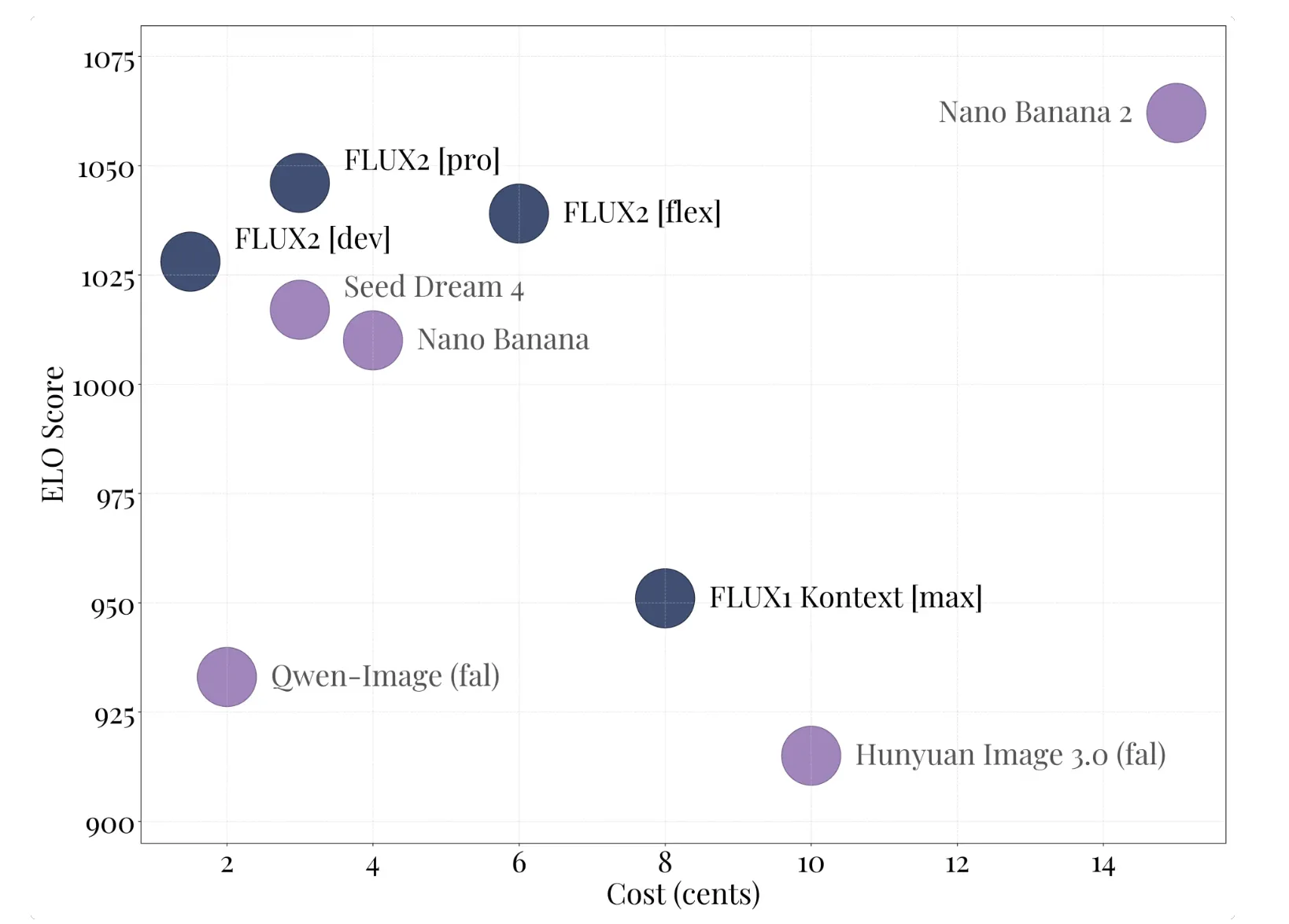

Black Forest Labs Releases FLUX.2: A 32B Flow Matching Transformer for Production Image Pipelines (MarkTechPost)

Black Forest Labs has released FLUX.2, its second generation image generation and editing system. FLUX.2 targets real world creative workflows such as marketing assets, product photography, design layouts, and complex infographics, with editing support up to 4 megapixels and strong control over layout, logos, and typography. FLUX.2 product family and FLUX.2 [dev] The FLUX.2 family

The post Black Forest Labs Releases FLUX.2: A 32B Flow Matching Transformer for Production Image Pipelines appeared first on MarkTechPost.

Estimating LLM Consistency: A User Baseline vs Surrogate Metrics (cs.AI updates on arXiv.org)

arXiv:2505.23799v4 Announce Type: replace-cross

Abstract: Large language models (LLMs) are prone to hallucinations and sensitive to prompt perturbations, often resulting in inconsistent or unreliable generated text. Different methods have been proposed to mitigate such hallucinations and fragility, one of which is to measure the consistency of LLM responses — the model’s confidence in the response or likelihood of generating a similar response when resampled. In previous work, measuring LLM response consistency often relied on calculating the probability of a response appearing within a pool of resampled responses, analyzing internal states, or evaluating logits of responses. However, it was not clear how well these approaches approximated users’ perceptions of consistency of LLM responses. To find out, we performed a user study ($n=2,976$) demonstrating that current methods for measuring LLM response consistency typically do not align well with humans’ perceptions of LLM consistency. We propose a logit-based ensemble method for estimating LLM consistency and show that our method matches the performance of the best-performing existing metric in estimating human ratings of LLM consistency. Our results suggest that methods for estimating LLM consistency without human evaluation are sufficiently imperfect to warrant broader use of evaluation with human input; this would avoid misjudging the adequacy of models because of the imperfections of automated consistency metrics.
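The resampling approach the abstract attributes to previous work (the probability of a response appearing within a pool of resampled responses) can be illustrated with a minimal sketch. This is not the paper's logit-based ensemble method. The function names, the exact-match comparison after whitespace and case normalization, and the sample data are all assumptions made for illustration; real systems would more likely compare responses by semantic similarity.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different strings match."""
    return " ".join(text.lower().split())


def resampling_consistency(response: str, resampled: list[str]) -> float:
    """Fraction of resampled responses that match the given response.

    Hypothetical helper: treats consistency as the empirical probability of
    seeing the same (normalized) answer again when the model is resampled.
    """
    if not resampled:
        raise ValueError("resampled pool must not be empty")
    target = normalize(response)
    matches = sum(1 for r in resampled if normalize(r) == target)
    return matches / len(resampled)


# Made-up pool of resampled answers to the same prompt (illustrative only).
pool = ["Paris", "paris", "Lyon", "Paris "]
score = resampling_consistency("Paris", pool)
```

A score near 1 would suggest the model tends to repeat the same answer, while a score near 0 would suggest it rarely does. The abstract's point is that surrogate scores of this kind may not track how people judge consistency.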
