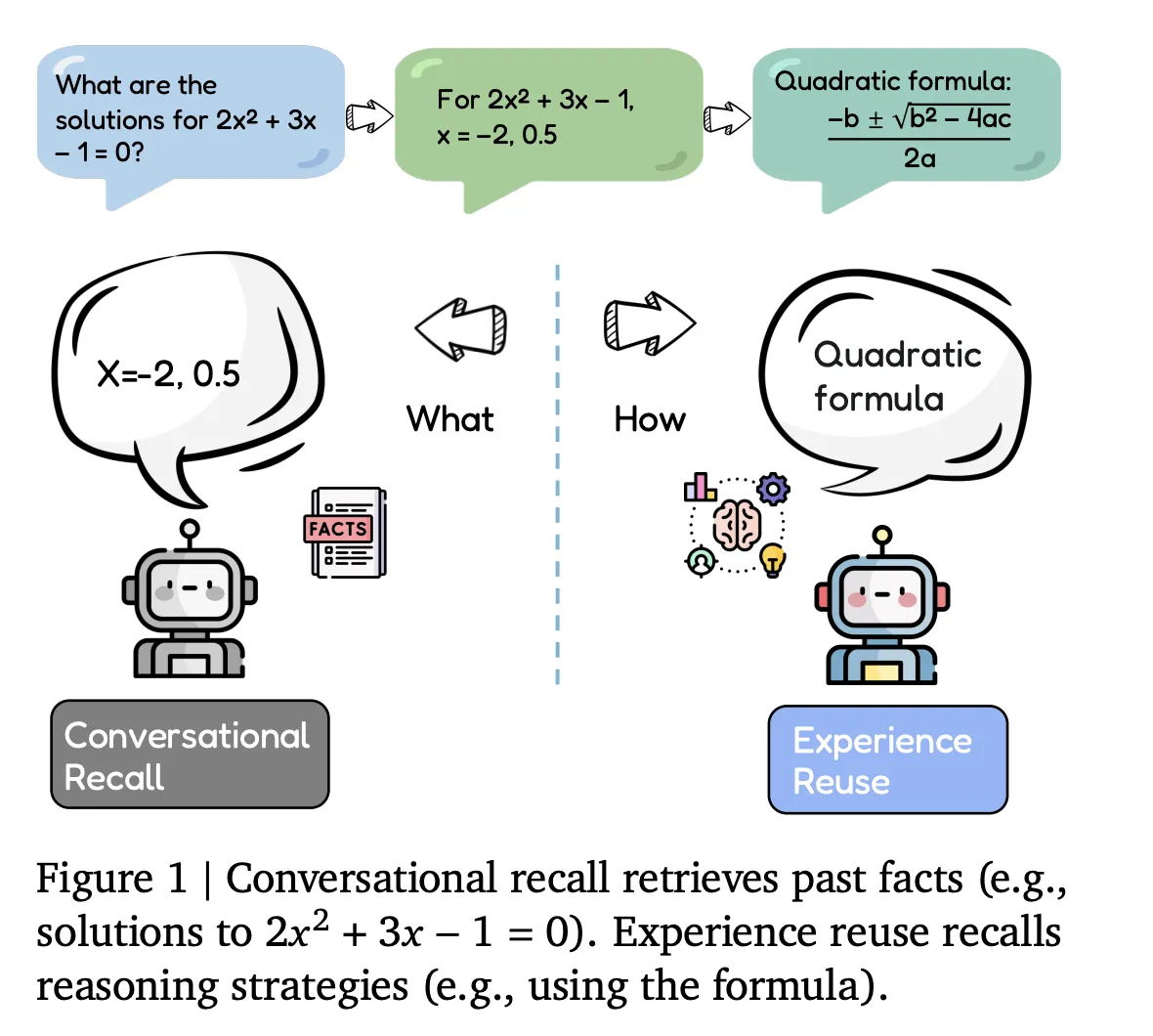

Google DeepMind Researchers Introduce Evo-Memory Benchmark and ReMem Framework for Experience Reuse in LLM Agents
Source: MarkTechPost

Large language model agents are starting to store everything they see, but can they actually improve their policies at test time from those experiences rather than just replaying context windows? Researchers from University of Illinois Urbana-Champaign and Google DeepMind propose Evo-Memory, a streaming benchmark and agent framework that targets this exact gap. Evo-Memory evaluates

The post Google DeepMind Researchers Introduce Evo-Memory Benchmark and ReMem Framework for Experience Reuse in LLM Agents appeared first on MarkTechPost.

New control system teaches soft robots the art of staying safe
Source: MIT News – Machine learning

MIT CSAIL and LIDS researchers developed a mathematically grounded system that lets soft robots deform, adapt, and interact with people and objects without violating safety limits.

The Machine Learning “Advent Calendar” Day 2: k-NN Classifier in Excel
Source: Towards Data Science

Exploring the k-NN classifier with its variants and improvements

The post The Machine Learning “Advent Calendar” Day 2: k-NN Classifier in Excel appeared first on Towards Data Science.

JSON Parsing for Large Payloads: Balancing Speed, Memory, and Scalability
Source: Towards Data Science

Benchmarking JSON libraries for large payloads

The post JSON Parsing for Large Payloads: Balancing Speed, Memory, and Scalability appeared first on Towards Data Science.

How to Vibe Code on a Budget
Source: KDnuggets

What if I told you that a powerful vibe coding workflow on par with Claude Code can cost you less than $10? Let me prove it.

How Proactive Cybersecurity Saves Money (and Reputation) (Sponsored)
Source: KDnuggets

The value of a modern company isn’t in its firewalls; it’s in its terabytes of proprietary, labeled data and the predictive models built upon them.

How to Use Simple Data Contracts in Python for Data Scientists
Source: Towards Data Science

Stop your pipelines from breaking on Friday afternoons using simple, open-source validation with Pandera.

The post How to Use Simple Data Contracts in Python for Data Scientists appeared first on Towards Data Science.

IBM cites agentic AI, data policies, and quantum as 2026 trends
Source: AI News

Enterprise leaders are entering 2026 with an uncomfortable mix of volatility, optimism, and pressure to move faster on AI and quantum computing, according to a paper published by the IBM Institute for Business Value. Its findings are based on more than 1,000 C-suite executives and 8,500 employees and consumers. While only around a third of

The post IBM cites agentic AI, data policies, and quantum as 2026 trends appeared first on AI News.

7 ChatGPT Tricks to Automate Your Data Tasks
Source: KDnuggets

This article explores how to transform ChatGPT from a chatbot into a powerful data assistant that streamlines the repetitive, the tedious, and the complex.

Maximizing the efficiency of human feedback in AI alignment: a comparative analysis
Source: cs.AI updates on arXiv.org

arXiv:2511.12796v2 Announce Type: replace-cross

Abstract: Reinforcement Learning from Human Feedback (RLHF) relies on preference modeling to align machine learning systems with human values, yet the popular approach of random pair sampling with Bradley-Terry modeling is statistically limited and inefficient under constrained annotation budgets. In this work, we explore alternative sampling and evaluation strategies for preference inference in RLHF, drawing inspiration from areas such as game theory, statistics, and social choice theory. Our best-performing method, Swiss InfoGain, employs a Swiss tournament system with a proxy mutual-information-gain pairing rule, which significantly outperforms all other methods in constrained annotation budgets while also being more sample-efficient. Even in high-resource settings, we can identify superior alternatives to the Bradley-Terry baseline. Our experiments demonstrate that adaptive, resource-aware strategies reduce redundancy, enhance robustness, and yield statistically significant improvements in preference learning, highlighting the importance of balancing alignment quality with human workload in RLHF pipelines.
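For readers unfamiliar with the baseline the abstract refers to, the following is a minimal sketch of random pair sampling with Bradley-Terry modeling. It is not the paper's Swiss InfoGain method, and it is not taken from the paper. The function names (`bt_win_prob`, `simulate_random_pairs`, `fit_bradley_terry`), the toy strengths, and the small smoothing constant are all assumptions made for illustration; the fitting loop uses the standard minorization-maximization (MM) update for Bradley-Terry, which the abstract does not specify.

```python
import random


def bt_win_prob(s_i, s_j):
    """Bradley-Terry probability that item i is preferred over item j."""
    return s_i / (s_i + s_j)


def simulate_random_pairs(true_strengths, n_comparisons, seed=0):
    """Draw uniformly random pairs and simulate an annotator's choice.

    Returns a list of (winner, loser) index tuples.
    """
    rng = random.Random(seed)
    n = len(true_strengths)
    outcomes = []
    for _ in range(n_comparisons):
        i, j = rng.sample(range(n), 2)  # two distinct items, chosen at random
        if rng.random() < bt_win_prob(true_strengths[i], true_strengths[j]):
            outcomes.append((i, j))
        else:
            outcomes.append((j, i))
    return outcomes


def fit_bradley_terry(n_items, comparisons, iters=200, smoothing=1e-3):
    """Estimate item strengths from (winner, loser) pairs with MM updates.

    `smoothing` is a hypothetical pseudo-count that keeps items with zero
    wins at a small positive strength.
    """
    wins = [0] * n_items
    pair_counts = {}
    for winner, loser in comparisons:
        wins[winner] += 1
        key = (min(winner, loser), max(winner, loser))
        pair_counts[key] = pair_counts.get(key, 0) + 1

    strengths = [1.0] * n_items
    for _ in range(iters):
        denom = [0.0] * n_items
        for (i, j), count in pair_counts.items():
            share = count / (strengths[i] + strengths[j])
            denom[i] += share
            denom[j] += share
        updated = [
            (wins[i] + smoothing) / denom[i] if denom[i] > 0 else strengths[i]
            for i in range(n_items)
        ]
        # Strengths are only identified up to scale, so normalize them
        # to sum to n_items after every update.
        total = sum(updated)
        strengths = [s * n_items / total for s in updated]
    return strengths


if __name__ == "__main__":
    toy_strengths = [1.0, 2.0, 4.0, 8.0]  # made-up ground truth
    data = simulate_random_pairs(toy_strengths, n_comparisons=2000)
    print(fit_bradley_terry(len(toy_strengths), data))
```

Because pairs are drawn uniformly at random, many comparisons go to pairs whose ordering is already well determined, which is the kind of redundancy the abstract says adaptive pairing strategies aim to reduce.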
