A sprawling academic cheating network turbocharged by Google Ads that has generated nearly $25 million in revenue has curious ties to a Kremlin-connected oligarch whose Russian university builds drones for Russia’s war against Ukraine. The link between essay mills and Russian attack drones might seem improbable, but understanding it begins with a […]

(Image caption: The Nerdify homepage.)

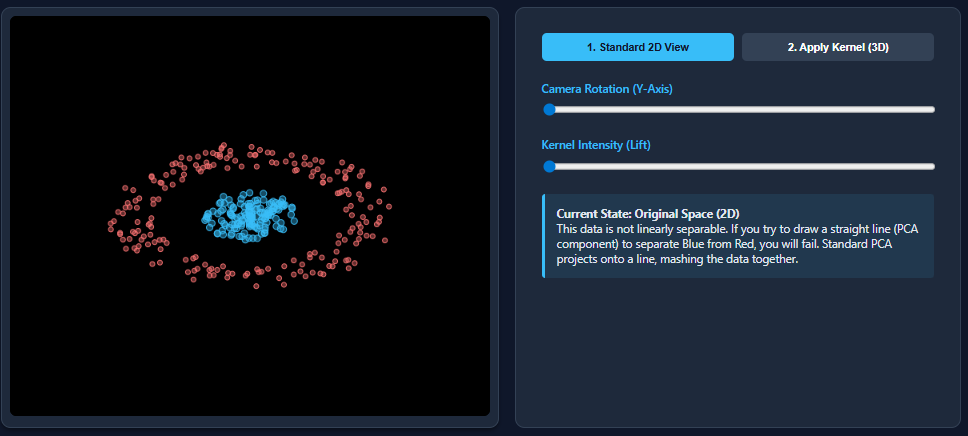

Kernel Principal Component Analysis (PCA): Explained with an Example (MarkTechPost)

Dimensionality reduction techniques like PCA work wonderfully when datasets are linearly separable, but they break down the moment nonlinear patterns appear. That’s exactly what happens with datasets such as two moons: PCA flattens the structure and mixes the classes together. Kernel PCA fixes this limitation by mapping the data into a higher-dimensional feature space where nonlinear […]

The post Kernel Principal Component Analysis (PCA): Explained with an Example appeared first on MarkTechPost.
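To make the summary above concrete, here is a minimal, standard-library-only sketch of the kernel PCA idea on a noise-free two-moons layout. It is not the linked article's code. The function names, the RBF kernel choice, `gamma=15.0`, and the use of power iteration in place of a full eigendecomposition are all assumptions made for illustration. The sketch builds the kernel matrix, double-centers it (which corresponds to centering the data in the implicit feature space), and extracts only the leading component.

```python
import math

def two_moons(n_per_class=30):
    """Noise-free two-moons points: an upper arc and a shifted lower arc."""
    points, labels = [], []
    for i in range(n_per_class):
        t = math.pi * i / (n_per_class - 1)
        points.append((math.cos(t), math.sin(t)))
        labels.append(0)
        points.append((1.0 - math.cos(t), 0.5 - math.sin(t)))
        labels.append(1)
    return points, labels

def rbf_kernel_matrix(points, gamma):
    """K[i][j] = exp(-gamma * ||x_i - x_j||^2)."""
    return [[math.exp(-gamma * ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2))
             for b in points] for a in points]

def center_kernel(K):
    """Double-center K so the implicit feature vectors have zero mean."""
    n = len(K)
    row_means = [sum(row) / n for row in K]  # K is symmetric: row means = column means
    total_mean = sum(row_means) / n
    return [[K[i][j] - row_means[i] - row_means[j] + total_mean
             for j in range(n)] for i in range(n)]

def top_component(Kc, iterations=200):
    """Power iteration for the leading eigenpair of the centered kernel.

    Returns (eigenvalue, projections), where projections are the training
    points' coordinates along the first kernel principal component.
    A fixed iteration count is an assumption, not a convergence guarantee.
    """
    n = len(Kc)
    v = [math.sin(i + 1.0) for i in range(n)]  # arbitrary deterministic start
    eigenvalue = 0.0
    for _ in range(iterations):
        w = [sum(Kc[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
        eigenvalue = norm  # ||Kc v|| for unit v approaches the top eigenvalue
    return eigenvalue, [math.sqrt(eigenvalue) * x for x in v]

points, labels = two_moons()
Kc = center_kernel(rbf_kernel_matrix(points, gamma=15.0))
eigenvalue, projections = top_component(Kc)
```

Whether the two moons separate along this component depends on `gamma` and on how many components are kept, so it is worth inspecting `projections` against `labels` rather than assuming a clean split.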

How to Design a Fully Local Multi-Agent Orchestration System Using TinyLlama for Intelligent Task Decomposition and Autonomous Collaboration (MarkTechPost)

In this tutorial, we explore how to orchestrate a team of specialized AI agents locally using an efficient manager-agent architecture powered by TinyLlama. We walk through building structured task decomposition, inter-agent collaboration, and autonomous reasoning loops without relying on any external APIs. By running everything directly through the transformers library, we create […]

The post How to Design a Fully Local Multi-Agent Orchestration System Using TinyLlama for Intelligent Task Decomposition and Autonomous Collaboration appeared first on MarkTechPost.

The Machine Learning “Advent Calendar” Day 6: Decision Tree Regressor (Towards Data Science)

During the first days of this Machine Learning Advent Calendar, we explored models based on distances. Today, we switch to a completely different way of learning: Decision Trees.

With a simple one-feature dataset, we can see how a tree chooses its first split. The idea is always the same: if humans can guess the split visually, then we can rebuild the logic step by step in Excel.

By listing all possible split values and computing the MSE for each one, we identify the split that reduces the error the most. This gives us a clear intuition of how a Decision Tree grows, how it makes predictions, and why the first split is such a crucial step.

The post The Machine Learning “Advent Calendar” Day 6: Decision Tree Regressor appeared first on Towards Data Science.
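The split search described above (list every candidate split, compute the MSE for each, keep the best) can be sketched in a few lines of Python. This is an illustrative sketch, not the article's Excel workbook. The function names and the toy data are hypothetical, and using midpoints between consecutive distinct feature values as candidates is a common convention assumed here.

```python
from statistics import mean

def mse(values):
    """Mean squared error when every value is predicted by the group mean."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)

def best_first_split(x, y):
    """Evaluate every candidate threshold on a single feature.

    Candidates are midpoints between consecutive distinct x values
    (an assumed convention). Returns (threshold, weighted_mse) for the
    split with the lowest size-weighted MSE of the two sides.
    """
    pairs = sorted(zip(x, y))
    xs = [p[0] for p in pairs]
    ys = [p[1] for p in pairs]
    n = len(pairs)
    best = None
    for i in range(1, n):
        if xs[i] == xs[i - 1]:
            continue  # no threshold fits between identical feature values
        threshold = (xs[i - 1] + xs[i]) / 2
        left, right = ys[:i], ys[i:]
        weighted = (len(left) * mse(left) + len(right) * mse(right)) / n
        if best is None or weighted < best[1]:
            best = (threshold, weighted)
    return best

def predict(value, threshold, left_mean, right_mean):
    """A depth-1 tree predicts the mean target of the side the value falls on."""
    return left_mean if value <= threshold else right_mean

# Hypothetical one-feature dataset with an obvious jump between x=4 and x=10.
x = [1, 2, 3, 4, 10, 11, 12, 13]
y = [5.0, 6.0, 5.5, 6.5, 20.0, 21.0, 19.5, 20.5]

threshold, error = best_first_split(x, y)
left_mean = mean(t for f, t in zip(x, y) if f <= threshold)
right_mean = mean(t for f, t in zip(x, y) if f > threshold)
```

On this toy data the search picks the threshold a person would guess by eye, midway between 4 and 10, and each side then predicts its own mean.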

How We Are Testing Our Agents in Dev (Towards Data Science)

Testing that your AI agent is performing as expected is not easy. Here are a few strategies we learned the hard way.

The post How We Are Testing Our Agents in Dev appeared first on Towards Data Science.

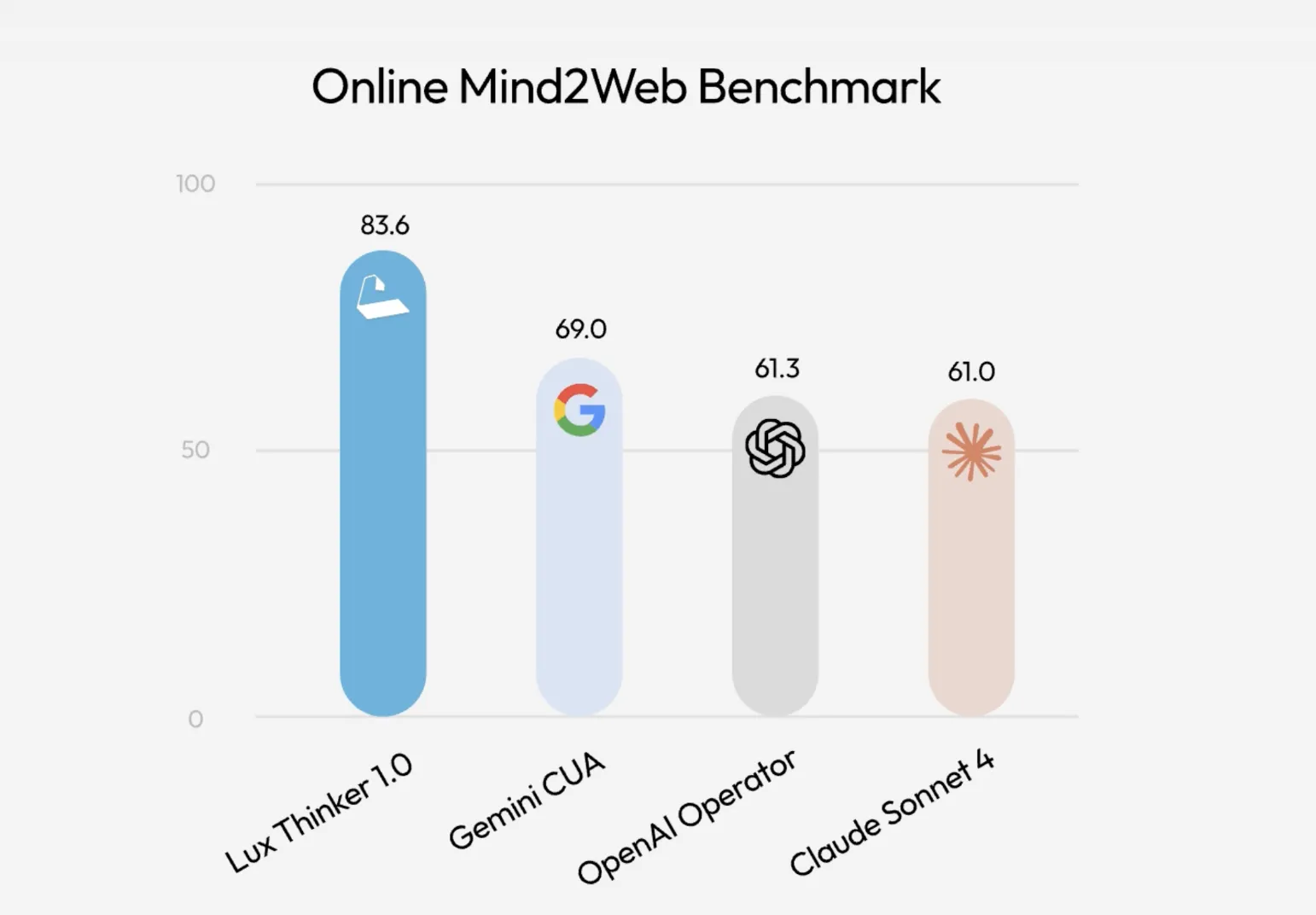

OpenAGI Foundation Launches Lux: A Foundation Computer Use Model that Tops Online Mind2Web with OSGym At Scale (MarkTechPost)

How do you turn slow, manual click work across browsers and desktops into a reliable, automated system that can actually use a computer for you at scale? Lux is the latest example of computer use agents moving from research demo to infrastructure. The OpenAGI Foundation team has released Lux, a foundation model that operates real desktops […]

The post OpenAGI Foundation Launches Lux: A Foundation Computer Use Model that Tops Online Mind2Web with OSGym At Scale appeared first on MarkTechPost.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) on Friday formally added a critical security flaw impacting React Server Components (RSC) to its Known Exploited Vulnerabilities (KEV) catalog following reports of active exploitation in the wild. The vulnerability, CVE-2025-55182 (CVSS score: 10.0), relates to a case of remote code execution that could be triggered by […]

Software teams at Google and other Rust adopters see safer code when using the memory-safe language, and also fewer rollbacks and less code review.

As quantum computing quietly moves beyond lab experiments and into production workflows, here’s what enterprise security leaders should be focused on, according to Lineswala.

Barts Health NHS Trust has announced that Clop ransomware actors have stolen files from a database by exploiting a vulnerability in its Oracle E-business Suite software. […]