Contextual Quantum Neural Networks for Stock Price Prediction

cs.AI updates on arXiv.org, arXiv:2503.01884v2, Announce Type: replace-cross

Abstract: In this paper, we apply quantum machine learning (QML) to predict the stock prices of multiple assets using a contextual quantum neural network. Our approach captures recent trends to predict future stock price distributions, moving beyond traditional models that focus on entire historical data, enhancing adaptability and precision. Utilizing the principles of quantum superposition, we introduce a new training technique called the quantum batch gradient update (QBGU), which accelerates the standard stochastic gradient descent (SGD) in quantum applications and improves convergence. Consequently, we propose a quantum multi-task learning (QMTL) architecture, specifically, the share-and-specify ansatz, that integrates task-specific operators controlled by quantum labels, enabling the simultaneous and efficient training of multiple assets on the same quantum circuit as well as enabling efficient portfolio representation with logarithmic overhead in the number of qubits. This architecture represents the first of its kind in quantum finance, offering superior predictive power and computational efficiency for multi-asset stock price forecasting. Through extensive experimentation on S&P 500 data for Apple, Google, Microsoft, and Amazon stocks, we demonstrate that our approach not only outperforms quantum single-task learning (QSTL) models but also effectively captures inter-asset correlations, leading to enhanced prediction accuracy. Our findings highlight the transformative potential of QML in financial applications, paving the way for more advanced, resource-efficient quantum algorithms in stock price prediction and other complex financial modeling tasks.

A Pragmatist Robot: Learning to Plan Tasks by Experiencing the Real World

cs.AI updates on arXiv.org, arXiv:2507.16713v2, Announce Type: replace-cross

Abstract: Large language models (LLMs) have emerged as the dominant paradigm for robotic task planning using natural language instructions. However, trained on general internet data, LLMs are not inherently aligned with the embodiment, skill sets, and limitations of real-world robotic systems. Inspired by the emerging paradigm of verbal reinforcement learning, in which LLM agents improve through self-reflection and few-shot learning without parameter updates, we introduce PragmaBot, a framework that enables robots to learn task planning through real-world experience. PragmaBot employs a vision-language model (VLM) as the robot’s “brain” and “eye”, allowing it to visually evaluate action outcomes and self-reflect on failures. These reflections are stored in a short-term memory (STM), enabling the robot to quickly adapt its behavior during ongoing tasks. Upon task completion, the robot summarizes the lessons learned into its long-term memory (LTM). When facing new tasks, it can leverage retrieval-augmented generation (RAG) to plan more grounded action sequences by drawing on relevant past experiences and knowledge. Experiments on four challenging robotic tasks show that STM-based self-reflection increases task success rates from 35% to 84%, with emergent intelligent object interactions. In 12 real-world scenarios (including eight previously unseen tasks), the robot effectively learns from the LTM and improves single-trial success rates from 22% to 80%, with RAG outperforming naive prompting. These results highlight the effectiveness and generalizability of PragmaBot. Project webpage: https://pragmabot.github.io/

A Geometric Taxonomy of Hallucinations in LLMs

cs.AI updates on arXiv.org, arXiv:2602.13224v1, Announce Type: new

Abstract: The term “hallucination” in large language models conflates distinct phenomena with different geometric signatures in embedding space. We propose a taxonomy identifying three types: unfaithfulness (failure to engage with provided context), confabulation (invention of semantically foreign content), and factual error (incorrect claims within correct conceptual frames). We observe a striking asymmetry. On standard benchmarks where hallucinations are LLM-generated, detection is domain-local: AUROC 0.76-0.99 within domains, but 0.50 (chance level) across domains. Discriminative directions are approximately orthogonal between domains (mean cosine similarity -0.07). On human-crafted confabulations – invented institutions, redefined terminology, fabricated mechanisms – a single global direction achieves 0.96 AUROC with 3.8% cross-domain degradation. We interpret this divergence as follows: benchmarks capture generation artifacts (stylistic signatures of prompted fabrication), while human-crafted confabulations capture genuine topical drift. The geometric structure differs because the underlying phenomena differ. Type III errors show 0.478 AUROC – indistinguishable from chance. This reflects a theoretical constraint: embeddings encode distributional co-occurrence, not correspondence to external reality. Statements with identical contextual patterns occupy similar embedding regions regardless of truth value. The contribution is a geometric taxonomy clarifying the scope of embedding-based detection: Types I and II are detectable; Type III requires external verification mechanisms.
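The domain-local behaviour described in this abstract can be illustrated with a minimal sketch. It is not the authors' method: the mean-difference direction, the toy 2-D "embeddings", and all function and variable names below are assumptions chosen for illustration. The data are constructed so that each domain separates along a different axis, which gives orthogonal discriminative directions and chance-level transfer.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(rows: Sequence[Vector]) -> List[float]:
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def mean_difference_direction(hallucinated: Sequence[Vector],
                              faithful: Sequence[Vector]) -> List[float]:
    """Unit vector from the faithful class mean to the hallucinated class mean.

    Assumption: a simple stand-in for whatever discriminative direction
    the paper actually fits.
    """
    diff = [h - f for h, f in zip(mean_vector(hallucinated), mean_vector(faithful))]
    norm = math.sqrt(dot(diff, diff))
    return [d / norm for d in diff]


def auroc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """AUROC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Toy data (hypothetical): domain A separates along axis 0, domain B along axis 1.
domain_a_hallucinated = [[1.0, 0.0], [1.2, 0.1]]
domain_a_faithful = [[0.0, 0.0], [0.2, 0.1]]
domain_b_hallucinated = [[0.0, 1.0], [0.1, 1.2]]
domain_b_faithful = [[0.0, 0.0], [0.1, 0.2]]

dir_a = mean_difference_direction(domain_a_hallucinated, domain_a_faithful)
dir_b = mean_difference_direction(domain_b_hallucinated, domain_b_faithful)

# Score each example by projecting it onto domain A's direction.
in_domain_auroc = auroc([dot(x, dir_a) for x in domain_a_hallucinated],
                        [dot(x, dir_a) for x in domain_a_faithful])
cross_domain_auroc = auroc([dot(x, dir_a) for x in domain_b_hallucinated],
                           [dot(x, dir_a) for x in domain_b_faithful])
direction_cosine = cosine(dir_a, dir_b)

print(in_domain_auroc, cross_domain_auroc, direction_cosine)
```

On this constructed data the direction fitted on domain A separates domain A perfectly but ranks domain B no better than chance, and the two directions are orthogonal. Real embeddings are high-dimensional and noisy, so the numbers reported in the abstract should not be read off this toy.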

PlotChain: Deterministic Checkpointed Evaluation of Multimodal LLMs on Engineering Plot Reading

cs.AI updates on arXiv.org, arXiv:2602.13232v1, Announce Type: new

Abstract: We present PlotChain, a deterministic, generator-based benchmark for evaluating multimodal large language models (MLLMs) on engineering plot reading: recovering quantitative values from classic plots (e.g., Bode/FFT, step response, stress-strain, pump curves) rather than OCR-only extraction or free-form captioning. PlotChain contains 15 plot families with 450 rendered plots (30 per family), where every item is produced from known parameters and paired with exact ground truth computed directly from the generating process. A central contribution is checkpoint-based diagnostic evaluation: in addition to final targets, each item includes intermediate ‘cp_’ fields that isolate sub-skills (e.g., reading cutoff frequency or peak magnitude) and enable failure localization within a plot family. We evaluate four state-of-the-art MLLMs under a standardized, deterministic protocol (temperature = 0 and a strict JSON-only numeric output schema) and score predictions using per-field tolerances designed to reflect human plot-reading precision. Under the ‘plotread’ tolerance policy, the top models achieve 80.42% (Gemini 2.5 Pro), 79.84% (GPT-4.1), and 78.21% (Claude Sonnet 4.5) overall field-level pass rates, while GPT-4o trails at 61.59%. Despite strong performance on many families, frequency-domain tasks remain brittle: bandpass response stays low (<= 23%), and FFT spectrum remains challenging. We release the generator, dataset, raw model outputs, scoring code, and manifests with checksums to support fully reproducible runs and retrospective rescoring under alternative tolerance policies.
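A rough sketch of what per-field tolerance scoring over a JSON-only numeric output could look like follows. It is not PlotChain's released scoring code: the field names (apart from the ‘cp_’ prefix mentioned in the abstract), the tolerance values, and the rule combining absolute and relative tolerance are all assumptions made for illustration.

```python
import json
from typing import Dict, Optional, Tuple


def parse_numeric_json(raw: str) -> Optional[Dict[str, float]]:
    """Parse a model reply; return None unless it is a JSON object of numbers only."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    parsed: Dict[str, float] = {}
    for key, value in data.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return None
        parsed[key] = float(value)
    return parsed


def score_item(raw_output: str,
               truth: Dict[str, float],
               tolerances: Dict[str, Tuple[float, float]]) -> Dict[str, bool]:
    """Return pass/fail per ground-truth field.

    tolerances maps field -> (abs_tol, rel_tol); a field passes when
    |pred - truth| <= max(abs_tol, rel_tol * |truth|)  (assumed rule).
    """
    pred = parse_numeric_json(raw_output)
    results: Dict[str, bool] = {}
    for field, target in truth.items():
        if pred is None or field not in pred:
            results[field] = False  # schema violation or missing field
            continue
        abs_tol, rel_tol = tolerances[field]
        allowed = max(abs_tol, rel_tol * abs(target))
        results[field] = abs(pred[field] - target) <= allowed
    return results


def pass_rate(results: Dict[str, bool]) -> float:
    """Field-level pass rate: fraction of fields within tolerance."""
    return sum(results.values()) / len(results)


# Hypothetical item: two checkpoint fields plus one final target.
truth = {"cp_cutoff_hz": 1000.0, "cp_peak_db": -3.0, "bandwidth_hz": 250.0}
tolerances = {
    "cp_cutoff_hz": (0.0, 0.05),  # within 5% of the true value
    "cp_peak_db": (0.5, 0.0),     # within 0.5 dB
    "bandwidth_hz": (0.0, 0.05),
}
model_output = '{"cp_cutoff_hz": 1030, "cp_peak_db": -3.2, "bandwidth_hz": 300}'

results = score_item(model_output, truth, tolerances)
rate = pass_rate(results)
print(results, rate)
```

In this example both checkpoint fields pass while the final target fails. That is the kind of failure localization the checkpoint fields are meant to support: the model read the intermediate values correctly but went wrong on the last step. Keeping raw outputs and tolerances separate also makes it possible to rescore later under a different tolerance policy.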

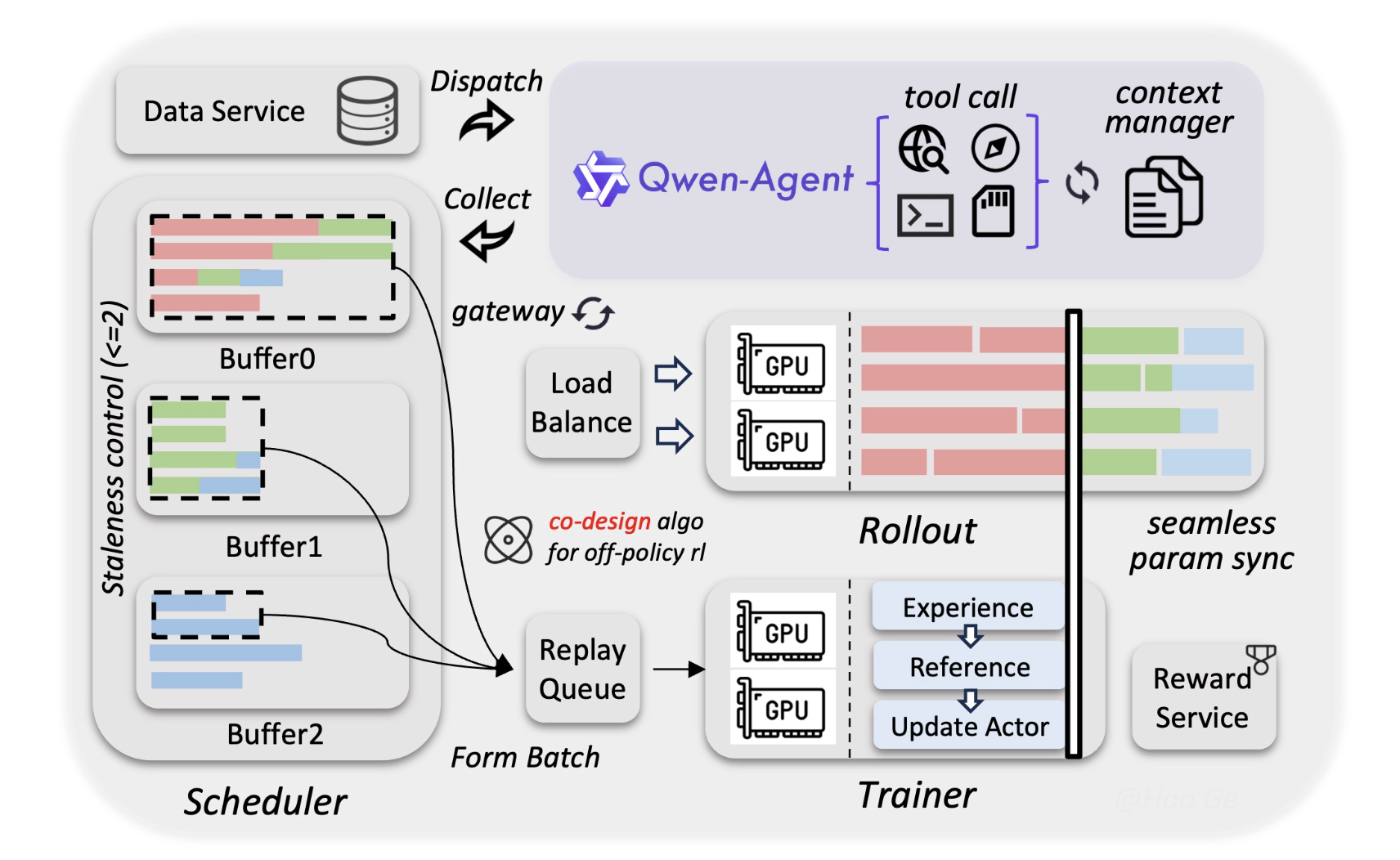

Alibaba Qwen Team Releases Qwen3.5-397B MoE Model with 17B Active Parameters and 1M Token Context for AI agents

MarkTechPost

Alibaba Cloud just updated the open-source landscape. Today, the Qwen team released Qwen3.5, the newest generation of their large language model (LLM) family. The most powerful version is Qwen3.5-397B-A17B. This model is a sparse Mixture-of-Experts (MoE) system. It combines massive reasoning power with high efficiency. Qwen3.5 is a native vision-language model. It is designed specifically

The post Alibaba Qwen Team Releases Qwen3.5-397B MoE Model with 17B Active Parameters and 1M Token Context for AI agents appeared first on MarkTechPost.

Learn Python, SQL and PowerBI to Become a Certified Data Analyst for FREE This Week

KDnuggets

From February 16–22, DataCamp’s entire curriculum is 100% free.

Cybersecurity researchers disclosed that they have detected an information-stealer infection that successfully exfiltrated a victim’s OpenClaw (formerly Clawdbot and Moltbot) configuration environment. “This finding marks a significant milestone in the evolution of infostealer behavior: the transition from stealing browser credentials to harvesting the ‘souls’ and identities of personal AI […]

With the massive adoption of the OpenClaw agentic AI assistant, information-stealing malware has been spotted stealing files associated with the framework that contain API keys, authentication tokens, and other secrets. […]

Dutch authorities arrested a 40-year-old man after he downloaded confidential documents that had been mistakenly shared by the police and refused to delete them unless he received “something in return.” […]

The Washington Hotel brand in Japan has announced that its servers were compromised in a ransomware attack, exposing various business data. […]