Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated October 8th, 2025

Hello everyone, please help us grow our community by sharing and/or supporting us on other platforms. This shows us that what we are doing is valued. It also helps us plan and allocate resources to improve our work, since we then know others are interested and supportive.

Pressed For Time?

Review or download our 2-3 min Quick Slides or the 5-7 min Article Insights to gain knowledge with the time you have!

AI Governance Careers

Companies everywhere are hiring AI governance professionals. Fast.

The numbers tell part of the story. According to LinkedIn’s 2023 Work Change Report, AI governance roles grew by over 300% between 2022 and 2023. The EU AI Act entered into force on August 1, 2024. Corporate boards now face direct liability for AI failures. Investors want proof that companies can manage algorithmic risk.

This creates opportunity.

You don’t need to be a machine learning engineer to break into AI governance. If you understand compliance, know how to assess risk, or can translate technical concepts into business language, you already have transferable skills. The field needs people who think about fairness, accountability, and how systems affect real humans. It needs people who can read regulations and actually implement them.

I’m baffled that more of my colleagues haven’t jumped on this yet. I saw the same thing happen with cloud computing and DevOps. Early movers built entire careers. Governance has never been the sexy part of tech, but it pays well and the work matters. The field is wide open right now.

What is AI Governance, and Why Does It Matter?

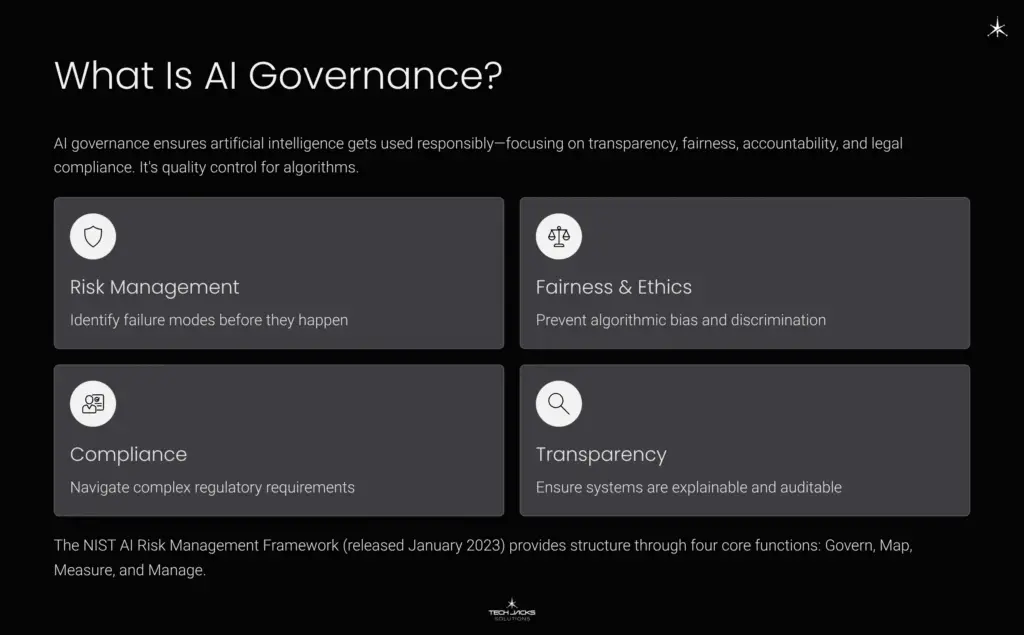

AI governance means making sure artificial intelligence gets used responsibly. You’re looking at transparency, fairness, accountability, and staying legal. Think of it as quality control for algorithms.

Every industry with regulatory oversight needs this: finance, healthcare, government, insurance. They’re not just worried about compliance fines (though the EU AI Act can impose penalties of up to €35 million or 7% of global annual turnover, whichever is higher, under Article 99). They’re worried about algorithmic bias in hiring, discriminatory lending models, diagnostic errors, and wrongful arrests from facial recognition.
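To make the "whichever is higher" rule concrete, here is a minimal Python sketch of the fine ceiling for the most serious tier (prohibited practices, Article 99(3)). It assumes an undertaking with a known prior-year worldwide turnover in euros. The function name is hypothetical, and the sketch deliberately ignores the separate rule for SMEs and start-ups (where the lower of the two amounts applies) and the factors regulators weigh when setting an actual fine. It computes a ceiling, not a predicted penalty.

```python
from decimal import Decimal

# Ceiling for the most serious violations (prohibited practices), per Article 99(3):
# EUR 35 million or 7% of total worldwide annual turnover for the preceding
# financial year, whichever is higher. SME/start-up rules (lower amount) are ignored here.
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_RATE = Decimal("0.07")


def max_fine_prohibited_practice(worldwide_turnover_eur: Decimal) -> Decimal:
    """Return the statutory ceiling, not a predicted fine (hypothetical helper)."""
    if worldwide_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based_cap = worldwide_turnover_eur * TURNOVER_RATE
    # The higher of the two amounts is the ceiling for a large undertaking.
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("2000000000")):
        cap = max_fine_prohibited_practice(turnover)
        print(f"Turnover EUR {turnover:,} -> ceiling EUR {cap:,.0f}")
```

Below €500 million in turnover, the fixed €35 million amount is the binding ceiling; above that, the 7% figure takes over.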

The NIST AI Risk Management Framework (released January 26, 2023) provides structure for how organizations should approach AI governance. It defines four core functions: Govern, Map, Measure, and Manage. Most companies are still figuring out how to implement these functions. That’s where you come in.

Key Roles in AI Governance Career Paths

The field breaks down into several distinct roles. Your background determines which path makes sense.

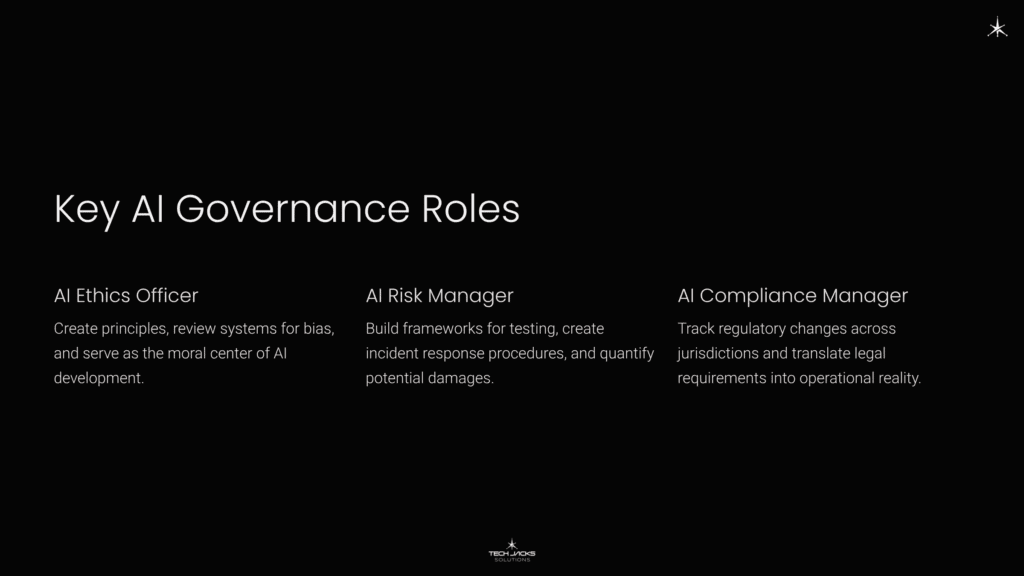

1. AI Ethics Officer

Someone needs to be the moral center of AI development. Ethics officers create principles, review systems for bias, and push back when something doesn’t smell right. This role combines philosophy, policy, and practical risk assessment.

2. AI Risk Manager

Every model carries risk. Risk managers identify what could go wrong before it does. They build frameworks for testing, create incident response procedures, and quantify potential damages. You’re thinking about failure modes, edge cases, and unintended consequences.
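One common way to start quantifying risk is a simple risk register scored as likelihood × impact. The sketch below assumes 1-to-5 ordinal scales and uses made-up example risks; it is a generic risk-matrix technique, not a method prescribed by the NIST AI RMF or any regulation. Ordinal scores rank risks against each other; they do not estimate actual monetary damages, which need separate loss modeling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), an assumed ordinal scale
    impact: int      # 1 (negligible) to 5 (severe), an assumed ordinal scale

    def __post_init__(self) -> None:
        # Reject values outside the assumed 1-5 scale.
        for name, value in (("likelihood", self.likelihood), ("impact", self.impact)):
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Highest score first; ties broken by impact so severe harms surface earlier."""
    return sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)


if __name__ == "__main__":
    # Made-up illustrative entries, not findings from a real assessment.
    register = [
        Risk("Model drifts after a data pipeline change", likelihood=4, impact=3),
        Risk("Hiring model disadvantages a protected group", likelihood=2, impact=5),
        Risk("Chatbot exposes personal data in a response", likelihood=3, impact=5),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.description}")
```

Here the chatbot data-exposure risk (score 15) ranks first, ahead of model drift (12) and the hiring-bias risk (10).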

3. AI Compliance Manager

The EU AI Act alone runs to 458 pages. Someone needs to read those pages, understand what they mean for your organization, and build processes that keep you compliant. Compliance managers track regulatory changes across jurisdictions and translate legal requirements into operational reality.

4. AI Policy Analyst

Policy analysts focus on the upstream work. They monitor legislative developments, comment on proposed regulations, and help craft internal policies. This role suits people who like connecting technology to governance.

5. AI Auditor or Assurance Specialist

Auditors verify that AI systems actually do what they’re supposed to do. You’re testing models, reviewing documentation, and providing independent assessment. ISACA launched the AAIA (Advanced in AI Audit) certification in May 2025 specifically for this role.

Other emerging roles include AI product managers with governance expertise and legal advisors specializing in algorithmic accountability.

Salary Expectations

AI governance pays competitively. According to the IAPP 2025 Salary and Jobs Report (surveying over 1,600 professionals from more than 60 countries between March and April 2025):

Professionals working in both privacy and AI governance earn a median of over $169,700 in total compensation.

Those focused solely on AI governance earn a median of $151,800.

Technology sector roles command higher salaries, with legal and compliance positions earning median compensation of $205,000 and technical AI governance roles reaching $221,000.

Entry-level positions in AI governance typically start between $75,000 and $95,000 annually, based on market analysis of current job postings.

Geography matters. San Francisco, New York, and Seattle command higher salaries than smaller markets. Remote work is changing this dynamic somewhat, but location still affects compensation.

Why AI Governance is Growing Rapidly

Three forces are driving growth in this field.

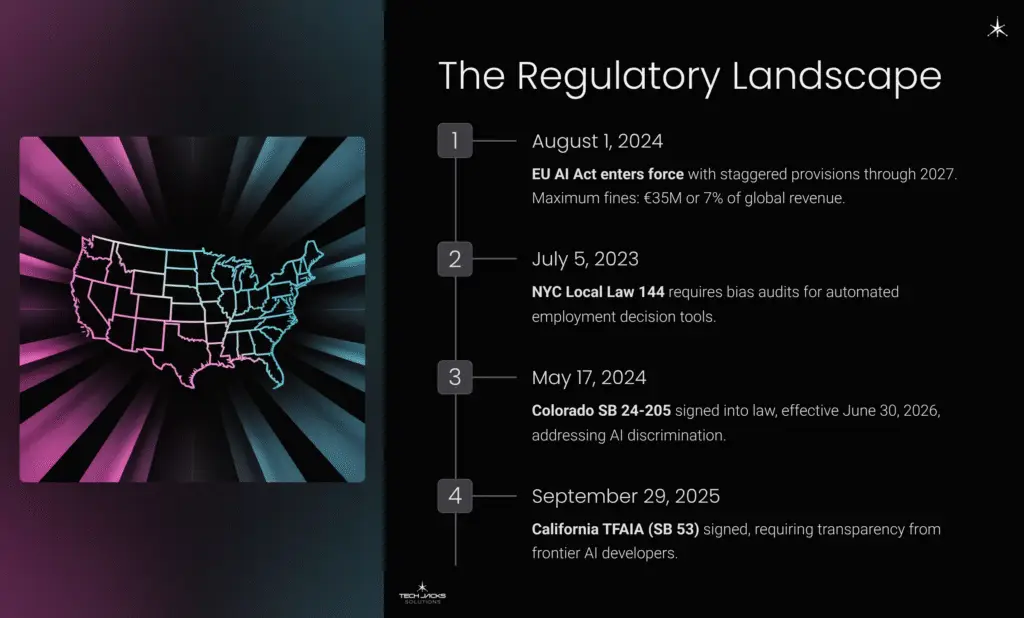

Regulatory pressure is real. The EU AI Act entered into force on August 1, 2024, with different provisions applying at staggered intervals through 2027. Maximum fines can reach €35 million or 7% of global annual turnover for the most serious violations. Colorado’s AI Act (SB 24-205) was signed into law on May 17, 2024, with an effective date of June 30, 2026. California signed the Transparency in Frontier Artificial Intelligence Act (SB 53) into law on September 29, 2025. New York City’s Local Law 144 (effective July 5, 2023) requires bias audits for automated employment decision tools.

Corporate risk management has become a board-level concern. CEOs face questions about AI strategy in earnings calls. Directors want to know how the company is managing algorithmic risk. Nobody wants to be the next headline about a discriminatory hiring algorithm or a chatbot that went rogue.

Investor expectations have shifted. ESG (Environmental, Social, Governance) criteria now include AI governance for many institutional investors. Private equity firms conduct AI due diligence during acquisitions. Being able to demonstrate responsible AI practices affects company valuation.

The California AI Regulatory Landscape

California’s approach to AI regulation evolved significantly in 2024 and 2025. In September 2024, Governor Gavin Newsom vetoed SB 1047 (the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act), which would have imposed safety requirements on developers of large AI models costing over $100 million to train. Newsom argued that the bill’s threshold based solely on model size was not adequately supported by data.

However, California didn’t abandon AI regulation. In September 2025, Governor Newsom signed SB 53, the Transparency in Frontier Artificial Intelligence Act (TFAIA), into law. This represents California’s most significant AI developer regulation to date. The law requires developers of frontier AI models to provide transparency around their systems without the controversial “kill switch” and criminal penalty provisions that doomed SB 1047.

California also passed AB 2013 and SB 942 in 2024, both requiring enhanced transparency around generative AI training data and content, effective January 1, 2026. These laws apply broadly to any developers making generative AI systems available to Californians.

This patchwork of state regulations creates demand for compliance professionals who understand multiple jurisdictions. Companies operating nationally need people who can navigate California’s transparency requirements, Colorado’s anti-discrimination provisions, and New York’s bias audit mandates simultaneously.

Future Outlook for AI Governance

Based on current regulatory trajectories and industry hiring patterns, I expect the following developments over the next five years (this is my analysis, not a guaranteed prediction):

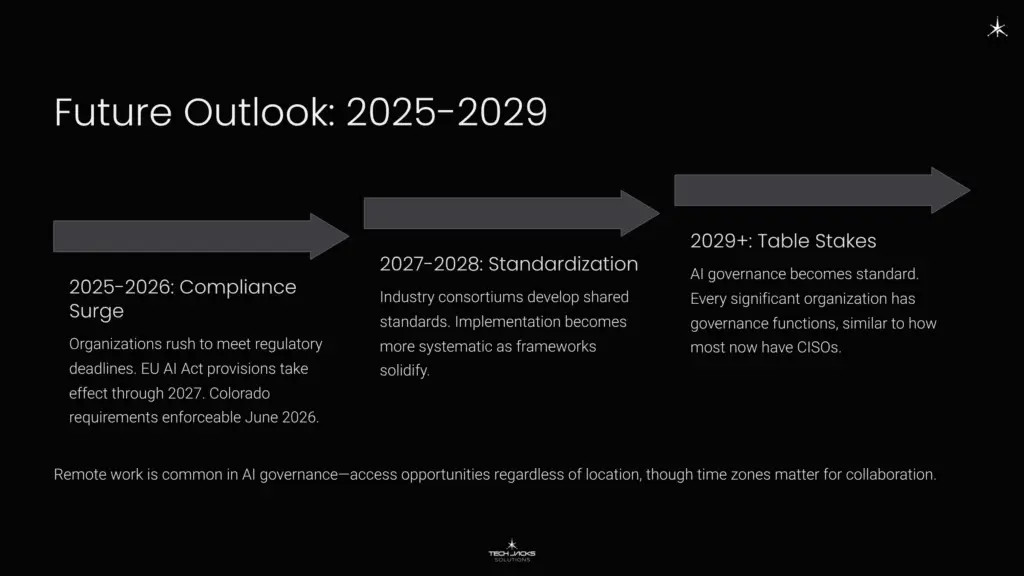

2025-2026: Compliance-focused hiring will surge as organizations rush to meet regulatory deadlines. The EU AI Act’s provisions take effect at different points through 2027. Colorado’s requirements become enforceable June 30, 2026. Companies will need compliance managers urgently.

2027-2028: Standardized governance frameworks will likely emerge. Right now, every company is building its own approach. Industry consortiums are working on shared standards. Once those solidify, implementation becomes more systematic.

2029 and beyond: AI governance becomes table stakes. Every significant organization will have governance functions, similar to how most large companies now have Chief Information Security Officers.

Remote work is also common in AI governance, since most of the work happens digitally. You can pursue opportunities regardless of location, though time zones still matter for real-time collaboration.

The takeaway? An AI governance career provides an opportunity to be part of a transformative movement. It’s a chance to shape how we live, work, and interact with technology.

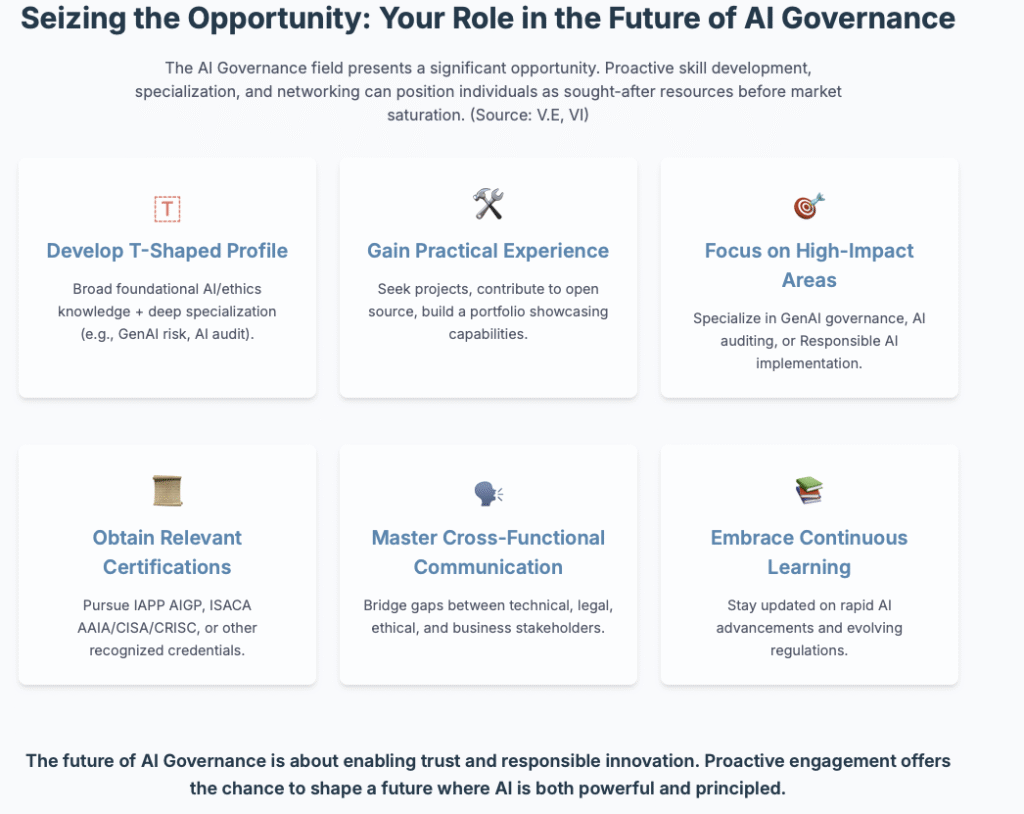

How to Transition Into an AI Governance Career

You probably already have relevant skills. The question is how to package them for AI governance roles.

From AI and ML Development

Your technical foundation gives you credibility. You understand how models work, where they break, and what “accuracy” actually means. To pivot:

- Study regulatory frameworks (start with the NIST AI RMF and EU AI Act)

- Develop stakeholder management skills (you’ll need to explain technical risks to non-technical audiences)

- Take courses in AI ethics and fairness (Coursera and edX both offer relevant programs)

This pathway can take you from Technical AI Safety Specialist to Chief AI Officer.

From Compliance or Privacy Roles

Your regulatory experience transfers directly. You know how to read dense legal documents, build controls, and manage audits. To add AI governance:

- Learn AI basics through non-technical courses (Andrew Ng’s “AI for Everyone” is solid)

- Study algorithmic bias and fairness principles

- Understand AI-specific risk management approaches

You might move from Compliance Analyst to AI Governance Director.

From Legal, Business Analysis, or Project Management

Domain expertise matters. Lawyers who understand AI can advise on procurement contracts and liability. Business analysts who know AI can optimize implementation. Project managers who grasp governance can lead cross-functional AI initiatives.

Add knowledge in:

- AI procurement and vendor management (for legal)

- AI workflow analysis (for business analysts)

- Governance oversight methodologies (for project managers)

The goal is T-shaped expertise. Broad knowledge across AI governance, deep expertise in your specialty.

Building the Skills You Need for AI Governance

Three skill sets matter.

1. Technical Foundation

You don’t need to code neural networks. You do need to understand:

- How AI and ML models work at a conceptual level

- Data privacy principles (GDPR, CCPA) as they apply to AI

- Common bias types and fairness metrics

- Model validation approaches
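As an example of what a fairness metric looks like in practice, here is a minimal Python sketch of selection rates and impact ratios, the metric reported in NYC Local Law 144 bias audits (each group's selection rate divided by the selection rate of the most-selected group). The 0.8 flag threshold is the EEOC "four-fifths" rule of thumb, used here only as an illustrative screening heuristic; Local Law 144 itself requires publishing the ratios rather than setting a pass/fail line. The group names and counts are made-up illustrative data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupOutcome:
    group: str
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        if self.applicants == 0:
            raise ValueError(f"group {self.group!r} has no applicants")
        return self.selected / self.applicants


def impact_ratios(outcomes: list[GroupOutcome]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    best_rate = max(o.selection_rate for o in outcomes)
    if best_rate == 0:
        raise ValueError("no group had any selections")
    return {o.group: o.selection_rate / best_rate for o in outcomes}


def flag_adverse_impact(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups below the four-fifths rule of thumb (a screening heuristic, not a legal test)."""
    return sorted(g for g, r in ratios.items() if r < threshold)


if __name__ == "__main__":
    # Made-up illustrative counts, not real audit data.
    data = [
        GroupOutcome("Group A", applicants=200, selected=60),
        GroupOutcome("Group B", applicants=150, selected=30),
        GroupOutcome("Group C", applicants=100, selected=28),
    ]
    ratios = impact_ratios(data)
    for group, ratio in ratios.items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Flagged:", flag_adverse_impact(ratios))
```

In this made-up example, Group B's impact ratio is about 0.67, so it is flagged for closer review; Group C (about 0.93) is not.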

2. Regulatory and Policy Expertise

Study emerging regulations systematically. The EU AI Act is the most comprehensive framework right now, but jurisdictions worldwide are developing their own approaches. Learn:

- Risk classification systems

- Auditing methodologies

- Documentation requirements

- Incident reporting obligations
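To illustrate what a risk classification system looks like day to day, here is a minimal Python sketch that triages an AI-use inventory into the EU AI Act's broad tiers: prohibited practices, high-risk, transparency obligations, and minimal risk. The use-case labels and the lookup table are hypothetical simplifications. Real classification depends on Article 5, Article 6 and Annex III, Article 50, and legal review, so the sketch routes unknown cases to review instead of defaulting them to minimal risk.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited practice (Article 5)"
    HIGH = "high-risk (Article 6 / Annex III)"
    TRANSPARENCY = "transparency obligations (Article 50)"
    MINIMAL = "minimal risk"
    NEEDS_REVIEW = "unclassified: route to legal review"


# Hypothetical, simplified mapping from internal use-case labels to tiers.
# A real inventory would record the reasoning and legal sign-off for each entry.
TIER_BY_USE_CASE = {
    "social_scoring": RiskTier.PROHIBITED,
    "cv_screening": RiskTier.HIGH,  # recruitment use cases are listed in Annex III
    "credit_scoring": RiskTier.HIGH,  # creditworthiness of individuals is listed in Annex III
    "customer_chatbot": RiskTier.TRANSPARENCY,  # users must be told they are interacting with AI
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    # Unknown use cases are escalated rather than assumed to be low risk.
    return TIER_BY_USE_CASE.get(use_case, RiskTier.NEEDS_REVIEW)


if __name__ == "__main__":
    inventory = ["cv_screening", "customer_chatbot", "demand_forecasting"]
    for system in inventory:
        print(f"{system}: {classify(system).value}")
```

Defaulting to review is the key design choice: a governance inventory fails quietly if new systems slip into the lowest tier just because nobody classified them.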

3. Soft Skills for Leadership

Technical knowledge alone won’t cut it. You need to:

- Explain complex AI concepts to non-technical stakeholders

- Collaborate across legal, technical, and business teams

- Make ethical decisions when the answer isn’t obvious

- Negotiate between competing priorities

Recommended Learning Resources

Certifications matter in this field. They signal commitment and provide structured learning.

Industry events offer networking and current developments. The AI Governance Global Summit and IAPP conferences are worth attending.

Hands-on projects build practical skills. Volunteer for AI governance initiatives within your organization or contribute to open-source AI auditing tools.

Essential Certifications

Certifications provide structure and credibility. They’re not strictly required, but they help, especially when you’re transitioning from another field.

Core AI Governance Certifications

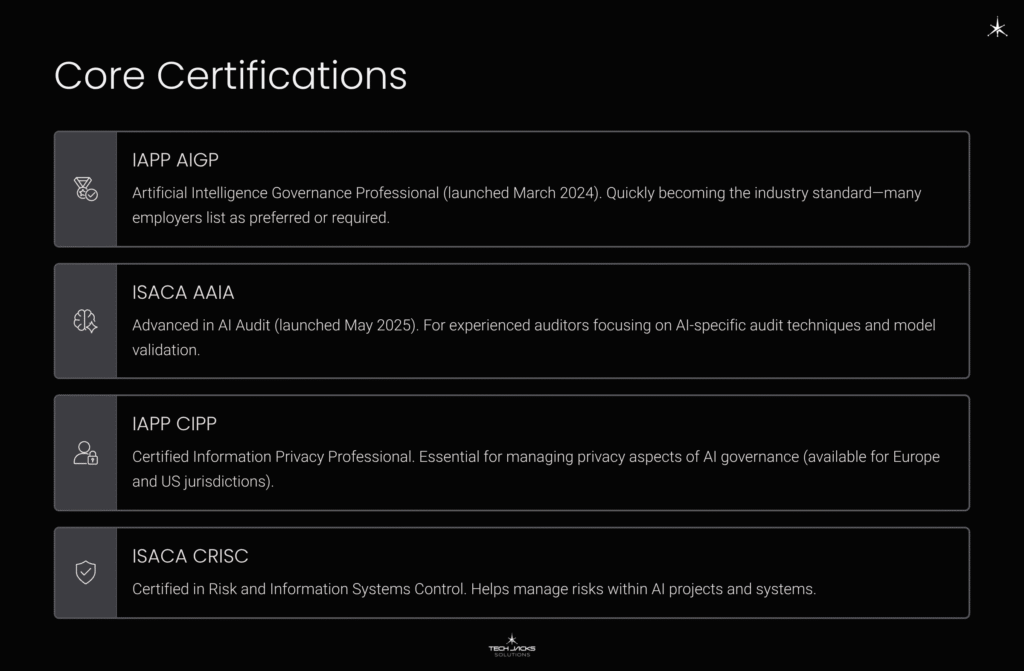

IAPP AIGP (Artificial Intelligence Governance Professional): Launched in March 2024, this certification is quickly becoming the industry standard. It covers AI fundamentals, governance frameworks, risk management, and compliance. Many employers now list it as preferred or required.

ISACA AAIA (Advanced in AI Audit): Launched in May 2025 for experienced auditors. Focuses on AI-specific audit techniques, model validation, and assurance practices.

ISACA CISA (Certified Information Systems Auditor): Supports understanding of AI system validation and audit contexts. Not AI-specific, but relevant.

ISACA CRISC (Certified in Risk and Information Systems Control): Helps with managing risks within AI projects.

ISACA CISM (Certified Information Security Manager): Covers security management aspects of AI systems.

Privacy and Compliance Certifications

IAPP CIPP (Certified Information Privacy Professional): Essential for managing privacy aspects of AI governance. Available in different jurisdictions (CIPP/E for Europe, CIPP/US for United States).

CompTIA Data+: Entry-level foundation in data analysis.

CDMP (Certified Data Management Professional): For data governance depth.

DGSP (Data Governance and Stewardship Professional): Focuses on responsible data management in AI contexts.

Project and Risk Management

PMP (Project Management Professional): Useful for leading AI governance initiatives.

CPA (Certified Public Accountant): Surprisingly relevant for auditing and understanding AI’s financial impacts.

Career paths in AI governance aren’t linear, but you can see common patterns.

Entry to Leadership Path

- Entry Level: AI Governance Analyst, AI Compliance Coordinator

- Intermediate: AI Ethics Specialist, AI Risk Manager, AI Compliance Manager

- Advanced: Director of AI Governance, Chief AI Governance Officer (CAIGO)

- SME: Subject Matter Expert in specialized areas

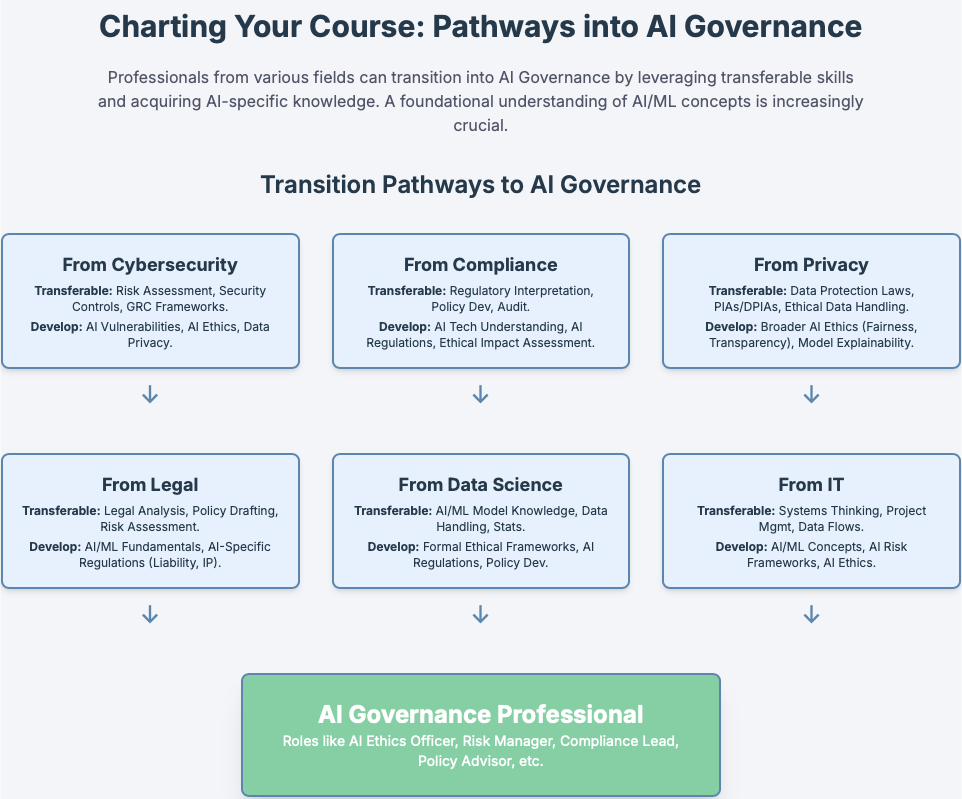

Transition Pathways by Background

Your current role determines your entry point.

From Cybersecurity:

- Current skills: Risk assessment, security controls, threat modeling

- Skills to develop: AI/ML basics, AI-specific vulnerabilities, ethics frameworks

- Target roles: AI Risk Manager → AI Security Governance Specialist → Director of AI Security

From Compliance/Legal:

- Current skills: Regulatory interpretation, policy development, controls implementation

- Skills to develop: AI technology fundamentals, emerging AI regulations

- Target roles: AI Compliance Officer → AI Governance Policy Lead → Chief AI Governance Officer

From Privacy:

- Current skills: Privacy laws, data protection, ethical data handling

- Skills to develop: AI ethics principles, model fairness concepts

- Target roles: AI Ethics Officer → AI Governance Manager → Director of AI Ethics

From Data Science/IT:

- Current skills: AI/ML technical knowledge, system administration, data analysis

- Skills to develop: Ethical frameworks, regulatory compliance, stakeholder communication

- Target roles: AI Ethics Specialist (Technical) → AI Model Validator → Head of Model Governance

These pathways require more depth than outlined here. Future articles will cover each transition in detail.

Strategic Development Recommendations

Break your development into phases.

Immediate Actions (0-6 months)

- Build foundational AI knowledge through courses like “AI for Everyone” or “Generative AI for Beginners”

- Pursue the IAPP AIGP certification

- Join professional communities (IAPP, ISACA, IEEE)

- Start documenting AI governance insights or analyses

- Follow developments in AI regulation

Medium-term Development (6-18 months)

- Specialize in an emerging area (generative AI governance is particularly active right now)

- Gain hands-on experience through internal projects or volunteer work

- Develop cross-functional collaboration skills

- Earn additional certifications aligned with your focus area

Long-term Positioning (18+ months)

- Establish thought leadership through writing, speaking, or open-source contributions

- Build T-shaped expertise (broad knowledge, deep specialization)

- Focus on high-demand specializations

- Seek mentors in leadership positions

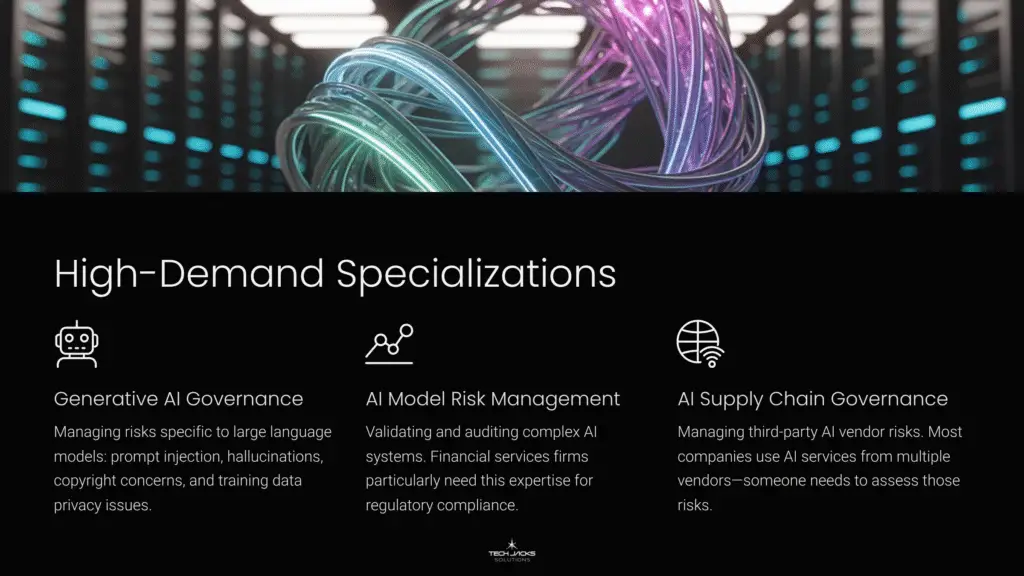

High-Value Specializations

Certain areas within AI governance are experiencing particularly strong demand.

Emerging High-Demand Areas

Generative AI Governance: Managing risks specific to large language models. This includes prompt injection vulnerabilities, hallucinations, copyright concerns, and data privacy issues with training data.

AI Model Risk Management: Validating and auditing complex AI systems. Financial services firms particularly need this expertise for regulatory compliance.

AI Regulatory Compliance: Navigating the EU AI Act, state-level regulations like California’s TFAIA and Colorado’s AI Act (SB 24-205), and sector-specific requirements. This specialization will remain in demand as regulations evolve.

AI Supply Chain Governance: Managing third-party AI vendor risks. Most companies use AI services from multiple vendors. Someone needs to assess those risks.

Industry-Specific Opportunities

Different sectors have unique governance challenges.

Healthcare: Clinical AI applications face FDA oversight. Research applications must comply with IRB requirements. HIPAA complicates data governance.

Financial Services: Model risk management frameworks already exist in banking. AI governance builds on those foundations while addressing new risks.

Government: Public sector AI requires transparency and fairness at a different level than private sector applications. Federal contracts add another layer of requirements.

Legal Services: Law firms using AI for document review or legal research need governance around attorney-client privilege and work product protections.

Planning Your AI Governance Future

AI governance represents a genuine career opportunity. The field is growing. The work matters. The skills are learnable.

Assess where you are now. Identify skill gaps. Pick your first certification. Join a professional community. Start building expertise.

This isn’t about jumping on a trend. Companies need people who can implement responsible AI practices. Regulations require it. Boards demand it. The work needs doing.

If that appeals to you, now is a good time to start.

Action Steps

- Assess your current skills and identify gaps

- Pursue certifications tailored to AI governance (start with the IAPP AIGP)

- Join professional communities focused on responsible AI

- Build practical experience through projects or internal initiatives

- Stay current on regulatory developments across multiple jurisdictions

Excited to get started? Visit our AI Governance Career Hub and our AI Governance Hub for additional resources, follow us on social media, and share our website. If you got any value out of this article, please like it. Thanks!