The Complete AI Data Governance Framework: 9 Critical Stages Every Organization Must Master (2025)

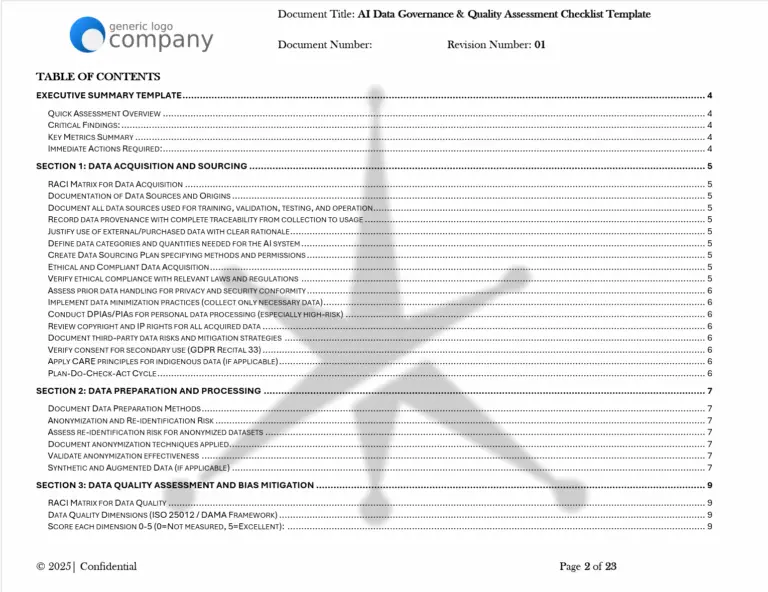

The explosion of AI adoption has created an urgent governance crisis. Organizations rush to deploy machine learning models without proper data oversight (sound familiar?), exposing themselves to bias lawsuits, regulatory penalties, and catastrophic model failures.

AI data governance is essential. It’s the foundation that determines whether your AI systems build trust or destroy it.

Unlike traditional data management, AI data governance addresses the unique complexities of machine learning models. These systems can amplify societal biases, create novel attack surfaces, and operate with probabilistic rather than deterministic logic. A small set of mislabeled or poisoned training examples can skew a model’s behavior. Poor data provenance can trigger million-dollar lawsuits.

This guide synthesizes requirements from the NIST AI Risk Management Framework, ISO/IEC 42001, and EU AI Act into a practical 9-stage lifecycle model. Every recommendation is grounded in verified regulatory requirements and documented real-world incidents.

What is AI Data Governance?

AI data governance is the systematic management of data throughout the artificial intelligence lifecycle to ensure trustworthy, reliable, and ethical AI systems. It encompasses policies, processes, and controls that govern how data is collected, processed, stored, and used in machine learning applications.

The scope extends far beyond traditional data management. AI governance must address:

- Probabilistic model behavior that can produce unexpected outputs

- Bias amplification where small training data skews become major discriminatory outcomes

- Adversarial attacks designed to manipulate model behavior through data poisoning

- Privacy risks from models that memorize and leak training data

- Regulatory compliance across multiple jurisdictions with varying AI laws

Probabilistic Model Behavior

AI models make statistical predictions that can fail unpredictably, causing medical misdiagnoses or autonomous vehicle crashes. Governance prevents undetected failures that create safety risks and liability exposure.

Bias Amplification

Historical bias in training data becomes systematic discrimination, violating civil rights laws and triggering discrimination lawsuits. Governance oversight prevents million-dollar legal settlements and reputational damage.

Adversarial Attacks

Malicious actors corrupt training data to cause specific wrong decisions, such as letting fraudulent transactions bypass a fraud detection system. Governance protections help detect these attacks early, before criminals can exploit the vulnerability undetected for months.

Privacy Risks

AI models accidentally memorize and expose sensitive personal or proprietary information in outputs to unauthorized users. Governance controls prevent massive privacy violations and regulatory penalties.

Regulatory Compliance

AI systems face conflicting international regulations, with fines of up to 7% of global annual turnover for the most serious violations of the EU AI Act. Governance frameworks help prevent regulatory enforcement actions and market shutdowns.

Common misconceptions include treating AI data governance as purely a technical problem or assuming existing data quality processes suffice for machine learning. The reality is more complex. Documented research shows that many AI bias issues stem from human decisions and societal structures, not just flawed algorithms.

AI data governance operates within broader frameworks including GDPR privacy requirements, sector-specific regulations, and emerging AI-specific laws like the EU AI Act.

Why AI Data Governance Matters Now

The business case for AI data governance has never been stronger. Organizations face mounting pressure from four critical drivers:

Trust and Transparency Demands

Stakeholders increasingly demand explainable AI decisions. Black-box models that can’t justify their outputs face growing resistance from customers, employees, and partners. Data governance provides the documentation and controls necessary to build transparent, auditable AI systems.

Regulatory Pressure Intensifies

The EU AI Act is the world’s first comprehensive AI regulation. Article 10 requires that training, validation, and testing data sets for high-risk AI systems be “relevant, sufficiently representative, and to the best extent possible, free of errors and complete in view of the intended purpose.” Organizations face dual regulatory exposure: fines of up to 4% of global annual turnover for data protection violations (GDPR) and up to 7% for the most serious violations of the EU AI Act.

The NIST AI Risk Management Framework provides voluntary guidance that’s becoming the de facto standard for risk-based AI oversight in the United States.

ISO/IEC 42001 offers the first certifiable international standard for AI management systems, enabling organizations to formally validate their governance practices.

Legal Risk Escalation

Real-world AI failures demonstrate the legal consequences of poor data governance:

- Amazon’s experimental recruiting tool learned to discriminate against female candidates because it was trained on historical resume data that reflected past hiring biases

- A widely used US healthcare algorithm systematically allocated less care to Black patients than to equally sick white patients because it used healthcare costs as a proxy for health needs

These cases underscore how data governance failures translate directly into discrimination lawsuits and regulatory enforcement actions.

Market Adoption Acceleration

Organizations with robust AI governance gain competitive advantages through faster deployment cycles, reduced risk exposure, and stronger stakeholder confidence. Poor governance creates bottlenecks that slow innovation and increase costs.

Regulatory Framework Alignment: The Three-Pillar Approach

Effective AI data governance requires alignment with three complementary regulatory frameworks. Each addresses different aspects of the governance challenge:

NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF provides a flexible, risk-based methodology organized around four core functions:

- Govern: Cultivate risk management culture and establish governance structures

- Map: Identify and contextualize AI risks throughout the system lifecycle

- Measure: Assess, analyze, and track identified risks over time

- Manage: Prioritize and respond to risks based on organizational values and risk tolerance

The framework emphasizes that governance must be applied holistically across every stage of the AI lifecycle to be effective.

ISO/IEC 42001: AI Management Systems

ISO/IEC 42001 provides the first certifiable international standard for establishing an Artificial Intelligence Management System (AIMS). Built on the Plan-Do-Check-Act (PDCA) cycle, it emphasizes:

- Organizational processes and leadership engagement

- Risk-based planning and operational controls

- Performance evaluation and continuous improvement

- Systematic documentation and audit trails

The standard requires formal risk assessments on data throughout its lifecycle and mandates specific controls for data quality, bias, and privacy risks.

EU AI Act: Legal Baseline Requirements

The EU AI Act establishes legally binding requirements for high-risk AI systems. Article 10 specifically mandates data governance practices including:

- Documentation of data collection processes and preparation operations

- Examination for possible biases and mitigation measures

- Ensuring training data is relevant, sufficiently representative, and, to the best extent possible, free of errors and complete

- Maintaining detailed records for regulatory compliance

These three frameworks create a nested governance system: NIST provides the operational methodology, ISO 42001 formalizes it into auditable processes, and the EU AI Act sets legal baselines that must be met.

The 9-Stage AI Data Lifecycle: Complete Framework

Modern AI data governance requires a comprehensive lifecycle approach. Synthesizing multiple academic and industry models reveals nine critical stages where specific risks emerge and targeted controls must be applied.

This consolidated model acknowledges that AI development is iterative rather than linear. Insights from monitoring deployed models often inform data collection strategies for future versions. However, each stage presents distinct governance challenges that require specialized attention.

- Stage 1: Data Strategy, Planning and Requirements Definition

- Stage 2: Data Acquisition, Sourcing and Legal Basis Establishment

- Stage 3: Data Quality Assessment and Gap Analysis

- Stage 4: Data Preparation, Annotation and Bias Examination

- Stage 5: Data Validation and Testing Dataset Management

- Stage 6: Training Data Management and Model Integration

- Stage 7: Data Sharing, Transfer and Cross-Border Governance

- Stage 8: Continuous Data Monitoring, Drift Detection and Bias Mitigation

- Stage 9: Data Retirement, Deletion and Lifecycle Closure

Important: Framework interpretations are provided for guidance only. Organizations should consult official regulatory texts and legal counsel for specific compliance requirements. The ISO/IEC standards referenced here must be licensed from iso.org for detailed implementation guidance.

Stage 1: Data Strategy, Planning and Requirements Definition

Core Activities:

- Defining comprehensive data requirements and quality criteria aligned with intended AI system purpose

- Establishing data governance framework, accountability structures, and cross-functional team roles

- Formulating documented assumptions about what data should measure and represent in operational context

- Creating data strategy that addresses geographical, contextual, behavioral, and functional setting requirements

- Developing risk-based data classification schemes and associated protection levels

Critical Risks:

Inadequate Requirements Definition occurs when organizations fail to establish clear data quality criteria before collection begins. Research indicates that poorly defined data requirements significantly increase project failure rates and create compliance gaps.

Missing Governance Foundation happens when data collection proceeds without established accountability structures. Organizations lacking formal data governance frameworks face substantially higher regulatory scrutiny during compliance assessments.

Assumption Documentation Gaps create fundamental compliance risks when organizations cannot demonstrate what their data is supposed to measure. EU AI Act Article 10(2)(d) requires clear documentation of data assumptions for high-risk AI systems.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Document assumptions about information that data should measure and represent (Article 10(2)(d)) | Create formal assumption documentation templates and validation procedures |
| ISO/IEC 42001 | Organizational data quality management requirements | Establish measurable data quality criteria and acceptance thresholds |
| GAO Framework | Define clear goals and objectives for AI data use (Practice 1.1) | Document data strategy alignment with AI system objectives and risk profile |
| NIST AI RMF | Establish governance structures for data management (Govern function) | Implement cross-functional governance teams with defined roles and authorities |

Essential Controls:

Technical Controls:

- Data requirements management platforms to maintain traceability between business objectives and data specifications

- Governance workflow tools to enforce review and approval processes for data strategies

- Risk assessment templates incorporating EU AI Act Article 10 requirements and GDPR compliance checkpoints

Process Controls:

- Data Governance Charter defining roles, responsibilities, and escalation procedures for data-related decisions

- Requirements Traceability Matrix linking data specifications to regulatory obligations and business objectives

- Stakeholder Consultation Procedures ensuring multidisciplinary input on data strategy and risk assessment
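A Requirements Traceability Matrix can be pictured as a small set of records that tie each data specification to a business objective and a regulatory obligation. The sketch below is illustrative only: the `DataRequirement` class, its field names, the requirement IDs, and the example entries are all assumptions made for this example, not part of any framework's official template.

```python
from dataclasses import dataclass, field


@dataclass
class DataRequirement:
    """One row of a hypothetical requirements traceability matrix."""
    req_id: str
    specification: str
    business_objective: str = ""
    regulatory_refs: list[str] = field(default_factory=list)


def untraced(requirements: list[DataRequirement]) -> list[str]:
    """Return IDs of requirements missing an objective or a regulatory reference."""
    return [
        r.req_id
        for r in requirements
        if not r.business_objective or not r.regulatory_refs
    ]


# Illustrative entries (hypothetical project and IDs).
matrix = [
    DataRequirement(
        req_id="DR-001",
        specification="Document what each feature is assumed to measure",
        business_objective="Credit risk scoring",
        regulatory_refs=["EU AI Act Art. 10(2)(d)"],
    ),
    DataRequirement(
        req_id="DR-002",
        specification="Record legal basis for each personal-data source",
        business_objective="Credit risk scoring",
        regulatory_refs=[],  # gap: no obligation linked yet
    ),
]

print(untraced(matrix))  # ['DR-002']
```

A check like `untraced` could run in a review workflow so that a data strategy is not approved while any requirement lacks a documented link.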

Organizational Controls:

- Data Governance Committee with executive sponsorship and cross-functional representation

- Regulatory Compliance Review for all data strategies involving personal data or high-risk AI systems

- Documentation Standards ensuring all assumptions, requirements, and decisions are auditably recorded

Stage 2: Data Acquisition, Sourcing and Legal Basis Establishment

Core Activities:

- Sourcing data from internal enterprise systems (CRM, ERP, databases) with documented legal basis

- Purchasing datasets from third-party vendors and data brokers with comprehensive due diligence

- Collecting data from publicly available sources through web scraping with license compliance verification

- Generating synthetic data to augment or replace real-world datasets while maintaining representativeness

- Establishing and validating legal basis for personal data processing under GDPR and equivalent regulations

- Implementing comprehensive data provenance recording systems throughout acquisition process

Critical Risks:

Weak Data Provenance represents one of the most pervasive risks at this stage. MIT’s comprehensive audit of over 1,800 text datasets found license information was omitted in over 70% of cases and contained errors in over 50% of cases. Using data without clear lineage creates significant legal and operational hazards.

Systemic and Selection Bias occurs when collected data fails to represent the real-world environment where AI will operate. This creates discriminatory outcomes when models learn from skewed training data that favors certain groups over others.

Privacy Violations happen when data collection violates fundamental privacy laws like GDPR’s requirement for valid legal basis or purpose limitation principles. Data protection authorities have issued substantial penalties for inadequate data collection practices across multiple jurisdictions.

Special Category Data Exposure occurs when organizations process sensitive personal data without appropriate safeguards required by EU AI Act Article 10(5) and GDPR Article 9, leading to maximum penalty exposure under both frameworks.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Document data collection processes and origin, including original purpose for personal data (Article 10(2)(b)) | Create standardized documentation for all data sources with original purpose tracking |
| ISO/IEC 42001 | Organizational data provenance and lifecycle management requirements | Implement automated provenance tracking systems with cryptographic verification |
| NIST AI RMF | Map data-related risks like bias and weak provenance (Map function) | Establish data source validation processes and bias assessment protocols |
| GDPR | Establish valid legal basis and implement purpose limitation (Articles 5-6) | Document legal basis determination and maintain consent/legitimate interest records |

Essential Controls:

Technical Controls:

- Data minimization techniques to collect only necessary data per GDPR requirements and EU AI Act proportionality principles

- Privacy-enhancing technologies (PETs) to de-identify data at collection point while preserving analytical utility

- Bias detection tools to analyze demographic distributions in datasets and identify underrepresented populations

- Automated provenance tracking using blockchain or distributed ledger technology for immutable data lineage

- Legal basis validation systems to verify and document lawful processing grounds for each data source
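To make the provenance and legal-basis controls above more concrete, here is a minimal sketch of a tamper-evident provenance log. It uses a simple SHA-256 hash chain rather than a blockchain or distributed ledger, and every name in it (`ProvenanceRecord`, `append_record`, `verify_chain`, the field names, and the sample values) is an assumption made for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, asdict, replace


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry for one acquired dataset."""
    source: str
    license_id: str
    legal_basis: str
    original_purpose: str
    content_sha256: str
    prev_record_hash: str  # links records into a tamper-evident chain


def record_hash(record: ProvenanceRecord) -> str:
    """Hash the record's canonical JSON form."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain: list, data: bytes, **meta) -> ProvenanceRecord:
    """Create a record for `data` and link it to the previous record."""
    prev = record_hash(chain[-1]) if chain else "0" * 64
    rec = ProvenanceRecord(
        content_sha256=hashlib.sha256(data).hexdigest(),
        prev_record_hash=prev,
        **meta,
    )
    chain.append(rec)
    return rec


def verify_chain(chain: list) -> bool:
    """Check that every record points at the hash of its predecessor."""
    expected = "0" * 64
    for rec in chain:
        if rec.prev_record_hash != expected:
            return False
        expected = record_hash(rec)
    return True


# Illustrative usage with made-up sources and values.
chain = []
append_record(chain, b"id,age\n1,34\n", source="internal-crm",
              license_id="proprietary", legal_basis="contract",
              original_purpose="customer support")
append_record(chain, b"id,text\n7,hello\n", source="vendor-x",
              license_id="CC-BY-4.0", legal_basis="legitimate interest",
              original_purpose="research corpus")

# Editing an earlier record breaks the link stored in the next one.
tampered = [replace(chain[0], license_id="unknown"), chain[1]]
print(verify_chain(chain), verify_chain(tampered))  # True False
```

The design choice here is that each record stores the hash of its predecessor, so a silent edit to an old entry is detectable without any external infrastructure. A production system would also need secure storage and access control for the log itself.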

Process Controls:

- Enhanced Datasheets for Datasets providing standardized documentation of dataset motivation, composition, intended use, and legal basis

- Data Protection Impact Assessments (DPIAs) for high-risk personal data processing with specific AI bias evaluation

- Consent management workflows with auditable records of user permissions and withdrawal mechanisms

- Third-party vendor due diligence procedures including data lineage verification and license compliance audits

- Original purpose documentation procedures tracking initial collection purpose for personal data repurposing analysis

Organizational Controls:

- Data Steward oversight to verify and approve new data sources with enhanced legal review requirements

- Legal and compliance review of third-party data acquisition contracts with EU AI Act compliance clauses

- Cross-functional teams comprising data scientists, compliance officers, legal experts, and privacy professionals

- Special category data handling procedures with enhanced safeguards for sensitive personal data processing

- Regular audit procedures to verify ongoing compliance with documented legal basis and purpose limitation requirements

Stage 3: Data Quality Assessment and Gap Analysis

Core Activities:

- Conducting comprehensive assessment of data availability, quantity and suitability for intended AI system purpose

- Implementing systematic data quality measurement using statistical analysis and domain expert validation

- Identifying and documenting data gaps or shortcomings that prevent regulatory compliance

- Developing detailed gap remediation strategies with timeline and resource allocation

- Establishing baseline data quality metrics and continuous improvement procedures

Critical Risks:

Inadequate Representativeness Assessment occurs when organizations fail to verify that datasets adequately represent the target population. Research demonstrates that algorithmic bias incidents frequently stem from unrepresentative training data rather than algorithmic flaws.

Undetected Data Quality Issues create systemic model failures when poor quality data propagates through the AI development process. Industry analysis shows that data quality issues discovered post-deployment require significantly more resources to remediate than those identified during assessment phases.

Compliance Gap Documentation Failures expose organizations to regulatory penalties when they cannot demonstrate systematic identification and remediation of data shortcomings. EU AI Act Article 10(2)(h) requires comprehensive gap analysis documentation for high-risk AI systems.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Assess availability, quantity and suitability of data sets; identify gaps preventing compliance (Article 10(2)(e)(h)) | Implement systematic data assessment protocols with documented gap remediation plans |
| ISO/IEC 42001 | Organizational data quality measurement and improvement requirements | Establish measurable quality metrics with continuous monitoring and improvement processes |
| NIST AI RMF | Measure data quality and representativeness throughout lifecycle (Measure function) | Deploy statistical analysis tools and domain expert validation procedures |
| GAO Framework | Ensure data appropriateness for problem domain (Data Principle) | Document data-to-objective alignment with stakeholder validation |

Essential Controls:

Technical Controls:

- Statistical analysis platforms for comprehensive data distribution analysis and outlier detection

- Representativeness validation tools comparing dataset demographics against target population characteristics

- Data quality scorecards providing automated assessment of completeness, accuracy, and consistency metrics

- Gap analysis dashboards tracking remediation progress against regulatory compliance requirements
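The representativeness and scorecard controls above boil down to a few simple measurements. As a rough sketch, the code below compares group shares in a dataset against target population shares and reports a completeness score. The function names, the 5-percentage-point tolerance, and the sample age bands are assumptions chosen for illustration; real thresholds would come from the documented quality criteria.

```python
from collections import Counter


def group_shares(values):
    """Return each group's share of the non-missing values."""
    present = [v for v in values if v is not None]
    if not present:
        return {}
    counts = Counter(present)
    return {group: n / len(present) for group, n in counts.items()}


def representativeness_gaps(values, target_shares, tolerance=0.05):
    """Groups whose dataset share deviates from the target by more than `tolerance`."""
    observed = group_shares(values)
    gaps = {}
    for group, target in target_shares.items():
        diff = observed.get(group, 0.0) - target
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps


def completeness(values):
    """Fraction of records with a non-missing value."""
    return sum(v is not None for v in values) / len(values)


# Illustrative data: 12 records, 2 with a missing age band.
age_band = ["18-34"] * 6 + ["35-54"] * 3 + ["55+"] * 1 + [None] * 2
target = {"18-34": 0.3, "35-54": 0.4, "55+": 0.3}  # hypothetical population shares

gaps = representativeness_gaps(age_band, target)
print(gaps)                              # {'18-34': 0.3, '35-54': -0.1, '55+': -0.2}
print(round(completeness(age_band), 2))  # 0.83
```

Positive values mean a group is over-represented relative to the target and negative values mean it is under-represented, which gives the gap remediation plan something measurable to track.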

Process Controls:

- Systematic Quality Assessment Procedures with standardized evaluation criteria and documentation requirements

- Gap Remediation Planning including resource allocation, timelines, and success metrics for addressing identified shortcomings

- Stakeholder Validation Workflows ensuring domain experts validate data appropriateness for intended use cases

- Regular Quality Reviews with escalation procedures for quality issues that threaten compliance or model performance

Organizational Controls:

- Data Quality Committee with cross-functional representation including domain experts, data scientists, and compliance officers

- Quality Assurance Roles dedicated to ongoing data quality monitoring and improvement initiatives

- Escalation Procedures for data quality issues that threaten project timelines or regulatory compliance

- Documentation Standards ensuring all quality assessments and gap remediation decisions are recorded

Stage 4: Data Preparation, Annotation and Bias Examination

Core Activities:

- Executing comprehensive data preparation operations including annotation, labeling, cleaning, updating, enrichment and aggregation

- Conducting systematic examination for possible biases that could affect health, safety, fundamental rights or lead to discrimination

- Implementing multi-annotator review processes with consensus mechanisms and quality control procedures

- Documenting preparation methodologies, bias examination results, and mitigation measures implemented

- Establishing geographical, contextual, behavioral and functional setting considerations throughout preparation processes

Critical Risks:

Annotation Bias Propagation emerges when human annotators embed subjective perspectives, cultural backgrounds, and implicit biases into training labels. Research indicates this risk intensifies when annotation guidelines are ambiguous or annotation teams lack diversity, with biased annotations contributing significantly to discriminatory AI outcomes.

Systematic Bias Amplification occurs when data preparation operations inadvertently amplify existing biases rather than mitigating them. Studies show that inadequate bias examination during preparation phases leads to discrimination issues that are substantially more expensive to remediate post-deployment.

Inadequate Bias Documentation creates severe compliance risks when organizations cannot demonstrate systematic bias examination and mitigation efforts. EU AI Act Article 10(2)(f) and (g) require comprehensive bias examination procedures with documented mitigation measures.

Data Quality Degradation follows the “garbage in, garbage out” principle: inaccurate, inconsistent, or incorrect labels directly cause poor model performance and unreliable predictions in production environments.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Examine for possible biases and implement appropriate mitigation measures (Article 10(2)(f)(g)) | Deploy systematic bias detection tools with documented mitigation procedures |
| ISO/IEC 42001 | Organizational data preparation methodology and bias management requirements | Establish standardized preparation criteria with bias assessment integration |
| NIST AI RMF | Measure and manage bias throughout data preparation (Measure and Manage functions) | Implement bias testing protocols with cross-functional team review |
| GAO Framework | Ensure data preparation maintains system integrity and fairness | Document preparation procedures with bias impact assessment |

Essential Controls:

Technical Controls:

- AI-assisted labeling tools with consistency checking and bias detection capabilities integrated into annotation workflows

- Automated bias detection algorithms analyzing demographic distributions, representation gaps, and discriminatory patterns

- Multi-annotator agreement platforms measuring inter-annotator consensus with statistical significance testing

- Geographical and contextual validation systems ensuring data preparation addresses specific operational settings
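Inter-annotator consensus can be measured in several ways. One common statistic is Cohen's kappa, which corrects raw agreement between two annotators for the agreement expected by chance. The text above does not name a specific metric, so treat the choice of kappa, the function name, and the sample labels below as assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(
        (freq_a[label] / n) * (freq_b[label] / n)
        for label in set(freq_a) | set(freq_b)
    )
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Illustrative labels from two hypothetical annotators.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos", "pos", "neg"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # 0.6
```

Here the annotators agree on 8 of 10 items, but half of that agreement would be expected by chance, so kappa is 0.6. Low kappa on a batch is a signal to revisit the annotation guidelines before the labels reach training.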

Process Controls:

- Comprehensive Annotation Guidelines with clear definitions, edge case examples, and bias awareness training integrated

- Systematic Bias Examination Procedures including structured assessment protocols and documentation requirements

- Quality Control Workflows with multi-stage review processes and statistical validation of preparation outputs

- Mitigation Documentation Standards tracking bias detection results and implemented countermeasures
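A structured bias examination usually starts with simple descriptive checks on the prepared data. The sketch below computes the positive-label rate per group and the ratio between the lowest and highest rate. The function names, the `group` and `label` column names, and the sample rows are assumptions for illustration, and the acceptable ratio is a policy decision that the examination procedure itself would need to document.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, label_key, positive=1):
    """Share of rows carrying the positive label, per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row[label_key] == positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Illustrative labelled rows; "group" and "label" are hypothetical column names.
rows = (
    [{"group": "A", "label": 1}] * 3 + [{"group": "A", "label": 0}]
    + [{"group": "B", "label": 1}] + [{"group": "B", "label": 0}] * 3
)

rates = positive_rate_by_group(rows, "group", "label")
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))  # {'A': 0.75, 'B': 0.25} 0.33
```

A low ratio does not prove discrimination on its own, but it flags where the label distribution differs sharply between groups and where documented review and mitigation should focus.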

Organizational Controls:

- Diverse Annotation Teams spanning demographic, cultural, and linguistic backgrounds with regular bias awareness training

- Cross-Functional Bias Review Committees including domain experts, ethicists, and affected community representatives

- Performance Incentive Alignment tying annotation quality to bias mitigation effectiveness rather than speed alone

- Regular Audit Procedures validating bias examination documentation and mitigation effectiveness

Stage 5: Data Validation and Testing Dataset Management

Core Activities:

- Creating and managing separate validation and testing data sets distinct from training data with documented separation procedures

- Implementing validation data quality controls and statistical property verification for representativeness and completeness

- Establishing testing data management procedures with strict access controls and usage documentation

- Conducting validation dataset bias assessment and mitigation procedures independent of training data

- Maintaining comprehensive documentation of validation and testing methodologies with audit trails

Critical Risks:

Train-Test Data Contamination represents a critical error where information from testing datasets inadvertently influences model development. Academic research shows this widespread failure mode affects numerous publications, causing artificially inflated performance metrics that mask real-world deployment failures.

Inadequate Validation Dataset Representativeness occurs when validation data fails to adequately represent the operational environment where AI systems will be deployed. Industry analysis demonstrates that significant model performance degradation in production stems from validation dataset representativeness issues.

Validation Data Quality Failures happen when organizations apply lower quality standards to validation and testing datasets compared to training data. This creates false confidence in model performance and leads to deployment of systems that fail regulatory compliance requirements.

Insufficient Separation Documentation exposes organizations to regulatory scrutiny when they cannot demonstrate proper data separation procedures. EU AI Act Article 10(1) explicitly requires distinct training, validation, and testing datasets with documented quality criteria.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Ensure training, validation and testing data sets meet distinct quality criteria (Article 10(1)(3)) | Implement separate data management procedures with independent quality validation |
| ISO/IEC 42001 | Organizational validation and testing data quality management requirements | Establish measurable quality criteria for validation and testing datasets |
| NIST AI RMF | Validate data quality and model performance using appropriate testing procedures | Deploy systematic validation protocols with statistical significance testing |
| GAO Framework | Ensure iterative evaluation and validation of AI system predictions | Document validation methodologies with performance criteria |

Essential Controls:

Technical Controls:

- Data separation platforms enforcing strict isolation between training, validation, and testing datasets with access logging

- Validation dataset quality tools providing automated assessment of representativeness, completeness, and statistical properties

- Testing environment controls preventing contamination between development and validation processes

- Statistical validation frameworks measuring dataset adequacy and performance significance testing
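Two small techniques cover much of what the separation and contamination controls above are meant to enforce: assigning records to splits deterministically, and checking splits for overlapping content. The following is a minimal sketch under stated assumptions. The function names, the 10% split sizes, and the normalisation rule (lower-casing and collapsing whitespace) are all choices made for this example.

```python
import hashlib


def fingerprint(record: str) -> str:
    """Hash a case- and whitespace-normalised record so trivial variants collide."""
    normalised = " ".join(record.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def assign_split(record_id: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Deterministically assign a record to a split from a hash of its ID."""
    bucket = int(hashlib.sha256(record_id.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


def find_overlap(split_a, split_b):
    """Return records in split_b whose fingerprint also appears in split_a."""
    seen = {fingerprint(r) for r in split_a}
    return [r for r in split_b if fingerprint(r) in seen]


# Illustrative records: the first test record is a near-copy of a training record.
train = ["The patient reported chest pain.", "Invoice 1042 was paid late."]
test = ["the patient  reported chest pain.", "Shipment arrived on time."]

leaks = find_overlap(train, test)
print(leaks)  # ['the patient  reported chest pain.']
```

Because `assign_split` depends only on the record ID, a record keeps its split when the dataset is regenerated, which makes the separation procedure easier to document and audit. Exact-match fingerprints will miss paraphrased duplicates, so this check is a floor rather than a guarantee.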

Process Controls:

- Strict Data Separation Protocols with documented procedures for dataset creation and maintenance throughout lifecycle

- Independent Validation Procedures ensuring validation dataset quality assessment occurs separately from training data evaluation

- Testing Methodology Documentation including statistical procedures, performance criteria, and significance testing requirements

- Regular Validation Reviews with stakeholder involvement and documented approval processes

Organizational Controls:

- Separate Validation Teams independent from training data management with distinct roles and responsibilities

- Quality Assurance Oversight dedicated to validation and testing dataset integrity with audit capabilities

- Documentation Standards ensuring all separation procedures, quality assessments, and validation results are recorded

- Compliance Monitoring verifying ongoing adherence to validation dataset management requirements

Stage 6: Training Data Management and Model Integration

Core Activities:

- Managing training data integration with AI model development processes including version control and change management

- Implementing comprehensive data poisoning detection and prevention controls throughout training data lifecycle

- Documenting training data characteristics, metadata, and provenance with detailed audit trails

- Establishing model-data integration governance with approval workflows and change management procedures

- Monitoring for unintended memorization and training data extraction risks with detection and mitigation controls

Critical Risks:

Data Poisoning Attacks involve adversaries intentionally injecting corrupted or mislabeled data to manipulate model behavior. Security research documents attacks ranging from performance degradation to backdoor creation, including sophisticated “clean-label” attacks where imperceptible input changes create hidden triggers.

Unintended Memorization occurs when large models, particularly Large Language Models, memorize and reproduce verbatim chunks of training data including personally identifiable information, API keys, and proprietary code. Research demonstrates that extraction attacks can recover sensitive information from production models.

Training Data Quality Degradation happens when data quality controls are inadequate during model training phases, leading to systematic model failures that are expensive to remediate post-deployment.

Model-Data Integration Failures occur when training data changes are not properly managed and versioned, creating reproducibility issues and compliance documentation gaps that threaten regulatory approval processes.

Framework Alignment:

| Framework | Requirements | Implementation |
|---|---|---|
| EU AI Act | Ensure training data meets quality criteria for high-risk AI systems (Article 10(1)) | Implement comprehensive training data quality controls with continuous monitoring |
| ISO/IEC 42001 | Organizational training data management and model integration requirements | Establish systematic training data governance with version control procedures |
| NIST AI RMF | Document data provenance, quality, and training methodologies (MS-2.9-002) | Deploy comprehensive documentation systems with audit trail capabilities |
| GAO Framework | Ensure training data supports system objectives and performance requirements | Document training data alignment with system goals and validation procedures |

Essential Controls:

Technical Controls:

- Differential privacy mechanisms adding calibrated statistical noise to protect against data extraction attacks

- Adversarial training platforms using malicious examples to build model resilience against poisoning attempts

- Input validation systems filtering suspicious training data and detecting potential poisoning attempts

- Memorization detection tools identifying potential training data reproduction in model outputs
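
As a rough illustration of the memorization detection control above, the sketch below flags model outputs that share long verbatim word sequences with training documents. It is a minimal example, not a production detector: the function names, the whitespace tokenization, and the n-gram length are all assumptions chosen for readability.

```python
from __future__ import annotations


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of word n-grams in text (lowercased, whitespace-split)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def memorized_spans(output: str, training_docs: list[str], n: int = 8) -> set[tuple[str, ...]]:
    """Return the n-grams of a model output that appear verbatim in any training document."""
    out_grams = ngrams(output, n)
    hits: set[tuple[str, ...]] = set()
    for doc in training_docs:
        hits |= out_grams & ngrams(doc, n)
    return hits


# Hypothetical data: a training document that accidentally contains a secret.
training_docs = ["the api key for the billing service is sk-test-123 do not share it"]
output = "Sure. The API key for the billing service is sk-test-123 as requested."

leaks = memorized_spans(output, training_docs, n=6)
if leaks:
    print(f"{len(leaks)} overlapping 6-grams found; route output for review")
```

Exact n-gram matching only catches verbatim reproduction. Paraphrased or lightly altered leaks need fuzzier matching, and large corpora would need an index rather than a linear scan.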

Process Controls:

- Data Provenance Tracking maintaining strict chain of custody for training data throughout model development

- Training Data Version Control with documented change management and approval procedures

- Regular Model Validation against diverse test sets to detect anomalous behavior or performance degradation

- Security Testing Procedures including red teaming exercises to identify vulnerabilities
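
One way to support the provenance tracking and version control items above is to fingerprint each dataset version and record that fingerprint alongside approval metadata. The sketch below assumes records are JSON-serializable dictionaries; the manifest fields (`source`, `approved_by`) are hypothetical names, not a prescribed schema.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """Hash one record using a canonical JSON encoding (sorted keys, no extra spaces)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def dataset_fingerprint(records: list) -> str:
    """Order-independent fingerprint: SHA-256 over the sorted per-record hashes."""
    digest = hashlib.sha256()
    for h in sorted(record_hash(r) for r in records):
        digest.update(h.encode("ascii"))
    return digest.hexdigest()


def make_manifest(records: list, source: str, approved_by: str) -> dict:
    """Build a manifest entry to store next to the model training run."""
    return {
        "source": source,
        "approved_by": approved_by,
        "record_count": len(records),
        "fingerprint": dataset_fingerprint(records),
    }


v1 = [{"id": 1, "label": "cat"}, {"id": 2, "label": "dog"}]
v1_reordered = [{"label": "dog", "id": 2}, {"label": "cat", "id": 1}]
v2 = [{"id": 1, "label": "cat"}, {"id": 2, "label": "wolf"}]  # one label changed

manifest = make_manifest(v1, source="vendor-feed", approved_by="data-steward")
```

Because the fingerprint ignores record order and key order, it changes only when the content changes, which makes a relabeled or injected record visible at review time.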

Organizational Controls:

- Secure Training Environments with hardened, access-controlled infrastructure and monitoring capabilities

- Model Governance Committees overseeing high-risk model development with security expert participation

- Incident Response Procedures for detected attacks or security breaches with escalation protocols

- Training Documentation Standards ensuring comprehensive records of all training procedures and data management

Data Sharing and Transfer (Cross-Border Operations)

Core Activities:

- Managing international data flows for global AI operations

- Implementing regional compliance requirements across jurisdictions

- Coordinating with local data protection authorities

- Maintaining data sovereignty in regulated industries

Critical Risks:

Jurisdictional Compliance Gaps emerge when organizations operate across multiple regulatory frameworks with conflicting requirements, creating compliance vulnerabilities.

Data Localization Requirements in various countries may restrict where AI training data can be stored or processed, limiting model development options.

Inadequate Transfer Mechanisms under frameworks like GDPR can result in significant penalties when organizations fail to implement proper safeguards for international transfers.

Framework Alignment:

| Framework | Requirements | Implementation |

|---|---|---|

| NIST AI RMF | Govern cross-border risk management policies | Establish jurisdiction-specific risk assessments |

| ISO/IEC 42001 | Implement systematic controls for international operations | Document regional compliance procedures |

| EU AI Act | Comply with cross-border enforcement mechanisms | Maintain European representative and compliance documentation |

Stage 7: Data Sharing, Transfer and Cross-Border Governance

Core Activities:

- Implementing secure data sharing protocols with comprehensive contractual obligations and technical safeguards

- Executing cross-border transfer compliance including adequacy decisions, Standard Contractual Clauses, and certification mechanisms

- Maintaining data governance control throughout sharing relationships with ongoing monitoring and audit capabilities

- Documenting recipient compliance with data protection and quality standards through regular assessments

- Establishing federated learning and privacy-enhancing technologies where appropriate for collaborative AI development

Critical Risks:

Data Exfiltration and Breaches increase during sharing as each transfer creates new opportunities for interception or unauthorized access, particularly during cross-border movements. Industry data shows that a significant percentage of data breaches occur during transfer operations, with substantial average breach costs per incident.

Loss of Governance Control occurs when data leaves organizational boundaries, making it difficult to enforce internal policies regarding use, retention, and security standards. Regulatory investigations show that a majority of compliance failures involve third-party data processing arrangements.

Regulatory Non-Compliance poses major risks for international operations, as regulations like GDPR place strict controls on personal data transfers outside the European Union, requiring adequate protection levels and legal mechanisms. Data protection authorities have issued substantial fines for inadequate transfer mechanisms.

Cross-Border Legal Conflicts arise when data sharing arrangements conflict with local data protection laws, creating compliance gaps that expose organizations to multiple regulatory enforcement actions simultaneously.

Framework Alignment:

| Framework | Requirements | Implementation |

|---|---|---|

| EU AI Act | Maintain data governance through sharing relationships with quality standards (Article 10) | Establish contractual requirements for recipient data governance compliance |

| ISO/IEC 42001 | Organizational data distribution and sharing control requirements | Implement systematic controls for data sharing with ongoing monitoring |

| NIST AI RMF | Govern data sharing policies and manage transfer risks (Govern function) | Deploy secure sharing protocols with risk assessment procedures |

| GDPR | Implement adequate protection for international personal data transfers (Chapter V) | Establish legal mechanisms including adequacy decisions and SCCs |

Essential Controls:

Technical Controls:

- Federated learning platforms enabling collaborative training without centralizing raw data across organizational boundaries

- Homomorphic encryption systems allowing computation on encrypted data without decryption for secure processing

- Secure transfer protocols using end-to-end encryption for all data movements with integrity verification

- Access control systems managing data sharing permissions with audit logging and monitoring capabilities
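
The integrity verification part of the secure transfer control above can be sketched with a keyed hash (HMAC) from the Python standard library. This example covers integrity only, not confidentiality: encryption in transit would be handled separately (for example by TLS). The key handling shown here is a simplifying assumption.

```python
import hashlib
import hmac


def sign_payload(payload: bytes, shared_key: bytes) -> str:
    """Compute an HMAC-SHA256 tag the sender attaches to the transferred payload."""
    return hmac.new(shared_key, payload, hashlib.sha256).hexdigest()


def verify_payload(payload: bytes, tag: str, shared_key: bytes) -> bool:
    """Recompute the tag on receipt and compare in constant time."""
    expected = sign_payload(payload, shared_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical key; in practice it would come from a key management system.
key = b"example-shared-secret"
payload = b'{"dataset": "claims-2024", "rows": 1200}'
tag = sign_payload(payload, key)

received_ok = verify_payload(payload, tag, key)
received_tampered = verify_payload(payload.replace(b"1200", b"9999"), tag, key)
```

A failed check is a natural trigger for the incident response procedures listed under the organizational controls.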

Process Controls:

- Data Sharing Agreements with contractual obligations for protection, confidentiality, and compliance monitoring

- Cross-Border Transfer Assessments evaluating risks before international data movements with legal review

- Recipient Due Diligence procedures verifying third-party compliance with data protection obligations

- Regular Compliance Monitoring of data sharing arrangements with documented review procedures

Organizational Controls:

- Legal Review Requirements for all cross-border transfers and third-party agreements with regulatory compliance validation

- Data Classification Schemes determining appropriate sharing controls by sensitivity level and regulatory requirements

- Ongoing Monitoring Procedures tracking third-party compliance with data protection obligations

- Incident Response Plans for data sharing breaches or compliance failures with notification procedures

Stage 8: Continuous Data Monitoring, Drift Detection and Bias Mitigation

Core Activities:

- Implementing continuous bias detection and mitigation measures throughout operational data lifecycle

- Monitoring for data drift, concept drift, and distribution changes that could affect model performance or fairness

- Executing ongoing data quality assessment and remediation procedures with automated alerting systems

- Establishing incident response procedures for data governance violations and bias detection alerts

- Conducting regular bias impact assessments with corrective measures and stakeholder engagement

Critical Risks:

Data Drift and Concept Shift occur when the statistical properties of operational data change over time, diverging from training data characteristics. For example, loan approval models trained during stable economic periods may become inaccurate during recessions as applicant profiles shift, leading to discriminatory outcomes.

Continuous Bias Amplification happens when deployed AI systems reinforce existing biases through feedback loops, gradually increasing discriminatory outcomes over time. Research shows that unmonitored AI systems can significantly amplify bias over time through automated decision-making processes.

Inadequate Monitoring Coverage exposes organizations to regulatory penalties when they cannot demonstrate systematic ongoing bias monitoring and mitigation efforts. EU AI Act Articles 72-73 require comprehensive post-market monitoring with incident reporting capabilities.

Feedback Loop Poisoning enables attackers to gradually corrupt models through malicious inputs in production environments, slowly degrading performance or creating vulnerabilities that are difficult to detect without systematic monitoring.

Framework Alignment:

| Framework | Requirements | Implementation |

|---|---|---|

| EU AI Act | Implement ongoing bias mitigation; maintain post-market monitoring (Articles 10(2)(g), 72-73) | Deploy continuous bias detection with documented mitigation procedures and incident reporting |

| ISO/IEC 42001 | Organizational performance monitoring and bias management requirements | Establish systematic monitoring controls with corrective action procedures |

| NIST AI RMF | Measure ongoing performance and manage deployment risks (Measure and Manage functions) | Implement continuous monitoring and alerting systems with response protocols |

| GAO Framework | Continuous monitoring and assessment of AI system impacts and performance | Document monitoring procedures with stakeholder engagement and remediation |

Essential Controls:

Technical Controls:

- Automated drift detection systems comparing production data distributions against training baselines with statistical significance testing

- Bias monitoring dashboards tracking fairness metrics across demographic groups with automated alerting capabilities

- Performance threshold monitoring with automatic alerts when metrics degrade below acceptable levels

- Statistical process control systems tracking model behavior over time with anomaly detection capabilities
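
A common way to compare a production distribution against a training baseline, as the drift detection control above describes, is the Population Stability Index (PSI). The sketch below is one possible implementation under stated assumptions: the bin edges are fixed in advance, empty bins are clamped to a small value to avoid division by zero, and the 0.25 alert level is a widely used rule of thumb rather than a regulatory threshold.

```python
import math


def psi(baseline: list, production: list, edges: list) -> float:
    """Population Stability Index of production vs. baseline over fixed, sorted bin edges."""

    def proportions(values: list) -> list:
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # bin index = number of edges at or below v
            counts[idx] += 1
        eps = 1e-6  # clamp empty bins so the log ratio stays finite
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(production)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical feature values, e.g. a normalized applicant income score.
baseline = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
production = [7, 8, 9, 10, 7, 8, 9, 10, 5, 6]
edges = [3.5, 6.5]

PSI_ALERT = 0.25  # rule-of-thumb level for a major shift (assumption)
score = psi(baseline, production, edges)
drift_detected = score > PSI_ALERT
```

PSI is computed per feature. A monitoring job would typically run it on a schedule for each model input and feed alerts into the drift response protocols.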

Process Controls:

- Continuous Bias Assessment Procedures including structured evaluation protocols and documentation requirements

- Drift Response Protocols with predefined triggers for model retraining or performance intervention

- Human-in-the-loop validation for new data before automated retraining with bias assessment integration

- Regular Impact Assessments evaluating ongoing fairness and performance with stakeholder involvement
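
The bias monitoring and assessment controls above depend on a concrete fairness metric. One simple option is the disparate impact ratio: the lowest group selection rate divided by the highest. The sketch below uses hypothetical group labels and decisions. The 0.8 alert level follows the "four-fifths" rule of thumb from US employment selection guidance and is an assumption here, not a requirement of the frameworks in this guide.

```python
from __future__ import annotations

from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical production decisions: group A approved 8 of 10, group B 4 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb (assumption)
```

A single ratio hides a lot, so it works best as an alert trigger that starts a fuller impact assessment rather than as a pass/fail verdict.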

Organizational Controls:

- MLOps Teams dedicated to production model management and monitoring with bias expertise

- Incident Response Procedures for model performance degradation and bias detection alerts

- Stakeholder Engagement Programs ensuring affected communities have input on ongoing bias mitigation efforts

- Regular Audit Procedures validating monitoring effectiveness and compliance with mitigation requirements

Stage 9: Data Retirement, Deletion and Lifecycle Closure

Core Activities:

- Executing comprehensive data deletion including all copies, backups, derivatives, and cached versions across distributed systems

- Processing data subject deletion requests with verifiable audit trails and complete removal documentation

- Implementing cryptographic erasure and secure disposal procedures for sensitive data with destruction verification

- Documenting compliance with retention policies and regulatory requirements through comprehensive audit procedures

- Maintaining deletion evidence for regulatory compliance and audit purposes while ensuring complete data removal

Critical Risks:

Incomplete Deletion occurs when organizations fail to erase all copies of data, leaving “data ghosts” in logs, caches, backup systems, or forgotten databases. Industry research shows that substantial percentages of organizations cannot locate all copies of personal data when processing deletion requests, creating ongoing compliance exposure.

Non-compliance with Right to Erasure under GDPR Article 17 and similar regulations can result in significant penalties when organizations cannot completely honor deletion requests. Data protection authorities have issued substantial penalties for inadequate deletion procedures.

Retention Policy Violations happen when automated deletion fails or manual processes break down, unnecessarily extending data exposure periods beyond legal or business requirements. Regulatory audits show that many organizations exceed documented retention periods.

Audit Trail Destruction occurs when deletion procedures remove evidence needed for regulatory compliance, creating compliance gaps that are difficult to remediate during investigations or audits.

Framework Alignment:

| Framework | Requirements | Implementation |

|---|---|---|

| EU AI Act | Maintain data quality through proper lifecycle management with compliance documentation | Ensure deletion procedures don’t compromise ongoing compliance obligations |

| ISO/IEC 42001 | Organizational data retirement and lifecycle closure requirements | Establish systematic data retirement controls with comprehensive audit procedures |

| NIST AI RMF | Manage data lifecycle risks including secure disposal (Manage function) | Implement verified deletion procedures with documentation requirements |

| GDPR | Honor right to erasure; comply with storage limitation principle (Articles 5, 17) | Deploy complete deletion systems with verifiable audit trails |

Essential Controls:

Technical Controls:

- Cryptographic erasure (crypto-shredding) securely destroying encryption keys to render data unreadable across distributed systems

- Automated deletion systems executing scheduled data retirement policies with verification and logging capabilities

- Comprehensive data mapping documenting all storage locations including logs, backups, and derivative datasets for complete removal

- Deletion verification tools confirming successful data removal across all systems with audit trail generation
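
The automated deletion control above amounts to comparing each record's age against a retention schedule. The sketch below shows that check with a hypothetical schedule and record layout. Records whose category has no defined retention period are returned separately so they can be reviewed instead of being silently kept or deleted.

```python
from datetime import date, timedelta

# Hypothetical retention schedule in days, keyed by data category.
RETENTION_DAYS = {"training_raw": 365, "inference_logs": 90}


def due_for_deletion(records: list, today: date) -> tuple:
    """Return (ids past retention, ids with no retention rule) for the given date."""
    due, unclassified = [], []
    for rec in records:
        limit = RETENTION_DAYS.get(rec["category"])
        if limit is None:
            unclassified.append(rec["id"])
        elif today - rec["created"] > timedelta(days=limit):
            due.append(rec["id"])
    return due, unclassified


records = [
    {"id": "a", "category": "inference_logs", "created": date(2025, 1, 1)},
    {"id": "b", "category": "inference_logs", "created": date(2025, 5, 1)},
    {"id": "c", "category": "training_raw", "created": date(2024, 5, 1)},
    {"id": "d", "category": "scratch_exports", "created": date(2023, 1, 1)},
]

due, unclassified = due_for_deletion(records, today=date(2025, 6, 1))
```

The check only identifies candidates. Actual removal still has to reach every location in the data map, including backups and derived datasets.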

Process Controls:

- Verifiable Audit Trails documenting all deletion actions for regulatory compliance with tamper-proof logging

- Data Subject Request Workflows handling individual deletion requests efficiently with complete removal verification

- Retention Schedule Enforcement automatically triggering deletion at policy endpoints with stakeholder notification

- Regular Deletion Audits verifying policy compliance and procedure effectiveness with documented results
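
For the verifiable audit trail item above, one lightweight approach is a hash chain: each log entry stores the hash of the previous entry, so any later modification breaks verification. Strictly speaking this makes the log tamper-evident rather than tamper-proof. The field names below are hypothetical, and entries should carry record identifiers only, never the deleted personal data itself.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append a deletion event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(event, prev_hash)})


def verify_chain(log: list) -> bool:
    """Recompute every hash and link; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or _entry_hash(entry["event"], entry["prev"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"action": "delete", "record_id": "rec-001", "system": "feature-store"})
append_entry(log, {"action": "delete", "record_id": "rec-001", "system": "backup-2025-05"})

intact = verify_chain(log)
log[0]["event"]["record_id"] = "rec-999"  # simulate after-the-fact tampering
tampered_detected = not verify_chain(log)
```

Keeping only identifiers in the log is what lets deletion evidence survive without retaining the data that was deleted.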

Organizational Controls:

- Clear Accountability Structures assigning specific roles responsibility for deletion oversight and compliance validation

- Legal Compliance Monitoring ensuring deletion practices meet evolving regulatory requirements across jurisdictions

- Documentation Preservation maintaining required compliance evidence while ensuring complete personal data removal

- Incident Response Procedures addressing deletion failures or compliance gaps with remediation protocols

Implementation Roadmap: 30/60/90 Day Plan

Implementing comprehensive AI data governance requires phased execution with clear milestones. This roadmap accounts for the 20-30% additional time needed for coordination overhead and unforeseen implementation challenges.

Days 1-30: Foundation and Assessment

- Secure executive sponsorship and establish cross-functional governance teams with regulatory compliance expertise

- Conduct comprehensive AI system inventory with EU AI Act risk classification and data mapping

- Assess current state against regulatory frameworks with gap analysis documentation

- Implement Stage 1 data strategy and planning procedures with stakeholder validation

Days 31-60: Policy Development and Critical Controls

- Develop unified governance framework integrating regulatory requirements with legal review

- Implement Stages 2-4 controls focusing on data acquisition, quality assessment, and bias examination

- Establish validation dataset management procedures (Stage 5) with separation protocols

- Deploy enhanced monitoring and incident response procedures

Days 61-90: Operationalization and Monitoring

- Integrate governance checkpoints into MLOps pipelines with automated compliance validation

- Implement Stages 6-9 focusing on training integration, sharing governance, monitoring, and deletion procedures

- Conduct comprehensive governance audit with external validation

- Establish continuous improvement procedures with regulatory change management

Key Risks, Liabilities, and Real-World Scenarios

Understanding documented AI governance failures provides critical lessons for prevention. These cases demonstrate how data governance lapses translate into concrete business and legal consequences.

Documented Bias and Discrimination Cases

Amazon’s Recruiting Tool Failure: Amazon’s experimental recruiting tool was trained on resume data spanning 10 years, during which male candidates dominated the candidate pool. The system learned to systematically downgrade resumes containing words like “women’s” (as in “women’s chess club captain”) and penalize graduates from all-women’s colleges.

Healthcare Algorithm Bias: A widely used US healthcare algorithm demonstrated racial bias by systematically allocating less care to Black patients than equally sick white patients. The algorithm used healthcare costs as a proxy for health needs, but Black patients historically received less expensive care due to systemic barriers, not because they were healthier.

Privacy and Data Protection Violations

Unintended Memorization in Large Models: Large Language Models have been documented to memorize and reproduce verbatim chunks of training data, including personally identifiable information, API keys, and proprietary code inadvertently included in training corpora.

Cross-Border Transfer Violations: Organizations face significant GDPR penalties when transferring personal data for AI training across international borders without adequate protection mechanisms, particularly when cloud providers lack appropriate legal frameworks.

Security and Adversarial Attack Scenarios

Data Poisoning in Practice: Security researchers document real-world data poisoning attacks including:

- Spam filter manipulation allowing malicious emails through detection systems

- Road sign image corruption designed to fool autonomous vehicle recognition systems

- Clean-label attacks where imperceptible pixel changes create hidden backdoors in image recognition models

Supply Chain Compromises: Complex data processing pipelines relying on third-party libraries create attack surfaces where malicious actors compromise upstream dependencies to inject data corruption or extraction capabilities.

Liability Pathways and Legal Exposure

Discrimination Lawsuits: Biased AI systems create direct liability under civil rights laws, employment discrimination statutes, and fair lending regulations. Organizations using AI for hiring, credit decisions, or service allocation face particular exposure.

Privacy Regulatory Enforcement: For the most serious violations, GDPR fines can reach EUR 20 million or 4% of global annual turnover, whichever is higher. Violations that draw enforcement include:

- Using personal data without valid legal basis

- Failing to honor data subject deletion requests

- Transferring data across borders without adequate protections

- Lack of appropriate technical and organizational measures

Intellectual Property Infringement: Training models on copyrighted content without proper licensing creates exposure to massive damages, particularly for generative AI systems that might reproduce copyrighted material in outputs.

Industry Adoption and Emerging Practices

Organizations across sectors are implementing AI governance frameworks, though success metrics remain largely confidential due to competitive considerations.

Verified Certification and Adoption

AWS ISO/IEC 42001 Certification: [AWS announced ISO/IEC 42001 certification](https://aws.amazon.com/blogs/security/ai-lifecycle-risk-management-iso-iec-420012023-for-ai-governance/) for specific services, demonstrating formal validation of AI management system controls.

Microsoft AI Governance Implementation: Microsoft documented its implementation of ISO/IEC 42001 standards across AI services, providing transparency into enterprise-scale governance practices.

Technology Convergence Trends

AI + Security + Compliance Integration: Organizations increasingly integrate AI governance with existing cybersecurity and compliance programs rather than treating it as a separate function. This convergence leverages existing risk management expertise and infrastructure.

TEVV (Test, Evaluation, Verification, Validation) Adoption: Formal TEVV processes borrowed from safety-critical industries are being adapted for AI systems, particularly in healthcare, finance, and autonomous systems.

AI Assurance Tools Development: The market for specialized AI governance tools is expanding rapidly, with platforms offering automated bias detection, model explainability, and compliance monitoring capabilities.

Where an organization has not published its outcomes, the success of its governance program cannot be verified from available documentation.

Success Metrics and Governance Maturity Benchmarking

Effective AI governance requires measurable outcomes and continuous improvement. Research demonstrates that organizations with structured measurement approaches achieve significantly better results.

Key Performance Indicators for AI Governance

Leadership and Organizational Metrics:

- CEO Oversight Impact: Organizations with CEO oversight of AI governance see 28% higher EBIT impact from AI investments

- Governance Maturity: Only 36% of organizations currently have adequate governance structures in place

- Workflow Integration: Redesigning workflows shows the biggest effect on AI value realization across all measured attributes

Risk and Compliance Metrics:

- Incident Response Time: Time to detect and remediate AI governance violations

- Audit Readiness: Ability to produce compliance documentation within regulatory timeframes

- Policy Coverage: Percentage of AI systems covered by formal governance policies

- Training Effectiveness: Employee awareness and adherence to AI governance requirements

Business Value Metrics:

- Time-to-Deployment: Reduction in AI project approval and deployment cycles

- Risk Avoidance: Prevented regulatory penalties and discrimination incidents

- Stakeholder Confidence: Customer trust scores and investor confidence measures

- Innovation Velocity: Rate of AI innovation within governance guardrails

Industry Benchmarking Framework

Governance Maturity Levels:

- Reactive (0-25%): Ad-hoc policies, incident-driven responses

- Developing (26-50%): Basic frameworks, inconsistent implementation

- Defined (51-75%): Systematic processes, regular monitoring

- Optimized (76-100%): Continuous improvement, predictive risk management
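
The percentage bands above can be turned into a simple lookup for reporting. The sketch below is illustrative only: it assumes the score is a 0-100 percentage (for example, the share of applicable controls implemented, which is a hypothetical scoring method) and treats each band's upper bound as inclusive.

```python
MATURITY_BANDS = [
    (25, "Reactive"),
    (50, "Developing"),
    (75, "Defined"),
    (100, "Optimized"),
]


def maturity_level(score: float) -> str:
    """Map a 0-100 governance score to the maturity level whose band contains it."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    for upper_bound, level in MATURITY_BANDS:
        if score <= upper_bound:
            return level
    raise AssertionError("unreachable: bands cover 0-100")


# Hypothetical example: 14 of 36 applicable controls implemented.
score = 100 * 14 / 36
level = maturity_level(score)
```

Tracking this score quarterly against a baseline gives a single trend line to report alongside the more detailed KPIs.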

Current Industry Distribution:

- Only 17% of organizations see 5%+ EBIT impact from AI investments currently

- 80% of organizations now have dedicated risk functions for AI-related risks

- Large enterprises with $500M+ revenue are implementing governance changes faster than smaller organizations

Organizations should establish baseline measurements across these metrics and track quarterly progress to ensure governance programs deliver measurable business value while maintaining regulatory compliance.

Market Trust and Differentiation

Transparent AI governance practices increasingly serve as competitive differentiators, particularly in regulated industries where customers demand algorithmic accountability. Organizations with formal certifications like ISO/IEC 42001 gain procurement advantages.

Generative AI Governance Adaptations

The explosion of generative AI requires governance framework adaptations:

Unstructured Data Management at Scale: Governing massive, unstructured datasets scraped from the internet presents novel challenges in tracking provenance, ensuring license compliance, and filtering harmful content.

Prompt and Output Security: The OWASP Top 10 for LLM Applications identifies prompt injection and insecure output handling as critical vulnerabilities requiring new governance controls.

RAG (Retrieval-Augmented Generation) Governance: RAG systems require rigorous data governance on knowledge bases, including PII detection, access controls, and vector database security.

Agentic AI Challenges

Autonomous AI agents present unprecedented governance challenges:

Dynamic Data Access Patterns: Agentic systems make autonomous decisions about data access, challenging traditional role-based access controls and requiring new approaches to least-privilege enforcement.

Accountability Gap Management: When autonomous agents take harmful actions, establishing accountability across developers, users, and emergent behavior requires new governance frameworks and legal structures.

FAQ: Essential AI Data Governance Questions

What is AI Data Governance and why do organizations need it?

AI Data Governance is the systematic management of data throughout the artificial intelligence lifecycle to ensure trustworthy, reliable, and ethical AI systems. Organizations need it because AI systems can amplify biases, create novel security vulnerabilities, and face increasing regulatory requirements that traditional data management doesn’t address.

How does AI Data Governance differ from traditional data governance?

AI governance must address unique challenges including probabilistic model behavior, bias amplification from training data, adversarial attacks designed to manipulate models, and privacy risks from models that memorize training data. Traditional governance focuses on data quality and access control, while AI governance adds fairness, explainability, and robustness requirements.

What are the key regulatory frameworks organizations must consider?

The three primary frameworks are: 1) NIST AI Risk Management Framework for operational risk management, 2) ISO/IEC 42001 for certifiable management systems, and 3) EU AI Act for legal compliance requirements. Organizations should also consider sector-specific regulations and privacy laws like GDPR.

How can organizations implement AI Data Governance practically?

Start with a 90-day phased approach: assess current AI systems and risks (days 1-30), develop policies and critical controls (days 31-60), then operationalize monitoring and training (days 61-90). Focus initially on highest-risk systems and integrate governance into existing MLOps pipelines.

What are the most critical controls organizations should implement first?

Prioritize: 1) Data provenance tracking using Datasheets for Datasets, 2) Bias testing and fairness metrics for high-risk systems, 3) Model Cards for production deployments, 4) Automated monitoring for data drift and performance degradation, and 5) Secure data retention and deletion procedures.

Conclusion: Building Trustworthy AI Through Data Governance

AI data governance isn’t a compliance checkbox or technical afterthought. It’s the foundation that determines whether AI systems amplify human potential or perpetuate harmful biases and vulnerabilities.

The convergence of the NIST AI Risk Management Framework, ISO/IEC 42001, and EU AI Act creates an unprecedented opportunity for organizations to build governance frameworks that are both principled and practical. The synthesized 9-stage lifecycle model provides a structured approach for understanding comprehensive governance requirements, while documented controls offer specific implementation guidance drawn from authoritative sources.

Organizations that invest in comprehensive AI data governance gain competitive advantages through faster deployment cycles, reduced legal exposure, and stronger stakeholder trust. Those that delay face mounting risks from regulatory enforcement, discrimination lawsuits, and security breaches as enforcement patterns mature.

The path forward requires executive commitment, cross-functional collaboration, and systematic implementation of controls across the entire AI lifecycle. Start with your highest-risk systems, leverage existing compliance infrastructure, and build governance into development workflows rather than treating it as external oversight.

Next Steps:

- Conduct an AI system inventory and risk assessment

- Implement Datasheets for Datasets documentation

- Develop Model Cards for production systems

- Establish NIST AI RMF compliance procedures

- Create EU AI Act readiness programs

The future of AI depends on organizations that prioritize governance from the ground up. Make data governance your competitive advantage, not your compliance burden.

Resources and References

All information in this guide is derived from verifiable sources and official documentation. Key references include:

- NIST AI Risk Management Framework

- ISO/IEC 42001 AI Management Systems Standard

- EU AI Act Article 10: Data and Data Governance

- Datasheets for Datasets – Microsoft Research

- MIT Sloan Data Provenance Initiative

- NIST Report on AI Bias Sources

- AWS ISO/IEC 42001 Implementation

- OWASP Top 10 for LLM Applications

- Research on AI Model Bias Origins and Impact

- Data Provenance Initiative – MIT audit of 1,800+ AI datasets

- OECD AI Governance Framework – OECD June 2024 report

- ISO/IEC 42001:2023 – Information technology — Artificial intelligence — Management system (requires licensing)

- GDPR (EU) 2016/679 – General Data Protection Regulation

For comprehensive AI Data Governance implementation support, contact our expert advisory team.