Pre-Deployment AI Safety & Compliance Gate

A structured, multi-framework Go/No-Go decision workbook designed to support organizations in evaluating AI system readiness before production deployment, with 49 assessment items mapped to 12 regulatory and standards sources.

Deploying an AI system into production without a structured safety and compliance review is a risk most organizations can’t afford to take. This workbook provides a repeatable, formula-driven assessment framework that walks teams through 49 control items organized across 7 gates. It covers risk classification, security validation, human oversight, output reliability, data governance, monitoring, and documentation requirements. The template requires customization to your organization’s specific AI system, risk profile, and regulatory scope. It’s designed to replace ad-hoc review processes with a consistent, auditable evaluation structure, which can save significant time compared to building a pre-deployment gate from scratch.

Key Benefits

- ✅ Provides a structured 49-item assessment across 7 gates covering security, safety, privacy, reliability, transparency, human oversight, and compliance

- ✅ Includes a formula-driven scoring engine with weighted compliance calculations and automatic Go/No-Go recommendations

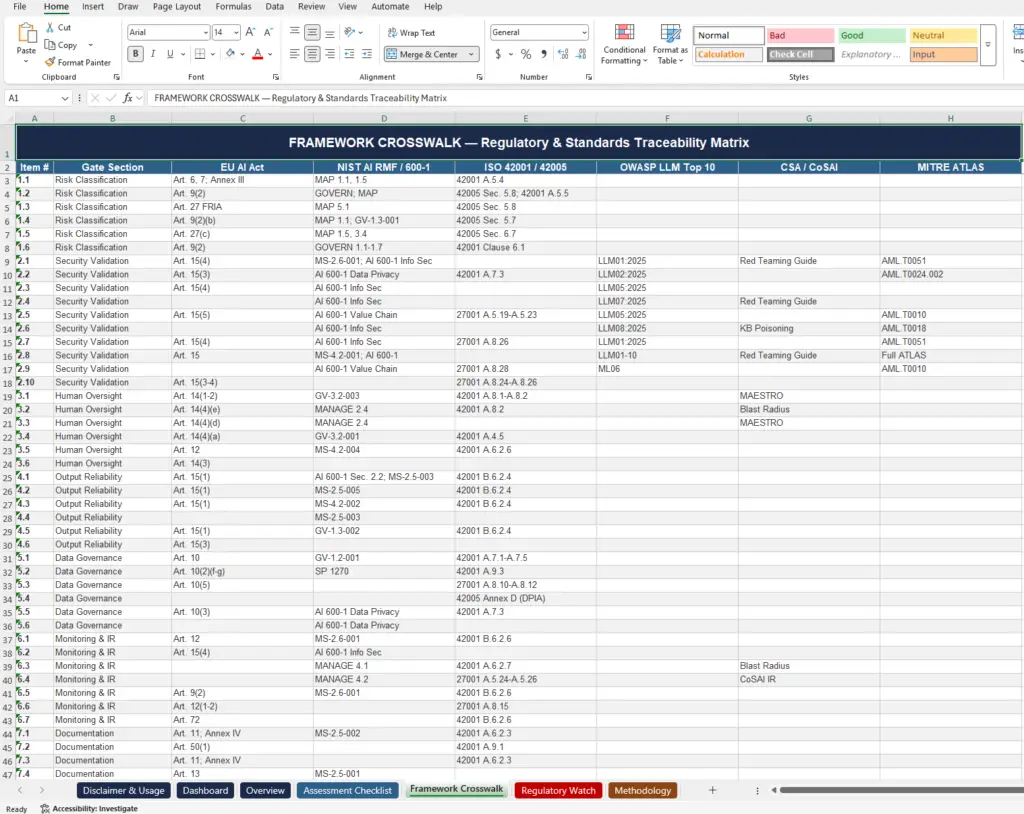

- ✅ Contains a Framework Crosswalk tab mapping each control item to specific articles, clauses, and sections across 7 regulatory and standards bodies

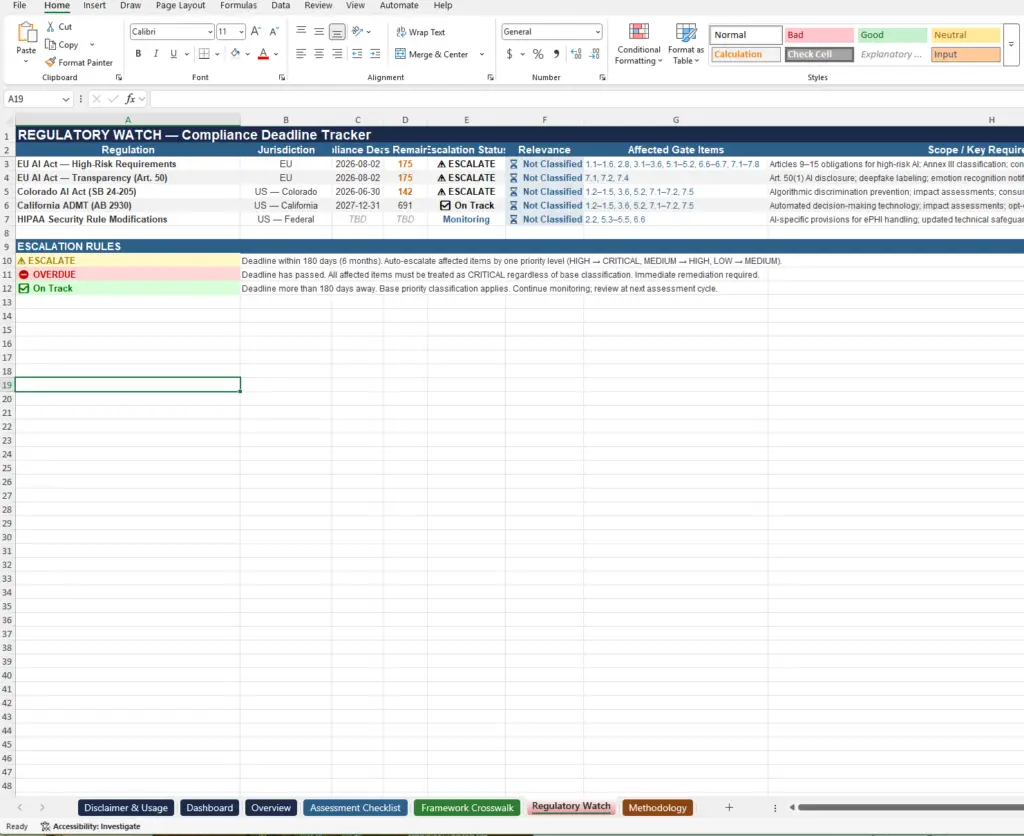

- ✅ Features a Regulatory Watch tab with countdown timers and auto-escalation status for 5 tracked regulatory deadlines (EU AI Act high-risk requirements, EU AI Act Article 50 transparency, Colorado AI Act, California ADMT, and HIPAA Security Rule modifications)

- ✅ Supports dynamic applicability where 19 of 49 items adjust based on risk classification inputs (EU market deployment, personal data processing, autonomy level, RAG usage, etc.)

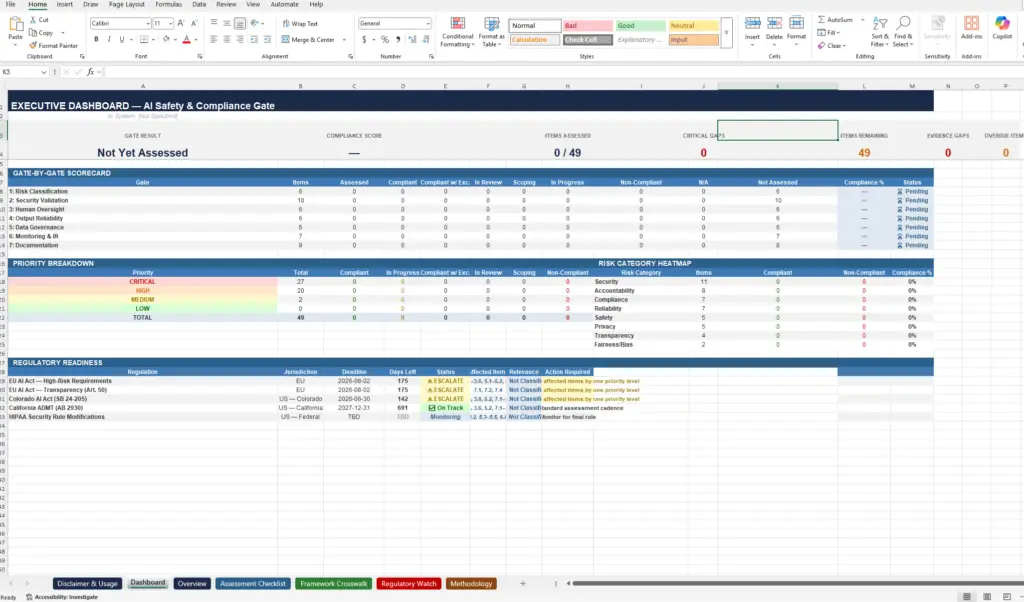

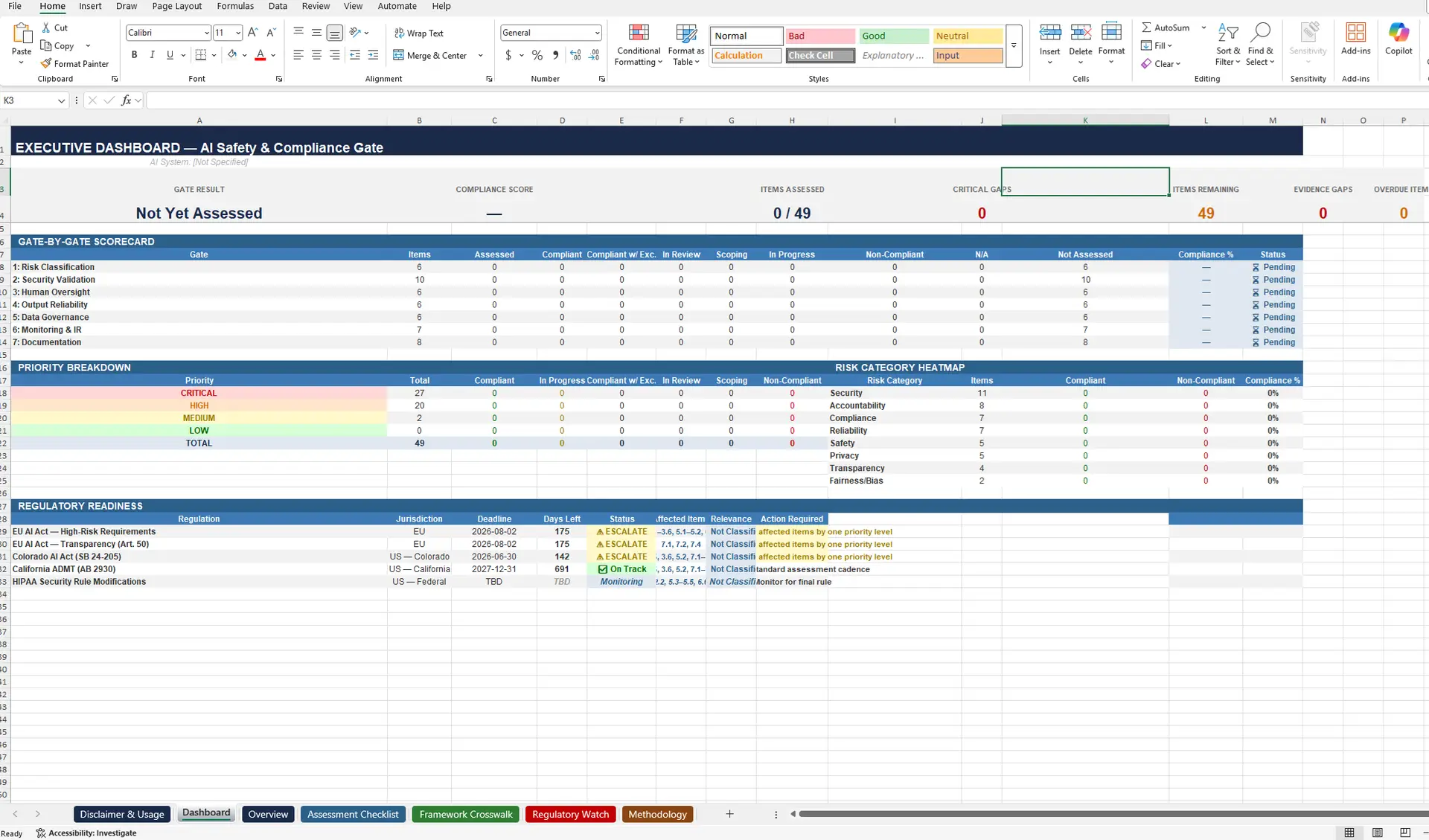

- ✅ Includes an executive Dashboard tab with gate-by-gate scorecard, priority breakdown, risk category heatmap, and regulatory readiness summary

- ✅ Provides 7 defined status levels (Compliant through Non-Compliant) with documented scoring weights for consistent assessment

Who Uses This?

This workbook is designed for:

- AI Governance Teams responsible for pre-deployment risk review

- Security Practitioners conducting adversarial testing validation (prompt injection, supply chain, red teaming)

- Compliance Officers tracking regulatory deadlines and framework alignment

- Engineering Leads managing technical implementation against control requirements

- CISOs and Executives reviewing Go/No-Go decisions at the Dashboard level

- Auditors requiring traceability from control items to specific regulatory articles and standard clauses

What’s Included (Preview)

The workbook contains 7 tabs:

- Disclaimer & Usage — Important disclaimers, 8-step usage guide, status definitions quick reference, and key concepts overview

- Dashboard — Auto-calculated executive summary with gate-by-gate scorecard, priority breakdown (CRITICAL/HIGH/MEDIUM/LOW), risk category heatmap across 8 categories, and regulatory readiness with escalation status

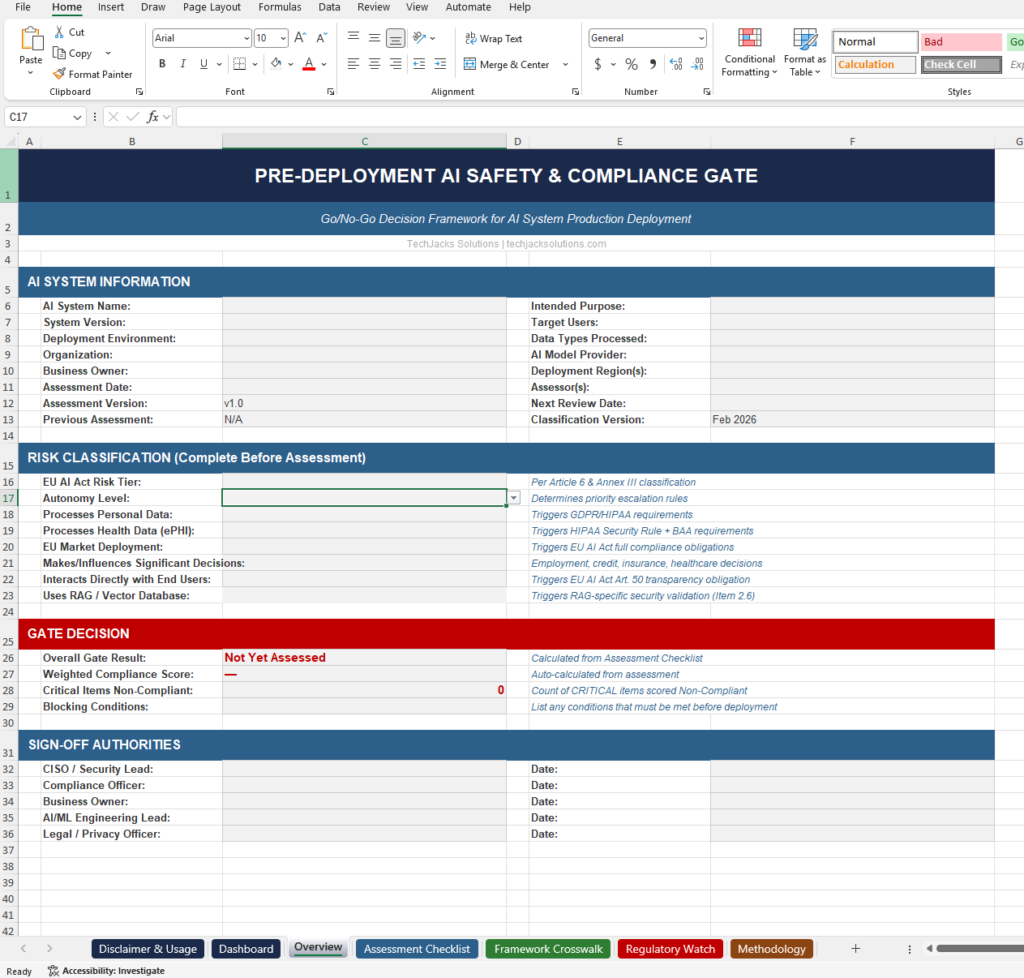

- Overview — AI system information intake (system name, version, deployment environment, model provider, etc.), risk classification fields that drive dynamic behavior across the workbook, gate decision summary, and sign-off authority fields for 5 roles

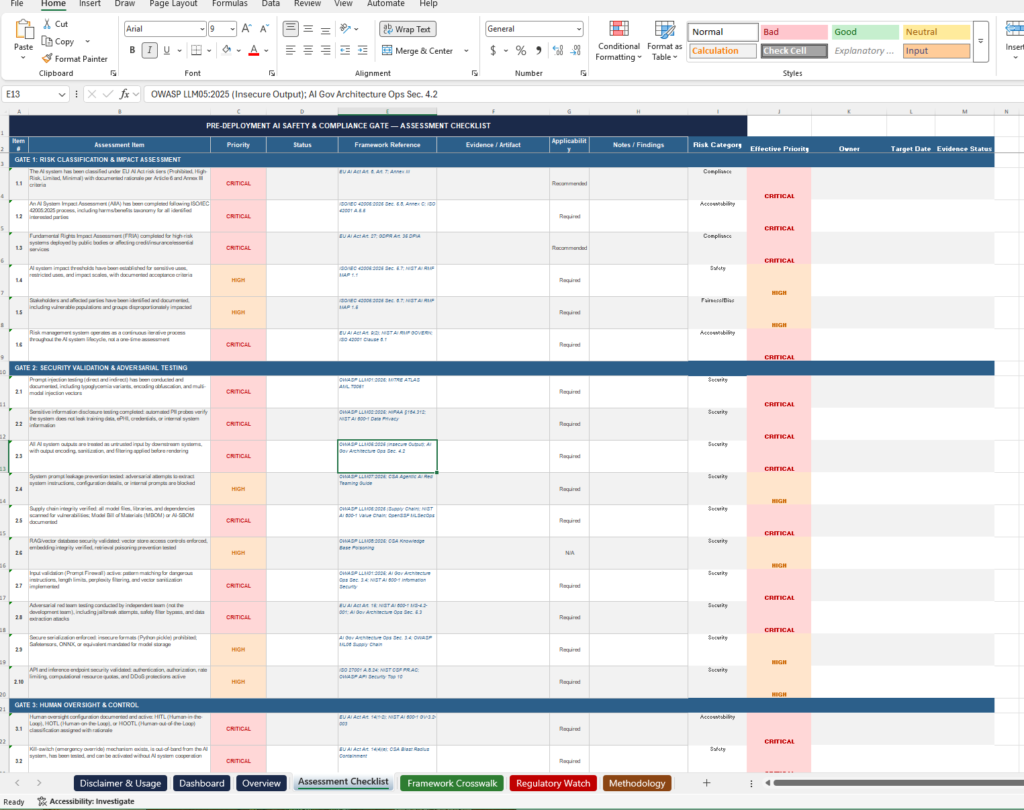

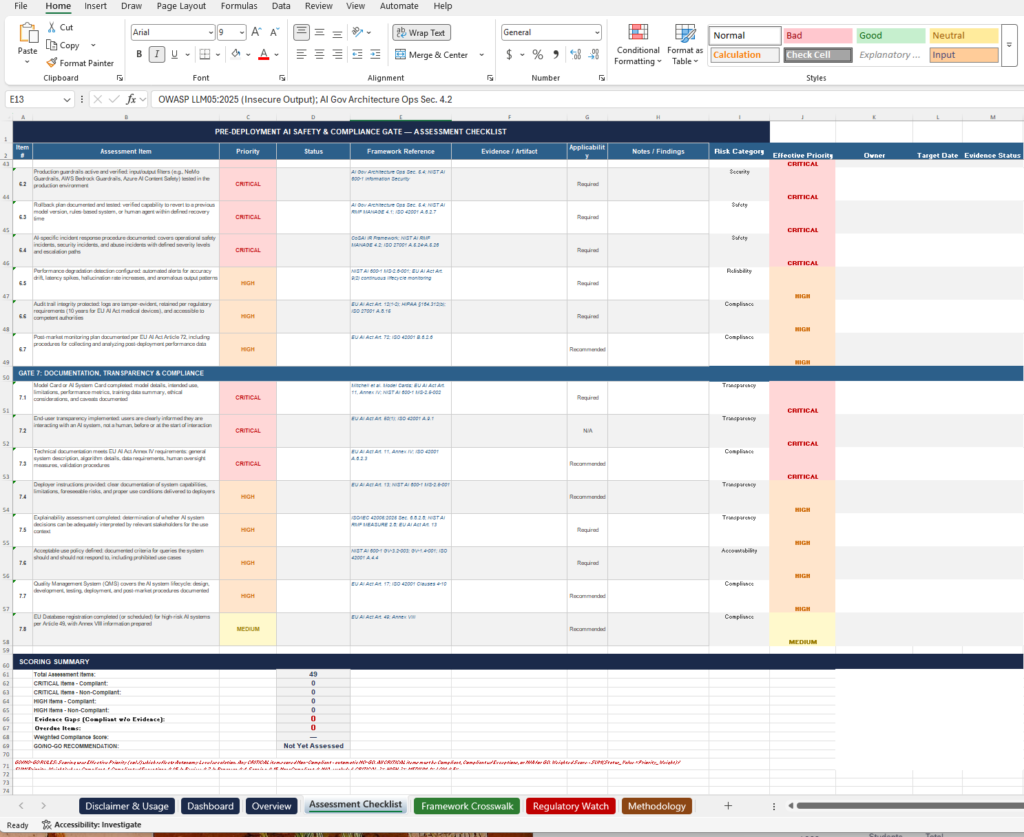

- Assessment Checklist — 49 items across 7 gates with columns for Status, Priority, Framework Reference, Evidence/Artifact, Applicability, Notes/Findings, Risk Category, Effective Priority, Owner, Target Date, and Evidence Status. Includes a scoring summary with weighted compliance calculation and Go/No-Go rules

- Framework Crosswalk — Traceability matrix mapping all 49 items to EU AI Act articles, NIST AI RMF/600-1 functions, ISO 42001/42005 clauses, OWASP LLM Top 10, CSA/CoSAI references, and MITRE ATLAS techniques

- Regulatory Watch — Deadline tracker for 5 regulations with countdown timers, auto-escalation rules (ESCALATE at 180 days, OVERDUE when passed), dynamic relevance based on risk classification, and affected item mapping

- Methodology — Priority classification criteria for all 4 levels, autonomy-level escalation modifiers, status definitions with scoring weights, weighted scoring formula, Go/No-Go decision rules, and complete list of 12 authoritative sources
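The Regulatory Watch escalation rule described above (ESCALATE at 180 days, OVERDUE when passed) can be sketched in a few lines of Python. This is a minimal illustration, not the workbook's actual formula: the function name `watch_status` and the `TBD` and `ON TRACK` labels are assumptions introduced here.

```python
from datetime import date
from typing import Optional

ESCALATE_WINDOW_DAYS = 180  # escalation threshold stated in the workbook description


def watch_status(deadline: Optional[date], today: date) -> str:
    """Return a Regulatory Watch style status for a single deadline."""
    if deadline is None:
        return "TBD"  # assumed label for deadlines that are not yet set
    days_left = (deadline - today).days
    if days_left < 0:
        return "OVERDUE"  # the deadline has passed
    if days_left <= ESCALATE_WINDOW_DAYS:
        return "ESCALATE"  # within 180 days of the deadline
    return "ON TRACK"  # assumed label for deadlines further out


# Example: the EU AI Act high-risk date, checked from two different days
print(watch_status(date(2026, 8, 2), date(2026, 1, 1)))
print(watch_status(date(2026, 8, 2), date(2026, 3, 1)))
```

A deadline that falls on the check date itself is treated as ESCALATE rather than OVERDUE in this sketch, since it has not yet passed.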

Why This Matters

Organizations deploying AI systems face a growing web of regulatory requirements and standards with real enforcement deadlines. The EU AI Act’s high-risk obligations take effect August 2, 2026. The Colorado AI Act’s provisions around algorithmic discrimination prevention arrive June 30, 2026. California’s ADMT requirements follow. These aren’t theoretical future concerns.

Building a pre-deployment review process from scratch means interpreting multiple overlapping frameworks, determining which controls apply to your specific system, and creating a scoring methodology that produces defensible Go/No-Go decisions. That’s a significant investment of practitioner time, particularly when the review needs to trace back to specific articles and clauses for audit purposes.

This workbook provides a starting framework for that process. It synthesizes requirements from 12 authoritative sources into a single assessment structure. The dynamic applicability feature adjusts which items are required, recommended, or not applicable based on the risk classification inputs you provide on the Overview tab. That means a system that doesn’t process personal data, isn’t deployed in the EU market, and doesn’t use RAG will have a different effective assessment scope than one that does. The workbook reflects that rather than forcing a one-size-fits-all checklist.
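To make the idea of dynamic applicability concrete, here is a simplified Python sketch. The item names and the item-to-field mapping are hypothetical placeholders, not the workbook's real rules, and the sketch only distinguishes "Required" from "N/A" (the workbook also supports "Recommended").

```python
from dataclasses import dataclass


@dataclass
class RiskProfile:
    """Three of the risk classification inputs, reduced to yes/no flags."""
    eu_market: bool
    personal_data: bool
    uses_rag: bool


# Hypothetical mapping from an item to the flag that switches it on.
# The workbook's actual item-level rules are not reproduced here.
ITEM_CONDITIONS = {
    "EU-specific item": "eu_market",
    "Privacy item": "personal_data",
    "RAG item": "uses_rag",
}


def applicability(item: str, profile: RiskProfile) -> str:
    """Return 'Required' or 'N/A' for one item under the given profile."""
    condition = ITEM_CONDITIONS.get(item)
    if condition is None:
        return "Required"  # items with no condition always apply
    return "Required" if getattr(profile, condition) else "N/A"


profile = RiskProfile(eu_market=False, personal_data=True, uses_rag=False)
for name in ["EU-specific item", "Privacy item", "RAG item", "Baseline item"]:
    print(name, "->", applicability(name, profile))
```

With this profile, only the privacy and baseline items remain in scope, which mirrors how the effective assessment scope shrinks for a system outside the EU market that does not use RAG.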

Framework Alignment

This workbook references requirements and guidance from the following frameworks, as documented in the Methodology tab and mapped in the Framework Crosswalk:

- EU AI Act (Regulation 2024/1689) — Articles 6, 7, 9–15, 17, 27, 49, 50; Annexes III, IV, VIII

- NIST AI Risk Management Framework (AI 100-1) — MAP, GOVERN, MANAGE, MEASURE functions

- NIST Generative AI Profile (AI 600-1) — Confabulation risk, information security, data privacy, value chain, and specific control identifiers (MS-2.5, MS-2.6, MS-4.2, GV-1.2, GV-1.3, GV-3.2 series)

- NIST SP 1270 — Bias identification and management guidance

- ISO/IEC 42001:2023 — AI Management System clauses and Annex A controls

- ISO/IEC 42005:2025 — AI System Impact Assessment process

- ISO/IEC 23894:2023 — AI Risk Management guidance

- OWASP Top 10 for LLM Applications 2025 (v2.0) — LLM01 through LLM08 vulnerability categories

- CSA Agentic AI Red Teaming Guide — Blast radius containment, knowledge base poisoning

- CoSAI — Incident response framework, secure-by-design agentic principles

- MITRE ATLAS — Adversarial threat techniques (AML.T0051, AML.T0024, AML.T0018, AML.T0010)

- OpenSSF MLSecOps Framework — ML supply chain security

Key Features

- 49 Assessment Items Across 7 Gates: Risk Classification & Impact Assessment (6 items), Security Validation & Adversarial Testing (10 items), Human Oversight & Control (6 items), Output Reliability & Confabulation Controls (6 items), Data Governance & Privacy (6 items), Monitoring, Logging & Incident Response (7 items), Documentation, Transparency & Compliance (8 items)

- Weighted Scoring Engine: Formula-driven calculation using priority weights (CRITICAL = 3×, HIGH = 2×, MEDIUM = 1×, LOW = 0.5×) multiplied by status scores (Compliant = 1.0 through Non-Compliant = 0.0) to produce a weighted compliance percentage

- Automatic Go/No-Go Logic: GO requires all CRITICAL items Compliant/Compliant w/ Exceptions/N/A AND weighted score ≥ 80%. CONDITIONAL GO requires no CRITICAL items Non-Compliant or Scoping AND score ≥ 60% with documented remediation. NO-GO triggers on any CRITICAL Non-Compliant OR score below 60%

- Dynamic Applicability: 19 of 49 items adjust based on 8 risk classification fields (EU AI Act Risk Tier, Autonomy Level, Personal Data, ePHI, EU Market Deployment, Significant Decisions, End User Interaction, RAG Usage)

- Autonomy-Level Escalation: When set to Fully Autonomous, priorities auto-escalate (HIGH → CRITICAL, MEDIUM → HIGH, LOW → MEDIUM). Semi-Autonomous uses base priorities. Human-Supervised allows review for potential downgrade

- Regulatory Deadline Tracking: 5 active regulations monitored with countdown timers and automatic ESCALATE status when within 180 days of deadline

- 8 Risk Categories: Security (11 items), Accountability (8 items), Compliance (7 items), Reliability (7 items), Safety (5 items), Privacy (5 items), Transparency (4 items), Fairness/Bias (2 items)

- Evidence Tracking: Evidence Status column for each item with gap detection for items marked Compliant but missing evidence documentation
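The autonomy-level escalation and Go/No-Go rules listed above can be expressed as a short Python sketch. The function names are introduced here for illustration, and one behavior is an assumption: the listed rules do not say what happens when a CRITICAL item is still in Scoping and the score is 60% or higher, so this sketch treats that case as NO-GO.

```python
# One-level escalation applied when the system is Fully Autonomous
ESCALATION = {"HIGH": "CRITICAL", "MEDIUM": "HIGH", "LOW": "MEDIUM"}

GO_STATUSES = {"Compliant", "Compliant w/ Exceptions", "N/A"}
BLOCKING_STATUSES = {"Non-Compliant", "Scoping"}


def effective_priority(base: str, autonomy: str) -> str:
    """Escalate for Fully Autonomous systems; otherwise keep the base priority."""
    if autonomy == "Fully Autonomous":
        return ESCALATION.get(base, base)  # CRITICAL stays CRITICAL
    # Semi-Autonomous uses base priorities; any Human-Supervised downgrade
    # is a manual review decision, so nothing changes automatically here.
    return base


def gate_decision(score: float, critical_statuses: list[str]) -> str:
    """score is the weighted compliance value as a fraction between 0 and 1."""
    if score < 0.60 or "Non-Compliant" in critical_statuses:
        return "NO-GO"
    if score >= 0.80 and all(s in GO_STATUSES for s in critical_statuses):
        return "GO"
    if not any(s in BLOCKING_STATUSES for s in critical_statuses):
        return "CONDITIONAL GO"  # still requires documented remediation
    return "NO-GO"  # assumption: a CRITICAL item in Scoping blocks approval


print(effective_priority("HIGH", "Fully Autonomous"))
print(gate_decision(0.85, ["Compliant", "N/A"]))
print(gate_decision(0.70, ["Compliant", "Compliant w/ Exceptions"]))
```

Checking the NO-GO conditions first keeps the hard stops explicit, so a high score can never mask a Non-Compliant CRITICAL item.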

Comparison Table: Ad-Hoc Review vs. Structured Pre-Deployment Gate

| Aspect | Ad-Hoc AI Review | Pre-Deployment AI Safety Gate Template |
|---|---|---|
| Assessment Structure | Varies by reviewer; no consistent format | 49 items across 7 defined gates with standardized columns |
| Regulatory Traceability | Manual cross-referencing of regulations | Framework Crosswalk maps each item to specific articles and clauses across 7 sources |
| Scoring Methodology | Subjective pass/fail or narrative-based | Weighted formula with defined status values and priority multipliers |
| Go/No-Go Decision | Based on individual judgment | Formula-driven with documented decision rules and thresholds |
| Regulatory Deadline Awareness | Requires separate tracking | Built-in Regulatory Watch with countdown timers and auto-escalation |
| Dynamic Scoping | Manual determination of applicable controls | 19 items auto-adjust applicability based on risk classification inputs |
| Audit Trail | Depends on reviewer documentation habits | Structured fields for evidence, owner, target date, notes, and sign-off authorities |
| Executive Reporting | Custom summary required each time | Auto-calculated Dashboard with gate scorecard, heatmap, and regulatory readiness |

FAQ Section

Q: What file format is this template delivered in? A: The template is delivered as a Microsoft Excel (.xlsx) workbook. It is optimized for Microsoft Excel to ensure proper formatting and collaborative editing capabilities.

Q: Do I need to complete all 49 assessment items? A: Not necessarily. The workbook includes dynamic applicability driven by the risk classification fields on the Overview tab. Depending on your system’s characteristics (EU market deployment, personal data processing, autonomy level, RAG usage, etc.), some items may be set to “N/A” or “Recommended” rather than “Required.” You should complete the Overview tab’s risk classification section first, as it drives applicability across the Assessment Checklist.

Q: Does completing this assessment guarantee regulatory compliance? A: No. As stated in the workbook’s disclaimer, this is a decision-support tool, not legal, regulatory, or compliance advice. It does not constitute a certification, attestation, or guarantee of regulatory compliance. Priority classifications are derived from authoritative sources but represent a composite interpretation. The workbook should be used alongside qualified legal counsel and compliance professionals.

Q: How does the Go/No-Go scoring work? A: The scoring engine uses a weighted formula: Weighted Score = SUM(Status_Value × Priority_Weight) / SUM(Priority_Weight). Status values range from Compliant (1.0) to Non-Compliant (0.0). Priority weights are CRITICAL = 3×, HIGH = 2×, MEDIUM = 1×, LOW = 0.5×. GO requires every CRITICAL item to be Compliant, Compliant w/ Exceptions, or N/A AND a weighted score of 80% or above. Any single CRITICAL item scored Non-Compliant, or a weighted score below 60%, triggers an automatic NO-GO.
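As a worked illustration of the weighted formula, the following Python sketch computes the score for four hypothetical items. It uses only the two endpoint status values given above (1.0 and 0.0), since the intermediate values are defined in the workbook itself, and it assumes that N/A items are left out of the input entirely.

```python
PRIORITY_WEIGHT = {"CRITICAL": 3.0, "HIGH": 2.0, "MEDIUM": 1.0, "LOW": 0.5}


def weighted_score(items):
    """items: list of (status_value, priority) pairs for applicable items."""
    total_weight = sum(PRIORITY_WEIGHT[priority] for _, priority in items)
    if total_weight == 0:
        return 0.0  # nothing applicable to score
    earned = sum(value * PRIORITY_WEIGHT[priority] for value, priority in items)
    return earned / total_weight


# Hypothetical assessment: three Compliant items and one Non-Compliant MEDIUM item
items = [(1.0, "CRITICAL"), (1.0, "HIGH"), (0.0, "MEDIUM"), (1.0, "LOW")]
print(f"{weighted_score(items):.1%}")  # (3 + 2 + 0 + 0.5) / 6.5 -> 84.6%
```

Because the failed item is MEDIUM priority, it costs only 1 of the 6.5 available weight points, and the score stays above the 80% GO threshold.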

Q: What customization is required? A: The workbook requires customization for your specific AI system. You’ll need to complete the AI System Information section, set all 8 risk classification fields, assess each applicable item’s status, document evidence references, assign owners and target dates for non-compliant items, and tailor the Regulatory Watch deadlines as regulations are finalized or amended.

Q: Which regulatory deadlines are tracked in the workbook? A: The Regulatory Watch tab tracks 5 regulations as of the v1.0 classification (February 2026): EU AI Act High-Risk Requirements (August 2, 2026), EU AI Act Transparency Art. 50 (August 2, 2026), Colorado AI Act SB 24-205 (June 30, 2026), California ADMT AB 2930 (December 31, 2027), and HIPAA Security Rule Modifications (TBD pending HHS action). These deadlines should be verified and updated as regulations evolve.

Ideal For

- AI governance and risk management teams conducting pre-deployment reviews

- Organizations deploying AI systems in regulated industries (healthcare, finance, insurance, employment)

- Companies with EU market exposure preparing for EU AI Act high-risk obligations

- Security teams validating LLM-specific controls (prompt injection, supply chain, adversarial testing)

- Compliance officers needing audit-traceable documentation of AI safety assessments

- Engineering teams building internal AI deployment approval processes

- Organizations using the workbook as a foundation for ISO/IEC 42001 management system implementation

Pricing Options

Single Template: Contact for pricing based on organizational requirements and customization needs.

Bundle Option: May be combined with additional AI governance templates depending on organizational compliance scope.

Enterprise Option: Available as part of comprehensive governance documentation suites.

⚖️ Differentiator

This workbook provides a practitioner-developed assessment structure that maps 49 specific control items to 12 authoritative sources through a dedicated Framework Crosswalk tab, rather than presenting a generic checklist with vague regulatory references. The dynamic applicability feature, where 19 items adjust based on 8 risk classification inputs, means the effective assessment scope reflects your actual deployment context rather than forcing every organization through an identical list. A formula-driven scoring engine with defined Go/No-Go thresholds, combined with a regulatory deadline tracker that auto-escalates as enforcement dates approach, gives teams a decision-support structure for repeatable, documentable pre-deployment reviews. The workbook requires customization to your organization’s specific systems and regulatory obligations.

Disclaimer: This template is provided as a decision-support and internal assessment tool. It does not constitute legal advice, regulatory compliance certification, formal audit, or attestation of any kind. Priority classifications are derived from authoritative sources and represent a composite interpretation, not direct regulatory prescription. Go/No-Go recommendations are advisory — deployment decisions remain the responsibility of designated organizational authorities. Organizations should consult qualified legal counsel and compliance professionals for definitive regulatory guidance. Regulatory references and deadlines should be independently verified, as the AI governance landscape evolves rapidly. Framework mappings are provided as guidance and do not represent exhaustive coverage of any single standard.