Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: Feb 3rd, 2026

What Is The NIST AI RMF?

The Framework in 60 Seconds: The NIST AI Risk Management Framework (AI RMF 1.0) is a voluntary, outcome-based guide for managing AI risks across any sector or organization size. Published in January 2023 by the National Institute of Standards and Technology, it organizes AI risk management into four functions (GOVERN, MAP, MEASURE, MANAGE) spanning 19 categories and 72 subcategories. It’s becoming the de facto U.S. standard of care for AI governance. It’s also incomplete. NIST knows this and says so directly in the document. This article covers what the framework gets right, where it falls short, and what that means for organizations trying to use it.

Who This Article Is For

- New to AI governance? Start here for a plain-language overview of the framework’s purpose, structure, and honest limitations.

- Compliance or security professional? You’ll find framework overlap analysis, demonstration-of-adoption methods, and specific gap areas to plan around.

- Executive? Focus on the Business Impact section and the strengths/gaps comparison. These inform budget and strategy decisions.

This article provides a candid assessment of the NIST AI RMF as a whole. For function-specific guides, see the GOVERN, MAP, MEASURE, and MANAGE articles.

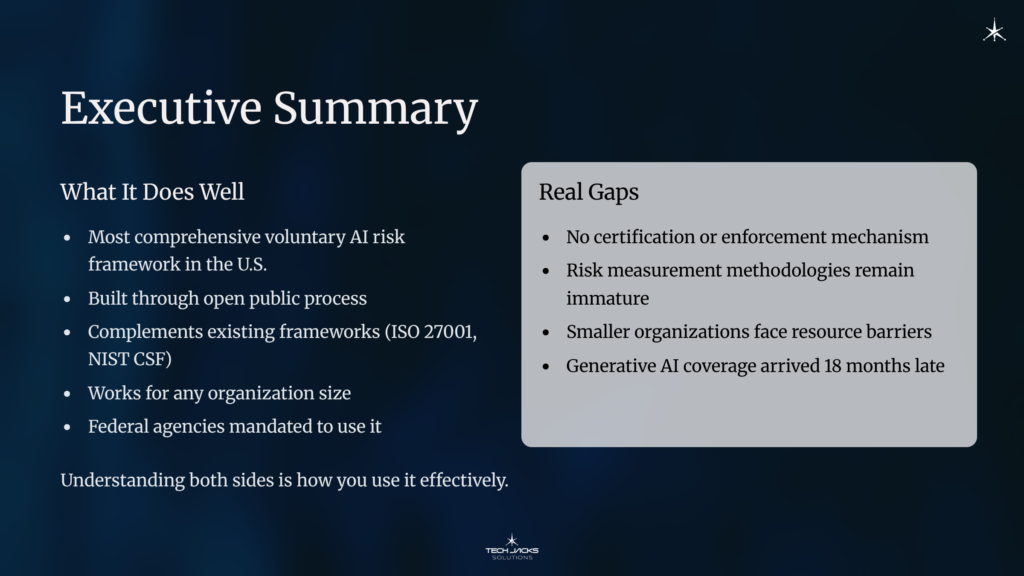

Executive Summary

The NIST AI RMF is the most comprehensive voluntary AI risk management framework available in the United States. It was built through an unusually open public process, it complements existing frameworks like ISO 27001 and NIST CSF rather than replacing them, and it’s structured to work for organizations of any size. Federal agencies are mandated to use it. Some state AI laws reference recognized risk management frameworks when evaluating organizational due diligence.

It also has real gaps. There’s no certification or enforcement mechanism. Risk measurement methodologies for AI systems remain immature (NIST says so itself). Smaller organizations face disproportionate resource barriers. And generative AI coverage arrived 18 months after the base framework, leaving early adopters without NIST-specific guidance during a period of rapid GAI deployment.

None of that makes it a bad framework. It makes it an honest one. Understanding both sides is how you use it effectively.

Who Should Adopt the NIST AI RMF

NIST designed the framework to be sector-agnostic and use-case agnostic (Appendix D, Attribute 6, NIST AI 100-1). In theory, any organization that designs, develops, deploys, or uses AI systems is the intended audience. In practice, certain sectors and use cases face significantly more pressure to adopt.

The simple test: If your organization builds, buys, or operates AI systems that influence decisions about people, the AI RMF applies to you. If those decisions affect access to housing, credit, employment, healthcare, insurance, education, or government services, adoption isn’t optional in any practical sense. Courts and regulators are already using the framework as a benchmark for reasonable care.

Sectors facing the most adoption pressure:

- Healthcare: AI-driven claims processing, diagnostic support, and treatment recommendations directly affect patient outcomes. The UnitedHealth nH Predict case (90% error rate on appeals, federal litigation proceeding) illustrates the liability exposure. CMS and FDA oversight adds regulatory incentive.

- Financial services: Credit scoring, lending decisions, fraud detection, and insurance underwriting all involve algorithmic decisions about individuals. Fair lending laws, CFPB oversight, and SEC guidance on AI risk disclosure create a compliance floor.

- Hiring and employment: Resume screening, candidate scoring, and workforce analytics face growing scrutiny. Mobley v. Workday (an AI hiring discrimination suit, conditionally certified as a collective action in May 2025) and the 2024 University of Washington study (Wilson & Caliskan), which found that AI resume screening favored white-associated names 85% of the time, demonstrate the legal trajectory.

- Housing: Tenant screening algorithms that score rental applicants. SafeRent Solutions’ $2.275 million settlement for discriminatory impact on Black and Hispanic housing voucher recipients is the direct precedent.

- Government contracting: Federal agencies are mandated to use the AI RMF through executive orders. Organizations selling AI systems or services to federal agencies face contractual requirements to demonstrate alignment.

- Insurance: Risk scoring, claims automation, and underwriting algorithms that determine coverage or pricing for individuals.

Organizations using AI purely for internal operations (scheduling optimization, supply chain forecasting, document processing without individual-impact decisions) face lower immediate pressure but still benefit from structured risk management as their AI footprint grows.

The framework’s audience section (NIST AI 100-1, Section 2) defines “AI actors” broadly across the entire lifecycle: system operators, end users, domain experts, AI designers, impact assessors, product managers, procurement specialists, compliance experts, auditors, governance experts, impacted individuals, and communities. If your organization touches any part of that chain, the framework has something for you.

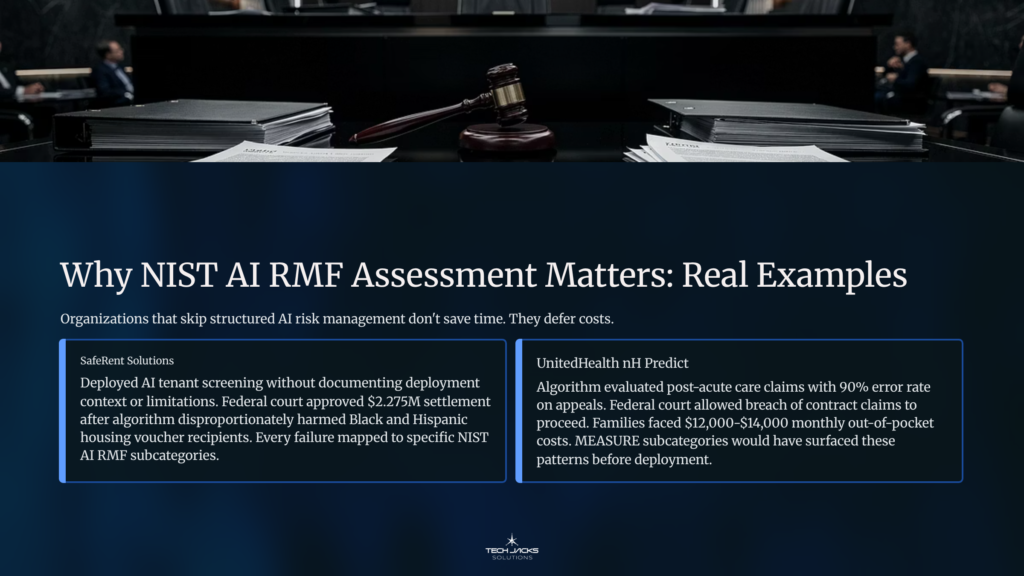

Why NIST AI RMF Assessment Matters: Real Examples

Organizations that skip structured AI risk management don’t save time. They defer costs.

SafeRent Solutions deployed an AI tenant screening algorithm without documenting deployment context, system limitations, or potential discriminatory impacts. In November 2024, a federal court approved a $2.275 million settlement in a suit alleging that the algorithm disproportionately harmed Black and Hispanic housing voucher recipients (Louis, et al. v. SafeRent Solutions, D. Mass.). The U.S. Department of Justice filed a statement of interest supporting the case. Every alleged failure maps to a specific NIST AI RMF subcategory that would have required documentation before deployment.

UnitedHealth’s nH Predict algorithm evaluated post-acute care claims for Medicare Advantage beneficiaries. The system had a 90% error rate on appeals (nine of ten denied claims were reversed when patients appealed). In February 2025, a federal court allowed breach of contract claims to proceed in Estate of Gene B. Lokken v. UnitedHealth Group (Case No. 0:23-cv-03514-JRT-SGE). In September 2025, the court denied UnitedHealth’s motion to amend the pretrial scheduling order, keeping the discovery process on track. Specific MEASURE subcategories (validity testing, safety evaluation, risk tracking) would have surfaced these patterns before families faced $12,000 to $14,000 in monthly out-of-pocket costs.

These aren’t edge cases. They’re what happens when organizations treat AI risk management as optional.

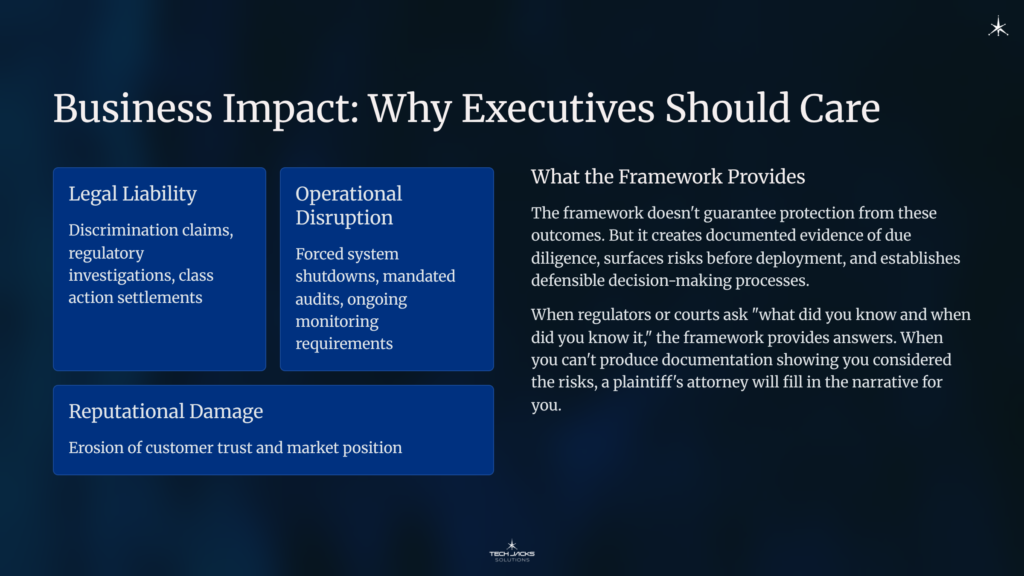

Business Impact: Why Executives Should Care

The AI RMF’s growing legal weight changes the calculus. Organizations deploying AI without a structured risk management approach face legal liability (discrimination claims, regulatory investigations, class action settlements), operational disruption (forced system shutdowns, mandated audits, ongoing monitoring requirements), and reputational damage that erodes customer trust.

The framework doesn’t guarantee protection from these outcomes. But it creates documented evidence of due diligence, surfaces risks before deployment, and establishes defensible decision-making processes. When regulators or courts ask “what did you know and when did you know it,” the framework provides answers. When you can’t produce documentation showing you considered the risks, a plaintiff’s attorney will fill in the narrative for you.

How the NIST AI RMF Was Created

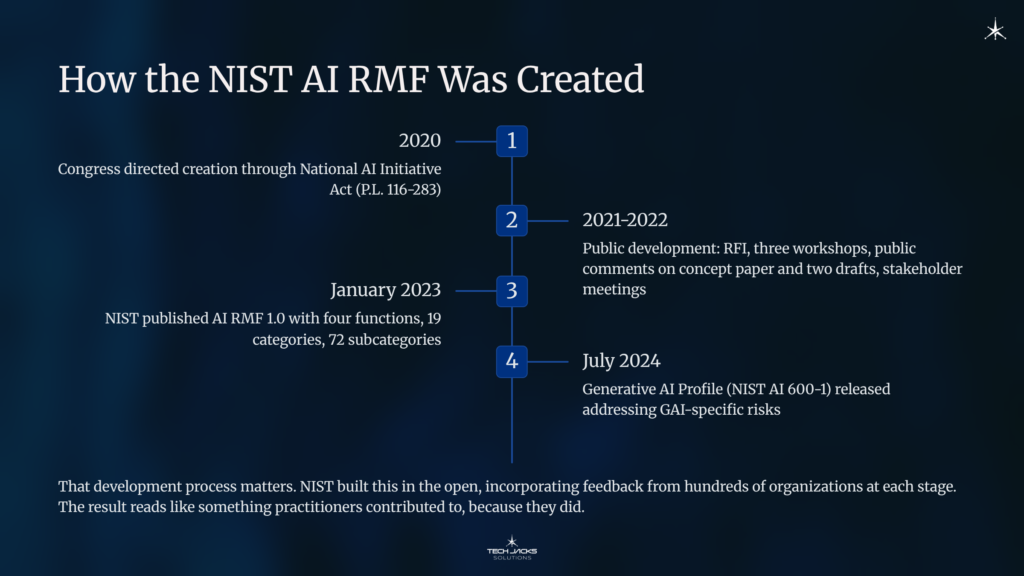

Congress directed the framework’s creation through the National Artificial Intelligence Initiative Act of 2020 (P.L. 116-283). NIST developed it through a public process that included a formal Request for Information, three widely attended workshops, public comments on a concept paper and two separate drafts, discussions at multiple public forums, and numerous small group meetings with stakeholders across industry, government, academia, and civil society (NIST AI 100-1, Executive Summary).

That development process matters. Most frameworks come from a standards body or a consulting firm and arrive fully formed. NIST built this one in the open, incorporating feedback from hundreds of organizations at each stage. The result reads like something practitioners contributed to, because they did.

NIST published version 1.0 in January 2023. The framework uses a two-number versioning system to track revisions, and NIST has confirmed a revision is now underway. On July 26, 2024, NIST released the Generative AI Profile (NIST AI 600-1) as a companion document addressing risks specific to generative AI systems, including confabulation, content provenance, information integrity, and environmental impacts.

What the Framework Is Designed to Do

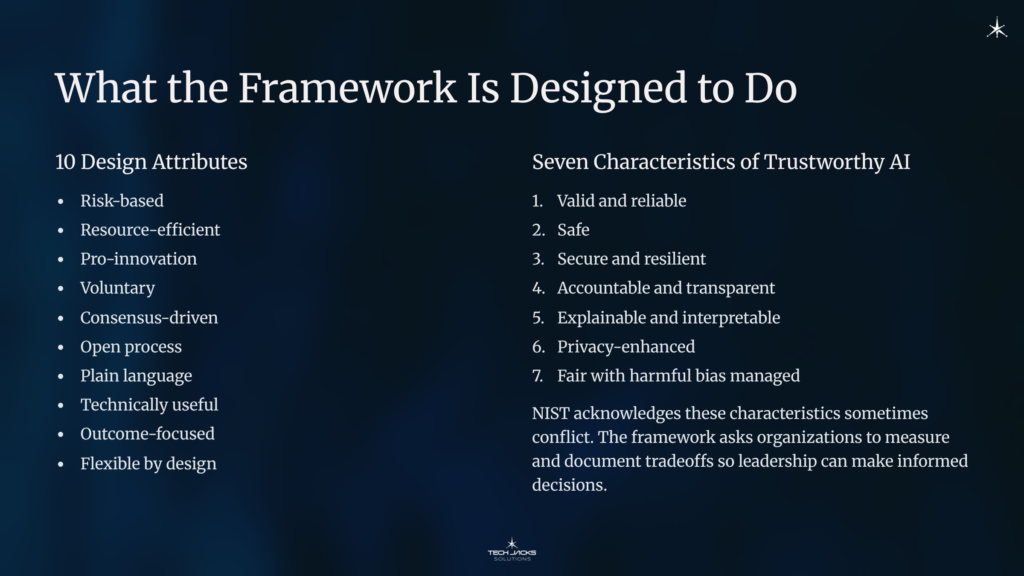

NIST laid out 10 design attributes when development began, and they remained consistent through publication (Appendix D, NIST AI 100-1). The framework is risk-based, resource-efficient, pro-innovation, and voluntary. It’s consensus-driven and developed through an open process. It uses plain language accessible to executives while remaining technically useful to practitioners.

Seven characteristics define trustworthy AI under the framework: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed. NIST acknowledges these characteristics sometimes conflict. Optimizing for explainability might reduce privacy protections. Privacy-enhancing techniques can degrade model accuracy. The framework doesn’t pretend these tradeoffs don’t exist. It asks organizations to measure and document them so leadership can make informed decisions.

The structural logic follows a pattern familiar to anyone who’s worked with NIST’s Cybersecurity Framework or ISO standards: functions, categories, subcategories. Four core functions contain 19 categories and 72 subcategories spanning the full AI lifecycle.

| Function | Focus | Categories | Subcategories |
|---|---|---|---|
| GOVERN | Policies, accountability, culture (cross-cutting) | 6 | 19 |
| MAP | Context setting, risk identification | 5 | 18 |
| MEASURE | Risk assessment, testing, monitoring | 4 | 22 |
| MANAGE | Risk treatment, incident response | 4 | 13 |
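As a quick sanity check on the structure, the per-function counts can be tallied in a few lines of Python. This is only an illustrative sketch: the `CORE` dictionary and the `totals` helper are names invented here, and the counts are transcribed from the function listings in NIST AI 100-1.

```python
# Category and subcategory counts per function, transcribed from NIST AI 100-1.
# CORE and totals() are illustrative names, not part of the framework.
CORE = {
    "GOVERN": {"categories": 6, "subcategories": 19},
    "MAP": {"categories": 5, "subcategories": 18},
    "MEASURE": {"categories": 4, "subcategories": 22},
    "MANAGE": {"categories": 4, "subcategories": 13},
}


def totals(core):
    """Sum categories and subcategories across all four functions."""
    categories = sum(fn["categories"] for fn in core.values())
    subcategories = sum(fn["subcategories"] for fn in core.values())
    return categories, subcategories


total_categories, total_subcategories = totals(CORE)
print(f"{total_categories} categories, {total_subcategories} subcategories")
```

The totals should come out to the 19 categories and 72 subcategories cited throughout this article.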

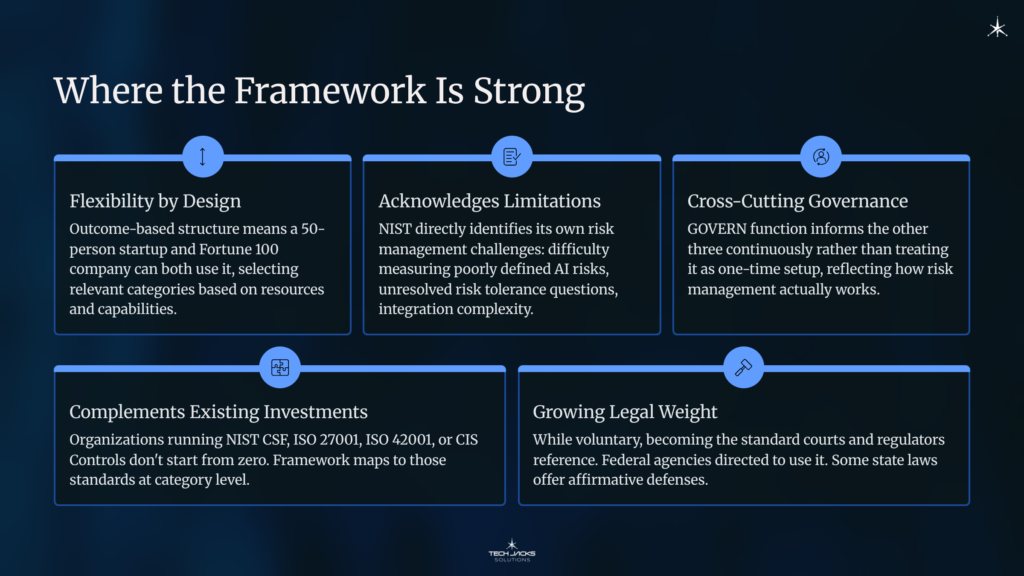

Where the Framework Is Strong

Flexibility by Design

The outcome-based structure means a 50-person startup and a Fortune 100 company can both use it, selecting the categories and subcategories relevant to their context. NIST explicitly states organizations may apply all 72 subcategories or choose among them based on resources and capabilities. This isn’t a checkbox exercise. It’s a catalog of outcomes you adapt to your situation.

It Acknowledges What It Can’t Do

NIST AI 100-1 (Section 1.2) identifies its own risk management challenges directly: difficulty measuring poorly defined AI risks, unresolved questions about risk tolerance, the complexity of prioritizing AI-specific risks alongside enterprise risks, and the challenge of integrating AI risk management across organizational silos. I’ve found that frameworks rarely call out their own limitations this honestly. It builds credibility with practitioners who already know the gaps exist.

Cross-Cutting Governance

Making GOVERN a function that informs the other three continuously (rather than treating it as a one-time setup phase) reflects how risk management actually works. Most organizations that fail at AI governance fail because they treated it as a project with a completion date rather than an ongoing discipline.

Complements Existing Investments

Organizations running NIST CSF, ISO 27001, ISO 42001, or CIS Controls v8 don’t start from zero. The AI RMF uses the same structural logic and maps to those frameworks at the category level. NIST provides crosswalks to related standards. It extends existing risk thinking to cover AI-specific concerns those frameworks weren’t designed for: harmful bias, generative AI risks, machine learning attacks, third-party AI supply chains, and socio-technical impacts on individuals and communities.

Growing Legal Weight

While voluntary, the AI RMF is becoming the standard courts and regulators reference. Federal agencies are directed to use it through congressional mandates and executive orders covering AI procurement and governance. Some state AI laws reference recognized risk management frameworks when evaluating organizational due diligence. The line between “voluntary guidance” and “expected practice” gets thinner every year.

Where the Framework Falls Short

The framework is honest about several of these itself. Others become apparent when you try to implement it.

No Enforcement Mechanism

The AI RMF is voluntary. There’s no certification, no audit standard, no compliance badge. Organizations can claim adoption without external verification. ISO 42001 exists partly to fill this gap, but it’s a separate standard with its own costs and timelines. For organizations that need to demonstrate compliance to regulators or customers, “we follow NIST AI RMF” requires supporting evidence that the framework itself doesn’t define. The person who accepts the risk is rarely the person who handles the incident when that evidence gap surfaces.

Risk Measurement Remains Immature

NIST acknowledges this directly in Section 1.2.1 of NIST AI 100-1: AI risks that aren’t well-defined are difficult to measure quantitatively or qualitatively. The inability to appropriately measure AI risks doesn’t mean the system is low-risk. It means you don’t know.

Third-party risk metrics may not align between the organization developing the AI system and the organization deploying it. Emergent risks are hard to track. The Generative AI Profile (NIST AI 600-1) goes further, noting that current pre-deployment testing processes for GAI applications may be inadequate, non-systematically applied, or mismatched to deployment contexts. Measurement gaps between laboratory conditions and real-world settings are a known, unresolved problem.

Risk Tolerance Left to the Organization

The framework explicitly doesn’t prescribe risk tolerance (Section 1.2.2, NIST AI 100-1). This is both a strength (flexibility) and a real weakness (ambiguity). NIST acknowledges there may be contexts where risk tolerance challenges remain unresolved to the point where the framework isn’t readily applicable. For organizations without mature risk programs, “define reasonable risk tolerance” is a significant ask with limited concrete guidance on how.

Small and Medium Organizations Face Disproportionate Barriers

NIST acknowledges in Section 2 that smaller organizations may face different challenges depending on their capabilities and resources. But 72 subcategories, cross-functional coordination requirements, and extensive documentation expectations assume a level of organizational capacity many smaller teams don’t have. The framework’s flexibility helps here (you can adopt what applies), but the gap between “you may select” and “here’s how to prioritize with limited resources” is real.

Generative AI Coverage Came Later

The base framework (January 2023) didn’t comprehensively address generative AI risks. NIST AI 600-1 (July 2024) filled that gap 18 months later. During that window, organizations deploying generative AI systems worked without NIST-specific guidance on confabulation, content provenance, information integrity, harmful bias in foundation models, and environmental impacts of large-scale model training.

No Prescriptive Implementation Path

The NIST AI RMF Playbook provides suggested actions, but organizations determine sequencing, staffing, tooling, and integration themselves. For teams new to structured AI governance, the gap between “here are the outcomes” and “here’s how to get there” can be wide. Implementation quality varies significantly as a result.

Ecosystem-Level Risks Are Hard to Capture

NIST AI 600-1 notes that current measurement gaps make it difficult to precisely estimate ecosystem-level or longitudinal risks and related political, social, and economic impacts. The framework addresses individual system risk well. Cross-system, compounding, and societal-scale risks remain harder to manage within its structure.

How to Demonstrate Adoption

The AI RMF has no certification program. So how do you prove you’re using it? Since it’s a voluntary guide, “showing adoption” means producing documented artifacts and formal process outputs that demonstrate your organization has systematically achieved the outcomes described in the framework’s subcategories. Four categories of evidence cover this.

1. Documented AI Governance Policies (GOVERN 1.x)

The most direct evidence. These are formal organizational policies that define your AI risk management program:

- AI Risk Management Policy (GOVERN 1.2): Confirms that trustworthy AI characteristics (transparency, fairness, accountability, safety) are integrated into organizational procedures. Defines key terms, connects AI governance to existing risk controls, aligns with legal standards. Organizations can accelerate this using policy templates from the Responsible AI Institute, which are designed around NIST and ISO standards.

- Legal and Regulatory Documentation (GOVERN 1.1): Confirms legal and regulatory requirements involving AI are understood, managed, and documented for your specific industry and jurisdiction.

- Risk Tolerance Documentation (GOVERN 1.3): Written procedures defining the level of risk management activities based on the organization’s predetermined risk tolerance. This shows management actively engaged in defining acceptable risk levels.

- Decommissioning Procedures (GOVERN 1.7): Explicit procedures for phasing out AI systems safely, including data preservation for regulatory or legal review.

2. AI System Inventory (GOVERN 1.6)

A central registry of AI systems and related artifacts that demonstrates organizational control and prioritization. Sometimes called an AI Bill of Materials (AI-BOM). A serviceable inventory contains, for each system: links to technical documentation and source code, incident response plans, names and contact information for responsible AI actors, deployment dates, and last refresh dates. An inventory that exists and is kept current is quick, direct evidence that GOVERN 1.6 is implemented.
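One way to picture an inventory record is as a small typed structure. The sketch below is a hypothetical illustration, not a NIST-defined schema: the class name, field names, example values, and the `missing_fields` helper are all assumptions that simply mirror the items listed above.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI system inventory (GOVERN 1.6)."""

    name: str
    documentation_links: list[str]
    source_code_links: list[str]
    incident_response_plan: str
    responsible_actors: dict[str, str]  # person's name -> contact information
    deployment_date: date
    last_refresh_date: date

    def missing_fields(self) -> list[str]:
        """Return the names of fields left empty, in declaration order."""
        empty_values = ("", [], {}, None)
        return [f.name for f in fields(self) if getattr(self, f.name) in empty_values]


# Hypothetical example entry with two gaps left in it on purpose.
record = AISystemRecord(
    name="example-scoring-model",
    documentation_links=["https://example.com/docs/example-scoring-model"],
    source_code_links=[],
    incident_response_plan="",
    responsible_actors={"A. Owner": "a.owner@example.com"},
    deployment_date=date(2025, 1, 15),
    last_refresh_date=date(2025, 6, 1),
)
print(record.missing_fields())
```

A completeness check like `missing_fields` is one simple way to keep the registry from decaying into a list of names with nothing behind them.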

3. Accountability Records (GOVERN 2.x)

Formal internal documents clarifying who is responsible for AI risk decisions:

- Roles and Responsibilities Documentation (GOVERN 2.1): An organizational map or charter that defines roles, responsibilities, and lines of communication for mapping, measuring, and managing AI risks. This includes who is ultimately responsible for each AI system’s decisions.

- Executive Sponsorship Records (GOVERN 2.3): Board minutes, senior management reports, or committee charters demonstrating executive leadership takes responsibility for AI risk decisions and has allocated resources.

- Training Records (GOVERN 2.2): Evidence that AI risk management training has been integrated into enterprise learning and that personnel have received role-appropriate training (technical teams get different training than oversight roles).

4. Assessment Reports

Structured methods to summarize the completeness of your implementation:

- Self-Assessment Checklists: The NIST AI RMF Playbook provides transparency and documentation items for each subcategory that function as internal checklists. Third-party templates like the GAO AI Accountability Framework (GAO-21-519SP) or commercial GRC platforms (AuditBoard, Vanta) can be adapted for internal audits.

- ISO 42001 Crosswalk Certification: The NIST AI RMF crosswalks demonstrate that GOVERN activities align directly with ISO 42001 clauses for Leadership and Commitment (5.1), AI Policy (5.2), and Roles, Responsibilities, and Authorities (5.3). Achieving ISO 42001 certification serves as externally verifiable evidence that GOVERN function outcomes are implemented and maintained.

- Independent Audits: Third-party auditors can conduct process audits against the framework’s subcategories. The resulting audit report provides objective assessment for regulators, investors, or boards.

- Responsible AI Certifications: Third-party certifications from organizations like the Responsible AI Institute or HITRUST that use the NIST AI RMF as a foundational component of their criteria.

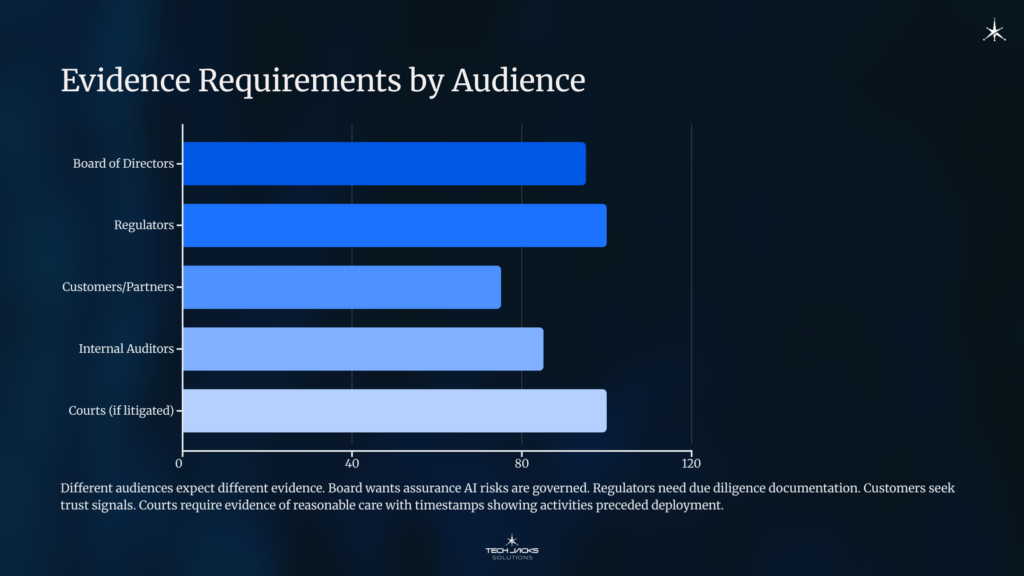

Different audiences expect different evidence:

| Audience | What They Expect | Primary Evidence |
|---|---|---|
| Board of Directors | Assurance that AI risks are governed | Executive sponsorship records, risk tolerance documentation, AI system inventory |
| Regulators | Due diligence documentation | Policies, assessment reports, training records, incident response evidence |
| Customers / Partners | Trust signals | ISO 42001 certification, third-party audit reports, Responsible AI certifications |
| Internal Auditors | Process conformity | Self-assessment checklists, Playbook documentation items, RACI matrices |
| Courts (if litigated) | Evidence of reasonable care | All of the above, with timestamps showing activities preceded deployment |
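The last row is the easiest to check mechanically. As a rough sketch, assuming each evidence artifact carries a completion date, the following Python flags anything that was not finished before deployment. The function name, artifact names, and dates are all hypothetical.

```python
from datetime import date


def evidence_not_preceding_deployment(evidence: dict[str, date], deployed_on: date) -> list[str]:
    """Return artifact names dated on or after the deployment date, sorted by name."""
    return sorted(
        name for name, completed_on in evidence.items() if completed_on >= deployed_on
    )


# Hypothetical evidence log: artifact name -> date the artifact was completed.
evidence = {
    "risk tolerance documentation": date(2025, 2, 1),
    "fairness test report": date(2025, 3, 10),
    "incident response plan": date(2025, 5, 20),
}

late = evidence_not_preceding_deployment(evidence, deployed_on=date(2025, 4, 1))
print(late)
```

Anything the check returns is documentation that exists but would not show that the risk was considered before the system went live.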

Adoption by Organization Size

The framework’s 72 subcategories aren’t meant to be adopted all-or-nothing. NIST designed it to scale. Here’s what practical adoption looks like at different organizational sizes.

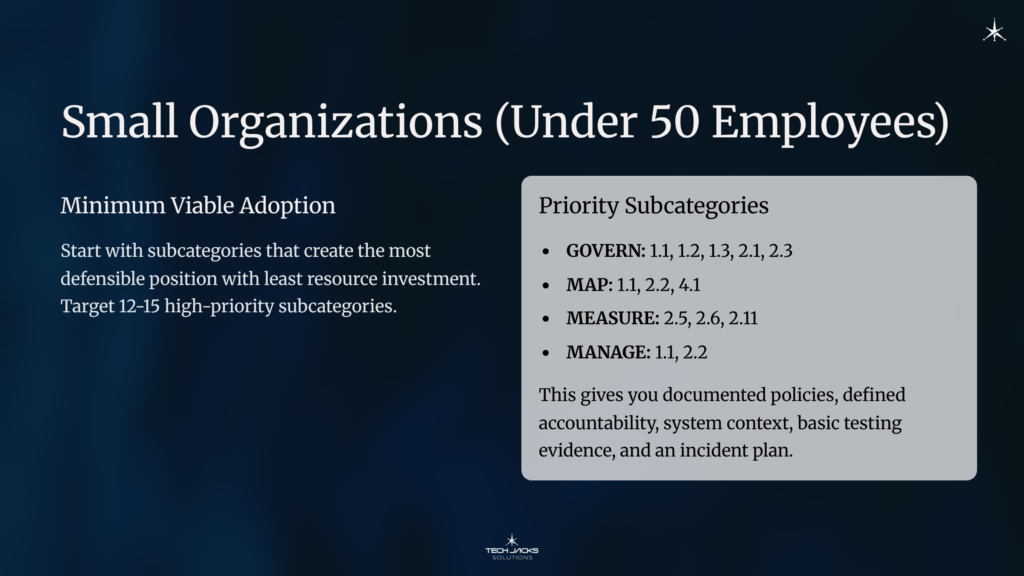

Small Organizations (under 50 employees)

Start with the subcategories that create the most defensible position with the least resource investment. Target 12-15 high-priority subcategories.

Minimum viable adoption:

- GOVERN 1.1 (legal/regulatory documentation), GOVERN 1.2 (AI risk management policy), GOVERN 1.3 (risk tolerance), GOVERN 2.1 (roles and responsibilities), GOVERN 2.3 (executive sponsorship)

- MAP 1.1 (intended purpose and context), MAP 2.2 (knowledge limits), MAP 4.1 (third-party risks)

- MEASURE 2.5 (validity testing), MEASURE 2.6 (safety evaluation), MEASURE 2.11 (fairness/bias testing)

- MANAGE 1.1 (risk treatment decisions), MANAGE 2.2 (incident response)

This gives you documented policies, defined accountability, system context, basic testing evidence, and an incident plan. It won’t cover everything, but it addresses the subcategories most likely to matter in a legal or regulatory inquiry.
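A baseline like this is small enough to track as a plain checklist. The Python below is a minimal sketch under that assumption: `BASELINE` copies the subcategory numbers from the list above, while the helper names and the example `completed` set are made up for illustration.

```python
# Subcategory numbers copied from the minimum viable adoption list above.
BASELINE = {
    "GOVERN": ["1.1", "1.2", "1.3", "2.1", "2.3"],
    "MAP": ["1.1", "2.2", "4.1"],
    "MEASURE": ["2.5", "2.6", "2.11"],
    "MANAGE": ["1.1", "2.2"],
}


def baseline_ids(baseline: dict[str, list[str]]) -> list[str]:
    """Flatten the baseline into identifiers such as 'GOVERN 1.1'."""
    return [f"{function} {number}" for function, numbers in baseline.items() for number in numbers]


def outstanding(baseline: dict[str, list[str]], completed: set[str]) -> list[str]:
    """Return baseline subcategories that have no documented evidence yet."""
    return [item for item in baseline_ids(baseline) if item not in completed]


# Hypothetical progress: three subcategories documented so far.
completed = {"GOVERN 1.1", "GOVERN 1.2", "MAP 1.1"}
remaining = outstanding(BASELINE, completed)
total = len(baseline_ids(BASELINE))
print(f"{total - len(remaining)} of {total} baseline subcategories documented")
```

Even a spreadsheet would do the same job. The point is that the baseline is a finite list with a visible finish line, not an open-ended program.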

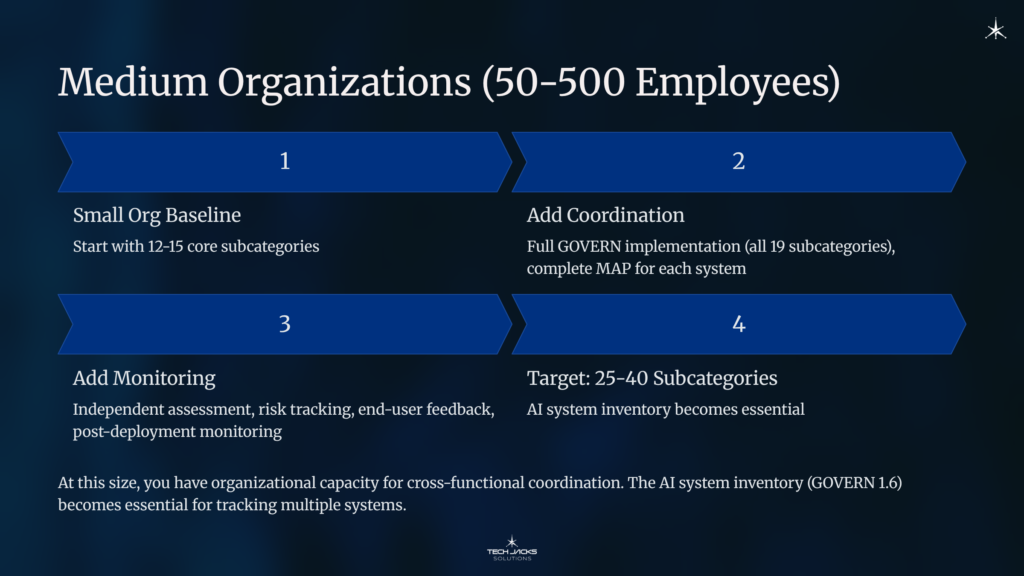

Medium Organizations (50-500 employees)

Expand to 25-40 subcategories. Add the coordination and monitoring subcategories that small organizations often skip due to resource constraints.

Add to the small organization baseline:

- Full GOVERN function implementation (all 19 subcategories), including workforce diversity (GOVERN 3), stakeholder engagement (GOVERN 5), and third-party risk policies (GOVERN 6)

- Complete MAP for each AI system (all five categories), including impact characterization (MAP 5) and benefits/costs analysis (MAP 3)

- MEASURE 1.3 (independent assessment), MEASURE 3.1 (risk tracking over time), MEASURE 3.3 (end-user feedback mechanisms)

- MANAGE 2.4 (mechanisms to halt or deactivate AI systems), MANAGE 4.1 (post-deployment monitoring)

At this size, you have the organizational capacity for cross-functional coordination. The AI system inventory (GOVERN 1.6) becomes essential for tracking multiple systems.

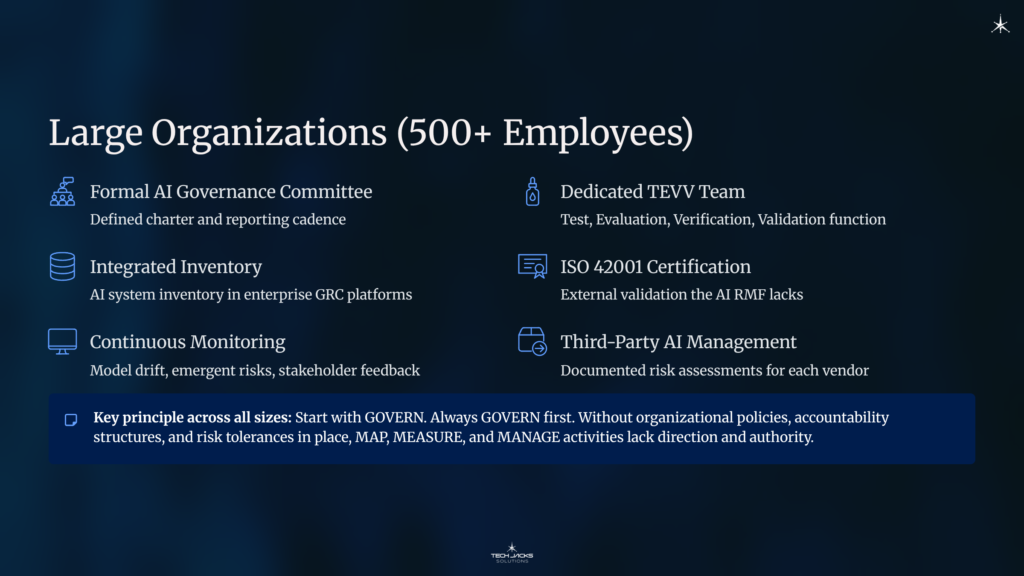

Large Organizations (500+ employees)

Target comprehensive adoption across all 72 subcategories, with depth proportional to AI system risk level. Low-risk systems get abbreviated documentation. High-risk systems get the full treatment.

What changes at scale:

- Formal AI governance committee with defined charter and reporting cadence

- Dedicated TEVV (Test, Evaluation, Verification, Validation) team or function

- AI system inventory integrated into enterprise risk management and GRC platforms

- ISO 42001 certification pursuit (provides the external validation the AI RMF lacks)

- Continuous monitoring infrastructure for model drift, emergent risks, and stakeholder feedback

- Structured public feedback and community engagement processes (MEASURE 3.3, GOVERN 5)

- Third-party AI supply chain management with documented risk assessments for each vendor (GOVERN 6, MAP 4)

The key principle across all sizes: Start with GOVERN. Always GOVERN first. Without organizational policies, accountability structures, and risk tolerances in place, MAP, MEASURE, and MANAGE activities lack direction and authority. You can’t measure what you haven’t defined, and you can’t manage what you haven’t measured.

Framework Overlap

The AI RMF doesn’t replace existing frameworks. It extends them. NIST publishes official crosswalks to related standards. Here’s where the overlap sits:

| Framework | Overlap with AI RMF | What AI RMF Adds |
|---|---|---|
| ISO 27001 | Risk assessment, monitoring, incident response, supplier controls | AI-specific: bias testing, explainability, societal impact, TEVV processes |
| ISO 42001 | AI policy, leadership commitment, performance evaluation, risk treatment | Broader scope: voluntary vs. certifiable, non-prescriptive outcomes |
| NIST CSF | Functions/categories structure, risk-based approach, governance | AI lifecycle coverage, trustworthiness characteristics, socio-technical risks |
| CIS Controls v8 | Asset inventory, audit logs, penetration testing, incident response | AI red teaming, fairness evaluation, model drift monitoring, decommissioning |
| EU AI Act | Risk classification, documentation, transparency, human oversight | Voluntary (vs. legally binding), broader risk treatment options, U.S. context |

ISO 42001 provides the closest alignment because it was designed specifically for AI management systems. Organizations pursuing ISO 42001 certification will find that NIST AI RMF implementation covers substantial ground toward conformity, particularly around leadership and commitment (Clause 5.1), AI policy (Clause 5.2), and performance evaluation (Clause 9).

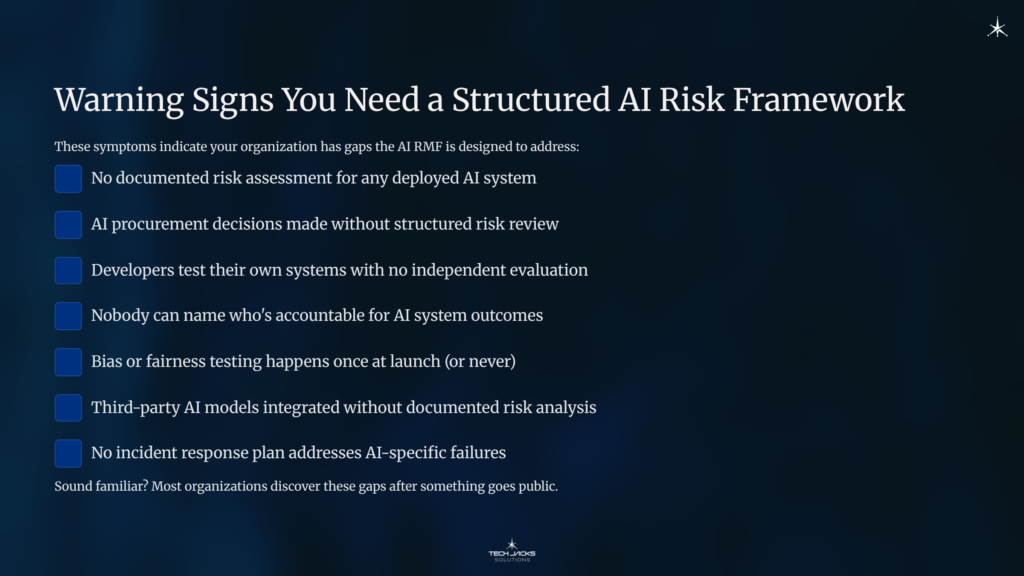

Warning Signs You Need a Structured AI Risk Framework

These symptoms indicate your organization has gaps the AI RMF is designed to address:

- No documented risk assessment for any deployed AI system

- AI procurement decisions made without structured risk review

- Developers test their own systems with no independent evaluation

- Nobody can name who’s accountable for AI system outcomes

- Bias or fairness testing happens once at launch (or never)

- Third-party AI models integrated without documented risk analysis

- No incident response plan addresses AI-specific failures

Sound familiar? Most organizations discover these gaps after something goes public.

Frequently Asked Questions

Q: Is the NIST AI RMF mandatory? No. It’s voluntary for private organizations. Federal agencies are directed to use it through congressional mandates and executive orders, and some state AI laws reference recognized risk management frameworks when evaluating due diligence. Voluntary doesn’t mean optional in practice when regulators and courts use it as a benchmark.

Q: Can we get certified against the NIST AI RMF? Not directly. The AI RMF has no certification program. ISO 42001 is the closest certifiable standard, and it aligns closely with many AI RMF outcomes. Third-party organizations like the Responsible AI Institute offer assessment programs that use the AI RMF as a foundation.

Q: How does it relate to the EU AI Act? The AI RMF is voluntary guidance. The EU AI Act is legally binding regulation. They overlap on risk assessment, transparency, documentation, and human oversight. Organizations operating in both jurisdictions can use the AI RMF to build the management structure and the EU AI Act to confirm legally mandated requirements.

Q: Where should we start? GOVERN. Always GOVERN first. Without organizational policies, accountability structures, and risk tolerances in place, MAP, MEASURE, and MANAGE activities lack direction and authority.

Q: Does the framework cover generative AI? The base framework (January 2023) has limited GAI coverage. The Generative AI Profile (NIST AI 600-1, July 2024) extends coverage to GAI-specific risks including confabulation, content provenance, information integrity, and environmental impacts.

Q: How often should we revisit AI RMF activities? Continuously. GOVERN is cross-cutting and ongoing. MAP, MEASURE, and MANAGE activities should run throughout the AI lifecycle, not as one-time exercises. AI systems drift. Contexts change. Risks evolve.

Q: What’s the minimum viable adoption for a small organization? Focus on 12-15 high-priority subcategories: core GOVERN policies (1.1, 1.2, 1.3, 2.1, 2.3), essential MAP activities (1.1, 2.2, 4.1), critical MEASURE testing (2.5, 2.6, 2.11), and basic MANAGE responses (1.1, 2.2). See the Adoption by Organization Size section for specifics.

Q: How do we prove adoption to a board or regulator? Four categories of evidence: documented governance policies, an AI system inventory, accountability records with executive sponsorship, and structured assessment reports (self-assessment checklists, third-party audits, or ISO 42001 certification). See the How to Demonstrate Adoption section.

Next Steps

New to AI governance? Start with the GOVERN function guide to understand the organizational foundations every other function depends on.

Compliance or security professional? Use the framework overlap table above to map your current controls to AI RMF outcomes. Identify the gaps (likely around bias testing, explainability, and societal impact), then use the demonstration-of-adoption section to plan your evidence package.

Executive? Ask your teams two questions: “Which of our AI systems have documented risk assessments?” and “Who is accountable for their outcomes?” If the answers are unclear, the AI RMF gives you a structure to fix that before regulators or courts do it for you.

Sources:

- NIST AI 100-1: AI Risk Management Framework 1.0 (January 2023)

- NIST AI 600-1: Generative AI Profile (July 2024)

- NIST Trustworthy and Responsible AI Resource Center

- NIST AI RMF Crosswalks

- National Artificial Intelligence Initiative Act of 2020 (P.L. 116-283)

- Louis, et al. v. SafeRent Solutions — Civil Rights Litigation Clearinghouse

- Louis v. SafeRent — U.S. DOJ Statement of Interest

- Louis v. SafeRent — Cohen Milstein case page

- Estate of Gene B. Lokken v. UnitedHealth Group — Court Opinion (Feb. 2025)

- Lokken v. UnitedHealth — Justia Docket

- Lokken v. UnitedHealth — STAT News investigation

- Lokken v. UnitedHealth — Medical Economics

- Lokken v. UnitedHealth — DLA Piper legal analysis

- Mobley v. Workday — Fisher Phillips legal analysis

- Wilson & Caliskan (2024), “Gender, Race, and Intersectional Bias in Resume Screening” — University of Washington

- Wilson & Caliskan (2024) — arXiv preprint

- Responsible AI Institute — NIST AI RMF alignment