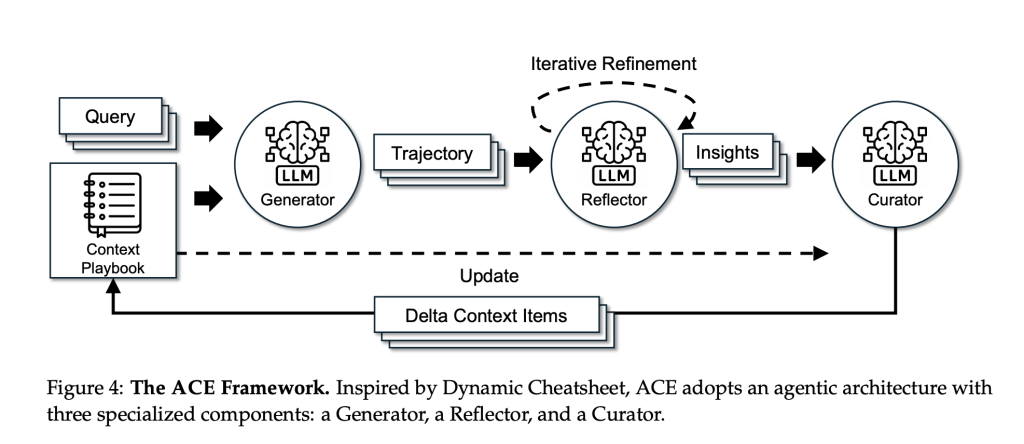

TL;DR: A team of researchers from Stanford University, SambaNova Systems, and UC Berkeley introduces ACE (Agentic Context Engineering), a framework that improves LLM performance by editing and growing the input context instead of updating model weights. Context is treated as a living “playbook” maintained by three roles (Generator, Reflector, Curator), with small delta items merged incrementally to avoid brevity bias and
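To make the playbook idea concrete, here is a minimal sketch of one update loop. It is not the authors' implementation: the `Playbook` class, the `ace_step` function, and the behavior assigned to each role are assumptions for illustration, and the three role functions are plain stubs standing in for LLM calls. The only point it shows is that the context grows through small appended deltas rather than being rewritten wholesale.

```python
from dataclasses import dataclass, field


@dataclass
class Playbook:
    """Hypothetical container for the evolving context: short, itemized notes."""
    items: list[str] = field(default_factory=list)

    def merge(self, delta: list[str]) -> None:
        """Append only items not already present; existing items are left untouched."""
        for item in delta:
            if item not in self.items:
                self.items.append(item)

    def render(self) -> str:
        """Format the playbook as a bullet list that could be placed in a prompt."""
        return "\n".join(f"- {item}" for item in self.items)


def generator(task: str, playbook: Playbook) -> str:
    """Stub for an LLM call that attempts the task with the current playbook."""
    return f"attempt at {task!r} using {len(playbook.items)} playbook items"


def reflector(task: str, trace: str) -> list[str]:
    """Stub for an LLM call that distills lessons from the attempt (assumed role)."""
    return [f"lesson from {task}"]


def curator(lessons: list[str], playbook: Playbook) -> list[str]:
    """Stub that keeps only lessons the playbook lacks, yielding a small delta."""
    return [lesson for lesson in lessons if lesson not in playbook.items]


def ace_step(task: str, playbook: Playbook) -> str:
    """One pass: generate, reflect, curate, then merge the delta incrementally."""
    trace = generator(task, playbook)
    lessons = reflector(task, trace)
    delta = curator(lessons, playbook)
    playbook.merge(delta)
    return trace
```

Calling `ace_step` repeatedly on a shared `Playbook` adds at most a few items per task and never drops earlier ones, which is the property the incremental merge is meant to preserve in this sketch.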

The post Agentic Context Engineering (ACE): Self-Improving LLMs via Evolving Contexts, Not Fine-Tuning appeared first on MarkTechPost.