NIST AI RMF Hub: Risk Management Framework 2026

NIST AI RISK MANAGEMENT FRAMEWORK

Credentials: CISSP, CRISC, CCSP

NIST AI RMF Hub

The NIST AI Risk Management Framework (AI RMF 1.0), published in January 2023, provides voluntary guidance for managing risks throughout the AI lifecycle. Unlike prescriptive regulations, it’s outcome-based. Organizations decide how to achieve the goals based on their context, risk tolerance, and resources.

Here’s what makes it distinct from earlier approaches: traditional software frameworks couldn’t adequately address harmful bias in AI systems, generative AI risks, machine learning attacks, or the complex third-party AI supply chain (according to NIST AI 100-1). The AI RMF fills those gaps.

What Is the NIST AI Risk Management Framework?

The NIST AI Risk Management Framework (AI RMF 1.0) was published in January 2023 after Congress directed its creation through the National Artificial Intelligence Initiative Act of 2020. NIST developed it through an open, public process involving formal requests for information, public workshops, comment periods on multiple drafts, and stakeholder engagement across industry, government, and civil society (NIST AI 100-1). In July 2024, NIST released a companion Generative AI Profile (NIST AI 600-1) extending coverage to GAI-specific risks.

The framework is voluntary, outcome-based, and sector-agnostic. It’s built around four core functions (GOVERN, MAP, MEASURE, MANAGE) containing 19 categories and 72 subcategories that span the full AI lifecycle. These functions target seven characteristics of trustworthy AI: valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed.

The AI RMF doesn’t replace existing frameworks. Organizations already running ISO 27001, ISO 42001, the NIST Cybersecurity Framework, or CIS Controls v8 will find direct overlap at the category level. The AI RMF extends that foundation to address risks those frameworks weren’t built for: harmful bias, generative AI, machine learning attacks, and third-party AI supply chains. It’s becoming a de facto standard of care in the United States, with federal agencies mandated to use it and some state laws offering affirmative defenses for organizations following recognized frameworks.

It’s not without limitations. There’s no certification or enforcement mechanism, risk measurement methodologies are still maturing, and smaller organizations may find the 72-subcategory scope challenging to resource. For a deeper analysis, see NIST AI RMF: What It Does Well and Where It Falls Short.

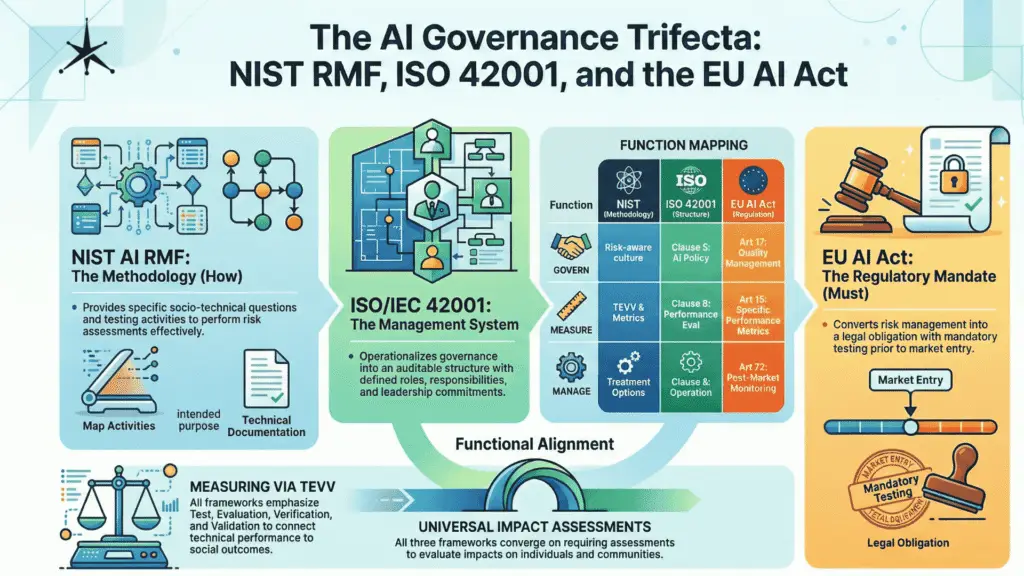

Framework Relationships

The AI RMF doesn’t replace existing frameworks. It complements them.

Organizations already using the NIST Cybersecurity Framework, NIST Privacy Framework, or ISO 27001 will find familiar structures (functions, categories, subcategories). The AI RMF extends this approach specifically to AI-related risks that those frameworks weren’t designed to address. ISO 42001:2023 (the AI management system standard) maps directly to GOVERN function outcomes, while the EU AI Act’s legal requirements align with many AI RMF practices, though the Act carries binding legal obligations where the AI RMF remains voluntary.

The Four Core Functions

| Function | Categories | Subcategories | Purpose |
|---|---|---|---|
| GOVERN | 6 | 19 | Establishes culture, policies, and accountability (cross-cutting) |
| MAP | 5 | 18 | Defines context, identifies risks for specific AI systems |
| MEASURE | 4 | 22 | Assesses and tracks identified risks quantitatively and qualitatively |
| MANAGE | 4 | 13 | Treats, monitors, and communicates risk responses |

Total: 19 categories, 72 subcategories across the framework.
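When building a tracker, it helps to confirm that the per-function counts add up to the framework totals. The sketch below is a minimal arithmetic check; the dictionary name is illustrative, and the counts follow the subcategory numbering in NIST AI 100-1 (for example, MEASURE spans 1.1-1.3, 2.1-2.13, 3.1-3.3, and 4.1-4.3, which is 22 subcategories).

```python
# Category and subcategory counts per core function (NIST AI 100-1 numbering).
# CORE_FUNCTIONS is an illustrative name, not part of the framework.
CORE_FUNCTIONS = {
    "GOVERN": {"categories": 6, "subcategories": 19},
    "MAP": {"categories": 5, "subcategories": 18},
    "MEASURE": {"categories": 4, "subcategories": 22},
    "MANAGE": {"categories": 4, "subcategories": 13},
}

total_categories = sum(f["categories"] for f in CORE_FUNCTIONS.values())
total_subcategories = sum(f["subcategories"] for f in CORE_FUNCTIONS.values())

print(total_categories, total_subcategories)  # 19 72
```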

GOVERN operates differently from the other three. It’s cross-cutting, meaning its outcomes inform MAP, MEASURE, and MANAGE activities continuously.

NIST Function Spotlight

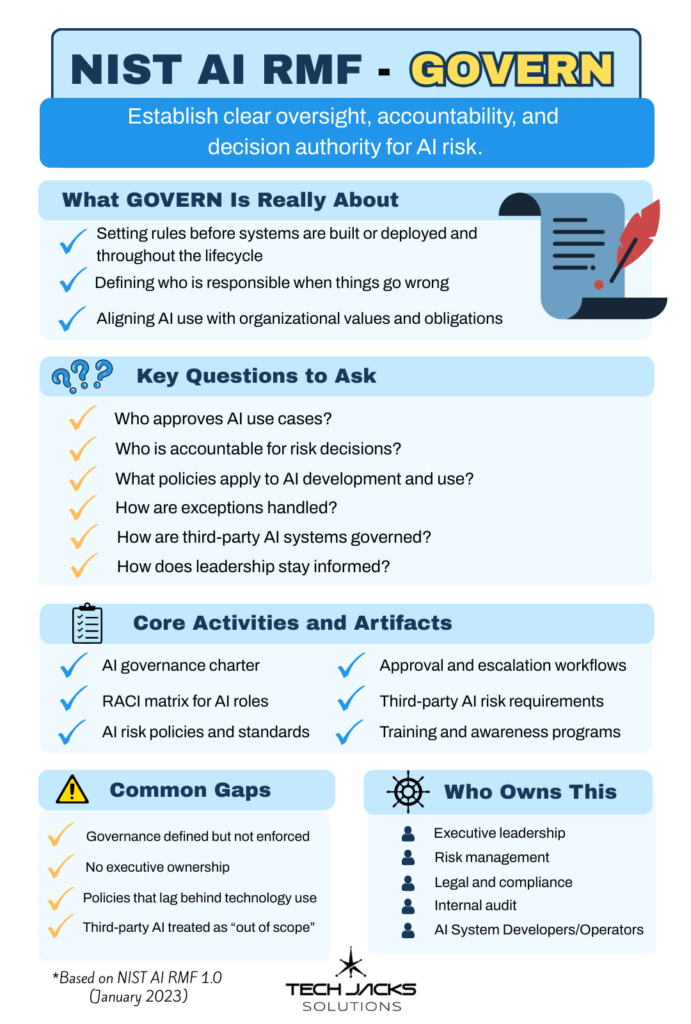

GOVERN is the foundation everything else sits on. Without it, MAP, MEASURE, and MANAGE don’t have direction, accountability, or authority. This function establishes the policies, roles, risk tolerances, and organizational culture that make AI risk management possible. Six categories cover legal compliance, accountability structures, workforce diversity, safety culture, stakeholder engagement, and third-party oversight.

It’s cross-cutting for a reason. GOVERN doesn’t run once and finish. It informs the other three functions continuously, throughout the entire AI lifecycle. Executive leadership takes direct responsibility for AI risk decisions here (per NIST AI 100-1, GOVERN 2.3). Writing a policy is easy. Assigning someone’s name to the consequences of an AI system’s failures is harder.

Organizations already running ISO 42001 or ISO 27001 will find direct overlap, particularly around leadership commitment, AI policy, and roles. The EU AI Act’s legal requirements align with many GOVERN outcomes, though the Act carries binding weight where NIST stays voluntary. Adherence to the AI RMF, starting with GOVERN, is becoming a de facto standard of care in the United States, with some state laws offering affirmative defenses for organizations following recognized frameworks.

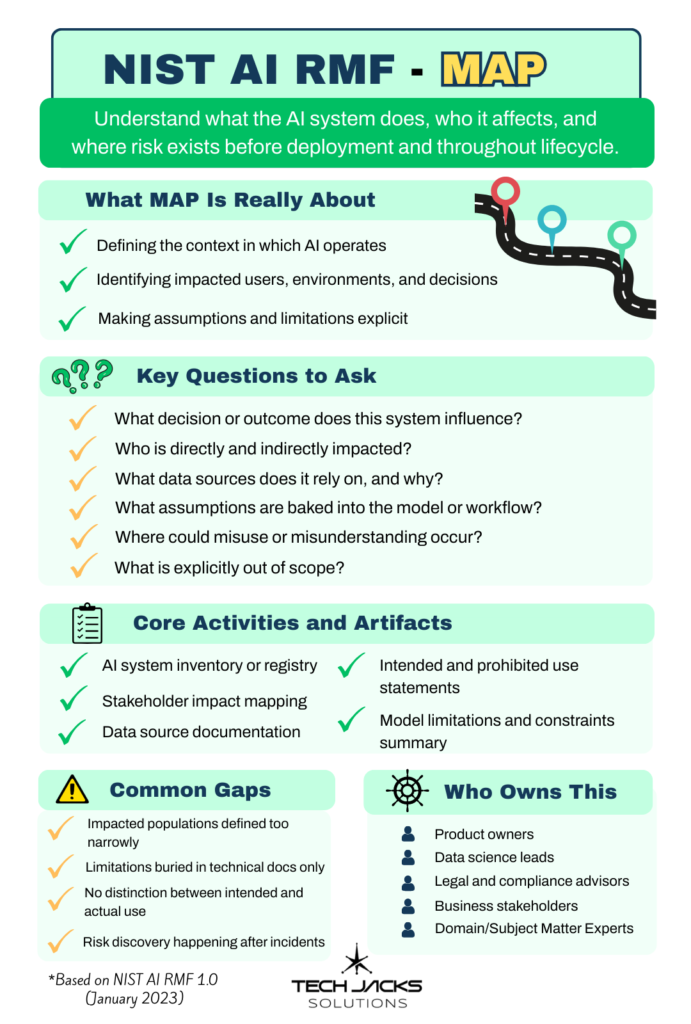

MAP answers the questions everybody should ask before deploying an AI system but often doesn’t. What is this system designed to do? Who does it affect? What are its limitations? What could go wrong? Five categories cover establishing context, categorizing the system, analyzing capabilities and costs, mapping third-party component risks, and characterizing impacts on individuals and communities.

MAP 1.1 (intended purpose and context) is the foundation for everything downstream. MAP 2.2 (knowledge limits) forces technical teams to admit what their system doesn’t know. Skip these and MEASURE and MANAGE operate blind. This is also where organizations discover uncomfortable truths: you map third-party dependencies and find your vendor’s training data sourcing is a black box. You document intended purpose and realize the system’s being used for decisions it was never designed to handle.
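One way to make the MAP 1.1 and MAP 2.2 documentation actionable is to store it as a structured record and compare it against observed usage. The following is a minimal sketch, not a NIST-defined schema: the `SystemContext` class, its field names, and the example system are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SystemContext:
    """Hypothetical record of MAP 1.1 / MAP 2.2 documentation for one AI system."""

    name: str
    intended_purposes: set[str]  # MAP 1.1: documented intended purposes
    knowledge_limits: list[str] = field(default_factory=list)  # MAP 2.2

    def out_of_scope_uses(self, observed_uses: set[str]) -> set[str]:
        """Return observed uses that were never documented as intended."""
        return observed_uses - self.intended_purposes


# Illustrative data only.
ctx = SystemContext(
    name="resume-screener",
    intended_purposes={"rank applicants for recruiter review"},
    knowledge_limits=["not validated on non-English resumes"],
)
observed = {"rank applicants for recruiter review", "auto-reject applicants"}

print(ctx.out_of_scope_uses(observed))  # {'auto-reject applicants'}
```

Anything the check returns is a candidate for the "used for decisions it was never designed to handle" problem described above.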

MAP aligns with ISO 27001 Clause 4.1/4.2 (Context of the Organization), ISO 42001’s Impact Assessment (Annex B.5), and CIS Controls 1 and 2 (Asset and Software Inventory). Organizations running those frameworks have a head start. They just haven’t applied the thinking specifically to AI systems yet.

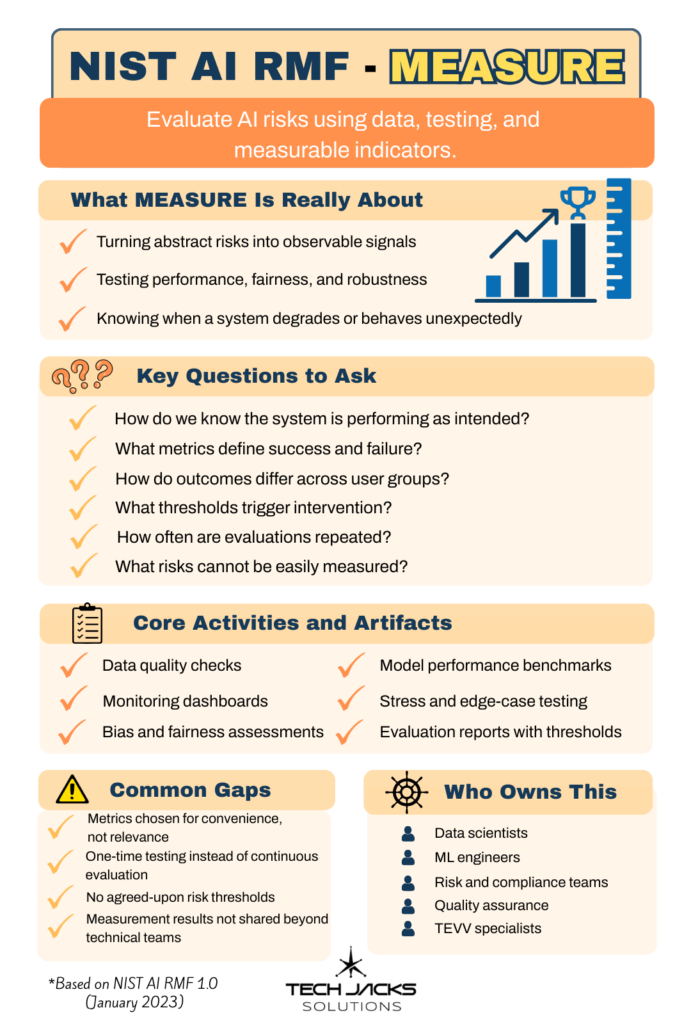

MEASURE is where claims about AI system performance meet evidence. Four categories cover metric selection, trustworthiness evaluation, risk tracking over time (including feedback from end users and affected communities), and feedback on measurement efficacy. The function uses quantitative, qualitative, and mixed methods to analyze and monitor the risks identified during MAP.

MEASURE 2 is where the real work lives, covering TEVV (Test, Evaluation, Verification, and Validation) across safety, bias, security, explainability, and privacy, all documented under conditions similar to actual deployment. MEASURE 1.3 requires independent assessors (people who didn’t build the system) to be involved in regular evaluations. Developers testing their own systems is like grading your own homework. MEASURE 1.1 also requires documenting risks you can’t measure. That honesty matters, and most frameworks don’t ask for it.
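Two of these expectations lend themselves to simple automated checks: whether anyone outside the build team took part in an evaluation (MEASURE 1.3), and whether every risk identified in MAP is either measured or explicitly documented as unmeasured (MEASURE 1.1). The sketch below assumes a hypothetical `Evaluation` record; the field names, people, and metric value are illustrative and not drawn from NIST.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Evaluation:
    """Hypothetical evaluation record for one AI system."""

    system: str
    developers: set[str]
    assessors: set[str]
    measured: dict[str, float] = field(default_factory=dict)  # risk -> metric value
    unmeasured: dict[str, str] = field(default_factory=dict)  # risk -> documented reason

    def independent_assessors(self) -> set[str]:
        """Assessors who did not build the system (MEASURE 1.3)."""
        return self.assessors - self.developers

    def gaps(self, identified_risks: set[str]) -> set[str]:
        """Risks that are neither measured nor documented as unmeasured (MEASURE 1.1)."""
        return identified_risks - set(self.measured) - set(self.unmeasured)


# Illustrative data only.
ev = Evaluation(
    system="resume-screener",
    developers={"alice", "bob"},
    assessors={"bob", "carol"},
    measured={"harmful bias": 0.04},
    unmeasured={"long-term labor market impact": "no agreed metric"},
)
risks_from_map = {"harmful bias", "long-term labor market impact", "prompt injection"}

print(ev.independent_assessors())  # {'carol'}
print(ev.gaps(risks_from_map))     # {'prompt injection'}
```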

ISO 42001 Clause 9 (Performance Evaluation) provides the closest alignment. ISO 27001 covers the general monitoring principle but doesn’t address AI-specific concerns like bias testing or explainability. CIS 18 (Penetration Testing) and CIS 8 (Audit Log Management) map at the technical layer.

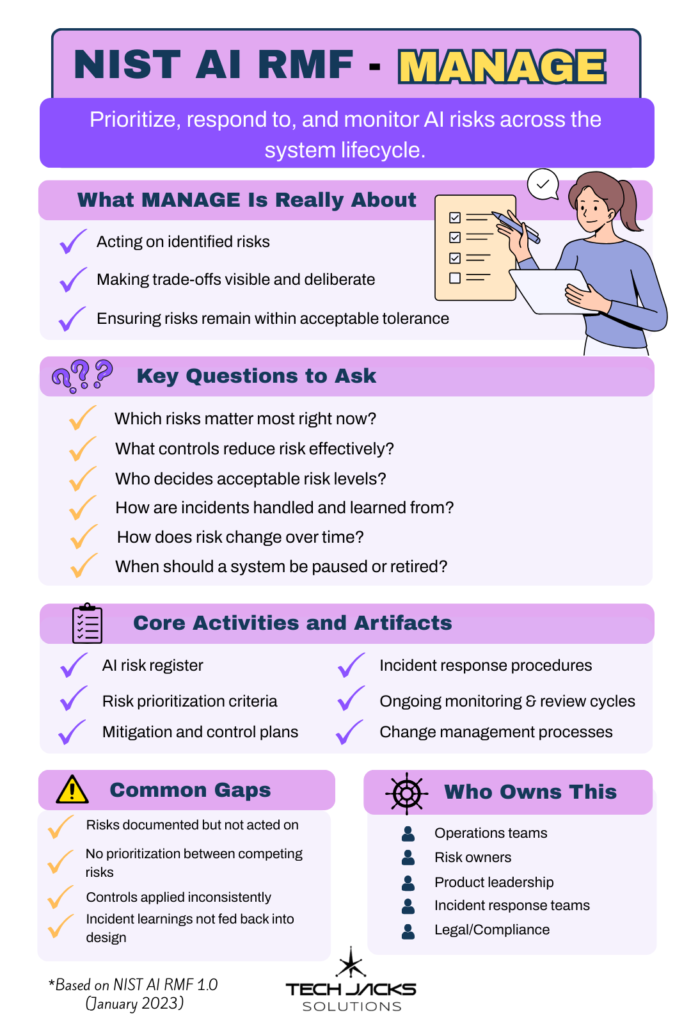

MANAGE is the operational engine. While GOVERN sets rules and MAP and MEASURE provide data, MANAGE is where you actually do something about the risks you’ve found. Four categories cover risk prioritization and treatment, strategies for sustaining value and system deactivation, third-party supply chain management, and post-deployment monitoring with incident response.

This function contains the go/no-go decision point. MANAGE 1.1 requires a documented determination of whether the AI system achieves its intended purposes and whether deployment should proceed. Someone’s name goes on that decision. MANAGE 1.3 defines four risk response options: mitigate, transfer, avoid, or accept. MANAGE 1.4 requires documenting residual risks to downstream users. Nothing clarifies organizational risk appetite like writing down what you chose not to fix.
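The go/no-go determination can be sketched as a small data structure. The four response options come from MANAGE 1.3; everything else here is an assumption for illustration, including the `RiskDecision` fields and the policy that an accepted or mitigated risk without a residual-risk note blocks deployment.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    """Risk response options named in MANAGE 1.3."""

    MITIGATE = "mitigate"
    TRANSFER = "transfer"
    AVOID = "avoid"
    ACCEPT = "accept"


@dataclass
class RiskDecision:
    risk: str
    response: Response
    residual_note: str = ""  # MANAGE 1.4: what remains after treatment


def deployment_determination(
    achieves_purpose: bool, decisions: list[RiskDecision], approver: str
) -> dict:
    """Hypothetical MANAGE 1.1 check: proceed only if the system meets its
    purpose and every accepted or mitigated risk has its residual documented."""
    undocumented = [
        d.risk
        for d in decisions
        if d.response in (Response.ACCEPT, Response.MITIGATE) and not d.residual_note
    ]
    return {
        "proceed": achieves_purpose and not undocumented,
        "approver": approver,  # the name that goes on the decision
        "undocumented_residual": undocumented,
    }


# Illustrative data only.
decisions = [
    RiskDecision("harmful bias", Response.MITIGATE, "residual disparity after reweighting"),
    RiskDecision("vendor outage", Response.ACCEPT),
]
result = deployment_determination(True, decisions, approver="J. Doe")

print(result["proceed"], result["undocumented_residual"])  # False ['vendor outage']
```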

MANAGE 2.4 is the “kill switch” subcategory, requiring mechanisms and assigned responsibilities to deactivate AI systems showing outcomes inconsistent with intended use. The crosswalk is direct: ISO 42001 Clauses 6.1.3 and 8.3 (AI Risk Treatment), ISO 27001 Annex A.5.24-28 (Incident Management), and CIS 17 (Incident Response). Organizations running ISO 27001 already have operational muscle here. MANAGE extends it to cover AI-specific treatments like model rollback and training data remediation.
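A MANAGE 2.4 mechanism pairs a deactivation trigger with a named owner. The following is a minimal sketch under stated assumptions: the `KillSwitch` class, the "out-of-policy rate" metric, and the threshold value are hypothetical, and a real deployment would also need alerting, audit logging, and a controlled re-enable path.

```python
from dataclasses import dataclass


@dataclass
class KillSwitch:
    """Hypothetical deactivation mechanism with an assigned owner."""

    system: str
    owner: str        # person or role responsible for deactivation
    threshold: float  # assumed metric: max tolerated rate of out-of-policy outcomes
    active: bool = True

    def check(self, out_of_policy_rate: float) -> bool:
        """Deactivate if the observed rate exceeds the threshold.

        Once tripped, the switch stays off until a human re-enables it.
        Returns whether the system is still active.
        """
        if self.active and out_of_policy_rate > self.threshold:
            self.active = False
        return self.active


# Illustrative values only.
ks = KillSwitch(system="resume-screener", owner="ml-ops on-call", threshold=0.05)

print(ks.check(0.02))  # True: within tolerance
print(ks.check(0.09))  # False: threshold exceeded, system deactivated
print(ks.check(0.01))  # False: stays off until manually re-enabled
```

Keeping the switch latched after it trips reflects the design choice that a person, not the metric, decides when the system comes back.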

Note:

This is a living page and will be updated and enhanced continuously.