A practical framework designed to help teams spot potential bias in AI systems before it causes real-world harm, with structured evaluation across seven critical phases.


AI Bias Assessment Checklist – Community Edition

What This Assessment Provides

AI systems can perform differently for different groups of people, often disadvantaging certain populations even when built with good intentions. Teams need structured approaches to identify where unfairness might occur and what actions can prevent or address it. This Community Edition checklist provides assessment frameworks across seven phases, from planning through third-party AI evaluation.

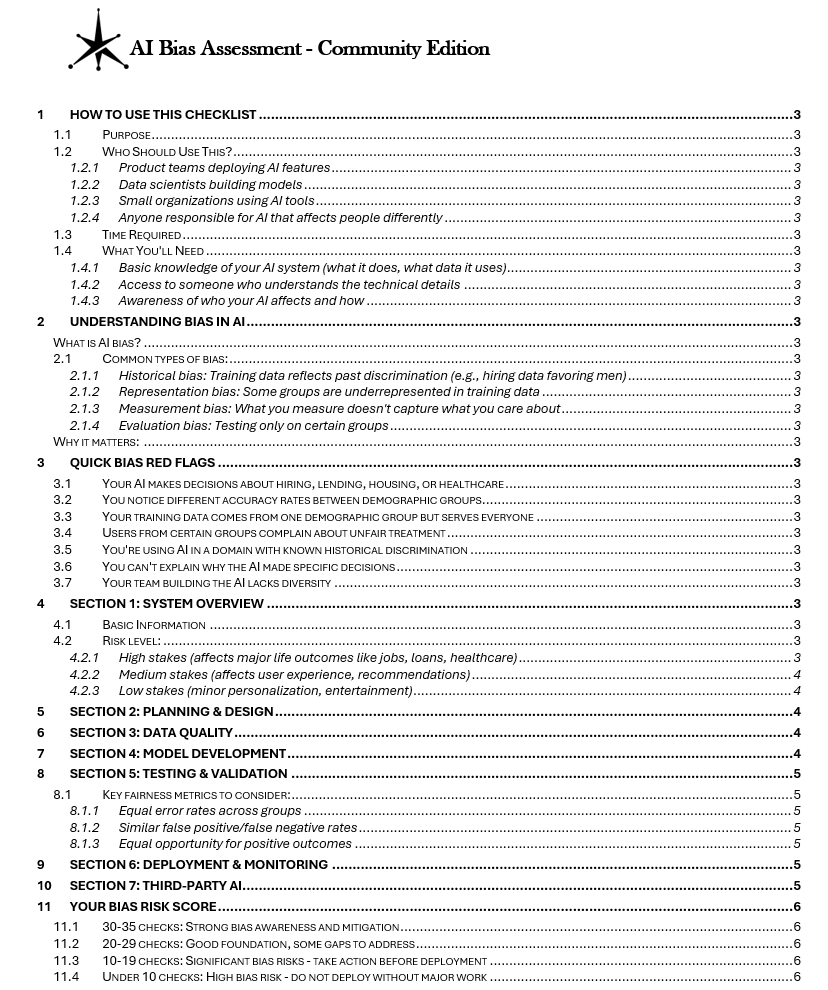

The checklist uses 35 checkpoint questions evaluating bias awareness and mitigation practices. Organizations can complete the initial assessment in 2-3 hours, though addressing identified issues will require additional time for data auditing, model testing, or deployment changes. The framework includes risk scoring with four interpretation tiers and priority action planning templates for immediate, near-term, and ongoing work.

What You Get:

✓ Seven-section assessment framework covering planning, data quality, model development, testing, deployment, monitoring, and third-party AI

✓ 35-checkpoint bias evaluation across the AI lifecycle

✓ Four-tier risk scoring system with interpretation guidance

✓ Common bias type definitions (historical, representation, measurement, evaluation)

✓ Three-phase mitigation technique guide (data collection, model training, post-deployment)

✓ Key fairness metrics reference (equal error rates, false positive/negative rates, equal opportunity)

✓ Priority action planning templates with urgency classification

Designed For:

- Product teams deploying AI features affecting diverse user populations

- Data scientists building models who need fairness evaluation frameworks

- Small organizations using AI tools without dedicated ethics teams

- Anyone responsible for AI systems that may affect different groups differently

Preview What’s Inside: The checklist contains seven assessment sections with yes/no checkpoint questions, a bias risk scoring matrix, common mistake warnings, mitigation technique quick reference, and action planning templates. Supporting sections define bias types, provide red flag identification, and list resources for deeper investigation.

Why AI Bias Assessment Matters

Biased AI can deny opportunities, reinforce stereotypes, and cause real harm at scale. A hiring tool that favors one demographic over another. A loan approval system with different accuracy rates across racial groups. A healthcare recommendation engine that performs poorly for underrepresented populations. These outcomes often emerge from historical discrimination embedded in training data, representation imbalances, measurement choices that fail to capture what matters, or evaluation practices that test only on certain groups.

Teams building or deploying AI need systematic approaches to identify where bias might enter their systems and what mitigation strategies can address it. This checklist structures bias assessment across the complete AI lifecycle, from initial planning considerations through ongoing monitoring practices.

Understanding Bias Types

The guide defines four common bias categories:

Historical Bias: Training data reflects past discrimination. Example: hiring data favoring men because historical hiring decisions were biased. The AI learns to perpetuate past patterns rather than make equitable decisions.

Representation Bias: Some groups are underrepresented in training data. Example: facial recognition trained predominantly on one ethnic group performs poorly on others. Insufficient data about certain populations leads to worse performance for those groups.

Measurement Bias: What you measure doesn’t capture what you care about. Example: using test scores as a proxy for job performance when test access correlates with socioeconomic status. The measurement introduces bias even if applied consistently.

Evaluation Bias: Testing only on certain groups. Example: validating model performance on training data demographics without testing on the full user population. Bias remains undetected because testing isn’t comprehensive.

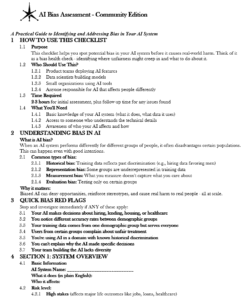

Assessment Framework Structure

Section 1: System Overview

Captures basic information: the AI system's name, its purpose in plain language, the populations it affects, and a risk level classification (high stakes for major life outcomes such as jobs, loans, and healthcare; medium stakes for user experience and recommendations; low stakes for personalization and entertainment).

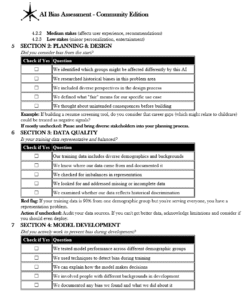

Section 2: Planning & Design

Five checkpoints evaluating whether the team considered bias from the start: identifying groups that may be affected differently, researching historical biases in the problem area, including diverse perspectives in design, defining what fairness means for the specific use case, and considering unintended consequences before building.

Section 3: Data Quality

Five checkpoints assessing whether training data is representative: including demographically diverse data, documenting data provenance, checking for representation imbalances, handling missing or incomplete data, and examining the data for historical discrimination.
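One way to approach the representation imbalance check is to compare each group's share of the training data against a reference share. The sketch below is illustrative only and is not taken from the checklist: the function name `representation_gaps`, the `reference_shares` input, and the 5-point `tolerance` are all assumptions that a team would need to choose for its own context.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Flag groups whose share of the data falls short of a reference share.

    groups: iterable of group labels, one per training record.
    reference_shares: dict mapping group label -> expected share (0..1).
        These reference values are an assumption supplied by the caller,
        for example shares of the intended user population.
    tolerance: hypothetical threshold; a shortfall larger than this is flagged.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            flagged[group] = {"observed": observed, "expected": expected}
    return flagged


# Example: group "b" makes up 10% of the data but 40% of the reference population.
sample_groups = ["a"] * 90 + ["b"] * 10
gaps = representation_gaps(sample_groups, {"a": 0.6, "b": 0.4})
```

A flagged group is a prompt for further investigation rather than proof of bias; the right reference population depends on who the system will actually affect.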

Section 4: Model Development

Five checkpoints evaluating active bias prevention during development: testing performance across demographic groups, using bias detection techniques, being able to explain decisions, involving people from diverse backgrounds in development, and documenting bias findings.

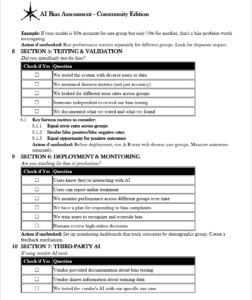

Section 5: Testing & Validation

Five checkpoints for bias-specific testing: testing with diverse users and data, measuring fairness metrics (not just accuracy), examining whether error rates differ across groups, obtaining independent review of bias testing, and documenting the testing. This section includes a fairness metric reference covering equal error rates, similar false positive and false negative rates, and equal opportunity for positive outcomes.
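The fairness metrics named in this section can be computed per group from binary labels and predictions. The following is a minimal sketch, assuming binary 0/1 outcomes and a group label for each record; the function names `group_error_rates` and `max_gap` are hypothetical and not part of the checklist. Here "equal opportunity" is read as similar true positive rates across groups, which is a common interpretation.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Return per-group false positive, false negative, and true positive rates."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "fp" if pred == 1 else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[group] = {
            # A rate is None when the group has no records of that class.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
            "tpr": c["tp"] / positives if positives else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in one metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


# Toy data: two groups of four records each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = group_error_rates(y_true, y_pred, groups)
tpr_gap = max_gap(rates, "tpr")  # equal opportunity gap
```

In this toy data both groups have the same overall accuracy (3 of 4 correct), yet group "a" receives more false positives and group "b" more false negatives, which is the kind of disparity that accuracy alone hides.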

Section 6: Deployment & Monitoring

Six checkpoints for watching for bias in production: disclosing AI involvement to users, providing a mechanism for reporting unfair treatment, monitoring performance across groups over time, planning how to respond to bias complaints, training users to recognize bias and override the system, and requiring human review of high-stakes decisions.

Section 7: Third-Party AI

Five checkpoints for evaluating vendor AI tools: whether the vendor provides bias testing documentation, whether it shares training data information, whether the tool has been tested for your specific use case, whether the contract includes bias monitoring requirements, and whether procedures exist for reporting vendor bias issues.

Bias Risk Scoring System

Scores are aggregated across the 35 checkpoints and interpreted in four tiers: 30-35 checks (strong bias awareness and mitigation), 20-29 (good foundation with gaps to address), 10-19 (significant bias risks requiring action before deployment), and under 10 (high bias risk prohibiting deployment without major work).
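The four tiers can be expressed as a simple lookup. This is a sketch of the thresholds as described on this page, not code shipped with the checklist; the function name `interpret_bias_score` is hypothetical.

```python
def interpret_bias_score(checks_passed: int, total: int = 35) -> str:
    """Map the number of 'yes' checkpoints to one of the four tiers."""
    if not 0 <= checks_passed <= total:
        raise ValueError(f"checks_passed must be between 0 and {total}")
    if checks_passed >= 30:
        return "strong bias awareness and mitigation"
    if checks_passed >= 20:
        return "good foundation with gaps to address"
    if checks_passed >= 10:
        return "significant bias risks requiring action before deployment"
    return "high bias risk prohibiting deployment without major work"


# Example: a team answering "yes" to 22 checkpoints.
tier = interpret_bias_score(22)
```

The total count alone does not show which sections the gaps fall in, so it is worth reading the tier alongside the per-section results.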

Mitigation Technique Quick Reference

Three-phase guidance covering data collection approaches (oversampling underrepresented groups, using synthetic data for balance, removing or adjusting biased historical data), model training techniques (fairness constraints in algorithms, adversarial debiasing, comparing multiple models), and post-deployment strategies (human oversight for borderline decisions, user appeal mechanisms, continuous monitoring and retraining).
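As an illustration of the first data collection technique, the sketch below oversamples underrepresented groups by random duplication until every group matches the size of the largest one. It assumes records are dictionaries with a group field; the function name `oversample_to_balance` and the `group_key` parameter are hypothetical. Random duplication is only one of several oversampling strategies, and it does not add new information about the smaller groups.

```python
import random


def oversample_to_balance(records, group_key, seed=0):
    """Duplicate records at random so every group matches the largest group."""
    if not records:
        return []
    rng = random.Random(seed)  # fixed seed keeps the result reproducible

    by_group = {}
    for record in records:
        by_group.setdefault(record[group_key], []).append(record)

    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced


# Example: six records from group "a", two from group "b".
data = [{"group": "a", "x": i} for i in range(6)] + [
    {"group": "b", "x": i} for i in range(2)
]
balanced = oversample_to_balance(data, "group")
```

Oversampling should be applied to training data only; evaluating on duplicated records would overstate performance for the smaller groups.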

Comparison Table: Informal Bias Checking vs. Structured Assessment

| Aspect | Ad Hoc Approach | Bias Assessment Checklist |
|---|---|---|
| Planning Consideration | Bias addressed reactively when problems arise | Five-point pre-development bias consideration framework |
| Data Evaluation | Assumption that available data is adequate | Five-checkpoint data quality assessment including representation analysis |
| Performance Testing | Overall accuracy measurement only | Separate performance metrics across demographic groups with fairness criteria |
| Monitoring | User complaints as primary signal | Six-point systematic monitoring including cross-group tracking and feedback mechanisms |
| Third-Party Accountability | Accepting vendor opacity about bias testing | Five-point vendor assessment with contractual transparency requirements |
| Documentation | Informal awareness without systematic recording | Structured documentation of bias findings and mitigation actions |

FAQ Section

Q: What if we don’t collect demographic data about users?

A: Not measuring doesn’t mean bias doesn’t exist. It means you’re blind to it. The checklist addresses this common misconception explicitly. Teams need approaches to evaluate whether their AI performs differently for different groups, even if they don’t directly collect protected attributes. Performance disparities can emerge from proxy variables or historical patterns in training data. The assessment helps identify these risks even without explicit demographic data collection.

Q: Do we need bias assessment if our AI doesn’t make high-stakes decisions?

A: Yes, though the depth of assessment can match the risk level. The risk classification framework (high/medium/low stakes) helps teams calibrate their bias evaluation. Even lower-stakes applications like content recommendations can perpetuate stereotypes or create unfair user experiences. The checklist’s scoring system helps teams understand whether their practices match their risk profile.

Q: What if we’re using third-party AI tools like vendor APIs?

A: You remain accountable for bias in your application even when using external AI. Section 7 provides a five-point assessment specifically for vendor AI, including documentation requirements, training data transparency, use case testing, and contractual obligations. Many vendors cite “proprietary” technology as justification for opacity. The checklist explicitly addresses this issue and provides evaluation criteria regardless of vendor cooperation.

Q: Our team is diverse. Does that mean our AI won’t be biased?

A: No. The checklist explicitly addresses this misconception in the Common Mistakes section. Diverse teams help but don’t eliminate bias in data or algorithms. Historical bias in training data, representation imbalances, measurement choices, and evaluation practices can introduce bias regardless of team composition. The structured assessment evaluates these dimensions systematically.

Q: How do we fix bias once we find it?

A: The Mitigation Technique Quick Reference provides three-phase guidance covering data collection strategies, model training approaches, and post-deployment interventions. The checklist also includes “When to Get More Help” guidance for situations requiring expert assistance, such as high-stakes decisions, significant bias without clear solutions, regulated industries, community concerns, or team expertise gaps.

Q: What format is this checklist?

A: The checklist is provided as a Microsoft Word document, which allows checkbox completion, note-taking, and adaptation to your organization. Teams can save completed assessments as evidence of bias evaluation practices and track progress over time.

Ideal For

- AI Product Teams deploying features in domains with known historical discrimination patterns

- Data Scientists building models who need fairness evaluation beyond accuracy metrics

- Compliance Officers implementing equity requirements for AI systems

- Small Organizations using AI tools without dedicated fairness or ethics resources

- Anyone Deploying AI in hiring, lending, housing, healthcare, or other high-stakes domains

- Teams Using Vendor AI needing to evaluate bias despite limited transparency from providers