What happens when generative AI models train recursively on each others’ outputs?

cs.AI updates on arXiv.org

arXiv:2505.21677v3 Announce Type: replace-cross

Abstract: The internet serves as a common source of training data for generative AI (genAI) models but is increasingly populated with AI-generated content. This duality raises the possibility that future genAI models may be trained on other models’ generated outputs. Prior work has studied consequences of models training on their own generated outputs, but limited work has considered what happens if models ingest content produced by other models. Given society’s increasing dependence on genAI tools, understanding such data-mediated model interactions is critical. This work provides empirical evidence for how data-mediated interactions might unfold in practice, develops a theoretical model for this interactive training process, and experimentally validates the theory. We find that data-mediated interactions can benefit models by exposing them to novel concepts perhaps missed in original training data, but also can homogenize their performance on shared tasks.
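
The homogenization effect described in the abstract can be illustrated with a toy simulation. This is a minimal sketch, not the paper's theoretical model: it assumes each model can be reduced to a per-task skill score and that training on the other model's outputs acts as a linear blend. The function `interact`, the `mix` fraction, and the task names are all hypothetical.

```python
# Toy sketch (NOT the paper's model): each "model" is a dict of per-task
# skill scores in [0, 1]. Each round, every model is refit on a blend of
# its own knowledge and the other model's outputs.

def interact(skills_a, skills_b, mix=0.25, rounds=5):
    """Return both skill dicts after `rounds` of mutual training.

    `mix` is the assumed fraction of each model's training data that
    comes from the other model's generated outputs.
    """
    a, b = dict(skills_a), dict(skills_b)
    for _ in range(rounds):
        # Both updates use the previous round's values.
        new_a = {t: (1 - mix) * a[t] + mix * b[t] for t in a}
        new_b = {t: (1 - mix) * b[t] + mix * a[t] for t in b}
        a, b = new_a, new_b
    return a, b


# Hypothetical starting points: model B never saw one concept.
model_a = {"shared_task": 0.9, "concept_only_a_saw": 0.8}
model_b = {"shared_task": 0.5, "concept_only_a_saw": 0.0}

after_a, after_b = interact(model_a, model_b)
for task in model_a:
    gap_before = abs(model_a[task] - model_b[task])
    gap_after = abs(after_a[task] - after_b[task])
    print(f"{task}: gap {gap_before:.3f} -> {gap_after:.3f}")
```

Under these assumptions model B picks up the concept it never saw, while the gap between the two models shrinks every round. The linear blend also pulls model A's scores down, which is an artifact of the toy update rule and not a claim from the paper.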

Interactive Learning for LLM Reasoning

cs.AI updates on arXiv.org

arXiv:2509.26306v3 Announce Type: replace

Abstract: Existing multi-agent learning approaches have developed interactive training environments to explicitly promote collaboration among multiple Large Language Models (LLMs), thereby constructing stronger multi-agent systems (MAS). However, during inference, they require re-executing the MAS to obtain final solutions. This diverges from human cognition, in which individuals enhance their reasoning capabilities through interactions with others and later resolve questions independently. To investigate whether multi-agent interaction can enhance LLMs’ independent problem-solving ability, we introduce ILR, a novel co-learning framework for MAS that integrates two key components: Dynamic Interaction and Perception Calibration. Specifically, Dynamic Interaction first adaptively selects either cooperative or competitive strategies depending on question difficulty and model ability. LLMs then exchange information through Idea3 (Idea Sharing, Idea Analysis, and Idea Fusion), an innovative interaction paradigm designed to mimic human discussion, before deriving their respective final answers. In Perception Calibration, ILR employs Group Relative Policy Optimization (GRPO) to train LLMs while integrating one LLM’s reward distribution characteristics into another’s reward function, thereby enhancing the cohesion of multi-agent interactions. We validate ILR on three LLMs across two model families of varying scales, evaluating performance on five mathematical benchmarks and one coding benchmark. Experimental results show that ILR consistently outperforms single-agent learning, yielding an improvement of up to 5% over the strongest baseline. We further discover that Idea3 can enhance the robustness of stronger LLMs during multi-agent inference, and that dynamic interaction types can boost multi-agent learning compared to pure cooperative or competitive strategies.
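
For readers unfamiliar with GRPO, the core idea is that each sampled answer is scored relative to the other answers drawn for the same question. The sketch below shows only that standard group-relative normalization. The abstract does not specify how ILR folds another LLM's reward distribution into the reward function, so that step is not shown; the function name and sample rewards are hypothetical.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of sampled answers.

    Each advantage is (reward - group mean) / group standard deviation.
    `eps` guards against division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for five answers sampled for one question
# (1.0 = correct, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Correct answers receive positive advantages and incorrect ones negative, so the policy update needs no separate value network to supply a baseline.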

Thinking Machines Launches Tinker: A Low-Level Training API that Abstracts Distributed LLM Fine-Tuning without Hiding the Knobs

MarkTechPost

Thinking Machines has released Tinker, a Python API that lets researchers and engineers write training loops locally while the platform executes them on managed distributed GPU clusters. The pitch is narrow and technical: keep full control of data, objectives, and optimization steps; hand off scheduling, fault tolerance, and multi-node orchestration. The service is in private

The post Thinking Machines Launches Tinker: A Low-Level Training API that Abstracts Distributed LLM Fine-Tuning without Hiding the Knobs appeared first on MarkTechPost.

5 Fun AI Agent Projects for Absolute Beginners

KDnuggets

Build these AI agents that actually do useful work (and teach you a bunch).

A Gentle Introduction to MCP Servers and Clients

KDnuggets

Get a gentle introduction to the standard that defines how artificial intelligence systems connect with the outside world.

ViLBias: Detecting and Reasoning about Bias in Multimodal Content

cs.AI updates on arXiv.org

arXiv:2412.17052v4 Announce Type: replace

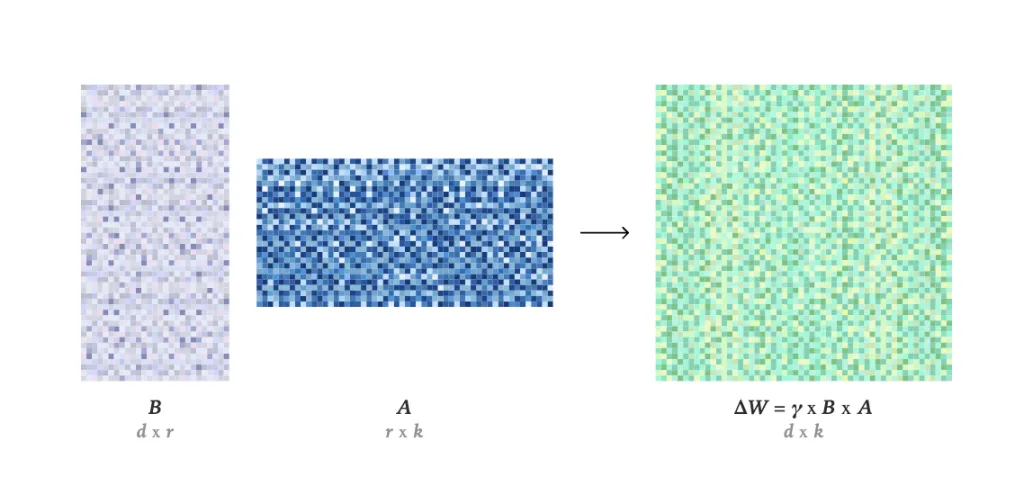

Abstract: Detecting bias in multimodal news requires models that reason over text–image pairs, not just classify text. In response, we present ViLBias, a VQA-style benchmark and framework for detecting and reasoning about bias in multimodal news. The dataset comprises 40,945 text–image pairs from diverse outlets, each annotated with a bias label and concise rationale using a two-stage LLM-as-annotator pipeline with hierarchical majority voting and human-in-the-loop validation. We evaluate Small Language Models (SLMs), Large Language Models (LLMs), and Vision–Language Models (VLMs) across closed-ended classification and open-ended reasoning (oVQA), and compare parameter-efficient tuning strategies. Results show that incorporating images alongside text improves detection accuracy by 3–5%, and that LLMs/VLMs better capture subtle framing and text–image inconsistencies than SLMs. Parameter-efficient methods (LoRA/QLoRA/Adapters) recover 97–99% of full fine-tuning performance with $<5\%$ trainable parameters. For oVQA, reasoning accuracy spans 52–79% and faithfulness 68–89%, both improved by instruction tuning; closed accuracy correlates strongly with reasoning ($r = 0.91$). ViLBias offers a scalable benchmark and strong baselines for multimodal bias detection and rationale quality.
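
To see why LoRA-style methods train so few parameters, it helps to count them for a single weight matrix. The sketch below uses the standard LoRA parameterization, in which a frozen `d_out x d_in` weight is adapted by two low-rank factors of rank `r`. The layer size and rank are hypothetical; the abstract does not state which ranks or layers the authors used.

```python
def lora_trainable_fraction(d_out, d_in, rank):
    """Fraction of one weight matrix's parameters that LoRA trains.

    Full fine-tuning updates d_out * d_in weights. LoRA freezes them and
    trains two factors, B (d_out x rank) and A (rank x d_in).
    """
    full_params = d_out * d_in
    lora_params = rank * (d_out + d_in)
    return lora_params / full_params


# Hypothetical example: a 4096 x 4096 projection adapted at rank 16.
frac = lora_trainable_fraction(d_out=4096, d_in=4096, rank=16)
print(f"trainable fraction: {frac:.4%}")
```

For square layers the fraction is `2 * rank / d`, so a small rank on a wide layer keeps the trainable share well under the 5% mark mentioned in the abstract.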

What Makes a Language Look Like Itself?

Towards Data Science

How simple statistics reveal the visual fingerprints of 20 languages

The post What Makes a Language Look Like Itself? appeared first on Towards Data Science.

Uncovering Vulnerabilities of LLM-Assisted Cyber Threat Intelligence

cs.AI updates on arXiv.org

arXiv:2509.23573v2 Announce Type: replace-cross

Abstract: Large Language Models (LLMs) are intensively used to assist security analysts in counteracting the rapid exploitation of cyber threats, wherein LLMs offer cyber threat intelligence (CTI) to support vulnerability assessment and incident response. While recent work has shown that LLMs can support a wide range of CTI tasks such as threat analysis, vulnerability detection, and intrusion defense, significant performance gaps persist in practical deployments. In this paper, we investigate the intrinsic vulnerabilities of LLMs in CTI, focusing on challenges that arise from the nature of the threat landscape itself rather than the model architecture. Using large-scale evaluations across multiple CTI benchmarks and real-world threat reports, we introduce a novel categorization methodology that integrates stratification, autoregressive refinement, and human-in-the-loop supervision to reliably analyze failure instances. Through extensive experiments and human inspections, we reveal three fundamental vulnerabilities: spurious correlations, contradictory knowledge, and constrained generalization, that limit LLMs in effectively supporting CTI. Subsequently, we provide actionable insights for designing more robust LLM-powered CTI systems to facilitate future research.

PodEval: A Multimodal Evaluation Framework for Podcast Audio Generation

cs.AI updates on arXiv.org

arXiv:2510.00485v1 Announce Type: cross

Abstract: Recently, an increasing number of multimodal (text and audio) benchmarks have emerged, primarily focusing on evaluating models’ understanding capability. However, exploration into assessing generative capabilities remains limited, especially for open-ended long-form content generation. Significant challenges include the absence of a reference standard answer, the absence of unified evaluation metrics, and uncontrollable human judgments. In this work, we take podcast-like audio generation as a starting point and propose PodEval, a comprehensive and well-designed open-source evaluation framework. In this framework: 1) We construct a real-world podcast dataset spanning diverse topics, serving as a reference for human-level creative quality. 2) We introduce a multimodal evaluation strategy and decompose the complex task into three dimensions: text, speech and audio, with different evaluation emphasis on “Content” and “Format”. 3) For each modality, we design corresponding evaluation methods, involving both objective metrics and subjective listening tests. We leverage representative podcast generation systems (including open-source, closed-source, and human-made) in our experiments. The results offer in-depth analysis and insights into podcast generation, demonstrating the effectiveness of PodEval in evaluating open-ended long-form audio. This project is open-source to facilitate public use: https://github.com/yujxx/PodEval.

Privacy-Preserving Learning-Augmented Data Structures

cs.AI updates on arXiv.org

arXiv:2510.00165v1 Announce Type: cross

Abstract: Learning-augmented data structures use predicted frequency estimates to retrieve frequently occurring database elements faster than standard data structures. Recent work has developed data structures that optimally exploit these frequency estimates while maintaining robustness to adversarial prediction errors. However, the privacy and security implications of this setting remain largely unexplored.

In the event of a security breach, data structures should reveal minimal information beyond their current contents. This is even more crucial for learning-augmented data structures, whose layout adapts to the data. A data structure is history independent if its memory representation reveals no information about past operations except what is inferred from its current contents. In this work, we take the first step towards privacy and security guarantees in this setting by proposing the first learning-augmented data structure that is strongly history independent, robust, and supports dynamic updates.

To achieve this, we introduce two techniques: thresholding, which automatically makes any learning-augmented data structure robust, and pairing, a simple technique that provides strong history independence in the dynamic setting. Our experimental results demonstrate a tradeoff between security and efficiency but are still competitive with the state of the art.
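
The definition of history independence given above can be made concrete with a small contrast. This is only an illustration of the definition at the logical level. It does not implement the paper's thresholding or pairing techniques, and the class names are hypothetical. One structure stores elements in a canonical sorted order, so its layout depends only on its contents; the other appends in arrival order, so its layout leaks the insertion history.

```python
class CanonicalSet:
    """Set whose stored layout is a function of its contents only."""

    def __init__(self):
        self._slots = ()  # always kept sorted

    def insert(self, x):
        if x not in self._slots:
            self._slots = tuple(sorted(self._slots + (x,)))

    def delete(self, x):
        self._slots = tuple(v for v in self._slots if v != x)

    def layout(self):
        return self._slots


class AppendLog:
    """Counter-example: the layout reveals the order of insertions."""

    def __init__(self):
        self._slots = []

    def insert(self, x):
        if x not in self._slots:
            self._slots.append(x)

    def delete(self, x):
        if x in self._slots:
            self._slots.remove(x)

    def layout(self):
        return tuple(self._slots)


def run(structure, ops):
    """Apply a list of (method_name, value) operations, return the layout."""
    for op, x in ops:
        getattr(structure, op)(x)
    return structure.layout()


# Two different histories that end with the same contents {1, 2, 3}.
history_1 = [("insert", 3), ("insert", 1), ("insert", 2)]
history_2 = [("insert", 2), ("insert", 9), ("insert", 3),
             ("insert", 1), ("delete", 9)]

canon_1 = run(CanonicalSet(), history_1)
canon_2 = run(CanonicalSet(), history_2)
log_1 = run(AppendLog(), history_1)
log_2 = run(AppendLog(), history_2)

print("canonical layouts match:", canon_1 == canon_2)
print("append-log layouts match:", log_1 == log_2)
```

An attacker who sees the canonical layout learns only the current contents, whereas the append-log layout distinguishes the two histories. A learning-augmented structure additionally rearranges itself around predicted frequencies, which is why the abstract treats history independence there as harder to achieve.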
