The RansomHouse ransomware-as-a-service (RaaS) has recently upgraded its encryptor, switching from a relatively simple single-phase linear technique to a more complex, multi-layered method. […]

NVIDIA AI Releases Nemotron 3: A Hybrid Mamba Transformer MoE Stack for Long Context Agentic AI (MarkTechPost)

NVIDIA has released the Nemotron 3 family of open models as part of a full stack for agentic AI, including model weights, datasets, and reinforcement learning tools. The family comes in three sizes (Nano, Super, and Ultra) and targets multi-agent systems that need long-context reasoning with tight control over inference cost. Nano has about […]

The post NVIDIA AI Releases Nemotron 3: A Hybrid Mamba Transformer MoE Stack for Long Context Agentic AI appeared first on MarkTechPost.

Threat hunters have discerned new activity associated with an Iranian threat actor known as Infy (aka Prince of Persia), nearly five years after the hacking group was observed targeting victims in Sweden, the Netherlands, and Turkey. “The scale of Prince of Persia’s activity is more significant than we originally anticipated,” Tomer Bar, vice president of […]

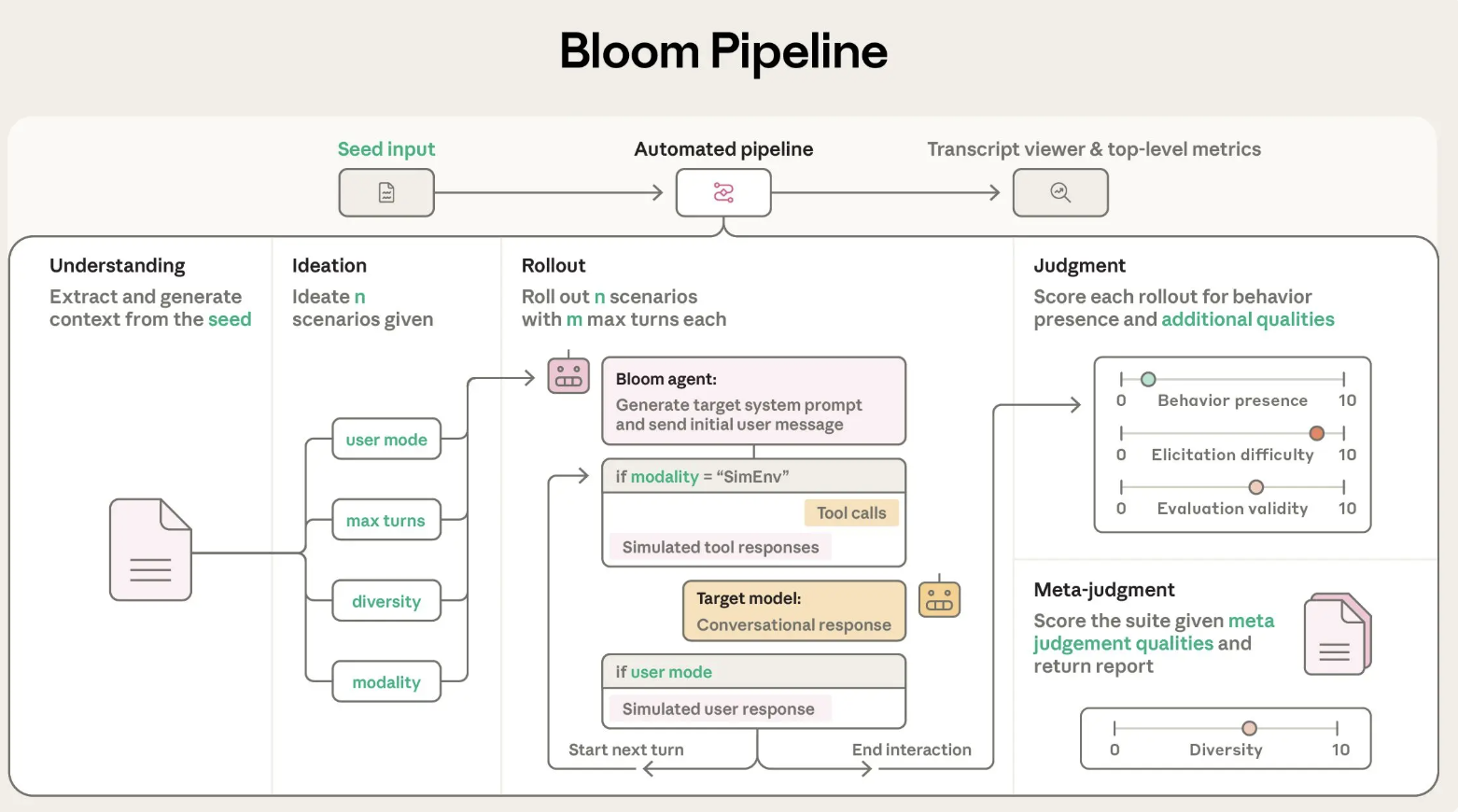

Anthropic AI Releases Bloom: An Open-Source Agentic Framework for Automated Behavioral Evaluations of Frontier AI Models (MarkTechPost)

Anthropic has released Bloom, an open-source agentic framework that automates behavioral evaluations for frontier AI models. The system takes a researcher-specified behavior and builds targeted evaluations that measure how often and how strongly that behavior appears in realistic scenarios. Why Bloom? Behavioral evaluations for safety and alignment are expensive to design and maintain.

The post Anthropic AI Releases Bloom: An Open-Source Agentic Framework for Automated Behavioral Evaluations of Frontier AI Models appeared first on MarkTechPost.

Understanding the Generative AI User (Towards Data Science)

What do regular technology users think (and know) about AI?

The post Understanding the Generative AI User appeared first on Towards Data Science.

Tools for Your LLM: a Deep Dive into MCP (Towards Data Science)

MCP is a key enabler for turning your LLM into an agent: it provides the model with tools to retrieve real-time information or perform actions.

In this deep dive we cover how MCP works, when to use it, and what to watch out for.

The post Tools for Your LLM: a Deep Dive into MCP appeared first on Towards Data Science.

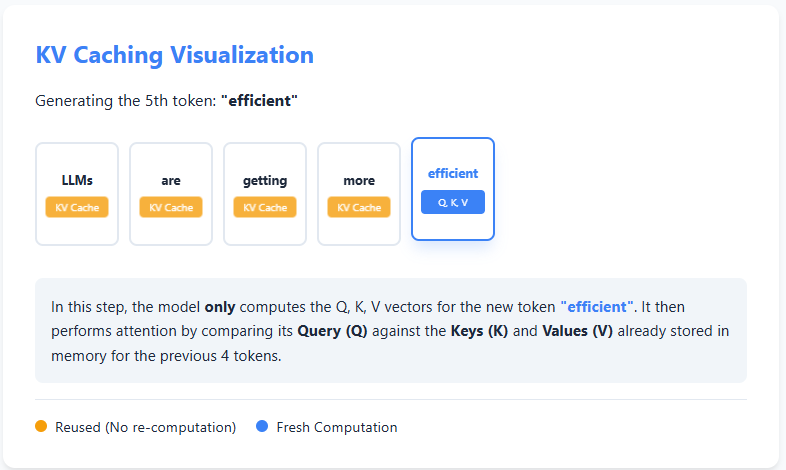

AI Interview Series #4: Explain KV Caching (MarkTechPost)

Question: You’re deploying an LLM in production. Generating the first few tokens is fast, but as the sequence grows, each additional token takes progressively longer to generate, even though the model architecture and hardware remain the same. If compute isn’t the primary bottleneck, what inefficiency is causing this slowdown, and how would you redesign the inference […]

The post AI Interview Series #4: Explain KV Caching appeared first on MarkTechPost.
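
The excerpt above is cut off before the answer, but the headline points to KV caching. As a rough illustration only (not taken from the article), the sketch below uses a toy single-head attention layer in plain Python to show the redundancy that a key/value cache removes: without a cache, every decoding step re-projects the keys and values of the whole prefix. All names here (`project`, `attend`, `decode_without_cache`, `decode_with_cache`) and the weight matrices are hypothetical.

```python
import math

def project(x, w):
    """Multiply vector x by matrix w (given as a list of rows)."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) for j in range(len(w[0]))]

def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w / total * v[j] for w, v in zip(weights, values)) for j in range(dim)]

def decode_without_cache(tokens, wq, wk, wv):
    """Recompute K and V for the entire prefix at every step."""
    outputs, projections = [], 0
    for t in range(1, len(tokens) + 1):
        prefix = tokens[:t]
        keys = [project(x, wk) for x in prefix]
        values = [project(x, wv) for x in prefix]
        projections += 2 * t  # t key projections + t value projections
        outputs.append(attend(project(prefix[-1], wq), keys, values))
    return outputs, projections

def decode_with_cache(tokens, wq, wk, wv):
    """Project each token's K and V once and append them to a cache."""
    keys, values, outputs, projections = [], [], [], 0
    for x in tokens:
        keys.append(project(x, wk))
        values.append(project(x, wv))
        projections += 2  # one key projection + one value projection
        outputs.append(attend(project(x, wq), keys, values))
    return outputs, projections
```

Both functions return the same attention outputs. For a sequence of n tokens the uncached version performs n(n+1) key/value projections, while the cached version performs 2n, at the cost of keeping the cached keys and values in memory. The attention step itself still reads the whole cache on every token, so per-token cost continues to grow with sequence length even when caching is used.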

The U.S. Department of Justice (DoJ) this week announced the indictment of 54 individuals in connection with a multi-million dollar ATM jackpotting scheme. The large-scale conspiracy involved deploying malware named Ploutus to hack into automated teller machines (ATMs) across the U.S. and force them to dispense cash. The indicted members are alleged to be part […]

The UEFI firmware implementation in some motherboards from ASUS, Gigabyte, MSI, and ASRock is vulnerable to direct memory access (DMA) attacks that can bypass early-boot memory protections. […]

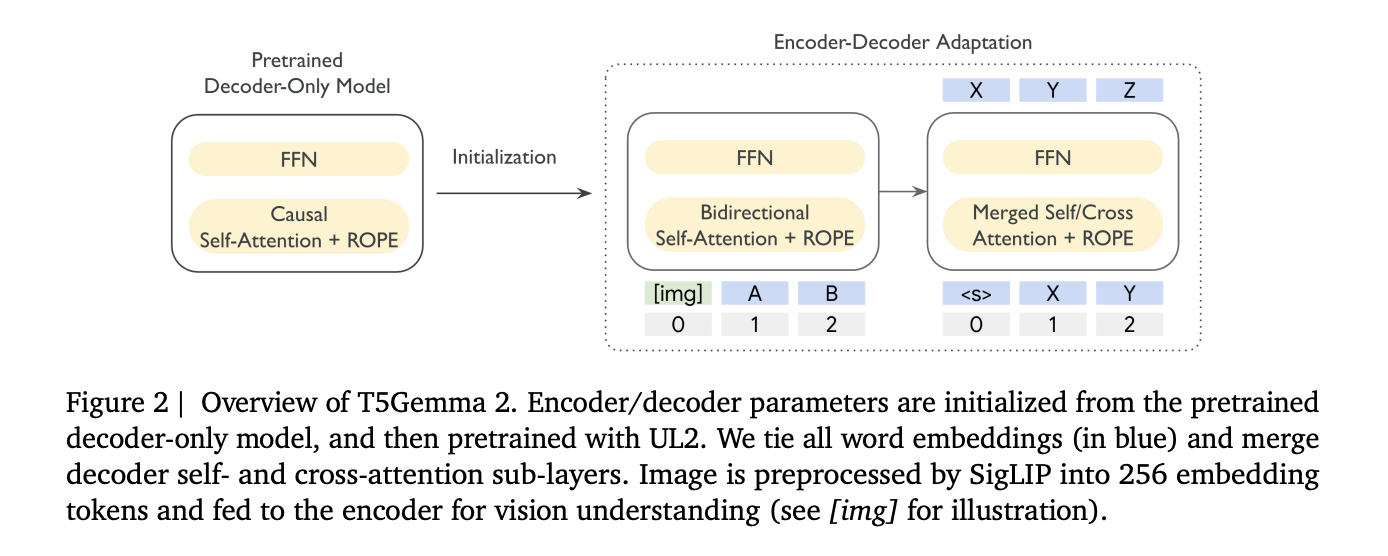

Google Introduces T5Gemma 2: Encoder Decoder Models with Multimodal Inputs via SigLIP and 128K Context (MarkTechPost)

Google has released T5Gemma 2, a family of open encoder-decoder Transformer checkpoints built by adapting Gemma 3 pretrained weights into an encoder-decoder layout, then continuing pretraining with the UL2 objective. The release is pretrained only, intended for developers to post-train for specific tasks, and Google explicitly notes it is not releasing post-trained or IT checkpoints […]

The post Google Introduces T5Gemma 2: Encoder Decoder Models with Multimodal Inputs via SigLIP and 128K Context appeared first on MarkTechPost.
