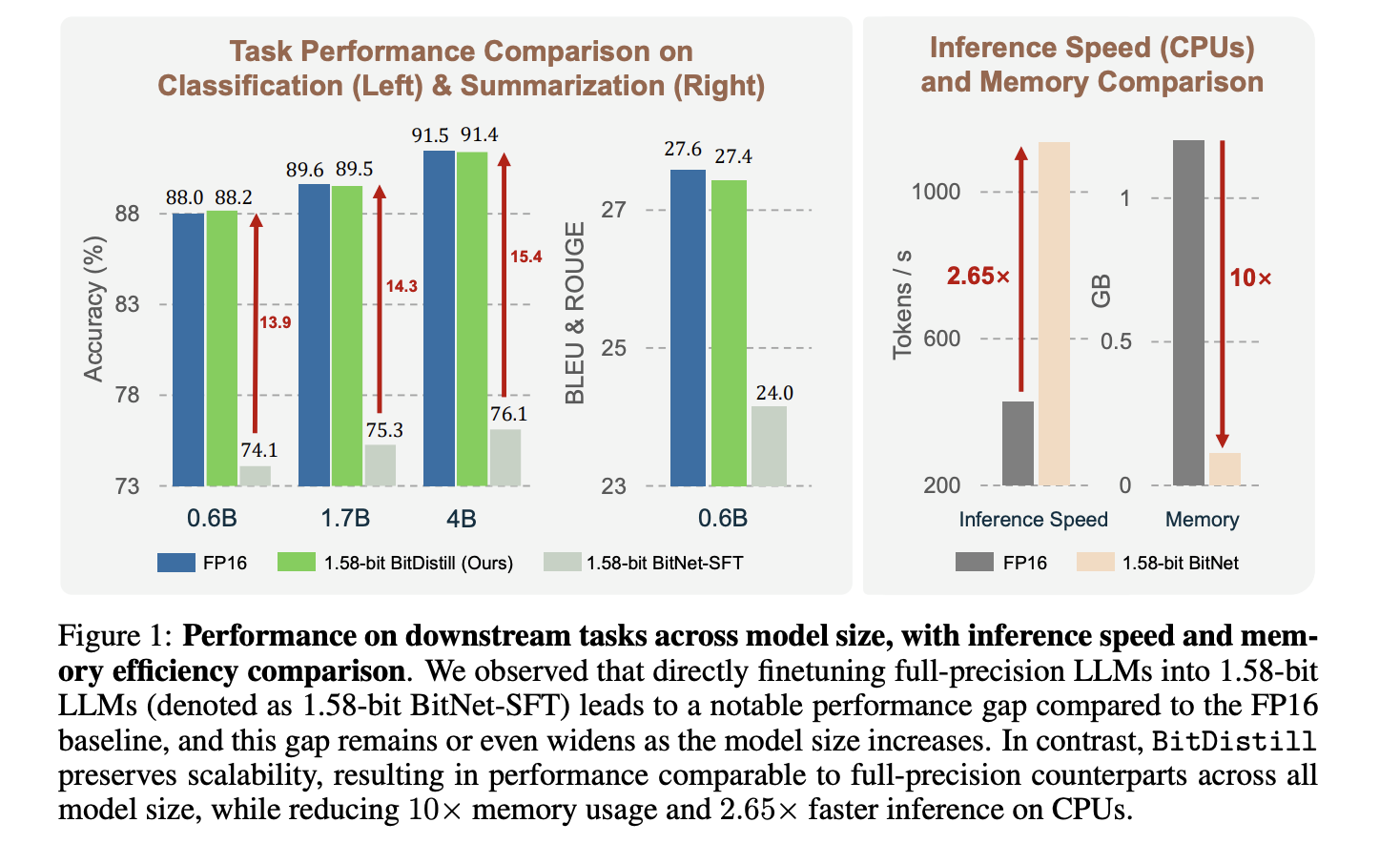

Microsoft Research proposes BitNet Distillation (BitDistill), a pipeline that converts existing full-precision LLMs into 1.58-bit BitNet students for specific tasks while keeping accuracy close to the FP16 teacher and improving CPU efficiency. The method combines SubLN-based architectural refinement, continued pre-training, and dual-signal distillation from logits and multi-head attention relations. Reported results include up to 10x memory savings and about 2.65x CPU speedup.
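To make "1.58-bit" concrete, here is a minimal sketch of ternary weight quantization. The summary above does not describe the quantizer, so this assumes the absmean scheme commonly associated with BitNet b1.58: divide by the mean absolute weight, round, and clip to {-1, 0, +1}. The function names and sample weights are hypothetical and for illustration only.

```python
import math

def absmean_ternary_quantize(weights, eps=1e-8):
    """Quantize a flat list of floats to {-1, 0, +1} with one shared scale.

    Assumption: absmean scaling (mean of |w|), not confirmed by the summary.
    """
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round to the nearest integer, then clip into the ternary range.
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale

def dequantize(ternary, scale):
    """Approximate the original weights from ternary codes and the scale."""
    return [t * scale for t in ternary]

# Three possible values per weight carry log2(3) bits of information.
bits_per_weight = math.log2(3)  # about 1.585, hence "1.58-bit"

# Hypothetical example weights.
weights = [0.9, -0.05, 0.4, -1.2, 0.02, -0.6]
ternary, scale = absmean_ternary_quantize(weights)
approx = dequantize(ternary, scale)

print(ternary)                     # [1, 0, 1, -1, 0, -1]
print(round(bits_per_weight, 3))   # 1.585
print(round(16 / bits_per_weight, 1))  # FP16 bits / ternary bits: 10.1
```

As rough arithmetic, 16 bits per FP16 weight divided by about 1.58 bits per ternary weight gives close to 10x, which is in line with the "up to 10x" memory figure, though the summary does not say how that figure was measured.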

The post "Microsoft AI Proposes BitNet Distillation (BitDistill): A Lightweight Pipeline that Delivers up to 10x Memory Savings and about 2.65x CPU Speedup" appeared first on MarkTechPost.