- Version 1

- File Size 138.17 KB

- File Count 1

- Create Date October 4, 2025

- Last Updated October 20, 2025

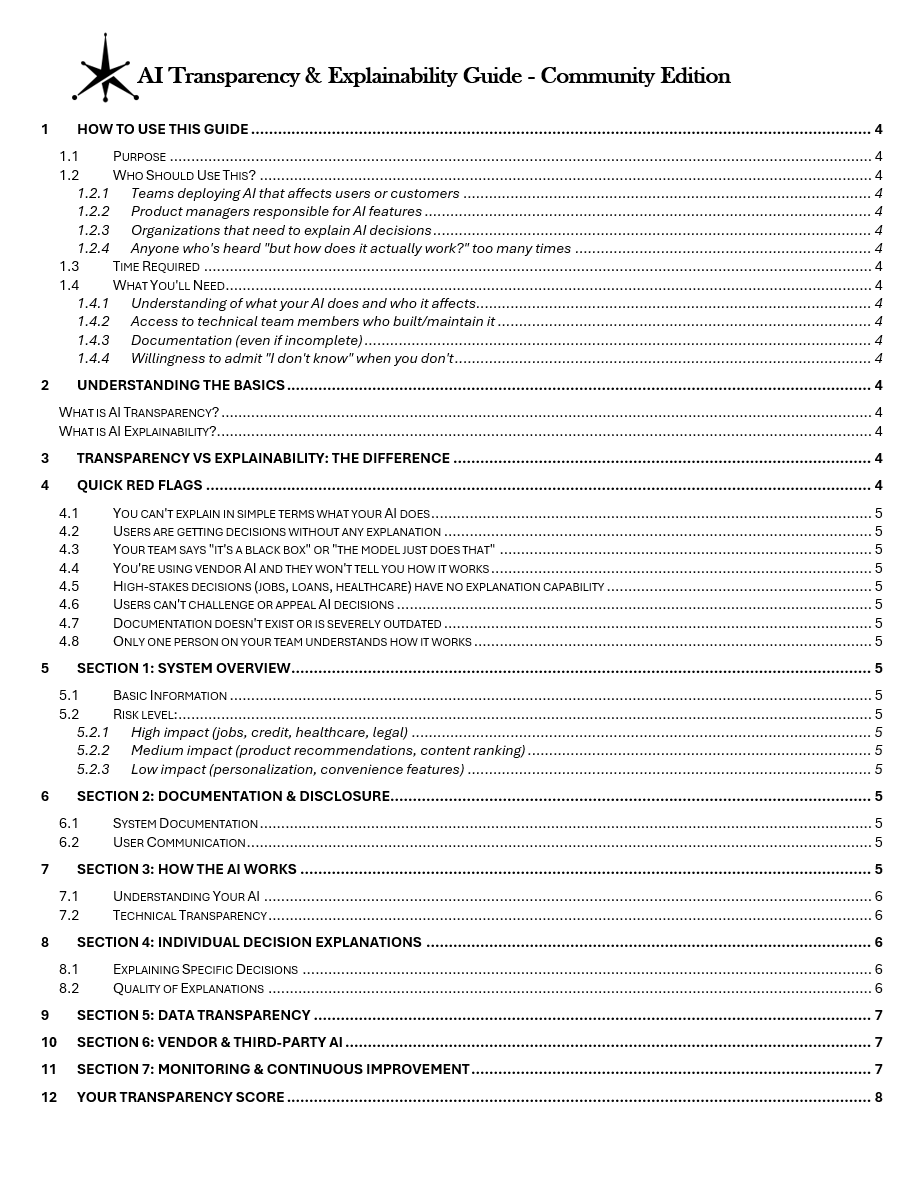

AI Transparency and Explainability Guide - Community Edition

A practical framework designed to help organizations build user trust through clear communication about AI capabilities, limitations, and decision-making processes.

What This Guide Provides

Users won't trust AI systems they don't understand. Regulators increasingly require explanations for automated decisions. Teams need structured approaches to document what their AI does, how it works, and why specific outcomes occur. This Community Edition guide provides assessment frameworks across seven critical transparency and explainability dimensions.

The guide uses checkbox-based evaluation across 42 transparency indicators, from basic system documentation through individual decision explanations and continuous monitoring. Organizations can complete the initial assessment in 2-3 hours, though implementing improvements and building explanation capabilities will require ongoing attention. The framework includes three-tier explainability levels matched to use case risk, helping teams provide appropriate transparency without over-engineering low-stakes applications.

What You Get:

- Seven-section assessment framework covering system overview through continuous improvement

- 42-point transparency scoring system with interpretation guidance

- Three-tier explainability level framework (Basic, Decision Factors, Detailed)

- Risk classification guidance (High/Medium/Low impact)

- Explanation technique recommendations for different model types

- "Grandma Test" communication framework for plain-language explanations

- Pre-deployment checklist with eight critical readiness criteria

- Regulatory considerations covering EU AI Act, GDPR, and US state laws

Designed For:

- Product teams deploying AI that affects users or customers

- Product managers responsible for AI feature transparency

- Organizations needing to explain AI decisions to stakeholders

- Teams implementing explainability requirements for regulatory compliance

Preview What's Inside: The guide contains seven assessment sections with checkbox frameworks, a transparency scoring matrix, explanation technique recommendations, common mistake warnings, and action planning templates. Supporting sections provide regulatory context, resource recommendations, and documentation templates.

Why AI Transparency and Explainability Matter

Organizations deploying AI face fundamental questions from users, regulators, and internal stakeholders: What does this AI do? How does it work? Why did it make this specific decision? What can I do if I disagree? Without structured approaches to answering these questions, organizations risk user distrust, regulatory challenges, and accountability failures when AI produces unexpected or problematic outcomes.

This guide distinguishes between transparency (explaining how the AI system works overall) and explainability (explaining why specific decisions happened for specific individuals). Both dimensions are necessary. Transparency without explainability leaves users unable to understand their individual outcomes. Explainability without transparency creates confusion about the broader system context.

The Transparency vs. Explainability Framework

Transparency addresses system-level understanding: what the AI does, what data it uses, how it was trained, and what its limitations are. Example from the guide: "This hiring tool ranks resumes based on skills, experience, and education from our job description."

Explainability addresses individual decision understanding: why the AI made a specific decision, what factors influenced the outcome, how confident the AI is, and what would need to change for a different result. Example from the guide: "Your resume ranked lower because it showed 2 years of required experience instead of the 5 years we specified."

The guide structures assessment across both dimensions, helping teams evaluate whether they can explain their AI at both the system level and the individual decision level.
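The individual-level example above ("2 years ... instead of the 5 years") can be sketched as a small gap-to-sentence generator. This is only an illustration, not the guide's method: the `Requirement` class, the `explain_gaps` function, and the field names are hypothetical, and a real system would derive the gaps from its actual model rather than from fixed thresholds.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Requirement:
    """One job requirement; the names and values used here are hypothetical."""
    name: str
    required: float
    unit: str


def explain_gaps(candidate: dict[str, float], requirements: list[Requirement]) -> list[str]:
    """Return one plain-language sentence per unmet requirement.

    Each sentence states what was found, what was expected, and what
    change would close the gap (a simple counterfactual).
    """
    sentences = []
    for req in requirements:
        actual = candidate.get(req.name, 0.0)
        if actual < req.required:
            gap = req.required - actual
            sentences.append(
                f"Your resume showed {actual:g} {req.unit} of {req.name} "
                f"instead of the {req.required:g} we specified; "
                f"{gap:g} more {req.unit} would meet this requirement."
            )
    return sentences


requirements = [Requirement("experience", 5, "years")]
for sentence in explain_gaps({"experience": 2}, requirements):
    print(sentence)
```

A candidate who meets every requirement gets an empty list, which a product could render as "no gaps found" rather than an empty explanation.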

Assessment Framework Structure

Section 1: System Overview

Basic information capture including AI system name, plain-language purpose description, decision authority (AI, humans, or both), and risk level classification aligned with impact on users (high impact for jobs/credit/healthcare/legal, medium for recommendations/ranking, low for personalization/convenience).

Section 2: Documentation & Disclosure

Ten-item assessment covering system documentation practices and user communication approaches. Evaluates whether organizations maintain current plain-language documentation, disclose AI involvement to users, explain limitations, notify users about changes, and provide challenge mechanisms.

Section 3: How the AI Works

Ten-item framework evaluating overall system understanding including general approach explanation, factor importance, training data documentation, performance characteristics, model cards, architecture understanding, behavior testing across user groups, failure mode identification, performance metric transparency, and decision boundary documentation.

Section 4: Individual Decision Explanations

Ten-item assessment for specific outcome explanation capabilities including user access to explanations, factor importance display, confidence indicators, counterfactual scenarios, actionable guidance, plain language usage, consistency, user testing, specificity, and honest limitation communication.

Section 5: Data Transparency

Six-item evaluation of data openness including training data provenance, quality disclosure, sensitive attribute usage (including proxy variables), individual data usage communication, processing step explanation, and data sheet documentation.

Section 6: Vendor & Third-Party AI

Six-item framework for external AI tool transparency including vendor documentation provision, training data information sharing, explanation capability for vendor decisions, update notification, contractual transparency requirements, and use case testing.

Section 7: Monitoring & Continuous Improvement

Six-item assessment of ongoing transparency maintenance including explanation effectiveness tracking, decision logging for traceability, user feedback monitoring, documentation review cadence, explanation quality auditing, and challenge/appeal frequency tracking.
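As a rough illustration of decision logging for traceability, the sketch below builds one structured record per decision and serializes it as a JSON line. The field names (`decision_id`, `model_version`, `explanation_shown`, `appealed`, and so on) are assumptions chosen for this example, not fields prescribed by the guide.

```python
import json
from datetime import datetime, timezone


def build_log_record(decision_id, model_version, outcome, confidence,
                     top_factors, explanation_shown):
    """Collect the fields needed to trace and audit one decision later.

    All field names are hypothetical; adapt them to your own system.
    """
    return {
        "decision_id": decision_id,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "outcome": outcome,
        "confidence": confidence,
        "top_factors": top_factors,
        "explanation_shown": explanation_shown,
        # Updated later if the user challenges the decision, which
        # supports challenge/appeal frequency tracking.
        "appealed": False,
    }


def to_json_line(record):
    """Serialize a record as one line, suitable for an append-only log."""
    return json.dumps(record, sort_keys=True)


record = build_log_record(
    decision_id="example-001",
    model_version="ranker-1.0",
    outcome="ranked_lower",
    confidence=0.75,
    top_factors=[{"factor": "experience_years", "effect": "lowered"}],
    explanation_shown="Resume showed 2 years of experience instead of 5.",
)
print(to_json_line(record))
```

Storing the explanation text that the user actually saw, alongside the model version, makes it possible to audit explanation quality after the model or the wording changes.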

Transparency Scoring System

Aggregated scoring across all 42 checkmarks with four interpretation tiers: 35-42 checks (excellent transparency), 25-34 (good foundation with improvement room), 15-24 (significant gaps requiring attention before wider deployment), under 15 (critical issues with high risk).
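The four interpretation tiers can be expressed as a small lookup. This is a minimal sketch using the thresholds stated above; the function name `interpret_score` is hypothetical.

```python
def interpret_score(checks: int, total: int = 42) -> str:
    """Map a count of completed checks to the interpretation tier."""
    if not 0 <= checks <= total:
        raise ValueError(f"checks must be between 0 and {total}")
    if checks >= 35:
        return "excellent transparency"
    if checks >= 25:
        return "good foundation with improvement room"
    if checks >= 15:
        return "significant gaps requiring attention before wider deployment"
    return "critical issues with high risk"


print(interpret_score(28))
```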

Three-Tier Explainability Levels

Framework matching explanation depth to use case risk:

- Level 1 (Basic Transparency): Minimum for any AI. Users know AI is involved, general description of what AI does, help/appeal information.

- Level 2 (Decision Factors): For most applications. Top influencing factors, general confidence level, simple what-if scenarios.

- Level 3 (Detailed Explanations): For high-stakes decisions. Specific factor contributions, precise confidence scores, detailed counterfactuals, full decision audit trail.
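One way to make the risk-to-level matching concrete is a lookup from use case to required explanation elements. The sketch below rests on several assumptions that the page does not state outright: a one-to-one mapping from risk tier to level (low to 1, medium to 2, high to 3), higher levels including everything from lower ones, and unknown use cases defaulting to high risk. All names are hypothetical.

```python
from __future__ import annotations

from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Impact categories as listed in Section 1 of the assessment.
DOMAIN_RISK = {
    "jobs": Risk.HIGH, "credit": Risk.HIGH,
    "healthcare": Risk.HIGH, "legal": Risk.HIGH,
    "recommendations": Risk.MEDIUM, "ranking": Risk.MEDIUM,
    "personalization": Risk.LOW, "convenience": Risk.LOW,
}

# Assumption: one explainability level per risk tier.
LEVEL_FOR_RISK = {Risk.LOW: 1, Risk.MEDIUM: 2, Risk.HIGH: 3}

LEVEL_ELEMENTS = {
    1: ["AI involvement disclosed",
        "general description of what the AI does",
        "help/appeal information"],
    2: ["top influencing factors",
        "general confidence level",
        "simple what-if scenarios"],
    3: ["specific factor contributions",
        "precise confidence scores",
        "detailed counterfactuals",
        "full decision audit trail"],
}


def required_elements(domain: str) -> tuple[int, list[str]]:
    """Return the explainability level and cumulative elements for a domain."""
    # Assumption: unknown domains are treated as high risk until classified.
    risk = DOMAIN_RISK.get(domain, Risk.HIGH)
    level = LEVEL_FOR_RISK[risk]
    elements = [e for lvl in range(1, level + 1) for e in LEVEL_ELEMENTS[lvl]]
    return level, elements


level, elements = required_elements("ranking")
print(level, elements)
```

Defaulting unknown use cases to the strictest level is a conservative design choice; a team could equally require explicit classification and raise an error instead.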

Explanation Technique Recommendations

Model-specific guidance covering simple models (decision trees show path, linear models show weights, rules-based show triggers), complex models (feature importance via SHAP/LIME, attention mechanisms for transformers, surrogate models, example-based), and universal techniques (confidence scores, counterfactuals, natural language summaries).
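For the simplest case named above, a linear model, "showing weights" can be sketched as multiplying each weight by the feature value and ranking the results by magnitude. The feature names, weights, and the `factor_contributions` function below are hypothetical. For complex models, attribution tools such as SHAP or LIME play an analogous role, but their APIs are not shown here.

```python
def factor_contributions(weights, features, bias=0.0):
    """Return the linear score and per-factor contributions, largest first.

    Each contribution is weight * feature value, so the contributions
    plus the bias add up exactly to the score.
    """
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in weights.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical resume-ranking weights and one candidate's features.
weights = {"experience_years": 0.5, "skills_matched": 0.25, "education_level": 0.125}
features = {"experience_years": 2, "skills_matched": 8, "education_level": 4}

score, ranked = factor_contributions(weights, features)
print(f"score = {score}")
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
```

The ranked list is the raw material for a Level 2 "top influencing factors" display; translating it into plain language is a separate step.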

Comparison Table: Ad Hoc Communication vs. Structured Transparency

| Aspect | Informal Approach | Transparency Guide Framework |
|---|---|---|
| User Disclosure | Inconsistent or hidden AI involvement | Systematic disclosure requirements with user communication checklist |
| Decision Explanations | Generic responses like "the AI decided" | Structured explanation frameworks with factor importance and counterfactuals |
| Documentation | Outdated technical docs or none | Plain-language system documentation with regular update requirements |
| Risk Assessment | Uniform approach for all AI | Three-tier explainability matching (Basic/Decision Factors/Detailed) to risk level |
| Vendor Accountability | Accepting "proprietary" as excuse for opacity | Six-point vendor transparency assessment with contractual requirements |
| Monitoring | Reactive response to complaints | Proactive tracking of explanation effectiveness and appeal frequency |

FAQ Section

Q: What's the difference between transparency and explainability?

A: Transparency explains how the AI system works overall (what it does, what data it uses, its limitations). Explainability explains why specific decisions happened for specific individuals (what factors mattered, what confidence level, what could change the outcome). Both are necessary. The guide provides assessment frameworks for both dimensions.

Q: Do I need the same level of explainability for every AI system?

A: No. The guide includes a three-tier framework matching explanation depth to risk level. Basic transparency (Level 1) is the minimum for any AI. Most applications need decision factor explanations (Level 2). High-stakes decisions like employment, credit, healthcare, or legal matters require detailed explanations (Level 3) with full audit trails. Using insufficient explanation depth for high-risk applications is a common and problematic mistake.

Q: What if my AI uses vendor or third-party models?

A: You remain accountable for AI decisions even when using external tools. Section 6 provides a six-point assessment for vendor AI transparency including documentation requirements, training data disclosure, explanation capabilities, and contractual standards. The guide explicitly addresses the "proprietary" excuse that many vendors use to avoid transparency obligations.

Q: How do I explain complex deep learning models?

A: The guide provides technique recommendations for complex models including feature importance methods (SHAP, LIME), attention mechanisms for transformers, surrogate model approaches, and example-based explanations. The key is selecting techniques appropriate to your audience and use case. The "Grandma Test" section provides guidance on translating technical explanations into plain language.

Q: Does this guide help with regulatory compliance?

A: The guide includes regulatory considerations covering the EU AI Act (high-risk AI must provide explanations, users have a right to explanation), GDPR Article 22 (safeguards for solely automated decisions, often described as a right to explanation), US state laws (algorithmic transparency in specific contexts), and industry-specific requirements for healthcare, finance, and employment. However, it provides frameworks for assessment and does not constitute legal advice about specific compliance obligations.

Q: What format is this guide?

A: The guide is provided as a Microsoft Word document enabling form filling, note-taking, and organizational customization. The checkbox assessment format allows teams to track progress and reassess periodically.

Ideal For

- AI Product Teams deploying systems where users need to understand outcomes and challenge decisions

- Product Managers responsible for building trust through clear AI communication

- Compliance Officers implementing explainability requirements from EU AI Act or GDPR

- Customer Experience Teams addressing user questions about AI-driven decisions

- UX Researchers testing whether AI explanations actually help users understand

- Organizations Using Vendor AI needing to maintain accountability despite external models

About This Guide

Version: Community Edition 1.0

Format: Microsoft Word (.docx)

Page Count: 14 pages

Created by: Tech Jacks Solutions

What This Provides:

- Transparency and explainability assessment framework

- Risk-matched explainability level guidance

- Action planning tools

What This Does NOT Provide:

- Legal advice

- Guarantee of regulatory compliance

- Detailed technical implementation specifications

- Industry-specific guidance

Tools Referenced:

- SHAP (SHapley Additive exPlanations)

- LIME (Local Interpretable Model-agnostic Explanations)

- What-If Tool (Google)

- InterpretML (Microsoft)

Standards Referenced:

- ISO/IEC 23053 (Framework for AI Systems Using Machine Learning)

- IEEE 7001 (Transparency of Autonomous Systems)

- NIST AI Risk Management Framework

Disclaimer: This guide provides frameworks for assessing AI transparency and explainability but does not constitute legal, compliance, or technical implementation advice. Organizations should consult with qualified professionals to ensure their AI explanation approaches meet applicable regulatory and industry requirements.

Remember: Transparency isn't about revealing trade secrets. It's about being honest and helpful. If you're making decisions about people, they deserve to understand why.

| File | Action |
|---|---|
| AI-Transparency-and-Explainability-Guide-Community-Edition.docx | Download |