- Create Date August 24, 2025

- Last Updated August 24, 2025

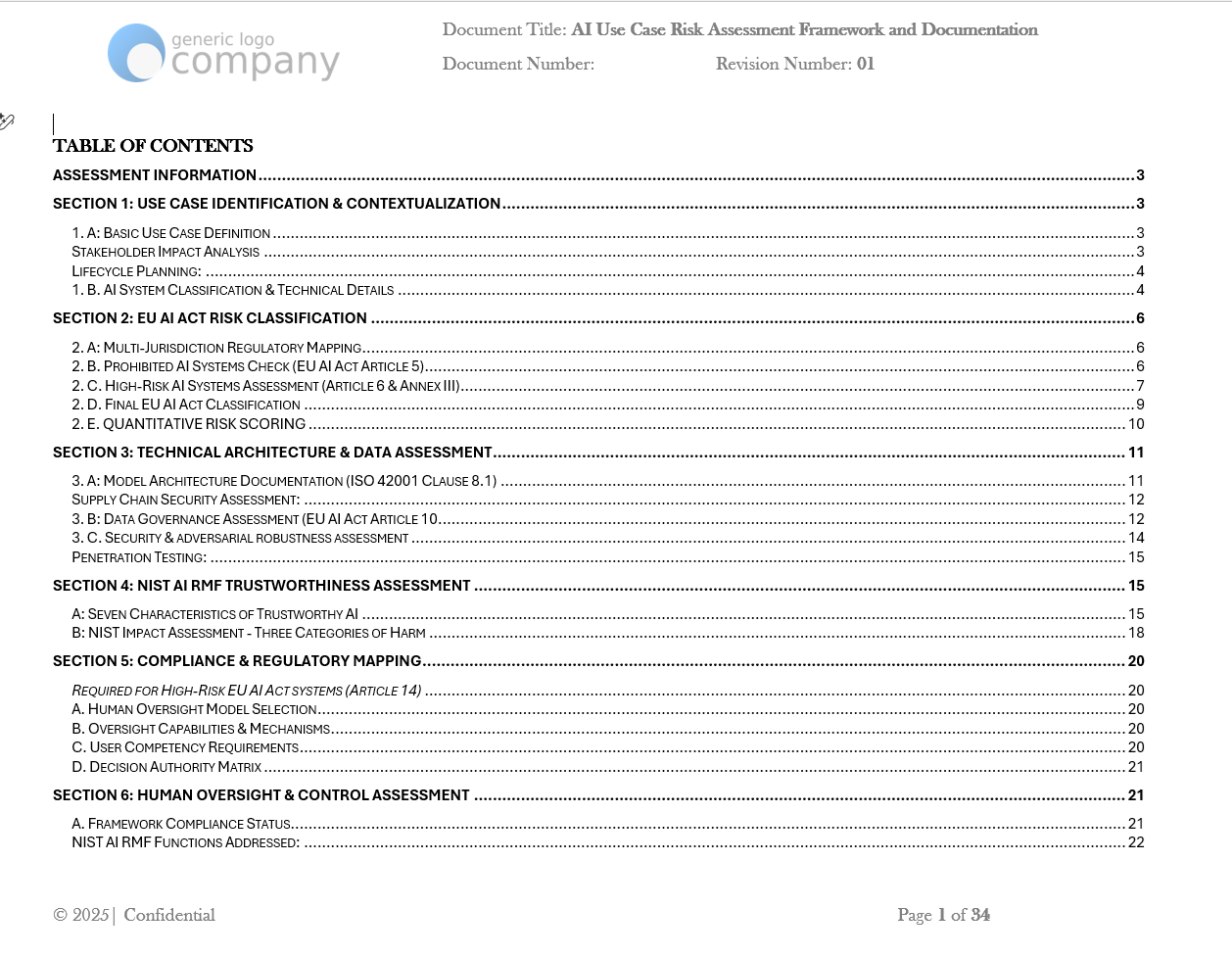

AI Use Case Risk Assessment Framework

Assess, classify, and govern AI use cases with a compliance-ready AI Use Case Risk Assessment framework aligned to global regulations.

Ensure AI use cases are properly evaluated: [Download Now]

Every AI deployment begins with a use case, and regulations such as the EU AI Act expect its risks to be classified and managed before implementation. The AI Use Case Risk Assessment Framework gives organizations a structured, audit-ready methodology for evaluating risks across jurisdictions, technical architecture, and stakeholder impacts.

Key Benefits:

- ✅ End-to-End Lifecycle Coverage: From use case definition to monitoring and decommissioning.
- ✅ Regulatory Alignment: Built for EU AI Act, NIST AI RMF, ISO/IEC 42001, and OECD AI Principles.
- ✅ Jurisdictional Mapping: Multi-country compliance including GDPR, CCPA, HIPAA, BIPA.
- ✅ Risk Transparency: Includes risk scoring, high-risk Annex III mapping, and audit evidence.
- ✅ Governance Built-In: Defines oversight, approval pathways, and accountability.

Who Uses This?

Compliance officers, risk managers, governance committees, and product leaders conducting AI Use Case Risk Assessments to ensure ethical, fair, and compliant deployment.

Why This Matters

The EU AI Act ties obligations to how an AI system is used, so each use case needs a risk classification before implementation. Without an AI Use Case Risk Assessment, businesses risk deploying prohibited systems, or high-risk systems that lack the required safeguards. This framework provides a repeatable, governance-ready process to classify risks, document regulatory alignment, and ensure oversight.
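As a rough illustration of why the order of checks matters, the sketch below runs the prohibited-use check first, then the Annex III mapping, then any exemption. Everything in it is an assumption made for illustration: the `UseCase` fields, the `Tier` names, and the `classify` function are hypothetical and are not taken from the framework or from the text of the EU AI Act. Each flag stands for a documented assessor decision, and the sketch ignores other routes to high-risk classification.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Illustrative outcome labels (hypothetical, not the Act's wording)."""
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    NOT_HIGH_RISK = "not high-risk"


@dataclass
class UseCase:
    """Hypothetical record of an assessor's answers for one use case."""
    name: str
    matches_article_5: bool          # result of the prohibited-use check
    matches_annex_iii: bool          # result of the Annex III mapping
    exemption_applies: bool = False  # documented exemption finding, if any


def classify(use_case: UseCase) -> Tier:
    """Apply the checks in order: prohibited, then Annex III, then exemption."""
    # A prohibited use case stops the assessment; no exemption is considered.
    if use_case.matches_article_5:
        return Tier.PROHIBITED
    # An Annex III match is high-risk unless an exemption was documented.
    if use_case.matches_annex_iii and not use_case.exemption_applies:
        return Tier.HIGH_RISK
    return Tier.NOT_HIGH_RISK


screening = UseCase("CV screening", matches_article_5=False, matches_annex_iii=True)
print(classify(screening).value)  # high-risk
```

The flags are inputs rather than computed values because the underlying judgments need legal review; the code only records the sequence in which they are applied.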

Framework Alignment

The AI Use Case Risk Assessment Framework supports:

- EU AI Act: Article 5 prohibited systems, Article 6 high-risk classification, Annex III use cases.
- NIST AI RMF: Risk classification and trustworthy AI characteristics.
- ISO/IEC 42001 & 23894: Governance and AI risk management integration.
- GDPR, CCPA, HIPAA, BIPA: Privacy and biometric protection requirements.
- OECD AI Principles: Fairness, transparency, and accountability.

Key Features

- Use Case Identification & Contextualization: Stakeholder analysis, lifecycle planning, and system classification.
- EU AI Act Risk Classification: Prohibited use case checks, high-risk Annex III mapping, exemptions, and final classification.
- Regulatory Mapping: Multi-jurisdictional requirements across EU, U.S., and sectoral laws.
- Technical Architecture Review: Model architecture, data governance, supply chain security, adversarial robustness.
- NIST AI RMF Integration: Seven trustworthiness categories, harm assessment, and oversight models.
- Human Oversight & Control: Decision authority matrix, oversight mechanisms, and competency requirements.
- Risk Scoring & Approval Pathway: Likelihood × impact, final classification, and approval chain.
- Monitoring & Action Plans: Pre-implementation actions, lifecycle monitoring, and residual risk acceptance.
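The likelihood × impact scoring named in the list above can be sketched in a few lines. The 1 to 5 scale, the band thresholds, and the function names `risk_score` and `risk_band` are assumptions chosen for illustration; the framework's own scale and cut-offs may differ.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact, each on an assumed 1-5 scale."""
    for label, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{label} must be between 1 and 5, got {value}")
    return likelihood * impact


def risk_band(score: int) -> str:
    """Map a score (1-25) to a band using assumed, illustrative thresholds."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


score = risk_score(likelihood=4, impact=5)
print(score, risk_band(score))  # 20 high
```

In practice the band would feed the approval pathway, for example by requiring a more senior sign-off for a "high" result; that mapping is left out because the source does not specify it.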

Comparison Table

| Feature | Generic Checklist | AI Use Case Risk Assessment Framework |
|---|---|---|
| Use case risk classification | Basic/absent | EU AI Act Articles 5 & 6 + Annex III mapping |
| Jurisdictional mapping | Not included | EU, U.S. federal/state, sectoral frameworks |
| Technical architecture review | Minimal | Model, data, supply chain, adversarial testing |
| Trustworthiness assessment | Absent | NIST AI RMF 7 characteristics |
| Audit evidence | Weak | Risk scoring, evidence checklist, approval chain |
| Lifecycle monitoring | Not addressed | Ongoing monitoring + decommissioning criteria |

FAQ Section

Q1: What is an AI Use Case Risk Assessment?

A: An AI Use Case Risk Assessment is a structured evaluation of risks associated with specific AI use cases, including classification, regulatory mapping, and oversight requirements.

Q2: Which regulations does this framework support?

A: It aligns with the EU AI Act, NIST AI RMF, ISO/IEC 42001, ISO/IEC 23894, GDPR, CCPA, HIPAA, BIPA, and the OECD AI Principles.

Q3: Does it include prohibited use case checks?

A: Yes. It explicitly covers the practices prohibited under EU AI Act Article 5 (e.g., social scoring, subliminal manipulation, untargeted scraping of facial images to build facial recognition databases).

Q4: How does this help with high-risk systems?

A: It includes Annex III mapping, exemption checks, quantitative risk scoring, and oversight requirements for high-risk AI.

Q5: Is it suitable for SMEs as well as enterprises?

A: Yes. SMEs can use the streamlined sections, while enterprises apply the full classification, scoring, and oversight models.

Q6: What is the best way to use these templates?

A: The documents are best viewed and edited in Microsoft Word or Microsoft Excel. Formatting may not display correctly in Google Docs or other editors.

Ideal For

- Compliance & Risk Officers
- Chief AI Officers (CAIOs)
- AI Governance Committees
- Product Owners & Business Leaders
- Audit & Assurance Teams
- Vendor Risk Management Functions