- Version 1

- File Size 158.73 KB

- File Count 1

- Create Date October 13, 2025

- Last Updated October 20, 2025

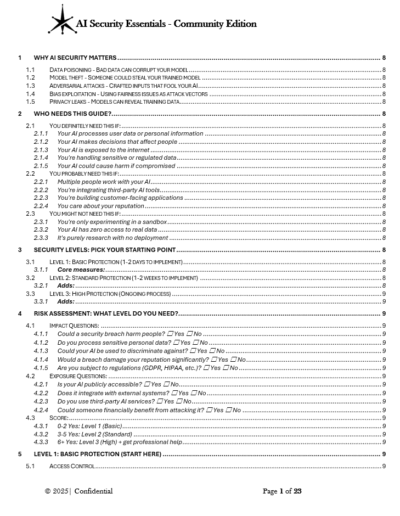

AI Security Essentials - Community Edition

A practical, level-based security framework designed to protect AI systems from common threats without requiring an enterprise security team.

This guide provides a three-level security framework for protecting AI systems against unique threats including data poisoning, model theft, adversarial attacks, bias exploitation, and privacy leaks. Each level includes specific implementation steps with realistic time estimates. Teams can start with basic protections implementable in 1-2 days and scale up as their risk profile grows. The framework requires customization to your specific AI applications and threat environment.

Key Benefits

✓ Three security levels with clear implementation timeframes (Basic: 1-2 days, Standard: 1-2 weeks, High: ongoing)

✓ Covers five AI-specific threats with practical defense strategies for each

✓ Includes a risk assessment tool with nine questions to determine the appropriate security level

✓ Provides daily, weekly, monthly, quarterly, and annual security checklists

✓ Lists free and affordable security tools for access control, monitoring, dependency scanning, testing, and privacy

✓ Contains an incident response playbook with severity levels and step-by-step response procedures

✓ Scales for team sizes from 1-5 people through 20+ with role definitions for each

✓ Free to use and modify under Community Edition license

Who Uses This?

This guide is designed for:

- Teams building AI systems who need practical security guidance

- Organizations processing user data or personal information through AI

- Developers deploying customer-facing AI applications

- Teams integrating third-party AI services

- Small to medium organizations without dedicated security staff

- Anyone responsible for AI system security and data protection

What's Included

The guide contains 20 main sections with actionable checklists:

- Three-level security framework with implementation time estimates

- Risk assessment questionnaire to determine needed security level

- Five common threat categories with defense strategies

- Incident response playbook with four severity levels

- Role definitions for small, medium, and large teams

- Budget guidelines for professional security assistance

- Free and affordable security tools list

- Daily through annual security task checklists

Why This Matters

AI systems face unique security challenges that traditional software security doesn't address. Models can be corrupted through poisoned training data, stolen through systematic API queries, fooled by carefully crafted adversarial inputs, exploited through fairness vulnerabilities, or leak private information from their training data. Without AI-specific security measures, teams may miss these threats until after deployment when they're far more expensive to remediate.

The guide acknowledges that most teams don't have enterprise security budgets or dedicated security personnel. By organizing security into three distinct levels with realistic time estimates, organizations can implement baseline protections quickly and expand coverage as their AI systems grow in complexity and risk.

The three-level approach recognizes that security requirements differ dramatically based on AI application context. Internal tools processing low-sensitivity data need different protections than customer-facing systems making decisions about people. The included risk assessment helps teams determine their appropriate starting point based on impact and exposure factors.

What You Get

Level 1: Basic Protection (1-2 Days Implementation)

Essential security measures including access controls, data encryption, basic monitoring, and incident response plan. Suitable for internal tools and low-risk applications.

Level 2: Standard Protection (1-2 Weeks Implementation)

Adds security testing, adversarial resilience checks, audit logging, dependency management, and deployment security. Appropriate for customer-facing applications and business-critical systems.

Level 3: High Protection (Ongoing Process)

Comprehensive security including advanced adversarial testing, bias monitoring, compliance documentation, third-party security management, and external assessments. Required for healthcare, finance, legal, and regulated industries.

Threat Defense Strategies

Specific defense guidance for data poisoning, model theft, adversarial attacks, privacy leaks, and bias exploitation with actionable mitigation steps for each.

Incident Response Framework

Four severity levels (Critical, High, Medium, Low) with corresponding response timeframes and step-by-step playbook covering detection, investigation, remediation, and regulatory reporting requirements.

Security Tools Reference

Curated list of free and affordable tools across five categories: access control, monitoring and logging, dependency scanning, testing, and privacy protection.

Operational Checklists

Pre-built checklists for daily security habits, weekly tasks, monthly reviews, quarterly assessments, and annual audits to maintain consistent security posture.

Professional Help Guidelines

Identifies when to engage external security professionals with budget ranges ($5k-$20k for small projects, $20k-$100k for medium, $100k+ for high-risk systems).

Key Features

Three-Level Framework: Organizes security measures into Basic (1-2 days), Standard (1-2 weeks), and High (ongoing) with specific checklists and time estimates for each level

Risk Assessment Tool: Nine-question questionnaire evaluating impact and exposure to determine appropriate security level based on harm potential, data sensitivity, public accessibility, and regulatory requirements

AI-Specific Threats: Addresses five threat categories unique to AI systems rather than general cybersecurity concerns

Scalable Role Definitions: Separate guidance for small teams (1-5 people), medium teams (6-20), and large teams (20+) acknowledging that one person may handle multiple security functions in smaller organizations

Realistic Time Estimates: Every security measure includes an implementation time estimate, ranging from 2 hours for incident response planning to ongoing processes for high-risk systems

Free Tool Focus: Prioritizes no-cost and affordable options including GitHub Dependabot, cloud provider IAM, open source monitoring stacks, and built-in security scanning

Incident Severity Framework: Four-tier system with specific response timeframes from immediate action for active attacks to one-week response for low-priority issues

Professional Help Budget Guidance: Specific cost ranges for security assessments, penetration testing, compliance work, and incident response services

Comparison Table: No Security vs. Community Edition Framework

| Aspect | No Security Approach | This Framework |
|---|---|---|
| Threat Awareness | Generic software security mindset | Five AI-specific threats (data poisoning, model theft, adversarial attacks, bias exploitation, privacy leaks) |
| Implementation Guidance | Ad hoc security measures | Three levels with time estimates: Basic (1-2 days), Standard (1-2 weeks), High (ongoing) |
| Risk Assessment | Gut feeling about security needs | Nine-question questionnaire determining appropriate security level |
| Incident Response | Panic and scramble when problems occur | Four severity levels with step-by-step playbook and response timeframes |
| Tools | Unknown which tools to use | Curated list of free and affordable options across five security categories |
| Team Scaling | Unclear security responsibilities | Role definitions for small (1-5), medium (6-20), and large (20+) teams |

FAQ Section

Q: How long does it take to implement this framework? A: Level 1 (Basic Protection) takes 1-2 days to implement and covers essential measures like access controls, data encryption, basic monitoring, and incident response planning. Level 2 (Standard Protection) requires 1-2 weeks and adds security testing, audit logging, and deployment security. Level 3 (High Protection) is an ongoing process for high-risk systems.

Q: What makes AI security different from regular application security? A: AI systems face unique threats including data poisoning (corrupting training data), model theft (extracting trained models through queries), adversarial attacks (crafted inputs that fool models), bias exploitation (using fairness issues as attack vectors), and privacy leaks (models revealing training data). Traditional security measures don't address these AI-specific vulnerabilities.

Q: Do I need a dedicated security team to use this framework? A: No. The framework scales for teams of all sizes. For small teams (1-5 people), one person can own security part-time while everyone follows security practices. Medium teams (6-20) designate a security champion spending about 20% of their time on security. Only large teams (20+) typically need dedicated security roles.
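The team-size guidance in the answer above can be written as a small lookup. The following is only an illustrative Python sketch: the function name `security_ownership` is hypothetical and does not come from the guide, and because the stated ranges (6-20 and 20+) both include 20, the sketch assumes a team of exactly 20 counts as medium.

```python
def security_ownership(team_size: int) -> str:
    """Map a team size to the ownership model described in the FAQ.

    Hypothetical helper for illustration only; the guide itself does not
    define any code. A team of exactly 20 is treated as "medium" here,
    which is an assumption, since the source ranges overlap at 20.
    """
    if team_size < 1:
        raise ValueError("team_size must be at least 1")
    if team_size <= 5:
        # Small team: one person owns security part-time.
        return "part-time security owner"
    if team_size <= 20:
        # Medium team: a security champion at roughly 20% of their time.
        return "security champion (about 20% time)"
    # Large team: dedicated security roles are typically needed.
    return "dedicated security roles"


if __name__ == "__main__":
    for size in (3, 12, 40):
        print(size, "->", security_ownership(size))
```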

Q: How do I know which security level I need? A: The guide includes a nine-question risk assessment covering impact (could breaches harm people, do you process sensitive data, are you regulated) and exposure (is AI publicly accessible, does it integrate with external systems, could attackers financially benefit). Count the "yes" answers: 0-2 suggests Level 1, 3-5 suggests Level 2, and 6 or more suggests Level 3 plus professional help.
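The scoring rule in the answer above is simple enough to express in a few lines. This is a minimal Python sketch, assuming the nine answers are recorded as booleans; the function name `recommend_security_level` is hypothetical and not part of the guide, and the result is a starting point to be adjusted for your own context rather than a definitive classification.

```python
from typing import Iterable


def recommend_security_level(answers: Iterable[bool]) -> int:
    """Return the suggested security level (1, 2, or 3).

    `answers` holds the nine yes/no responses from the risk assessment,
    with True meaning "yes". Thresholds follow the FAQ: 0-2 yes answers
    suggest Level 1, 3-5 suggest Level 2, and 6 or more suggest Level 3
    (for which the guide also suggests professional help).
    """
    responses = list(answers)
    if len(responses) != 9:
        raise ValueError("expected exactly nine yes/no answers")

    yes_count = sum(1 for answer in responses if answer)
    if yes_count <= 2:
        return 1
    if yes_count <= 5:
        return 2
    return 3


if __name__ == "__main__":
    # Example: four "yes" answers out of nine.
    example = [True, True, False, True, False, False, True, False, False]
    print("Suggested level:", recommend_security_level(example))
```

Validating the answer count up front keeps a partially completed questionnaire from silently scoring as low risk.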

Q: What if we don't have budget for security tools? A: The guide prioritizes free and affordable options including GitHub Dependabot (free), cloud provider built-in tools (AWS IAM, CloudWatch, Google Cloud IAM), open source options (ELK Stack, Prometheus, Grafana, OWASP tools), and free tiers of commercial tools (Auth0, Datadog, Snyk).

Q: When should we hire professional security help? A: Consider external help for high-stakes systems (healthcare, finance, legal, critical infrastructure), serious incidents (major breaches, active attacks, discrimination events), strict compliance requirements (certifications, government contracts), or complex environments (multi-cloud, sensitive data processing). Budget guidelines range from $5k-$20k for small project assessments to $100k+ annually for large high-risk systems.

Documents are optimized for Microsoft Word to ensure proper formatting and collaborative editing capabilities.

Ideal For

- Development teams building AI systems without dedicated security personnel

- Small to mid-size organizations needing practical security guidance without enterprise overhead

- AI product managers responsible for security but lacking security expertise

- Startups and scale-ups deploying customer-facing AI applications

- Teams processing personal data through AI systems requiring basic protection

- Organizations integrating third-party AI services who need vendor security assessment guidance

- Anyone deploying AI to production who needs a practical starting point for security implementation

| File | Action |
|---|---|
| AI-Security-Essentials-Community-Edition.docx | Download |