Automated and Interpretable Survival Analysis from Multimodal Data

cs.AI updates on arXiv.org on September 29, 2025 at 4:00 am

arXiv:2509.21600v1 Announce Type: new

Abstract: Accurate and interpretable survival analysis remains a core challenge in oncology. With growing multimodal data and the clinical need for transparent models to support validation and trust, this challenge increases in complexity. We propose an interpretable multimodal AI framework to automate survival analysis by integrating clinical variables and computed tomography imaging. Our MultiFIX-based framework uses deep learning to infer survival-relevant features that are further explained: imaging features are interpreted via Grad-CAM, while clinical variables are modeled as symbolic expressions through genetic programming. Risk estimation employs a transparent Cox regression, enabling stratification into groups with distinct survival outcomes. Using the open-source RADCURE dataset for head and neck cancer, MultiFIX achieves a C-index of 0.838 (prediction) and 0.826 (stratification), outperforming the clinical and academic baseline approaches and aligning with known prognostic markers. These results highlight the promise of interpretable multimodal AI for precision oncology with MultiFIX.
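The C-index reported in the abstract measures how often a model ranks pairs of patients correctly by risk. The following is a minimal sketch of Harrell's concordance index, not the authors' evaluation code; the function name `concordance_index`, the toy data, and the choice to skip pairs with tied observed times are all assumptions made for illustration.

```python
from itertools import combinations


def concordance_index(times, events, risks):
    """Harrell's C-index: share of comparable pairs ranked correctly by risk.

    times  -- observed follow-up time per subject
    events -- 1 if the event was observed, 0 if the subject was censored
    risks  -- predicted risk score (higher means earlier expected event)
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        # Let `a` be the subject with the shorter observed time.
        a, b = (i, j) if times[i] < times[j] else (j, i)
        # Simplifying assumption: skip tied times. A pair is also not
        # comparable when the earlier subject was censored.
        if times[a] == times[b] or not events[a]:
            continue
        comparable += 1
        if risks[a] > risks[b]:
            concordant += 1.0   # earlier event got the higher risk
        elif risks[a] == risks[b]:
            concordant += 0.5   # tied risk scores count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Hypothetical toy data: four subjects, the second one censored.
toy_times = [5, 8, 12, 3]
toy_events = [1, 0, 1, 1]
toy_risks = [0.9, 0.4, 0.2, 0.7]
toy_c = concordance_index(toy_times, toy_events, toy_risks)  # 4 of 5 pairs -> 0.8
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which is the scale on which the reported 0.838 and 0.826 should be read.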

Jailbreaking on Text-to-Video Models via Scene Splitting Strategy

cs.AI updates on arXiv.org on September 29, 2025 at 4:00 am

arXiv:2509.22292v1 Announce Type: cross

Abstract: Along with the rapid advancement of numerous Text-to-Video (T2V) models, growing concerns have emerged regarding their safety risks. While recent studies have explored vulnerabilities in models like LLMs, VLMs, and Text-to-Image (T2I) models through jailbreak attacks, T2V models remain largely unexplored, leaving a significant safety gap. To address this gap, we introduce SceneSplit, a novel black-box jailbreak method that works by fragmenting a harmful narrative into multiple scenes, each individually benign. This approach manipulates the generative output space, the abstract set of all potential video outputs for a given prompt, using the combination of scenes as a powerful constraint to guide the final outcome. While each scene individually corresponds to a wide and safe space where most outcomes are benign, their sequential combination collectively restricts this space, narrowing it to an unsafe region and significantly increasing the likelihood of generating a harmful video. This core mechanism is further enhanced through iterative scene manipulation, which bypasses the safety filter within this constrained unsafe region. Additionally, a strategy library that reuses successful attack patterns further improves the attack’s overall effectiveness and robustness. To validate our method, we evaluate SceneSplit across 11 safety categories on T2V models. Our results show that it achieves a high average Attack Success Rate (ASR) of 77.2% on Luma Ray2, 84.1% on Hailuo, and 78.2% on Veo2, significantly outperforming the existing baseline. Through this work, we demonstrate that current T2V safety mechanisms are vulnerable to attacks that exploit narrative structure, providing new insights for understanding and improving the safety of T2V models.

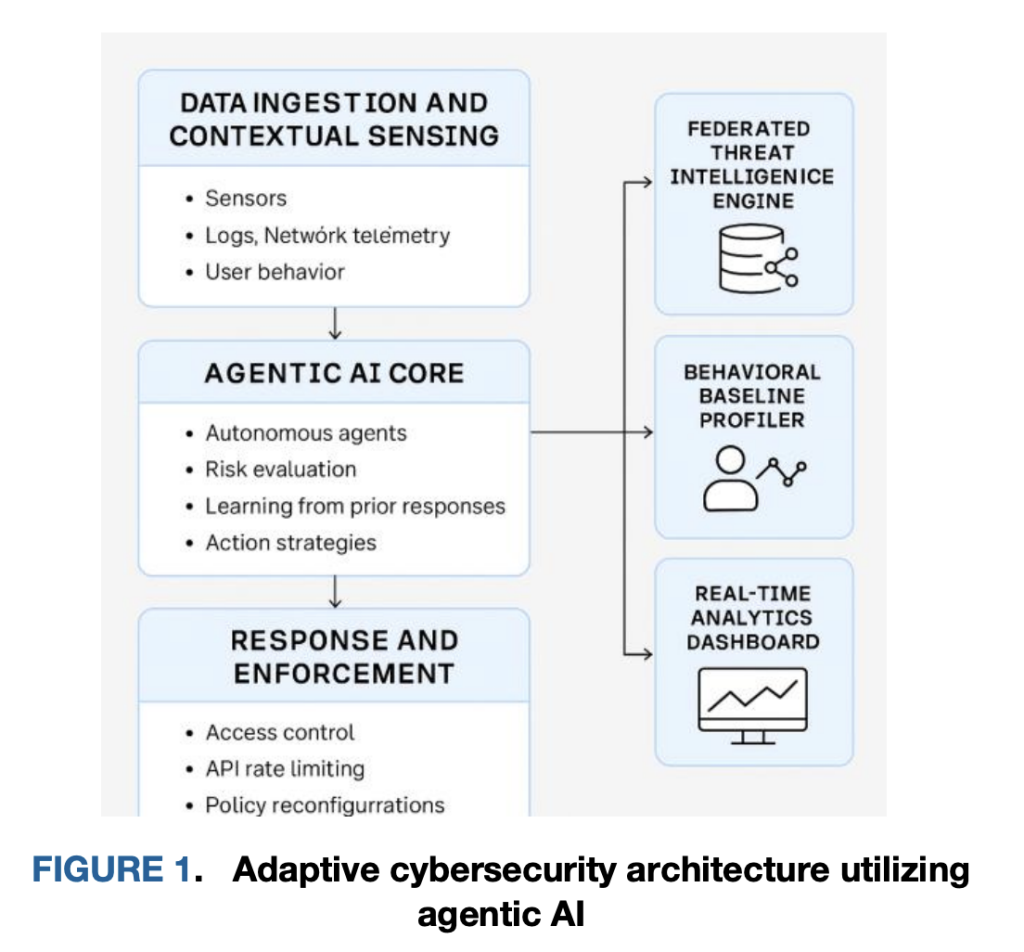

This AI Research Proposes an AI Agent Immune System for Adaptive Cybersecurity: 3.4× Faster Containment with <10% Overhead

Can your AI security stack profile, reason, and neutralize a live security threat in ~220 ms, without a central round-trip? A team of researchers from Google and University of Arkansas at Little Rock outline an agentic cybersecurity “immune system” built from lightweight, autonomous sidecar AI agents colocated with workloads (Kubernetes pods, API gateways, edge services). Instead

The post This AI Research Proposes an AI Agent Immune System for Adaptive Cybersecurity: 3.4× Faster Containment with <10% Overhead appeared first on MarkTechPost.

Eulerian Melodies: Graph Algorithms for Music Composition

Towards Data Science on September 28, 2025 at 2:30 pm

Conceptual overview and an end-to-end Python implementation

The post Eulerian Melodies: Graph Algorithms for Music Composition appeared first on Towards Data Science.

InqEduAgent: Adaptive AI Learning Partners with Gaussian Process Augmentation

cs.AI updates on arXiv.org on September 29, 2025 at 4:00 am

arXiv:2508.03174v3 Announce Type: replace

Abstract: Collaborative partnership matters in inquiry-oriented education. However, most study-partner selections either rely on experience-based assignments with little scientific planning or build on rule-based machine assistants, which struggle with knowledge expansion and lack flexibility. This paper proposes an LLM-empowered agent model for simulating and selecting learning partners tailored to inquiry-oriented learning, named InqEduAgent. Generative agents are designed to capture cognitive and evaluative features of learners in real-world scenarios. Then, an adaptive matching algorithm with Gaussian process augmentation is formulated to identify patterns within prior knowledge. Optimal learning-partner matches are provided for learners facing different exercises. The experimental results show the optimal performance of InqEduAgent in most knowledge-learning scenarios and in LLM environments with different levels of capability. This study promotes the intelligent allocation of human-based learning partners and the formulation of AI-based learning partners. The code, data, and appendix are publicly available at https://github.com/InqEduAgent/InqEduAgent.

Developing Strategies to Increase Capacity in AI Education

cs.AI updates on arXiv.org on September 29, 2025 at 4:00 am

arXiv:2509.21713v1 Announce Type: cross

Abstract: Many institutions are currently grappling with teaching artificial intelligence (AI) in the face of growing demand and relevance in our world. The Computing Research Association (CRA) has conducted 32 moderated virtual roundtable discussions of 202 experts committed to improving AI education. These discussions slot into four focus areas: AI Knowledge Areas and Pedagogy, Infrastructure Challenges in AI Education, Strategies to Increase Capacity in AI Education, and AI Education for All. Roundtables were organized around institution type to consider the particular goals and resources of different AI education environments. We identified the following high-level community needs to increase capacity in AI education. A significant digital divide creates major infrastructure hurdles, especially for smaller and under-resourced institutions. These challenges manifest as a shortage of faculty with AI expertise, who also face limited time for reskilling; a lack of computational infrastructure for students and faculty to develop and test AI models; and insufficient institutional technical support. Compounding these issues is the large burden associated with updating curricula and creating new programs. To address the faculty gap, accessible and continuous professional development is crucial for faculty to learn about AI and its ethical dimensions. This support is particularly needed for under-resourced institutions and must extend to faculty both within and outside of computing programs to ensure all students have access to AI education. We have compiled and organized a list of resources that our participant experts mentioned throughout this study. These resources contribute to a frequent request heard during the roundtables: a central repository of AI education resources for institutions to freely use across higher education.

Python for Data Science (Free 7-Day Mini-Course)

KDnuggets on September 29, 2025 at 12:00 pm

Want to learn Python for data science? Start today with this beginner-friendly mini-course packed with bite-sized lessons and hands-on examples.

Can AI Perceive Physical Danger and Intervene?

cs.AI updates on arXiv.org on September 29, 2025 at 4:00 am

arXiv:2509.21651v1 Announce Type: new

Abstract: When AI interacts with the physical world, as a robot or an assistive agent, new safety challenges emerge beyond those of purely “digital AI”. In such interactions, the potential for physical harm is direct and immediate. How well do state-of-the-art foundation models understand common-sense facts about physical safety, e.g. that a box may be too heavy to lift, or that a hot cup of coffee should not be handed to a child? In this paper, our contributions are three-fold. First, we develop a highly scalable approach to continuous physical safety benchmarking of Embodied AI systems, grounded in real-world injury narratives and operational safety constraints. To probe multi-modal safety understanding, we turn these narratives and constraints into photorealistic images and videos capturing transitions from safe to unsafe states, using advanced generative models. Second, we comprehensively analyze the ability of major foundation models to perceive risks, reason about safety, and trigger interventions; this yields multi-faceted insights into their deployment readiness for safety-critical agentic applications. Finally, we develop a post-training paradigm to teach models to explicitly reason about embodiment-specific safety constraints provided through system instructions. The resulting models generate thinking traces that make safety reasoning interpretable and transparent, achieving state-of-the-art performance in constraint satisfaction evaluations. The benchmark will be released at https://asimov-benchmark.github.io/v2

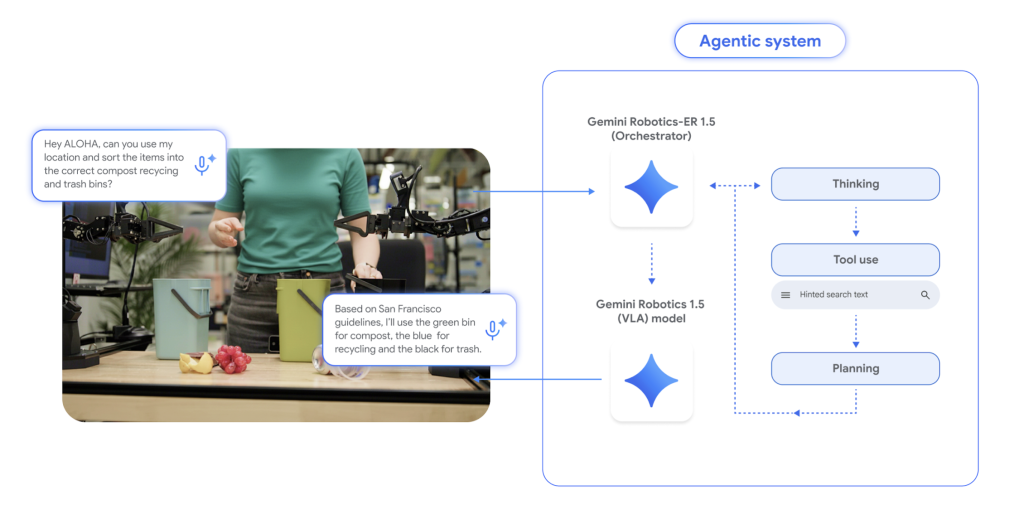

Gemini Robotics 1.5: DeepMind’s ER↔VLA Stack Brings Agentic Robots to the Real World

MarkTechPost on September 28, 2025 at 8:29 am

Can a single AI stack plan like a researcher, reason over scenes, and transfer motions across different robots, without retraining from scratch? Google DeepMind’s Gemini Robotics 1.5 says yes, by splitting embodied intelligence into two models: Gemini Robotics-ER 1.5 for high-level embodied reasoning (spatial understanding, planning, progress/success estimation, tool-use) and Gemini Robotics 1.5 for low-level visuomotor

The post Gemini Robotics 1.5: DeepMind’s ER↔VLA Stack Brings Agentic Robots to the Real World appeared first on MarkTechPost.

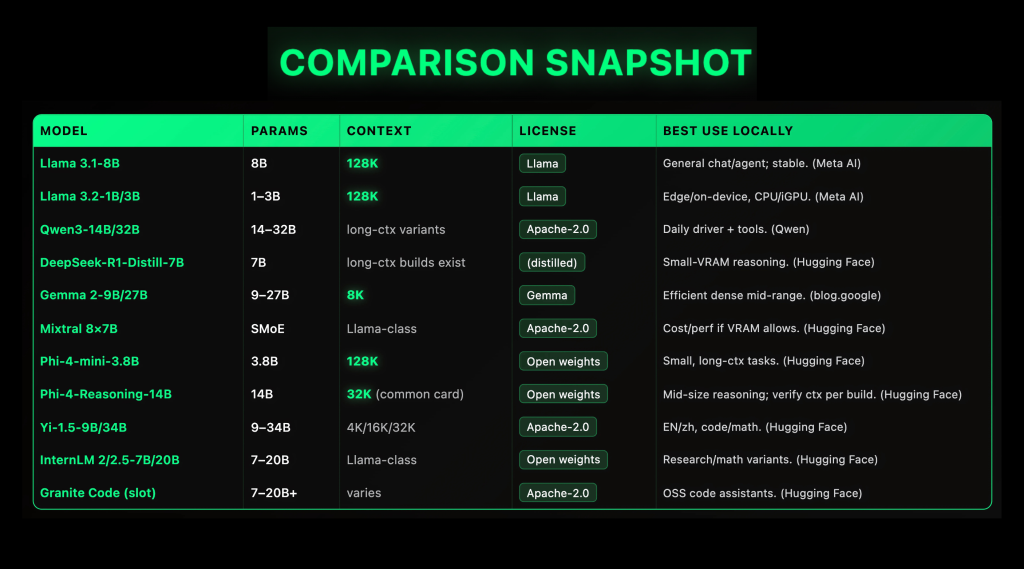

Top 10 Local LLMs (2025): Context Windows, VRAM Targets, and Licenses Compared

MarkTechPost on September 28, 2025 at 6:21 am

Local LLMs matured fast in 2025: open-weight families like Llama 3.1 (128K context length (ctx)), Qwen3 (Apache-2.0, dense + MoE), Gemma 2 (9B/27B, 8K ctx), Mixtral 8×7B (Apache-2.0 SMoE), and Phi-4-mini (3.8B, 128K ctx) now ship reliable specs and first-class local runners (GGUF/llama.cpp, LM Studio, Ollama), making on-prem and even laptop inference practical if you

The post Top 10 Local LLMs (2025): Context Windows, VRAM Targets, and Licenses Compared appeared first on MarkTechPost.
