Schoenfeld’s Anatomy of Mathematical Reasoning by Language Models

cs.AI updates on arXiv.org

arXiv:2512.19995v1 Announce Type: cross

Abstract: Large language models increasingly expose reasoning traces, yet their underlying cognitive structure and steps remain difficult to identify and analyze beyond surface-level statistics. We adopt Schoenfeld’s Episode Theory as an inductive, intermediate-scale lens and introduce ThinkARM (Anatomy of Reasoning in Models), a scalable framework that explicitly abstracts reasoning traces into functional reasoning steps such as Analysis, Explore, Implement, Verify, etc. When applied to mathematical problem solving by diverse models, this abstraction reveals reproducible thinking dynamics and structural differences between reasoning and non-reasoning models, which are not apparent from token-level views. We further present two diagnostic case studies showing that exploration functions as a critical branching step associated with correctness, and that efficiency-oriented methods selectively suppress evaluative feedback steps rather than uniformly shortening responses. Together, our results demonstrate that episode-level representations make reasoning steps explicit, enabling systematic analysis of how reasoning is structured, stabilized, and altered in modern language models.
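Once a trace has been abstracted into episode labels, simple structural statistics become easy to compute. The sketch below is illustrative only: the label sequence is made up, and `transition_counts` and `label_share` are hypothetical helpers, not part of ThinkARM.

```python
from collections import Counter

# Hypothetical episode labels for one reasoning trace (illustrative data only).
trace = ["Analysis", "Explore", "Implement", "Verify",
         "Explore", "Implement", "Verify"]

def transition_counts(labels):
    """Count how often each episode label is directly followed by another."""
    return Counter(zip(labels, labels[1:]))

def label_share(labels):
    """Fraction of steps spent in each episode type."""
    total = len(labels)
    return {label: n / total for label, n in Counter(labels).items()}

counts = transition_counts(trace)
shares = label_share(trace)
```

Counts of this kind are one plausible starting point for comparing models at the episode level rather than the token level.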

Decoupling the “What” and “Where” With Polar Coordinate Positional Embeddings

cs.AI updates on arXiv.org

arXiv:2509.10534v2 Announce Type: replace-cross

Abstract: The attention mechanism in a Transformer architecture matches key to query based on both content (the what) and position in a sequence (the where). We present an analysis indicating that what and where are entangled in the popular RoPE rotary position embedding. This entanglement can impair performance, particularly when decisions require independent matches on these two factors. We propose an improvement to RoPE, which we call Polar Coordinate Position Embeddings or PoPE, that eliminates the what-where confound. PoPE is far superior on a diagnostic task requiring indexing solely by position or by content. On autoregressive sequence modeling in music, genomic, and natural language domains, Transformers using PoPE as the positional encoding scheme outperform baselines using RoPE with respect to evaluation loss (perplexity) and downstream task performance. On language modeling, these gains persist across model scale, from 124M to 774M parameters. Crucially, PoPE shows strong zero-shot length extrapolation capabilities compared not only to RoPE but even to a method designed for extrapolation, YaRN, which requires additional fine-tuning and frequency interpolation.
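One way to see how content and position can mix in RoPE is to write it in complex form. The sketch below covers standard RoPE only and does not implement PoPE; the frequencies and vectors are arbitrary illustrative values. Each query/key pair contributes |q||k| cos(φ_q − φ_k + (m − n)θ), so the content phases and the relative offset share one cosine argument. Treating this as the "entanglement" is an interpretation, not the paper's analysis.

```python
import cmath
import math

def rope_rotate(vec, pos, thetas):
    """Standard RoPE in complex form: rotate each 2-D pair by pos * theta."""
    return [v * cmath.exp(1j * pos * th) for v, th in zip(vec, thetas)]

def attention_score(q, k):
    """Real part of the Hermitian inner product (dot product of the paired reals)."""
    return sum((a * b.conjugate()).real for a, b in zip(q, k))

def closed_form(q, k, offset, thetas):
    """Per-frequency |q||k| cos(phase_q - phase_k + offset * theta), summed."""
    return sum(
        abs(a) * abs(b) * math.cos(cmath.phase(a) - cmath.phase(b) + offset * th)
        for a, b, th in zip(q, k, thetas)
    )

thetas = [1.0, 0.1]  # illustrative rotation frequencies
q = [complex(0.3, -1.2), complex(0.7, 0.4)]
k = [complex(-0.5, 0.9), complex(1.1, 0.2)]

# Same relative offset (3) at two different absolute positions.
s_near = attention_score(rope_rotate(q, 5, thetas), rope_rotate(k, 2, thetas))
s_far = attention_score(rope_rotate(q, 103, thetas), rope_rotate(k, 100, thetas))
```

Both scores agree with `closed_form(q, k, 3, thetas)`, which shows that only the relative offset matters and that it enters through the same angle as the content phases.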

Evasion-Resilient Detection of DNS-over-HTTPS Data Exfiltration: A Practical Evaluation and Toolkit

cs.AI updates on arXiv.org

arXiv:2512.20423v1 Announce Type: cross

Abstract: The purpose of this project is to assess how well defenders can detect DNS-over-HTTPS (DoH) file exfiltration and which evasion strategies attackers can use. It also provides a reproducible toolkit to generate, intercept, and analyze DoH exfiltration, and compares machine learning with threshold-based detection under adversarial scenarios. The originality of this project is the introduction of an end-to-end, containerized pipeline that generates configurable file exfiltration over DoH using several parameters (e.g., chunking, encoding, padding, resolver rotation). It allows for file reconstruction at the resolver side, while extracting flow-level features using a fork of DoHLyzer. The pipeline contains a prediction side, which trains machine learning models on public labelled datasets and then evaluates them side-by-side with threshold-based detection methods against malicious and evasive DoH traffic. We train Random Forest, Gradient Boosting and Logistic Regression classifiers on a public DoH dataset and benchmark them against evasive DoH exfiltration scenarios. The toolkit orchestrates traffic generation, file capture, feature extraction, model training and analysis. It is packaged as several Docker containers for easy setup and full reproducibility regardless of the platform it is run on. Future research is directed at validating the results on mixed enterprise traffic, extending protocol coverage to HTTP/3/QUIC requests, adding benign traffic generation, and working on real-time traffic evaluation. A key objective is to quantify when stealth constraints make DoH exfiltration uneconomical for the attacker.

On Efficient Adjustment for Micro Causal Effects in Summary Causal Graphs

cs.AI updates on arXiv.org

arXiv:2512.18315v2 Announce Type: replace-cross

Abstract: Observational studies in fields such as epidemiology often rely on covariate adjustment to estimate causal effects. Classical graphical criteria, like the back-door criterion and the generalized adjustment criterion, are powerful tools for identifying valid adjustment sets in directed acyclic graphs (DAGs). However, these criteria are not directly applicable to summary causal graphs (SCGs), which are abstractions of DAGs commonly used in dynamic systems. In SCGs, each node typically represents an entire time series and may involve cycles, making classical criteria inapplicable for identifying causal effects. Recent work established complete conditions for determining whether the micro causal effect of a treatment or an exposure $X_{t-\gamma}$ on an outcome $Y_t$ is identifiable via covariate adjustment in SCGs, under the assumption of no hidden confounding. However, these identifiability conditions have two main limitations. First, they are complex, relying on cumbersome definitions and requiring the enumeration of multiple paths in the SCG, which can be computationally expensive. Second, when these conditions are satisfied, they only provide two valid adjustment sets, limiting flexibility in practical applications. In this paper, we propose an equivalent but simpler formulation of those identifiability conditions and introduce a new criterion that identifies a broader class of valid adjustment sets in SCGs. Additionally, we characterize the quasi-optimal adjustment set among these, i.e., the one that minimizes the asymptotic variance of the causal effect estimator. Our contributions offer both theoretical advancement and practical tools for more flexible and efficient causal inference in abstracted causal graphs.
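For orientation, the classical adjustment formula that the back-door criterion licenses in a DAG is recalled below. It is the textbook form for discrete covariates, with $Z$ denoting a valid adjustment set for $(X, Y)$. It is not the SCG criterion proposed in the paper, which concerns $X_{t-\gamma}$ and $Y_t$.

```latex
P\bigl(y \mid \mathrm{do}(x)\bigr) \;=\; \sum_{z} P(y \mid x, z)\, P(z)
```

Different valid choices of $Z$ identify the same effect but can yield estimators with different asymptotic variance, which is why an optimal adjustment set is of practical interest.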

Development and external validation of a multimodal artificial intelligence mortality prediction model of critically ill patients using multicenter data

cs.AI updates on arXiv.org

arXiv:2512.19716v1 Announce Type: cross

Abstract: Early prediction of in-hospital mortality in critically ill patients can aid clinicians in optimizing treatment. The objective was to develop a multimodal deep learning model, using structured and unstructured clinical data, to predict in-hospital mortality risk among critically ill patients after the first 24 hours of their intensive care unit (ICU) admission. We used data from MIMIC-III, MIMIC-IV, eICU, and HiRID. A multimodal model was developed on the MIMIC datasets, featuring time series components occurring within the first 24 hours of ICU admission and predicting risk of subsequent inpatient mortality. Inputs included time-invariant variables, time-variant variables, clinical notes, and chest X-ray images. External validation was performed on a temporally separated MIMIC population and on the HiRID and eICU datasets. A total of 203,434 ICU admissions from more than 200 hospitals between 2001 and 2022 were included, with mortality rates ranging from 5.2% to 7.9% across the four datasets. The model integrating structured data points had AUROC, AUPRC, and Brier scores of 0.92, 0.53, and 0.19, respectively. We externally validated the model on eight different institutions within the eICU dataset, demonstrating AUROCs ranging from 0.84 to 0.92. When the analysis was restricted to patients with available clinical notes and imaging data, adding notes and imaging to the model improved the AUROC, AUPRC, and Brier score from 0.87 to 0.89, 0.43 to 0.48, and 0.37 to 0.17, respectively. Our findings highlight the importance of incorporating multiple sources of patient information for mortality prediction and the importance of external validation.
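As a reminder of what two of the reported metrics measure, here is a minimal pure-Python sketch of the Brier score and AUROC. The labels and probabilities are toy values, not data from the study, and the function names are illustrative.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome (lower is better)."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)

def auroc(y_true, y_prob):
    """Probability that a random positive is ranked above a random negative.

    Ties count as half a win. This is the pairwise (Mann-Whitney) definition.
    """
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))

# Toy example: 1 = died in hospital, 0 = survived (made-up values).
y_true = [0, 0, 1, 0, 1]
y_prob = [0.1, 0.4, 0.35, 0.2, 0.8]

print(round(auroc(y_true, y_prob), 4))        # 0.8333
print(round(brier_score(y_true, y_prob), 4))  # 0.1345
```

Because a lower Brier score is better, the reported change from 0.37 to 0.17 is an improvement, in the same direction as the AUROC and AUPRC gains.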

Fine-Tuned In-Context Learners for Efficient Adaptation

cs.AI updates on arXiv.org

arXiv:2512.19879v1 Announce Type: cross

Abstract: When adapting large language models (LLMs) to a specific downstream task, two primary approaches are commonly employed: (1) prompt engineering, often with in-context few-shot learning, leveraging the model’s inherent generalization abilities, and (2) fine-tuning on task-specific data, directly optimizing the model’s parameters. While prompt-based methods excel in few-shot scenarios, their effectiveness often plateaus as more data becomes available. Conversely, fine-tuning scales well with data but may underperform when training examples are scarce. We investigate a unified approach that bridges these two paradigms by incorporating in-context learning directly into the fine-tuning process. Specifically, we fine-tune the model on task-specific data augmented with in-context examples, mimicking the structure of k-shot prompts. This approach, while requiring per-task fine-tuning, combines the sample efficiency of in-context learning with the performance gains of fine-tuning, leading to a method that consistently matches and often significantly exceeds both these baselines. To perform hyperparameter selection in the low-data regime, we propose to use prequential evaluation, which eliminates the need for expensive cross-validation and leverages all available data for training while simultaneously providing a robust validation signal. We conduct an extensive empirical study to determine which adaptation paradigm (fine-tuning, in-context learning, or our proposed unified approach) offers the best predictive performance on concrete downstream tasks.
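The two ideas in this abstract can be sketched in a few lines: formatting each training example as a k-shot prompt, and scoring a configuration prequentially (each example is predicted by a model fit only on the examples before it). Everything here is an assumption made for illustration: the prompt template, the helper names, and the toy majority-label "model" that stands in for fine-tuning. The paper's actual procedure may differ, for example by evaluating in blocks.

```python
import random
from collections import Counter

def build_k_shot_example(pool, target_idx, k, rng):
    """Return (prompt, completion): k in-context shots followed by the target query."""
    others = [ex for i, ex in enumerate(pool) if i != target_idx]
    shots = rng.sample(others, k)
    context = "".join(f"Input: {x}\nOutput: {y}\n\n" for x, y in shots)
    x, y = pool[target_idx]
    return context + f"Input: {x}\nOutput:", f" {y}"

def prequential_loss(data, fit, loss):
    """Average loss where example i is scored by a model fit on data[:i] only."""
    losses = [loss(fit(data[:i]), data[i]) for i in range(1, len(data))]
    return sum(losses) / len(losses)

# Toy stand-ins for training and evaluation (hypothetical, not an LLM).
def fit_majority(train):
    return Counter(label for _, label in train).most_common(1)[0][0]

def zero_one_loss(model, example):
    return 0.0 if model == example[1] else 1.0

data = [("great film", "pos"), ("loved it", "pos"),
        ("awful plot", "neg"), ("solid cast", "pos")]

prompt, completion = build_k_shot_example(data, target_idx=3, k=2, rng=random.Random(0))
score = prequential_loss(data, fit_majority, zero_one_loss)
```

Every example is used for training at some point and is scored before the model has seen it, which is how a validation signal is obtained without a held-out split.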

Mitigating LLM Hallucination via Behaviorally Calibrated Reinforcement Learning

cs.AI updates on arXiv.org

arXiv:2512.19920v1 Announce Type: cross

Abstract: LLM deployment in critical domains is currently impeded by persistent hallucinations, that is, the generation of plausible but factually incorrect assertions. While scaling laws drove significant improvements in general capabilities, theoretical frameworks suggest hallucination is not merely stochastic error but a predictable statistical consequence of training objectives that prioritize mimicking the data distribution over epistemic honesty. Standard RLVR paradigms, which use binary reward signals, inadvertently incentivize models to be good test-takers rather than honest communicators, encouraging guessing whenever correctness probability exceeds zero. This paper presents an exhaustive investigation into behavioral calibration, which incentivizes models to stochastically admit uncertainty by abstaining when not confident, aligning model behavior with accuracy. Synthesizing recent advances, we propose and evaluate training interventions optimizing strictly proper scoring rules for models to output a calibrated probability of correctness. Our methods enable models to either abstain from producing a complete response or flag individual claims where uncertainty remains. Using Qwen3-4B-Instruct, empirical analysis reveals that behavior-calibrated reinforcement learning allows smaller models to surpass frontier models in uncertainty quantification, a transferable meta-skill that can be decoupled from raw predictive accuracy. Trained on math reasoning tasks, our model’s log-scale Accuracy-to-Hallucination Ratio gain (0.806) exceeds GPT-5’s (0.207) in a challenging in-domain evaluation (BeyondAIME). Moreover, in cross-domain factual QA (SimpleQA), our 4B LLM achieves zero-shot calibration error on par with frontier models including Grok-4 and Gemini-2.5-Pro, even though its factual accuracy is much lower.
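The incentive argument can be made concrete with a small sketch. Both reward schemes below are illustrative assumptions, not the paper's training objective: an answer-or-abstain reward with a confidence threshold `t`, and the negative Brier score as one example of a strictly proper scoring rule.

```python
def expected_answer_reward(p, t):
    """Expected reward for answering when the answer is correct with probability p.

    Assumed scheme: +1 if correct, -t / (1 - t) if wrong, 0 for abstaining.
    With t = 0 this reduces to the binary reward, where a wrong answer costs nothing.
    """
    return p - (1.0 - p) * t / (1.0 - t)

def should_answer(p, t):
    """Answer only if it beats the abstention reward of 0; equivalent to p > t."""
    return expected_answer_reward(p, t) > 0.0

def expected_brier_reward(p, r):
    """Expected negative Brier score when true correctness probability is p
    and the model reports confidence r. It is maximized at r == p."""
    return -(p * (1.0 - r) ** 2 + (1.0 - p) * r ** 2)

# Binary reward (t = 0): even a 5% chance of being right favors guessing.
guess_under_binary = should_answer(0.05, 0.0)

# Thresholded reward (t = 0.75): answer at 80% confidence, abstain at 60%.
answer_at_80 = should_answer(0.80, 0.75)
answer_at_60 = should_answer(0.60, 0.75)

# Under the Brier reward, reporting the true probability is optimal.
grid = [i / 100 for i in range(101)]
best_report = max(grid, key=lambda r: expected_brier_reward(0.3, r))
```

Under the binary reward, guessing is never worse than abstaining, which is the test-taker incentive the abstract describes. A penalty for wrong answers moves the break-even point to `p > t`.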

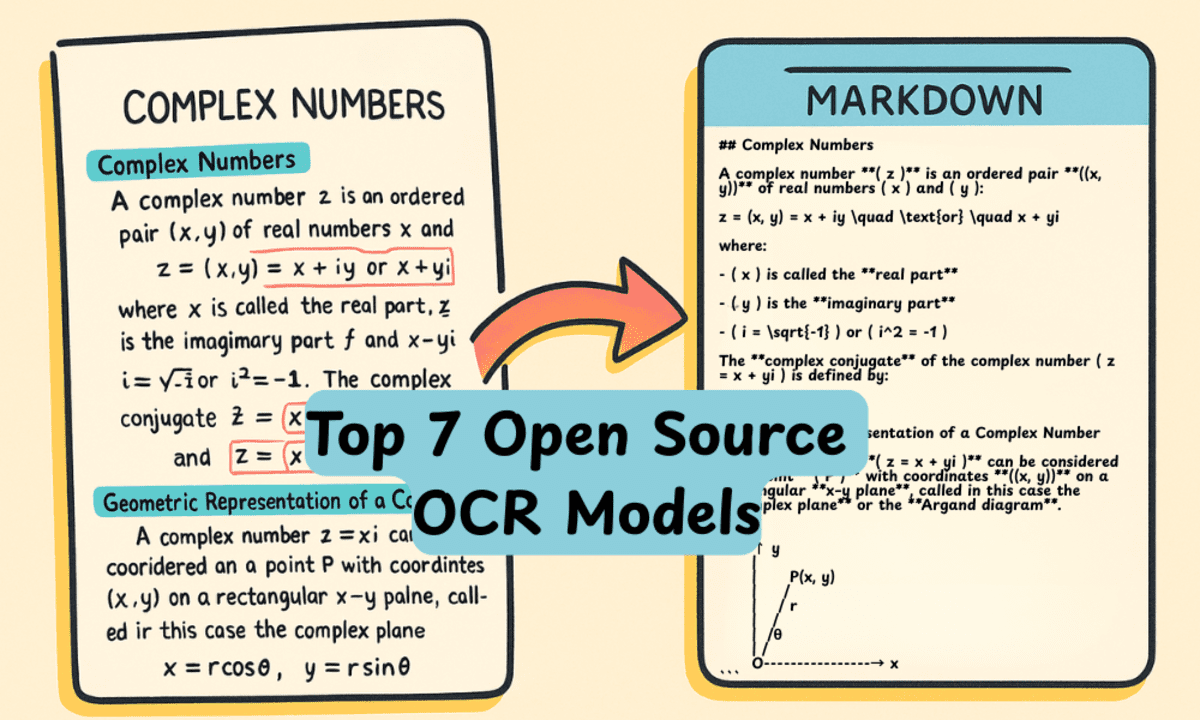

Top 7 Open Source OCR Models

KDnuggets

Best OCR and vision language models you can run locally that transform documents, tables, and diagrams into flawless markdown copies with benchmark-crushing accuracy.

Discovering Lie Groups with Flow Matching

cs.AI updates on arXiv.org

arXiv:2512.20043v1 Announce Type: new

Abstract: Symmetry is fundamental to understanding physical systems, and at the same time, can improve performance and sample efficiency in machine learning. Both pursuits require knowledge of the underlying symmetries in data. To address this, we propose learning symmetries directly from data via flow matching on Lie groups. We formulate symmetry discovery as learning a distribution over a larger hypothesis group, such that the learned distribution matches the symmetries observed in data. Relative to previous works, our method, lieflow, is more flexible in terms of the types of groups it can discover and requires fewer assumptions. Experiments on 2D and 3D point clouds demonstrate the successful discovery of discrete groups, including reflections by flow matching over the complex domain. We identify a key challenge where the symmetric arrangement of the target modes causes “last-minute convergence,” where samples remain stationary until relatively late in the flow, and introduce a novel interpolation scheme for flow matching for symmetry discovery.

Emergent temporal abstractions in autoregressive models enable hierarchical reinforcement learning

cs.AI updates on arXiv.org

arXiv:2512.20605v1 Announce Type: cross

Abstract: Large-scale autoregressive models pretrained on next-token prediction and finetuned with reinforcement learning (RL) have achieved unprecedented success in many problem domains. During RL, these models explore by generating new outputs, one token at a time. However, sampling actions token-by-token can result in highly inefficient learning, particularly when rewards are sparse. Here, we show that it is possible to overcome this problem by acting and exploring within the internal representations of an autoregressive model. Specifically, to discover temporally-abstract actions, we introduce a higher-order, non-causal sequence model whose outputs control the residual stream activations of a base autoregressive model. On grid world and MuJoCo-based tasks with hierarchical structure, we find that the higher-order model learns to compress long activation sequence chunks onto internal controllers. Critically, each controller executes a sequence of behaviorally meaningful actions that unfold over long timescales and are accompanied by a learned termination condition, such that composing multiple controllers over time leads to efficient exploration on novel tasks. We show that direct internal controller reinforcement, a process we term “internal RL”, enables learning from sparse rewards in cases where standard RL finetuning fails. Our results demonstrate the benefits of latent action generation and reinforcement in autoregressive models, suggesting internal RL as a promising avenue for realizing hierarchical RL within foundation models.
