Introduction

Amazon Web Services quietly changed everything about enterprise machine learning in late 2025. The updated Amazon SageMaker is a complete architectural rethink that merges data engineering, analytics, and ML into one unified platform.

The SageMaker Story: From ML Tool to Data Platform

SageMaker launched at AWS re:Invent in November 2017. Andy Jassy (then AWS CEO) introduced it as “an easy way to train and deploy machine learning models for everyday developers.” The timing was intentional. In 2017, machine learning still required specialized skills that most organizations simply didn’t have. PhDs, research scientists, and well-funded tech companies dominated the space. Everyone else watched from the sidelines.

The original SageMaker addressed this gap with three core components: Jupyter notebooks for data exploration, managed training infrastructure, and one-click model deployment. AWS handled the underlying complexity (node failures, auto-scaling, security patching) so developers could focus on the actual ML work.

The approach worked. SageMaker became one of the fastest-growing services in AWS history. By its fifth anniversary in 2022, tens of thousands of customers had created millions of models using the platform. AWS kept adding capabilities (over 380 features since launch) including SageMaker Studio, the first integrated development environment purpose-built for machine learning.

But the landscape shifted. Foundation models and Large Language Models changed what “machine learning” meant for enterprises. Training runs grew from hours to weeks. Data pipelines became more important than model architecture. Organizations realized they weren’t just building models anymore. They were building data systems that happened to include ML.

The 2025 release responds to that reality.

What Is Amazon SageMaker AI?

Amazon SageMaker is AWS’s end-to-end data and machine learning platform, which AWS now positions as a “Data and AI” operating system. It encompasses both the broader data ecosystem (Unified Studio, Lakehouse, Catalog) and Amazon SageMaker AI, which handles the ML-specific capabilities like model training, inference, and MLOps.

The platform integrates Amazon Athena, Amazon EMR, AWS Glue, and Amazon Redshift directly into the SageMaker interface through its new Unified Studio component.

This matters because modern AI development (particularly with Large Language Models) is predominantly a data logistics challenge rather than purely a model architecture problem. AWS built SageMaker to address that reality directly.

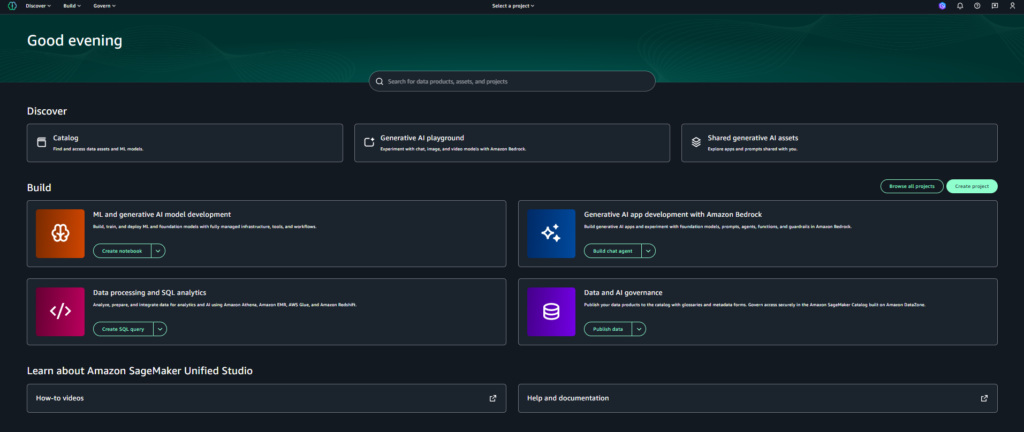

The SageMaker Unified Studio

The centerpiece of the 2025 release is SageMaker Unified Studio. Previously, AWS developers navigated separate consoles for ETL (AWS Glue), SQL queries (Amazon Athena), and model training (SageMaker Studio). That fragmentation created friction, latency, and governance gaps.

Function:

Unified Studio consolidates these tools into a single environment. Data engineers can define transformation pipelines in Glue, and data scientists immediately see the resulting feature sets in SageMaker Feature Store. Lineage and governance stay intact throughout the process.

Three capabilities stand out:

Serverless Compute Abstraction. The system automatically provisions resources when users initiate queries or transformations. A data scientist can run a SQL query against petabyte-scale data, and compute scales dynamically. When the job finishes, resources scale back to zero. No more “idle tax” from pre-provisioned clusters.

Project-Centric Governance. SageMaker now offers one-click onboarding that automatically inherits data permissions from AWS Lake Formation and the Glue Data Catalog. IAM execution roles generate in the background. This addresses the historic complexity of AWS identity management for ML environments.

Polyglot Notebooks. Users can interleave SQL, Python, and natural language prompts in single notebooks. The compute backend (Athena for Spark) scales transparently based on workload requirements.

Benefits:

Why does this matter? Unified Studio reduces development time by eliminating context switching and lowers cost by provisioning compute only when needed. Teams see faster experimentation cycles, lower infrastructure waste, and fewer IAM and access misconfigurations.
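The “idle tax” argument can be made concrete with a little arithmetic. The sketch below compares an always-on cluster with compute that scales to zero between jobs. The hourly rate and usage figures are hypothetical, and it assumes both options bill at the same rate per hour, which real serverless pricing may not match.

```python
def provisioned_cost(hourly_rate: float, hours_provisioned: float) -> float:
    """Cost of a cluster that bills for every hour it is up, busy or idle."""
    return hourly_rate * hours_provisioned


def scale_to_zero_cost(hourly_rate: float, busy_hours: float) -> float:
    """Cost when compute is released between jobs, so only busy hours bill."""
    return hourly_rate * busy_hours


# Hypothetical workload: a $12/hour cluster left running for a 30-day month,
# but actually executing queries for only 90 of those 720 hours.
HOURLY_RATE = 12.0
always_on = provisioned_cost(HOURLY_RATE, 24 * 30)
serverless = scale_to_zero_cost(HOURLY_RATE, 90)
idle_tax = always_on - serverless

print(f"always-on: ${always_on:,.2f}")
print(f"scale-to-zero: ${serverless:,.2f}")
print(f"idle tax: ${idle_tax:,.2f}")
```

Under these assumptions the gap is driven entirely by utilization: the lower the share of busy hours, the larger the saving from scaling to zero.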

The SageMaker Lakehouse Architecture

Function:

The SageMaker Lakehouse is AWS’s unified data architecture that allows analytics engines and ML workloads to operate on the same datasets using open table formats. AWS formalized the Amazon SageMaker Lakehouse to compete with Databricks Lakehouse and Microsoft Fabric OneLake.

Capabilities and Benefits:

The architecture standardizes on Apache Iceberg as the open table format. Iceberg tables support ACID transactions, enabling Redshift, Athena, EMR, and third-party tools to safely read and write shared datasets with transactional guarantees. No more data corruption from concurrent access.
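Iceberg gets these guarantees from optimistic concurrency: each writer prepares a new table snapshot and then asks the catalog to atomically swap the “current” pointer, retrying if another writer got there first. The toy model below illustrates that idea in plain Python. `ToyCatalog`, `Snapshot`, and `append_files` are illustrative names, not part of the Iceberg or AWS APIs.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    files: tuple  # immutable list of data file names in this table version


class CommitConflict(Exception):
    """Raised when another writer committed before this one."""


class ToyCatalog:
    """Holds the pointer to the current snapshot and swaps it atomically."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._current = Snapshot(0, ())

    def current(self) -> Snapshot:
        return self._current

    def compare_and_swap(self, expected: Snapshot, new: Snapshot) -> None:
        with self._lock:
            if self._current.snapshot_id != expected.snapshot_id:
                raise CommitConflict("table changed since it was read")
            self._current = new


def append_files(catalog: ToyCatalog, new_files, max_retries: int = 16) -> Snapshot:
    """Optimistic commit: read the base, build a candidate, swap, retry on conflict."""
    for _ in range(max_retries):
        base = catalog.current()
        candidate = Snapshot(base.snapshot_id + 1, base.files + tuple(new_files))
        try:
            catalog.compare_and_swap(base, candidate)
            return candidate
        except CommitConflict:
            continue  # someone else won; rebuild on top of their snapshot
    raise CommitConflict("gave up after repeated conflicts")


# Eight concurrent writers each append one file to the same table.
catalog = ToyCatalog()
writers = [
    threading.Thread(target=append_files, args=(catalog, [f"part-{i}.parquet"]))
    for i in range(8)
]
for w in writers:
    w.start()
for w in writers:
    w.join()

final = catalog.current()
print(final.snapshot_id, len(final.files))
```

Because a commit only succeeds against the snapshot it was built from, no writer can silently overwrite another's files. Readers always see one complete snapshot or the next, never a half-written state.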

The SageMaker Catalog

Function:

SageMaker Catalog provides a governed inventory of data assets, models, features, and experiments across the organization. The Glue Data Catalog serves as the Iceberg catalog and supports the Iceberg REST API. Third-party tools like Snowflake or Databricks can access SageMaker-managed data without physical data movement.

Capabilities and Benefits:

The platform also automates data maintenance tasks that typically require dedicated engineering time. Compaction (merging small files), snapshot expiration (cleaning old data versions), and table optimization now run as background processes.
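To show what these background tasks decide, here is a minimal sketch of compaction planning and snapshot expiration. It is not the AWS implementation: the function names, the 512 MB target size, the 128 MB “small file” threshold, and the seven-day retention window are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def plan_compaction(file_sizes_mb, target_mb=512, small_threshold_mb=128):
    """Group small files into merge batches that stay at or under target_mb."""
    small = sorted((s for s in file_sizes_mb if s < small_threshold_mb), reverse=True)
    groups, current, total = [], [], 0
    for size in small:
        if current and total + size > target_mb:
            groups.append(current)
            current, total = [], 0
        current.append(size)
        total += size
    if current:
        groups.append(current)
    # Rewriting a single file gains nothing, so only keep real merges.
    return [g for g in groups if len(g) > 1]


def snapshots_to_keep(snapshot_times, now, max_age, keep_last=1):
    """Keep the newest keep_last snapshots plus any younger than max_age."""
    ordered = sorted(snapshot_times, reverse=True)
    protected = set(ordered[:keep_last])
    return [t for t in ordered if t in protected or now - t <= max_age]


# Hypothetical table: two large files and nine small ones (sizes in MB).
sizes = [8, 700, 16, 120, 100, 64, 90, 110, 5, 600, 32]
merge_groups = plan_compaction(sizes)
print(merge_groups)

# Hypothetical snapshot history, expired with a 7-day retention window.
now = datetime(2025, 12, 1)
history = [now - timedelta(days=d) for d in (1, 3, 10, 30)]
kept = snapshots_to_keep(history, now, max_age=timedelta(days=7))
print([t.date().isoformat() for t in kept])
```

In this example nine small files collapse into two merged files, and the two snapshots older than a week become eligible for cleanup.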

The practical benefit? Teams no longer need to copy data between warehouses and ML pipelines. This reduces data duplication, storage cost, and synchronization failures. Organizations see reduced storage and ETL costs, faster model feature availability, and lower risk of inconsistent datasets across the enterprise.

SageMaker AI HyperPod: Infrastructure for Large-Scale Training

Foundation Models keep growing. Training runs can take weeks. Hardware failures during these runs become nearly certain at scale.

Amazon SageMaker AI HyperPod addresses these infrastructure challenges. Unlike standard SageMaker training jobs (which are ephemeral), HyperPod clusters persist and support complex job scheduling.

Auto-Recovery. HyperPod continuously monitors instance health and replaces faulty nodes automatically, often without requiring full training restarts.

Elastic Training. Training jobs can expand and contract based on resource availability. If additional capacity becomes available, jobs expand into it. If the cluster faces pressure, jobs contract without terminating completely.

Checkpointless Training. Traditional large-scale training requires frequent checkpoints (saving model state to disk). As models reach terabyte scale, writing checkpoints can take hours while expensive GPUs sit idle. HyperPod’s checkpointless mechanism uses peer-to-peer transfer of model states between healthy accelerators and replacement nodes. AWS claims this enables over 95% training goodput on clusters with thousands of accelerators.
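Goodput here means the share of wall-clock time that turns into retained training progress. The sketch below is a simplified model of why removing checkpoint pauses and shortening recovery raises that share. Every number in it is hypothetical, and it neither reproduces nor verifies AWS's 95% figure.

```python
from typing import Optional


def goodput(
    useful_hours: float,
    checkpoint_interval_h: Optional[float],
    checkpoint_write_h: float,
    failures: int,
    recovery_h: float,
    lost_work_h: float,
) -> float:
    """Useful training time divided by total wall-clock time.

    Overhead comes from two places: pauses while checkpoints are written,
    and, for each failure, the recovery time plus the work that is redone.
    Pass checkpoint_interval_h=None to model a run with no checkpoint pauses.
    """
    checkpoint_overhead = 0.0
    if checkpoint_interval_h:
        checkpoint_overhead = (useful_hours / checkpoint_interval_h) * checkpoint_write_h
    failure_overhead = failures * (recovery_h + lost_work_h)
    return useful_hours / (useful_hours + checkpoint_overhead + failure_overhead)


# Hypothetical two-week run (336 useful hours) that hits 20 hardware failures.
with_checkpoints = goodput(
    useful_hours=336,
    checkpoint_interval_h=2.0,   # checkpoint every 2 hours
    checkpoint_write_h=0.25,     # accelerators idle 15 minutes per write
    failures=20,
    recovery_h=0.5,              # restart and reload from storage
    lost_work_h=1.0,             # on average half an interval is redone
)
peer_recovery = goodput(
    useful_hours=336,
    checkpoint_interval_h=None,  # no blocking checkpoint writes
    checkpoint_write_h=0.0,
    failures=20,
    recovery_h=0.05,             # state copied from healthy peers
    lost_work_h=0.02,
)

print(f"checkpoint-based goodput: {with_checkpoints:.1%}")
print(f"peer-recovery goodput:    {peer_recovery:.1%}")
```

The model ignores many real effects (overlapped checkpoint writes, correlated failures, scheduling delays), but it shows which two terms checkpointless recovery attacks.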

The platform also integrates AWS custom silicon. Trainium3 UltraServers connect multiple chips via high-bandwidth networks, positioned to deliver faster response times and higher throughput compared to previous generations.

The Data Agent: Agentic Development

SageMaker now embeds reasoning capabilities directly into the development workflow through the SageMaker Data Agent.

The agent understands the user’s specific data catalog and environment. When a user provides a natural language prompt (“Analyze customer churn patterns in Q3 sales data”), the agent generates a multi-step execution plan and writes corresponding Spark SQL or Python code.

Crucially, the agent handles self-correction. If generated code fails, it analyzes the error, hypothesizes a fix, and offers auto-correction options. This reduces the debugging loop that often consumes substantial portions of data science time.
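The generate, run, and repair cycle described above can be sketched as a small loop. This is an illustration of the pattern only, not the Data Agent's implementation: `run_with_self_correction` and `propose_fix` are hypothetical names, the fixer is a hard-coded stub standing in for a model call, and the sketch applies fixes automatically where the real agent offers them to the user.

```python
from typing import Callable, List, Optional, Tuple

Attempt = Tuple[str, Optional[str]]  # (code that was run, error message or None)


def run_with_self_correction(
    code: str,
    propose_fix: Callable[[str, str], str],
    max_attempts: int = 3,
):
    """Run code; on failure, pass the error to propose_fix and retry."""
    attempts: List[Attempt] = []
    for _ in range(max_attempts):
        namespace: dict = {}
        try:
            exec(code, namespace)  # only safe for trusted, sandboxed code
            attempts.append((code, None))
            return namespace, attempts
        except Exception as exc:
            error = f"{type(exc).__name__}: {exc}"
            attempts.append((code, error))
            code = propose_fix(code, error)
    raise RuntimeError(f"still failing after {max_attempts} attempts")


def stub_fixer(code: str, error: str) -> str:
    """Stand-in for the agent's reasoning: handles one known error pattern."""
    if error.startswith("NameError") and "statistics" in error:
        return "import statistics\n" + code
    return code


# The first draft forgets an import, so the first attempt fails.
draft = "result = statistics.mean([3, 5, 10])"
namespace, attempts = run_with_self_correction(draft, stub_fixer)

print(namespace["result"])
for code, error in attempts:
    print("ok" if error is None else error)
```

Keeping the attempt history is a deliberate choice: it gives the user an audit trail of what was tried and why each revision was made.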

MLOps and Governance

SageMaker AI standardizes on MLflow for experiment tracking. The platform provides managed MLflow Tracking Servers that launch in minutes without infrastructure provisioning. SageMaker AI Pipelines automatically log metrics and artifacts to associated MLflow instances.

For governance, SageMaker Catalog (built on Amazon DataZone) uses LLMs to auto-generate business descriptions and context for data assets. This AI Recommendations feature is priced at $0.015 per 1,000 input tokens.

Pricing Considerations

SageMaker pricing rewards sophisticated FinOps management. Key models include:

- Pay-As-You-Go: Billed per second, maximum flexibility, highest cost

- SageMaker Savings Plans: Discounts of up to 64% for 1- or 3-year commitments that float across instance families and Regions

Hidden costs exist. The Data Agent operates on credits ($0.04 per credit), and complex prompts can consume 4-8 credits per interaction. Metadata storage runs $0.40/GB/month after a 20 MB free tier.
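A rough estimator helps when budgeting these line items. The sketch below uses only the rates quoted in this section; the usage volumes are hypothetical, and it assumes 1 GB = 1,024 MB for the free tier and that a Savings Plan bills its discounted rate for every hour of the commitment. Check current AWS pricing before relying on any of these figures.

```python
CREDIT_PRICE_USD = 0.04          # per Data Agent credit
CREDITS_PER_PROMPT = (4, 8)      # range quoted for complex prompts
METADATA_PRICE_PER_GB = 0.40     # per GB-month
FREE_METADATA_MB = 20            # free tier
MAX_SAVINGS_PLAN_DISCOUNT = 0.64


def data_agent_monthly_cost(prompts_per_month: int):
    """Return the (low, high) monthly cost range for complex prompts."""
    low, high = CREDITS_PER_PROMPT
    return (
        round(prompts_per_month * low * CREDIT_PRICE_USD, 2),
        round(prompts_per_month * high * CREDIT_PRICE_USD, 2),
    )


def metadata_monthly_cost(stored_gb: float) -> float:
    """Monthly metadata storage cost after subtracting the free tier."""
    free_gb = FREE_METADATA_MB / 1024  # assumption: binary megabytes
    billable_gb = max(0.0, stored_gb - free_gb)
    return round(billable_gb * METADATA_PRICE_PER_GB, 2)


def break_even_utilization(discount: float) -> float:
    """Utilization above which a commitment beats pay-as-you-go.

    Committed cost = (1 - discount) * rate * all hours;
    on-demand cost = rate * used hours. They are equal when
    used hours / all hours = 1 - discount.
    """
    return round(1.0 - discount, 2)


# Hypothetical team: 500 complex prompts a month and 5 GB of metadata.
agent_low, agent_high = data_agent_monthly_cost(500)
metadata_cost = metadata_monthly_cost(5)
break_even = break_even_utilization(MAX_SAVINGS_PLAN_DISCOUNT)

print(f"Data Agent: ${agent_low:.2f} to ${agent_high:.2f} per month")
print(f"Metadata storage: ${metadata_cost:.2f} per month")
print(f"Savings Plan break-even utilization: {break_even:.0%}")
```

The break-even figure is the useful one for planning: at the maximum quoted discount, a commitment pays off only if the committed capacity is in use for more than roughly a third of the term, and smaller discounts push that threshold higher.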

Competitive Position

SageMaker competes directly with Databricks and Google Vertex AI.

Against Databricks, SageMaker’s advantage is the breadth of AWS ecosystem integration (native connections to Kinesis, Lambda, DynamoDB without egress charges). Databricks maintains advantages in data processing with its Photon engine and Delta Lake format maturity.

Against Google Vertex AI, SageMaker maintains model neutrality through Amazon Bedrock and SageMaker AI JumpStart, offering equal access to Anthropic Claude, Meta Llama, Mistral, and others. Vertex AI is optimized primarily for Google’s Gemini models.

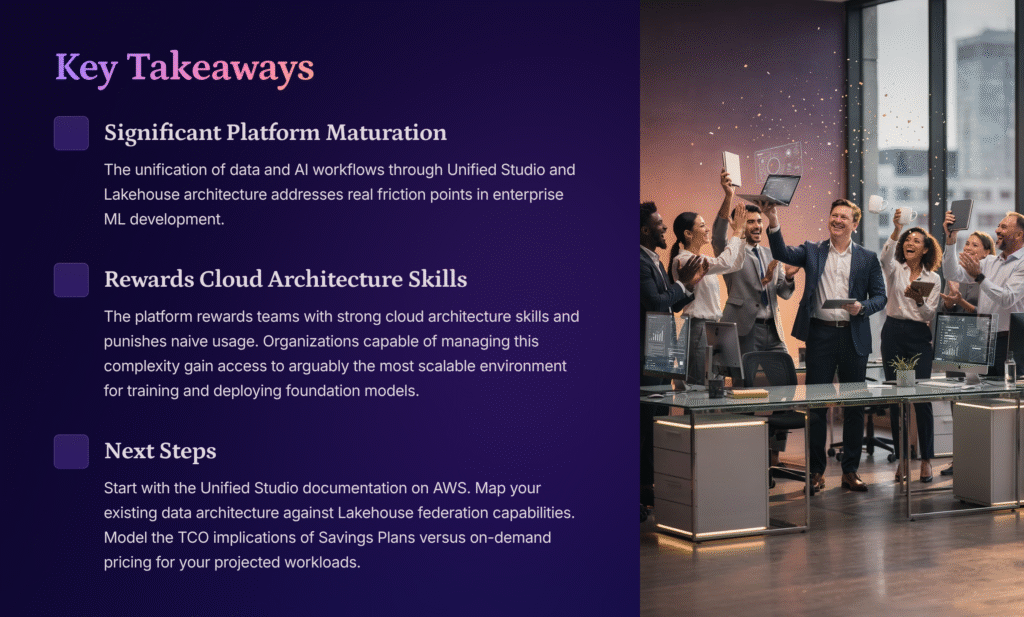

Key Takeaways

SageMaker represents a significant maturation of AWS’s ML platform strategy. The unification of data and AI workflows through Unified Studio and Lakehouse architecture addresses real friction points in enterprise ML development.

The platform rewards teams with strong cloud architecture skills and punishes naive usage. Organizations capable of managing this complexity gain access to arguably the most scalable environment for training and deploying foundation models.

Start with the Unified Studio documentation on AWS. Map your existing data architecture against the Lakehouse federation capabilities. Model the TCO implications of Savings Plans versus on-demand pricing for your projected workloads.

References

Primary Source

- “Exploring Amazon SageMaker Capabilities” (Amazon Web Services, December 2025)

AWS Official Documentation

- Amazon SageMaker Product Page. https://aws.amazon.com/sagemaker/

- Amazon SageMaker Unified Studio Documentation: Getting Started. https://docs.aws.amazon.com/sagemaker-unified-studio/latest/userguide/getting-started.html

- Support for Apache Iceberg in the SageMaker Lakehouse Architecture. https://docs.aws.amazon.com/sagemaker-lakehouse-architecture/latest/userguide/lakehouse-iceberg.html

- Amazon SageMaker Pricing. https://aws.amazon.com/sagemaker/pricing/

- Amazon SageMaker AI HyperPod Features. https://aws.amazon.com/sagemaker/ai/hyperpod/features/

- Amazon SageMaker AI Pipelines. https://aws.amazon.com/sagemaker/ai/pipelines/

- AWS Lake Formation. https://aws.amazon.com/lake-formation/

- Amazon DataZone. https://aws.amazon.com/datazone/

- Amazon Bedrock. https://aws.amazon.com/bedrock/

AWS Blog Posts

- “Collaborate and Build Faster with Amazon SageMaker Unified Studio – Now Generally Available.” AWS News Blog. https://aws.amazon.com/blogs/aws/collaborate-and-build-faster-with-amazon-sagemaker-unified-studio-now-generally-available/

- “AWS Celebrates 5 Years of Innovation with Amazon SageMaker.” AWS Machine Learning Blog, October 2022. https://aws.amazon.com/blogs/machine-learning/aws-celebrates-5-years-of-innovation-with-amazon-sagemaker/

- “New One-Click Onboarding and Notebooks with AI Agent in Amazon SageMaker Unified Studio.” AWS News Blog. https://aws.amazon.com/blogs/aws/new-one-click-onboarding-and-notebooks-with-ai-agent-in-amazon-sagemaker-unified-studio/

- “Accelerate AI Development Using Amazon SageMaker AI with Serverless MLflow.” AWS News Blog. https://aws.amazon.com/blogs/aws/accelerate-ai-development-using-amazon-sagemaker-ai-with-serverless-mlflow/

- “Introducing Checkpointless and Elastic Training on Amazon SageMaker HyperPod.” AWS News Blog. https://aws.amazon.com/blogs/aws/introducing-checkpointless-and-elastic-training-on-amazon-sagemaker-hyperpod/

- “Adaptive Infrastructure for Foundation Model Training with Elastic Training on SageMaker HyperPod.” AWS Machine Learning Blog. https://aws.amazon.com/blogs/machine-learning/adaptive-infrastructure-for-foundation-model-training-with-elastic-training-on-sagemaker-hyperpod/

- “Access Amazon S3 Iceberg Tables from Databricks Using AWS Glue Iceberg REST Catalog in Amazon SageMaker Lakehouse.” AWS Big Data Blog. https://aws.amazon.com/blogs/big-data/access-amazon-s3-iceberg-tables-from-databricks-using-aws-glue-iceberg-rest-catalog-in-amazon-sagemaker-lakehouse/

- “The Amazon SageMaker Lakehouse Architecture Now Automates Optimization Configuration of Apache Iceberg Tables on Amazon S3.” AWS Big Data Blog. https://aws.amazon.com/blogs/big-data/the-amazon-sagemaker-lakehouse-architecture-now-automates-optimization-configuration-of-apache-iceberg-tables-on-amazon-s3/

Historical Sources

- Miller, Ron. “AWS Releases SageMaker to Make It Easier to Build and Deploy Machine Learning Models.” TechCrunch, November 29, 2017. https://techcrunch.com/2017/11/29/aws-releases-sagemaker-to-make-it-easier-to-build-and-deploy-machine-learning-models/

- “Amazon SageMaker’s Fifth Birthday: Looking Back, Looking Forward.” Amazon Science, October 2024. https://www.amazon.science/blog/amazon-sagemakers-fifth-birthday-looking-back-looking-forward

- “Amazon SageMaker.” Wikipedia. https://en.wikipedia.org/wiki/Amazon_SageMaker

Competitor References

- Databricks. https://www.databricks.com/

- Google Cloud Vertex AI. https://cloud.google.com/vertex-ai