AI Governance EU AI ACT Guide

EU AI Act Explained: Obligations, Risk Tiers & Who It Applies To

Credentials: CISSP, CRISC, CCSP

The EU AI Act establishes harmonized rules for developing, placing, and using AI systems in the EU market (Article 1). It’s the first regulation to classify AI systems by risk tier and attach binding obligations to each one.

You don’t have to sell directly to EU customers to fall within scope. The Act reaches any organization whose AI system’s output is used within the EU, and it assigns obligations across the entire value chain: providers who develop AI systems, deployers who use them professionally, importers and distributors who bring them to market, and product manufacturers who embed AI into their offerings (Articles 2 and 3). A US company building an AI model that a downstream customer uses to serve EU clients? In scope. A US company reselling another vendor’s AI tool under their own brand? Potentially reclassified as a provider (Article 25).

The workforce blind spot is equally significant. US companies with employees located in the EU face deployer obligations when AI systems touch those workers, regardless of citizenship. The Act specifically classifies employment-related AI as high-risk (Annex III, point 4). That covers AI used in recruitment screening, performance monitoring, promotion and termination decisions, and task allocation. The performance analytics dashboard flagging underperformers in your Dublin office, the automated resume screener processing applications for your Berlin team, the productivity tracking tool scoring your Warsaw support staff? All potentially high-risk deployments carrying obligations around transparency, human oversight, fundamental rights impact assessments, and worker notification (Articles 26 and 27).

This hub covers the Act’s four-tier risk framework, the obligations tied to each classification, and the compliance deadlines your organization needs to track. It explains which AI systems are prohibited, which require conformity assessments and human oversight, and which fall under lighter transparency requirements. Content is organized by role: CTOs planning AI strategy, compliance officers mapping regulatory requirements, risk managers conducting impact assessments, and teams evaluating whether their current AI systems fall under the Act’s scope.

- Beginner: What is the EU AI Act

- Intermediate: Implementation Roadmaps

- Advanced: Compliance monitoring systems

EU AI ACT Article Tracker Reference - Example - Demo Only

Disclaimer: This tracker is an illustrative example designed to demonstrate how the spreadsheet can be used. Columns such as Time to Complete, Completion %, ROI Timeline, Cost Implication, Order, and Implementation are pre-filled with sample data to show the tracker’s functionality. If you choose to use this tracker, you’ll need to verify and update each column to reflect your organization’s specific use case, requirements, and compliance obligations.

EU AI ACT Risk Categories

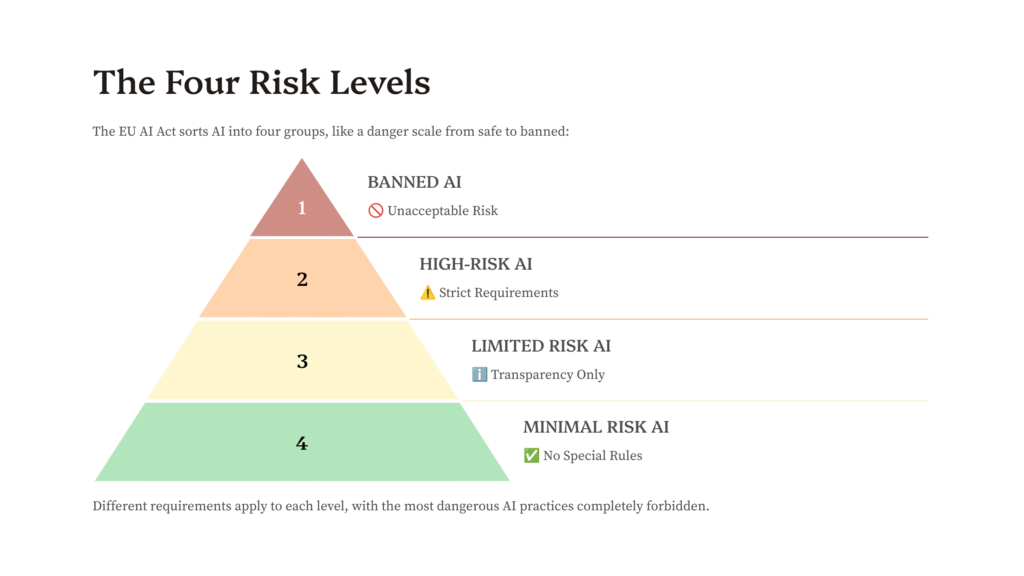

The Act establishes different risk categories through Articles 5 (prohibited practices), 6 (high-risk classification), and 50 (transparency obligations), which are commonly interpreted as a four-tier framework.

Prohibited AI Systems Under the EU AI Act

- Article 5: Prohibited AI Practices

- Commission publishes the Guidelines on prohibited artificial intelligence (AI) practices, as defined by the AI Act

The EU AI Act prohibits the following categories of AI systems:

Behavioral Manipulation: AI systems that manipulate human behavior to circumvent users’ free will, such as voice-assisted toys that encourage dangerous behavior in minors.

Social Scoring: Systems that allow social scoring by governments or companies.

Predictive Policing: Certain applications of predictive policing.

Biometric Systems

Article 5(1)(h) on biometric identification

- Emotion recognition systems used at the workplace

- Some systems for categorizing people

- Real-time remote biometric identification for law enforcement purposes in publicly accessible spaces (with narrow exceptions)

Discrimination and Exploitation: AI systems that discriminate against individuals or exploit their vulnerabilities.

Penalties and Timeline

Article 99: Penalties

Violations in this category carry the highest fines under the Act: up to €35 million or 7% of global annual turnover, whichever is higher.
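The "whichever is higher" rule above is simple arithmetic, and a short sketch can make it concrete. The function name `fine_ceiling` and the turnover figures are hypothetical, chosen only for illustration; the result is the statutory maximum, not a prediction of the fine a regulator would actually impose.

```python
def fine_ceiling(fixed_cap_eur: int, turnover_pct: int, global_turnover_eur: int) -> int:
    """Return the upper limit of a fine: the higher of a fixed cap and a share of turnover.

    All amounts are whole euros; turnover_pct is a whole-number percentage.
    """
    turnover_based_cap = global_turnover_eur * turnover_pct // 100
    return max(fixed_cap_eur, turnover_based_cap)


# Figures for prohibited practices, taken from the text above.
PROHIBITED_FIXED_CAP_EUR = 35_000_000
PROHIBITED_TURNOVER_PCT = 7

# Hypothetical company with EUR 100 million turnover: 7% is EUR 7 million,
# so the fixed cap of EUR 35 million is the higher figure.
smaller_company = fine_ceiling(PROHIBITED_FIXED_CAP_EUR, PROHIBITED_TURNOVER_PCT, 100_000_000)

# Hypothetical company with EUR 2 billion turnover: 7% is EUR 140 million,
# which exceeds the fixed cap.
larger_company = fine_ceiling(PROHIBITED_FIXED_CAP_EUR, PROHIBITED_TURNOVER_PCT, 2_000_000_000)

print(smaller_company)  # 35000000
print(larger_company)   # 140000000
```

For large organizations the turnover-based figure, not the headline fixed amount, usually sets the ceiling.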

Prohibitions for these systems apply from February 2, 2025, six months after the AI Act entered into force on August 1, 2024.

High-Risk AI Systems Under the EU AI Act

Article 6: Classification Rules for High-Risk AI Systems

High-risk AI systems are subject to stringent requirements because they pose significant risks to health and safety or fundamental rights.

Classification Criteria

The AI Act identifies high-risk systems through two main categories:

1. AI as Safety Components (Annex I): AI systems intended to be used as a safety component of a product, or where the AI system is itself a product, covered by specific EU harmonization legislation including:

- Medical devices

- Toys

- Machinery

- Vehicles

- Civil aviation

- Lifts

- Radio equipment

This classification applies when the product incorporating the AI system requires third-party conformity assessment under that legislation.

2. Specific Use Cases (Annex III): AI systems used in the following sensitive areas:

- Critical infrastructure: Traffic, electricity, gas, water management

- Employment and worker management: Evaluating job applications, making decisions about promotions

- Education and vocational training: Determining access to institutions, evaluating learning outcomes, detecting cheating

- Law enforcement: Risk assessment of criminal offenses, reliability of evidence, profiling

- Migration, asylum, and border control: Polygraphs, risk assessment for entry/visa/asylum

- Administration of justice and democratic processes

- Access to essential services: Healthcare, social security, creditworthiness, insurance

- Biometric systems: Identification, categorization, and emotion recognition (with exceptions for verification or cybersecurity purposes)

AI systems under Annex III are always considered high-risk if they perform profiling of individuals.
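The two classification routes described above can be expressed as a first-pass screening function. This is a minimal sketch, not a legal determination: the `AISystem` fields, the area labels in `ANNEX_III_AREAS`, and the reason strings are hypothetical names introduced for illustration, and the sketch ignores the detailed conditions and exceptions in Article 6 and the annexes.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical short labels for the Annex III areas listed above.
ANNEX_III_AREAS = {
    "critical_infrastructure",
    "employment",
    "education",
    "law_enforcement",
    "migration_border_control",
    "justice_democratic_processes",
    "essential_services",
    "biometrics",
}


@dataclass
class AISystem:
    name: str
    annex_i_safety_component: bool = False      # safety component of, or itself, an Annex I product
    needs_third_party_assessment: bool = False  # product requires third-party conformity assessment
    annex_iii_area: Optional[str] = None        # one of ANNEX_III_AREAS, if any
    performs_profiling: bool = False


def is_high_risk(system: AISystem) -> tuple:
    """Return (flag, reason) for a first-pass high-risk screening."""
    if system.annex_i_safety_component and system.needs_third_party_assessment:
        return True, "Annex I product requiring third-party conformity assessment"
    if system.annex_iii_area in ANNEX_III_AREAS:
        if system.performs_profiling:
            return True, "Annex III use case with profiling"
        return True, "Annex III use case"
    return False, "no Annex I or Annex III trigger identified"


screener = AISystem("resume-screener", annex_iii_area="employment", performs_profiling=True)
print(is_high_risk(screener))  # (True, 'Annex III use case with profiling')
```

A negative result here only means no trigger was recorded in the inventory; it still needs review by someone who knows how the system is actually used.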

Requirements for Providers

Providers of high-risk AI systems must:

- Implement a continuous risk management system throughout the AI system’s lifecycle

- Ensure data governance for training/validation/testing datasets

- Draw up technical documentation

- Enable record-keeping (logs)

- Provide instructions for use to deployers

- Allow for human oversight

- Achieve appropriate levels of accuracy, robustness, and cybersecurity

- Establish a quality management system

- Perform a conformity assessment

- Register the system in a central EU database
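The provider requirements above lend themselves to a simple gap check per system. The sketch below is one possible shape for it: the obligation keys and the `outstanding` helper are hypothetical, and the article numbers follow the article reference guide later on this page.

```python
# Hypothetical keys for the provider obligations listed above, mapped to the
# articles named in this page's article reference guide.
PROVIDER_OBLIGATIONS = {
    "risk_management_system": "Article 9",
    "data_governance": "Article 10",
    "technical_documentation": "Article 11",
    "record_keeping": "Article 12",
    "instructions_for_use": "Article 13",
    "human_oversight": "Article 14",
    "accuracy_robustness_cybersecurity": "Article 15",
    "quality_management_system": "Article 17",
    "conformity_assessment": "Article 43",
    "eu_database_registration": "Article 49",
}


def outstanding(completed: set) -> list:
    """List obligations not yet marked complete, in the order shown above."""
    unknown = completed - set(PROVIDER_OBLIGATIONS)
    if unknown:
        raise ValueError(f"Unknown obligation keys: {sorted(unknown)}")
    return [
        f"{article}: {key}"
        for key, article in PROVIDER_OBLIGATIONS.items()
        if key not in completed
    ]


done = {"risk_management_system", "technical_documentation", "record_keeping"}
for item in outstanding(done):
    print(item)
```

Rejecting unknown keys keeps a typo in the tracker from silently counting as a completed obligation.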

Deployers of high-risk AI systems have their own obligations under Article 26.

Article 26: Obligations of Deployers of High-Risk AI Systems

Penalties and Timeline

Fines for non-compliance: up to €15 million or 3% of global annual turnover, whichever is higher.

Implementation timeline:

- Annex III systems: Obligations apply after 24 months (August 2, 2026)

- Annex I systems: Obligations apply after 36 months (August 2, 2027)

Limited Risk AI Systems Under the EU AI Act

Limited risk AI systems are subject to lighter transparency obligations, primarily requiring providers and deployers to ensure that end-users are aware they are interacting with AI.

Examples of Limited Risk Systems

- Chatbots

- Deepfakes and other AI-generated content – must be labeled as such

- Emotion recognition systems or biometric categorization systems – users must be informed of their operation

Key Requirement

The primary obligation for limited risk systems is transparency: users must know when they’re interacting with AI rather than human-generated content or human operators.

Minimal Risk AI Systems Under the EU AI Act

Most AI systems fall into this category and are unregulated by the Act, presenting minimal or no risk to citizens’ rights or safety.

Examples of Minimal Risk Systems

- AI-enabled recommender systems

- Spam filters

Companies may voluntarily commit to additional codes of conduct for these AI systems.
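The four tiers described above are usually checked from strictest to lightest. The sketch below shows that ordering; the `RiskTier` enum and the three boolean inputs are hypothetical simplifications, and each input stands for a separate assessment that this code does not perform. It returns only the strictest tier, even though a system may carry obligations from more than one.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited (Article 5)"
    HIGH = "high-risk (Article 6)"
    LIMITED = "limited risk (Article 50)"
    MINIMAL = "minimal risk"


def triage(*, prohibited_practice: bool, high_risk: bool, needs_transparency: bool) -> RiskTier:
    """Return the strictest applicable tier, checking from most to least restrictive."""
    if prohibited_practice:
        return RiskTier.PROHIBITED
    if high_risk:
        return RiskTier.HIGH
    if needs_transparency:  # e.g. chatbots, deepfakes, AI-generated content
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


chatbot = triage(prohibited_practice=False, high_risk=False, needs_transparency=True)
spam_filter = triage(prohibited_practice=False, high_risk=False, needs_transparency=False)
print(chatbot.value)      # limited risk (Article 50)
print(spam_filter.value)  # minimal risk
```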

General-Purpose AI (GPAI) Models Under the EU AI Act

The AI Act introduces dedicated rules for GPAI models, which are capable of performing a wide range of distinct tasks and are typically trained on large amounts of data.

These rules are set out in Articles 51–56.

Article 54: Authorized Representatives of Providers of General-Purpose AI Models

Requirements for All GPAI Model Providers

- Provide technical documentation (including training and testing processes and evaluation results)

- Provide information and documentation for downstream providers to help them understand capabilities and limitations

- Establish a policy to comply with Union copyright law

- Publish a sufficiently detailed summary about the content used for training

Free and Open-Source GPAI Models: Subject to lighter regulation. They only need to comply with copyright and publish the training data summary, unless they present a systemic risk.

GPAI Models with Systemic Risk

These models face additional binding obligations due to their high-impact capabilities (cumulative training compute exceeding 10^25 FLOPs or determined by Commission decision based on Annex XIII criteria).

Additional requirements:

- Perform model evaluation, including conducting and documenting adversarial testing to identify and mitigate systemic risks

- Assess and mitigate possible systemic risks at the Union level

- Track, document, and report serious incidents and possible corrective measures to the AI Office and national competent authorities without undue delay

- Ensure adequate cybersecurity protection for the model and its physical infrastructure
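The 10^25 FLOPs compute threshold mentioned above can be checked with a back-of-the-envelope estimate. The sketch below uses the common rule of thumb of roughly 6 floating-point operations per parameter per training token. That heuristic is an assumption that does not come from the Act, and the model sizes are hypothetical. A Commission designation under the Annex XIII criteria can apply regardless of the compute figure.

```python
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25  # cumulative training compute named in the text above


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate: about 6 FLOPs per parameter per token (heuristic, not from the Act)."""
    return 6.0 * n_parameters * n_training_tokens


def exceeds_compute_threshold(training_flops: float) -> bool:
    """True when cumulative training compute exceeds the 10^25 FLOPs threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical models: 70 billion and 400 billion parameters, 15 trillion tokens each.
mid_size = estimate_training_flops(70e9, 15e12)    # about 6.3e24
frontier = estimate_training_flops(400e9, 15e12)   # about 3.6e25

print(exceeds_compute_threshold(mid_size))   # False
print(exceeds_compute_threshold(frontier))   # True
```

Cumulative compute covers more than a single training run, so an estimate near the threshold calls for a proper accounting rather than this shortcut.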

Timeline: Obligations for GPAI models apply after 12 months (August 2, 2025).
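The application dates given in the sections above can be kept in one small lookup so teams can see what already applies on a given day. This is a sketch using only the dates stated on this page; the function names are hypothetical, and the list leaves out other transition rules in the Act.

```python
from datetime import date

# Application dates as stated in the sections above.
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on Article 5 practices"),
    (date(2025, 8, 2), "GPAI model obligations"),
    (date(2026, 8, 2), "High-risk obligations for Annex III systems"),
    (date(2027, 8, 2), "High-risk obligations for Annex I systems"),
]


def obligations_in_effect(on: date) -> list:
    """Return the milestone labels that already apply on the given date."""
    return [label for start, label in MILESTONES if on >= start]


def next_milestone(on: date):
    """Return the (date, label) of the next upcoming milestone, or None if all have passed."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    return min(upcoming) if upcoming else None


check_date = date(2026, 1, 1)
print(obligations_in_effect(check_date))
print(next_milestone(check_date))
```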

WHAT THE EU AI ACT MEANS FOR YOU

The sections below describe, role by role, how the EU AI Act can shape responsibilities.

Core Concerns Under the EU AI Act: As the strategic lead for AI implementation, you're accountable for ensuring your organization's entire AI portfolio complies with the Act's risk-based framework. Your primary concerns include establishing governance structures that align AI development with organizational risk tolerance and ethical principles. You must oversee compliance across all risk categories, from prohibited systems to GPAI models with systemic risk.

Key responsibilities include implementing continuous risk management systems for high-risk AI, ensuring technical documentation and conformity assessments are completed, and maintaining the central EU database registrations. You're also responsible for AI education strategies and preventing shadow AI usage. For GPAI models, you must ensure compliance with copyright law, training data transparency, and additional obligations for systemic risk models (including adversarial testing and cybersecurity measures). Your role requires balancing innovation with regulatory compliance while fostering an appropriate organizational culture around responsible AI use.

Get guidance via our article: Operationalizing our 8-stage AI Governance Framework

Core Concerns Under the EU AI Act: Your role centers on ensuring AI systems meet their intended purpose while complying with the Act's requirements. You must validate that AI use cases align with business objectives without crossing into prohibited categories. For high-risk systems, you're accountable for approval decisions, ensuring models meet performance and fairness criteria before deployment. Transparency is crucial—you must ensure users know when they're interacting with AI (chatbots, deepfakes, emotion recognition systems). You participate in decision tollgates throughout the AI lifecycle, documenting system purposes, uses, and risks.

Key concerns include defining clear technical specifications, ensuring systems don't manipulate behavior or discriminate, and verifying compliance with sector-specific regulations. For systems using GPAI models, you must understand their capabilities and limitations through provider documentation. Your decisions directly impact whether AI systems require conformity assessments, database registration, or specific transparency measures based on their risk classification.

Core Concerns Under the EU AI Act: You're at the technical forefront of ensuring AI systems meet the Act's stringent requirements for accuracy, robustness, and cybersecurity. For high-risk systems, you must implement comprehensive data governance for training, validation, and testing datasets, ensuring data quality and representativeness while preventing errors. Your responsibilities include identifying and mitigating biases, implementing secure coding practices, and maintaining detailed technical documentation. For GPAI models with systemic risk, you must conduct adversarial testing and model evaluations.

Key concerns include version control, dependency tracking, and automated integrity checks. You're responsible for implementing human oversight capabilities and ensuring systems achieve appropriate performance levels. The Act requires you to document the entire development process, including hyperparameter tuning and model training procedures. You must also verify user instructions through testing and apply explainable AI techniques where needed, particularly for high-risk applications in employment, law enforcement, or essential services.

Core Concerns Under the EU AI Act: Your role is fundamental in navigating the Act's complex legal landscape and ensuring organizational compliance across all AI systems. You must advise on risk classifications, determining whether systems fall into prohibited, high-risk, limited-risk, or minimal-risk categories. Key concerns include protecting fundamental rights, ensuring GDPR alignment, and managing contractual obligations with AI vendors. For high-risk systems, you oversee conformity assessments, CE marking requirements, and database registration obligations. You must ensure GPAI models comply with Union copyright law and training data transparency requirements.

Critical responsibilities include developing AI compliance frameworks, managing liability issues, and ensuring proper documentation for potential audits. You handle serious incident reporting obligations and coordinate with national authorities. For international deployments, you navigate cross-border compliance. Your expertise is vital in interpreting the Act's requirements, especially regarding biometric systems, profiling applications, and sector-specific regulations that trigger additional obligations.

Core Concerns Under the EU AI Act: You're responsible for implementing AI-specific risk management frameworks that align with the Act's risk-based approach. For high-risk systems, you must establish continuous risk management processes throughout the AI lifecycle, systematically identifying, analyzing, and mitigating risks. Your role includes conducting regular audits to verify policy compliance and adherence to the Act's requirements.

Key concerns include assessing whether AI systems pose risks to health, safety, or fundamental rights, and ensuring appropriate mitigation measures. You develop audit frameworks to evaluate AI usage, monitor compliance with internal policies, and assess vendor risks. For GPAI models with systemic risk, you oversee the assessment and mitigation of Union-level risks. You're responsible for establishing mechanisms to track serious incidents and ensure proper reporting. Your work includes evaluating the effectiveness of human oversight measures, accuracy benchmarks, and cybersecurity protections, while ensuring trustworthy AI characteristics are integrated into organizational policies.

Core Concerns Under the EU AI Act: Your role is critical in managing supply chain risks and ensuring third-party AI products meet the Act's requirements. You must conduct thorough due diligence on vendors, verifying their compliance with relevant risk categories and obligations. For high-risk AI systems, you ensure contracts specify necessary information, capabilities, and technical access to enable compliance.

Key concerns include establishing vendor risk management programs covering security, ethical, and compliance factors. You must ensure external GPAI models provide required technical documentation, copyright compliance policies, and training data summaries. Critical responsibilities include developing standardized data usage agreements and ensuring content provenance standards. You verify that vendors of high-risk systems have completed conformity assessments and maintain proper CE marking. For AI components integrated into your products, you must ensure the supply chain maintains compliance throughout. Your work directly impacts whether your organization can demonstrate compliance when using third-party AI technologies.

Core Concerns Under the EU AI Act: You're responsible for the technical infrastructure ensuring AI systems maintain compliance throughout their operational lifecycle. For high-risk systems, you must implement automatic recording of events (logs) and ensure traceability of system functioning. Your role includes hardening MLOps pipelines, implementing automated integrity checks, and integrating security measures into CI/CD processes.

Key concerns include maintaining model accuracy, robustness, and cybersecurity protection as required by the Act. You establish continuous monitoring mechanisms to detect performance degradation or emerging risks. For GPAI models with systemic risk, you ensure adequate cybersecurity for both the model and physical infrastructure. Critical responsibilities include managing change control processes, maintaining Software Bill of Materials (SBOMs), and implementing secure deployment practices. You handle the technical aspects of serious incident detection and reporting. Your work ensures AI systems remain within their approved operational parameters and maintain required performance levels post-deployment.
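The event logging and traceability duties described above can be prototyped with structured log records and a retention guard. This is a minimal sketch: the record fields, function names, and the 183-day approximation of the six-month minimum log retention (Article 19, as listed in the article reference guide on this page) are assumptions for illustration, and other laws or contracts may require longer retention.

```python
import json
from datetime import datetime, timedelta, timezone

# Assumption: the six-month minimum is approximated as 183 days.
MIN_RETENTION = timedelta(days=183)


def make_log_record(system_id: str, event: str, model_version: str,
                    details: dict, now: datetime = None) -> str:
    """Serialize one traceable event as a JSON line with a UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    return json.dumps(
        {
            "timestamp": now.isoformat(),
            "system_id": system_id,
            "model_version": model_version,
            "event": event,
            "details": details,
        },
        sort_keys=True,
    )


def may_delete(record_json: str, now: datetime) -> bool:
    """True only once the record is older than the minimum retention period."""
    recorded_at = datetime.fromisoformat(json.loads(record_json)["timestamp"])
    return now - recorded_at >= MIN_RETENTION


created = datetime(2026, 1, 1, tzinfo=timezone.utc)
record = make_log_record("resume-screener", "inference", "1.4.2",
                         {"input_ref": "req-001", "human_review": True}, now=created)

print(may_delete(record, created + timedelta(days=30)))   # False
print(may_delete(record, created + timedelta(days=200)))  # True
```

Storing a reference to the input rather than the input itself keeps personal data out of the log stream, which matters when the same records fall under GDPR.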

Core Concerns Under the EU AI Act: Your role bridges GDPR and AI Act compliance, ensuring data protection throughout AI system lifecycles. You oversee how personal data is collected, processed, and stored for AI training and operations, ensuring compliance with both regulations. Key concerns include conducting Data Protection Impact Assessments (DPIAs) that complement the Act's Fundamental Rights Impact Assessments. For high-risk AI systems, you ensure data governance meets Article 10 requirements for quality, representativeness, and bias prevention. You must verify that biometric and categorization systems comply with both GDPR and the Act's specific restrictions.

Critical responsibilities include ensuring transparency in data use, implementing data minimization principles, and safeguarding individual rights. For GPAI models, you address copyright compliance and training data transparency from a privacy perspective. You manage consent requirements for AI processing and ensure appropriate legal bases. Your expertise is vital when AI systems process special categories of personal data or involve automated decision-making with legal effects.

EU AI ACT Essential Reference Tools

EU AI ACT Risk Assessment

Documentation & Tools Templates

QMS Requirements

Quality Management System (QMS) Requirements Under the EU AI Act

The EU AI Act mandates comprehensive Quality Management System requirements for providers of high-risk AI systems to ensure responsible development, deployment, and management that upholds safety, transparency, accountability, and fundamental rights protection.

Purpose and Framework

The QMS must ensure compliance with the EU AI Act and establish sound quality management practices to mitigate risks and ensure trustworthiness. Documentation must be systematic and orderly, presented as written policies, procedures, and instructions.

Integration Options

- Existing Products: Providers of AI systems already covered by Union harmonization legislation may integrate QMS elements into their existing quality systems, provided equivalent protection levels are achieved

- Public Authorities: May implement QMS requirements within national or regional quality management systems

- Microenterprises: Permitted to comply with certain QMS elements in a simplified manner

Core QMS Requirements (Article 17: Quality Management System)

1. Strategy for Regulatory Compliance

- Compliance with conformity assessment procedures

- Processes for managing modifications to high-risk AI systems

- Documentation of compliance verification methods

2. Management and Organization

- Management Responsibilities: Clearly defined allocation of QMS management roles

- Staff Competence: Measures ensuring personnel have necessary competence and training

- Management Review: Periodic review procedures to ensure QMS suitability, adequacy, and effectiveness

3. Technical Standards and Specifications

- Documentation of applied technical standards

- When harmonized standards aren’t fully applicable or don’t cover all requirements, documentation of alternative compliance methods

4. Comprehensive Data Management

All data operations performed before market placement or service deployment must be documented, including:

- Data Acquisition – sourcing and obtaining data

- Data Collection – gathering and compiling datasets

- Data Analysis – examination and evaluation procedures

- Data Labeling – annotation and categorization processes

- Data Storage – secure retention methods

- Data Filtration – cleaning and preprocessing

- Data Mining – extraction of patterns and insights

- Data Aggregation – combination and synthesis

- Data Retention – preservation policies and timelines

Special emphasis is placed on ensuring that training, validation, and testing datasets are relevant, representative, and, to the best extent possible, free of errors and complete for their intended purpose.
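A simple way to track the data management documentation described above is to record, per dataset, where each operation is documented and then flag the gaps. The operation keys, the `undocumented_operations` helper, and the document paths below are hypothetical.

```python
# Hypothetical keys for the nine data operations listed above.
DATA_OPERATIONS = (
    "acquisition",
    "collection",
    "analysis",
    "labeling",
    "storage",
    "filtration",
    "mining",
    "aggregation",
    "retention",
)


def undocumented_operations(documented: dict) -> list:
    """Return the operations that have no documentation reference, or only a blank one."""
    return [op for op in DATA_OPERATIONS if not documented.get(op, "").strip()]


# Hypothetical documentation index for one training dataset.
training_set_docs = {
    "acquisition": "docs/data/sourcing.md",
    "collection": "docs/data/collection.md",
    "labeling": "docs/data/annotation-guide.md",
    "storage": "",  # placeholder entry that has not been filled in yet
}

print(undocumented_operations(training_set_docs))
# ['analysis', 'storage', 'filtration', 'mining', 'aggregation', 'retention']
```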

5. Documentation Requirements

- Comprehensive QMS documentation

- Technical documentation for each high-risk AI system

- Procedures ensuring QMS remains adequate and effective

- Name and address of provider

- List of AI systems covered by the QMS

6. Post-Market Monitoring

- Documented monitoring plan for deployed systems

- Collection and review of usage experience

- Identification of needs for immediate corrective or preventive actions

- Continuous improvement mechanisms

7. Authority Communication

- Established procedures for interacting with competent authorities

- Reporting mechanisms for serious incidents

- Documentation accessibility for regulatory reviews

Conformity Assessment and QMS

During conformity assessment procedures:

- Providers must verify QMS compliance with Article 17 requirements

- Design, development, and testing systems undergo examination and ongoing surveillance

- Applications for notified body assessment must include comprehensive QMS documentation

Notified Body Requirements

Notified bodies conducting QMS assessments must satisfy:

- Organizational requirements

- Quality management standards

- Resource adequacy

- Process requirements

- Cybersecurity measures

Alignment with International Standards

The EU AI Act’s QMS requirements align with broader AI governance frameworks such as ISO/IEC 42001, emphasizing:

- Comprehensive risk management processes

- AI impact assessments

- Full lifecycle management

- Continuous improvement culture

Implementation Timeline

QMS requirements apply when the obligations for high-risk AI systems take effect:

- August 2, 2026: For most high-risk AI systems (Annex III)

- August 2, 2027: For high-risk AI systems as safety components (Annex I)

Providers should begin QMS implementation well before these deadlines to ensure compliance readiness and allow time for refinement based on operational experience.

EU AI Act – Article Reference Guide

Based on Regulation (EU) 2024/1689 of 13 June 2024

Chapter I: General Provisions

- [Article 1: Subject Matter]: Defines the purpose of the Regulation to improve internal market functioning, promote human-centric and trustworthy AI, ensure high protection of health, safety, and fundamental rights against harmful AI effects, and support innovation.

- [Article 2: Scope]: Specifies application to providers, deployers, importers, and distributors of AI systems and GPAI models within or outside the Union if output is used in the Union; excludes AI for military, defense, or national security objectives.

- [Article 3: Definitions]: Provides definitions for key terms used throughout the Regulation, such as ‘AI system’, ‘provider’, ‘deployer’, ‘high-risk’, and ‘general-purpose AI model’.

- [Article 4: AI Literacy]: Mandates that providers and deployers ensure sufficient AI literacy among their staff and persons dealing with AI system operation and use on their behalf.

Chapter II: Prohibited AI Practices

- [Article 5: Prohibited AI Practices]: Lists strictly prohibited AI practices including systems that manipulate human behavior, exploit vulnerabilities, or use social scoring; prohibitions apply from February 2, 2025.

Chapter III: High-Risk AI Systems

Section 1: Classification

- [Article 6: Classification Rules for High-Risk AI Systems]: Establishes conditions for high-risk classification including AI as safety components of products under EU harmonization legislation (Annex I) or systems used for purposes in Annex III; Commission can amend these lists.

- [Article 7: Amendments to Annex III]: Grants Commission power to amend Annex III by adding or removing high-risk use cases based on criteria like risk of harm to health, safety, or fundamental rights.

Section 2: Requirements

- [Article 8: Compliance with the Requirements]: States that high-risk AI systems must comply with requirements set out in this section.

- [Article 9: Risk Management System]: Requires providers to establish, implement, document, and maintain continuous risk management system throughout AI system lifecycle to identify, assess, and mitigate risks.

- [Article 10: Data and Data Governance]: Mandates training, validation, and testing datasets meet quality criteria emphasizing relevance, representativeness, error-freeness, and completeness to mitigate biases.

- [Article 11: Technical Documentation]: Requires comprehensive technical documentation before market placement detailing design, development, data, and risk management; SMEs can provide simplified documentation.

- [Article 12: Record-Keeping]: Requires automatic recording of events (logs) throughout system lifetime for traceability and post-market monitoring.

- [Article 13: Transparency and Provision of Information to Deployers]: High-risk systems must include clear, comprehensive instructions enabling deployers to understand operation, functionality, strengths, and limitations.

- [Article 14: Human Oversight]: Requires design and development allowing effective human oversight during use.

- [Article 15: Accuracy, Robustness and Cybersecurity]: Stipulates appropriate levels of accuracy, robustness, and cybersecurity based on intended purpose and context.

Section 3: Obligations

- [Article 16: Obligations of Providers of High-Risk AI Systems]: Outlines general provider obligations including ensuring compliance, quality management system, documentation, conformity assessment, EU declaration, and corrective actions.

- [Article 17: Quality Management System]: Requires providers to implement documented quality management system ensuring regulatory compliance.

- [Article 18: Documentation Keeping]: Providers must keep technical documentation, QMS documentation, and records available to authorities for 10 years.

- [Article 19: Automatically Generated Logs]: Providers must keep logs from high-risk AI systems for at least six months when under their control.

- [Article 20: Corrective Actions and Duty of Information]: Addresses corrective actions and duty to inform authorities if AI system not in conformity.

- [Article 21: Cooperation with Competent Authorities]: Obliges providers to cooperate by providing necessary information and documentation upon request.

- [Article 22: Authorised Representatives of Providers]: Non-EU providers must appoint EU authorized representative for compliance matters.

- [Article 23: Obligations of Importers]: Importers must verify CE marking, documentation, and provider compliance before making systems available.

- [Article 24: Obligations of Distributors]: Distributors must verify CE marking, documentation, and provider/importer compliance.

- [Article 25: Responsibilities Along the AI Value Chain]: Clarifies when distributors, importers, deployers, or third parties become providers with provider obligations.

- [Article 26: Obligations of Deployers of High-Risk AI Systems]: Deployers must use systems per instructions, monitor operation, inform affected persons, and comply with registration requirements.

- [Article 27: Fundamental Rights Impact Assessment]: Public authorities and certain private entities must perform FRIA before using high-risk AI systems, identifying and mitigating fundamental rights risks.

Section 4: Notifying Authorities and Notified Bodies

- [Articles 28-39]: Establish requirements for notifying authorities, conformity assessment bodies, notification procedures, operational obligations, coordination, and third-country recognition.

Section 5: Standards, Conformity Assessment, Certificates, Registration

- [Article 40: Harmonised Standards and Standardisation Deliverables]: Compliance with harmonized standards published in Official Journal presumes conformity with requirements.

- [Article 41: Common Specifications]: Allows common specifications to presume conformity where harmonized standards unavailable.

- [Article 42: Presumption of Conformity with Certain Requirements]: Establishes specific presumptions of conformity for systems trained on representative data or certified under cybersecurity schemes.

- [Article 43: Conformity Assessment]: High-risk systems require conformity assessment before market placement via internal control or notified body assessment.

- [Article 44: Certificates]: Details validity periods and renewal processes for notified body certificates.

- [Article 45: Information Obligations of Notified Bodies]: Notified bodies must inform authorities about issued, refused, or withdrawn certificates.

- [Article 46: Derogation from Conformity Assessment Procedure]: Outlines conditions for conformity assessment derogations.

- [Article 47: EU Declaration of Conformity]: Providers must draw up written declaration for each high-risk system declaring conformity with AI Act and applicable EU legislation.

- [Article 48: CE Marking]: Providers must affix CE marking to systems, packaging, or documentation indicating conformity.

- [Article 49: Registration]: Providers/representatives must register themselves and high-risk systems in EU database; public authorities acting as deployers also have registration obligations.

Chapter IV: Transparency Obligations

- [Article 50: Transparency Obligations for Certain AI Systems]: Requires informing users they’re interacting with AI unless obvious; mandates labeling of deepfakes and AI-generated content.

Chapter V: General-Purpose AI Models

Section 1: Classification Rules

- [Article 51: Classification of GPAI Models with Systemic Risk]: Defines criteria for classifying GPAI models as having systemic risk based on high-impact capabilities or compute exceeding 10^25 FLOPs; providers must notify AI Office if criteria met.

- [Article 52: Procedure]: Outlines procedure for classifying GPAI models with systemic risk and maintaining list of such models.

Section 2: Obligations for Providers of General-Purpose AI Models

- [Article 53: Obligations for Providers of GPAI Models]: Requires technical documentation, information for downstream providers, copyright compliance policy, and training content summary; exemptions for non-systemic risk open-source models.

- [Article 54: Authorised Representatives of GPAI Providers]: Non-EU GPAI providers must appoint Union authorized representative for compliance tasks.

Section 3: Obligations for GPAI Models with Systemic Risk

- [Article 55: Obligations for GPAI Models with Systemic Risk]: Additional obligations including model evaluation, adversarial testing, risk assessment/mitigation, incident reporting, and cybersecurity protection.

Section 4: Codes of Practice

- [Article 56: Codes of Practice]: AI Office encourages Union-level codes covering GPAI obligations including risk management; codes should be ready by May 2, 2025.

Chapter VI: Measures in Support of Innovation

- [Article 57: AI Regulatory Sandboxes]: Member States must establish at least one sandbox by August 2, 2026 to facilitate AI development and testing under regulatory oversight.

- [Article 58: Detailed Arrangements for AI Regulatory Sandboxes]: Common principles for sandbox establishment and operation to avoid Union fragmentation.

- [Article 59: Testing in Real World Conditions]: Allows real-world testing under specific safeguards.

- [Article 60: Further Provisions for Testing in Real World Conditions]: Additional rules including required plan and EU database registration.

- [Article 61: Informed Consent for Testing]: Requires documented informed consent from participants in real-world testing.

- [Article 62: Measures for SMEs and Start-ups]: Member States should provide priority sandbox access and information platforms for SMEs.

- [Article 63: Derogations for Specific Operators]: Allows microenterprises simplified compliance with quality management system elements.

Chapter VII: Governance

Section 1: Governance at Union Level

- [Article 64: AI Office]: Establishes European AI Office within Commission to develop Union AI expertise and implement Regulation.

- [Article 65: European Artificial Intelligence Board]: Establishes Board of Member State representatives for uniform application and coordination.

- [Article 66: Tasks of the Board]: Board provides opinions, recommendations, and implementation guidance.

- [Article 67: Advisory Forum]: Establishes stakeholder forum for input.

- [Article 68: Scientific Panel of Independent Experts]: Panel provides alerts and advice on GPAI systemic risks.

- [Article 69: Access to Pool of Experts]: Facilitates Member State access to expert pool.

Section 2: National Competent Authorities

- [Article 70: Designation of National Competent Authorities]: Each Member State designates notifying and market surveillance authorities for supervising Regulation implementation.

Chapter VIII: EU Database

- [Article 71: EU Database for High-Risk AI Systems]: Establishes central public database for registered high-risk systems with exceptions for sensitive data.

Chapter IX: Post-Market Monitoring, Information Sharing and Market Surveillance

Section 1: Post-Market Monitoring

- [Article 72: Post-Market Monitoring]: Providers must establish monitoring system and plan to collect usage experience and identify corrective action needs.

Section 2: Sharing of Information

- [Article 73: Reporting of Serious Incidents]: Providers of high-risk systems must report serious incidents to the market surveillance authorities of the Member States where the incident occurred, without undue delay.

Section 3: Enforcement

- [Articles 74-84]: Cover market surveillance, mutual assistance, supervision of testing, authority powers, confidentiality, procedures for risk evaluation, safeguards, compliance issues, and testing support structures.

Section 4: Remedies

- [Article 85: Right to Lodge a Complaint]: Confirms right to lodge complaints with market surveillance authority.

- [Article 86: Right to Explanation of Individual Decision-Making]: Grants affected persons right to clear explanation of high-risk AI decisions with legal or significant effects.

- [Article 87: Reporting of Infringements]: Applies whistleblower protection directive to AI Act infringement reporting.

Section 5: Supervision of General-Purpose AI Models

- [Articles 88-94]: Commission/AI Office has exclusive GPAI enforcement powers including monitoring, documentation requests, evaluations, and procedural rights.

Chapter X: Codes of Conduct and Guidelines

- [Article 95: Codes of Conduct]: Encourages voluntary application of high-risk requirements to other AI systems.

- [Article 96: Guidelines from the Commission]: Commission issues implementation guidelines with attention to SMEs and local authorities.

Chapter XI: Delegation of Power and Committee Procedure

- [Articles 97-98]: Specify conditions for delegated acts and committee procedures.

Chapter XII: Penalties

- [Article 99: Penalties]: Member States set effective, proportionate penalties; fines up to €35 million or 7% turnover for prohibited practices violations.

- [Article 100: Administrative Fines on Union Institutions]: Fines for non-compliant EU bodies.

- [Article 101: Fines for GPAI Providers]: Commission can fine GPAI providers up to 3% turnover or €15 million.

Chapter XIII: Final Provisions

- [Articles 102-110]: Amend existing EU regulations/directives for AI Act consistency.

- [Article 111: AI Systems Already Placed on Market]: Transitional provisions with different compliance deadlines based on system nature.

- [Article 112: Evaluation and Review]: Commission regularly evaluates need for Act amendments.

- [Article 113: Entry into Force]: Regulation entered into force August 1, 2024, with phased application; most provisions apply from August 2, 2026.

EU AI Act Glossary: Complete Terms and Definitions

This comprehensive glossary defines essential terms from the EU Artificial Intelligence Act (Regulation 2024/1689). Understanding these definitions is crucial for compliance with the AI Act’s requirements for providers, deployers, and other stakeholders.

Last updated: [Date] | Version: Official EU AI Act terminology

A

AI Literacy: Skills, knowledge and understanding that allow providers, deployers and affected persons, taking into account their respective rights and obligations in the context of this Regulation, to make an informed deployment of AI systems, as well as to gain awareness about the opportunities and risks of AI and possible harm it can cause.

AI Office: The Commission’s function of contributing to the implementation, monitoring and supervision of AI systems and general-purpose AI models, and AI governance, provided for in Commission Decision of 24 January 2024; references in this Regulation to the AI Office shall be construed as references to the Commission.

AI Regulatory Sandbox: A controlled framework set up by a competent authority which offers providers or prospective providers of AI systems the possibility to develop, train, validate and test, where appropriate in real-world conditions, an innovative AI system, pursuant to a sandbox plan for a limited time under regulatory supervision.

AI System: A machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Related: Recital 12

Authorised Representative: A natural or legal person located or established in the Union who has received and accepted a written mandate from a provider of an AI system or a general-purpose AI model to, respectively, perform and carry out on its behalf the obligations and procedures established by this Regulation.

B

Biometric Categorisation System: An AI system for the purpose of assigning natural persons to specific categories on the basis of their biometric data, unless it is ancillary to another commercial service and strictly necessary for objective technical reasons. Related: Recital 16

Biometric Data: Personal data resulting from specific technical processing relating to the physical, physiological or behavioral characteristics of a natural person, such as facial images or dactyloscopic data. Related: Recital 14

Biometric Identification: The automated recognition of physical, physiological, behavioral, or psychological human features for the purpose of establishing the identity of a natural person by comparing biometric data of that individual to biometric data of individuals stored in a database. Related: Recital 15

Biometric Verification: The automated, one-to-one verification, including authentication, of the identity of natural persons by comparing their biometric data to previously provided biometric data. Related: Recital 15

C

CE Marking: A marking by which a provider indicates that an AI system is in conformity with the requirements set out in Chapter III, Section 2 and other applicable Union harmonisation legislation providing for its affixing.

Common Specification: A set of technical specifications as defined in Article 2, point (4) of Regulation (EU) No 1025/2012, providing means to comply with certain requirements established under this Regulation.

Conformity Assessment: The process of demonstrating whether the requirements set out in Chapter III, Section 2 relating to a high-risk AI system have been fulfilled.

Conformity Assessment Body: A body that performs third-party conformity assessment activities, including testing, certification and inspection.

Critical Infrastructure: Critical infrastructure as defined in Article 2, point (4), of Directive (EU) 2022/2557.

D

Deep Fake: AI-generated or manipulated image, audio or video content that resembles existing persons, objects, places, entities or events and would falsely appear to a person to be authentic or truthful.

Deployer: A natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity. Related: Recital 13

Distributor: A natural or legal person in the supply chain, other than the provider or the importer, that makes an AI system available on the Union market.

Downstream Provider: A provider of an AI system, including a general-purpose AI system, which integrates an AI model, regardless of whether the AI model is provided by themselves and vertically integrated or provided by another entity based on contractual relations.

E

Emotion Recognition System: An AI system for the purpose of identifying or inferring emotions or intentions of natural persons on the basis of their biometric data. Related: Recital 18

F

Floating-Point Operation: Any mathematical operation or assignment involving floating-point numbers, which are a subset of the real numbers typically represented on computers by an integer of fixed precision scaled by an integer exponent of a fixed base. Related: Recital 110

G

General-Purpose AI Model: An AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market. Related: Recitals 97, 98, and 99

General-Purpose AI System: An AI system which is based on a general-purpose AI model and which has the capability to serve a variety of purposes, both for direct use as well as for integration in other AI systems. Related: Recital 100

H

Harmonized Standard: A harmonized standard as defined in Article 2(1), point (c), of Regulation (EU) No 1025/2012.

High-Impact Capabilities: Capabilities that match or exceed the capabilities recorded in the most advanced general-purpose AI models. Related: Recital 110

I

Importer: A natural or legal person located or established in the Union that places on the market an AI system that bears the name or trademark of a natural or legal person established in a third country.

Informed Consent: A subject’s freely given, specific, unambiguous and voluntary expression of his or her willingness to participate in a particular testing in real-world conditions, after having been informed of all aspects of the testing that are relevant to the subject’s decision to participate.

Input Data: Data provided to or directly acquired by an AI system on the basis of which the system produces an output.

Instructions for Use: The information provided by the provider to inform the deployer of, in particular, an AI system’s intended purpose and proper use.

Intended Purpose: The use for which an AI system is intended by the provider, including the specific context and conditions of use, as specified in the information supplied by the provider in the instructions for use, promotional or sales materials and statements, as well as in the technical documentation.

L

Law Enforcement: Activities carried out by law enforcement authorities or on their behalf for the prevention, investigation, detection or prosecution of criminal offences or the execution of criminal penalties, including safeguarding against and preventing threats to public security.

Law Enforcement Authority: Either of the following:

- (a) any public authority competent for the prevention, investigation, detection or prosecution of criminal offences or the execution of criminal penalties, including the safeguarding against and the prevention of threats to public security; or

- (b) any other body or entity entrusted by Member State law to exercise public authority and public powers for the purposes of the prevention, investigation, detection or prosecution of criminal offences or the execution of criminal penalties, including the safeguarding against and the prevention of threats to public security.

M

Making Available on the Market: The supply of an AI system or a general-purpose AI model for distribution or use on the Union market in the course of a commercial activity, whether in return for payment or free of charge.

Market Surveillance Authority: The national authority carrying out the activities and taking the measures pursuant to Regulation (EU) 2019/1020.

N

National Competent Authority: A notifying authority or a market surveillance authority; as regards AI systems put into service or used by Union institutions, agencies, offices and bodies, references to national competent authorities or market surveillance authorities in this Regulation shall be construed as references to the European Data Protection Supervisor.

Non-Personal Data: Data other than personal data as defined in Article 4, point (1), of Regulation (EU) 2016/679.

Notified Body: A conformity assessment body notified in accordance with this Regulation and other relevant Union harmonization legislation.

Notifying Authority: The national authority responsible for setting up and carrying out the necessary procedures for the assessment, designation and notification of conformity assessment bodies and for their monitoring.

O

Operator: A provider, product manufacturer, deployer, authorised representative, importer or distributor.

P

Performance of an AI System: The ability of an AI system to achieve its intended purpose.

Personal Data: Personal data as defined in Article 4, point (1), of Regulation (EU) 2016/679.

Placing on the Market: The first making available of an AI system or a general-purpose AI model on the Union market.

Post Remote Biometric Identification System: A remote biometric identification system other than a real-time remote biometric identification system. Related: Recital 17

Post-Market Monitoring System: All activities carried out by providers of AI systems to collect and review experience gained from the use of AI systems they place on the market or put into service for the purpose of identifying any need to immediately apply any necessary corrective or preventive actions.

Profiling: Profiling as defined in Article 4, point (4), of Regulation (EU) 2016/679.

Provider: A natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge.

Publicly Accessible Space: Any publicly or privately owned physical place accessible to an undetermined number of natural persons, regardless of whether certain conditions for access may apply, and regardless of the potential capacity restrictions. Related: Recital 19

Putting into Service: The supply of an AI system for first use directly to the deployer or for own use in the Union for its intended purpose.

R

Real-Time Remote Biometric Identification System: A remote biometric identification system, whereby the capturing of biometric data, the comparison and the identification all occur without a significant delay, comprising not only instant identification, but also limited short delays in order to avoid circumvention. Related: Recital 17

Real-World Testing Plan: A document that describes the objectives, methodology, geographical, population and temporal scope, monitoring, organisation and conduct of testing in real-world conditions.

Reasonably Foreseeable Misuse: The use of an AI system in a way that is not in accordance with its intended purpose, but which may result from reasonably foreseeable human behaviour or interaction with other systems, including other AI systems.

Recall of an AI System: Any measure aiming to achieve the return to the provider or taking out of service or disabling the use of an AI system made available to deployers.

Remote Biometric Identification System: An AI system for the purpose of identifying natural persons, without their active involvement, typically at a distance through the comparison of a person’s biometric data with the biometric data contained in a reference database. Related: Recital 17

Risk: The combination of the probability of an occurrence of harm and the severity of that harm.

S

Safety Component: A component of a product or of an AI system which fulfils a safety function for that product or AI system, or the failure or malfunctioning of which endangers the health and safety of persons or property.

Sandbox Plan: A document agreed between the participating provider and the competent authority describing the objectives, conditions, timeframe, methodology and requirements for the activities carried out within the sandbox.

Sensitive Operational Data: Operational data related to activities of prevention, detection, investigation or prosecution of criminal offences, the disclosure of which could jeopardise the integrity of criminal proceedings.

Serious Incident: An incident or malfunctioning of an AI system that directly or indirectly leads to any of the following:

- (a) the death of a person, or serious harm to a person’s health;

- (b) a serious and irreversible disruption of the management or operation of critical infrastructure;

- (c) the infringement of obligations under Union law intended to protect fundamental rights;

- (d) serious harm to property or the environment.

Special Categories of Personal Data: The categories of personal data referred to in Article 9(1) of Regulation (EU) 2016/679, Article 10 of Directive (EU) 2016/680 and Article 10(1) of Regulation (EU) 2018/1725.

Subject: For the purpose of real-world testing, means a natural person who participates in testing in real-world conditions.

Substantial Modification: A change to an AI system after its placing on the market or putting into service which is not foreseen or planned in the initial conformity assessment carried out by the provider and as a result of which the compliance of the AI system with the requirements set out in Chapter III, Section 2 is affected or results in a modification to the intended purpose for which the AI system has been assessed. Related: Recital 128

Systemic Risk: A risk that is specific to the high-impact capabilities of general-purpose AI models, having a significant impact on the Union market due to their reach, or due to actual or reasonably foreseeable negative effects on public health, safety, public security, fundamental rights, or the society as a whole, that can be propagated at scale across the value chain. Related: Recital 110

T

Testing Data: Data used for providing an independent evaluation of the AI system in order to confirm the expected performance of that system before its placing on the market or putting into service.

Testing in Real-World Conditions: The temporary testing of an AI system for its intended purpose in real-world conditions outside a laboratory or otherwise simulated environment, with a view to gathering reliable and robust data and to assessing and verifying the conformity of the AI system with the requirements of this Regulation and it does not qualify as placing the AI system on the market or putting it into service within the meaning of this Regulation, provided that all the conditions laid down in Article 57 or 60 are fulfilled.

Training Data: Data used for training an AI system through fitting its learnable parameters.

V

Validation Data: Data used for providing an evaluation of the trained AI system and for tuning its non-learnable parameters and its learning process in order, inter alia, to prevent underfitting or overfitting.

Validation Data Set: A separate data set or part of the training data set, either as a fixed or variable split.

W

Widespread Infringement: Any act or omission contrary to Union law protecting the interest of individuals, which:

- (a) has harmed or is likely to harm the collective interests of individuals residing in at least two Member States other than the Member State in which:

  - (i) the act or omission originated or took place;

  - (ii) the provider concerned, or, where applicable, its authorised representative is located or established; or

  - (iii) the deployer is established, when the infringement is committed by the deployer;

- (b) has caused, causes or is likely to cause harm to the collective interests of individuals and has common features, including the same unlawful practice or the same interest being infringed, and is occurring concurrently, committed by the same operator, in at least three Member States.

Withdrawal of an AI System: Any measure aiming to prevent an AI system in the supply chain being made available on the market.

EU AI Act Fine Structure

Maximum Penalties by Violation Type

Prohibited AI (Most Serious)

Violation: Using banned AI systems (Article 5)

- Companies: €35 million OR 7% of global annual revenue (whichever is higher)

- EU Institutions: €1.5 million

Other Violations

Violations: Breaking rules for providers, importers, distributors, deployers, transparency

- Companies: €15 million OR 3% of global annual revenue (whichever is higher)

- EU Institutions: €750,000

False Information

Violation: Lying to authorities or providing misleading information

- Companies: €7.5 million OR 1% of global annual revenue (whichever is higher)

Special Categories

Small Businesses (SMEs)

- Pay the lower of percentage OR fixed amount

- Ensures fines don’t destroy small companies

- Applies to all violation types

General-Purpose AI Providers

- Maximum: €15 million OR 3% of global revenue

- Enforced by: European Commission directly

- Violations include:

  - Breaking GPAI rules

  - Refusing to provide information

  - Blocking model evaluation

  - Ignoring compliance orders

How Fines Are Calculated

Authorities consider these factors:

Severity Factors:

- Nature and gravity of violation

- How long it lasted

- Number of people affected

- Damage caused

- Intent (on purpose vs. accident)

Company Factors:

- Size and market share

- Annual revenue

- Previous violations

- Financial benefit gained

Cooperation Factors:

- Self-reporting the violation

- Helping with investigation

- Actions to fix the problem

- Measures to prevent repeat

Enforcement Process

- Detection

  - Market surveillance finds violation

  - Someone files complaint

  - Company self-reports

- Investigation

  - Authorities gather evidence

  - Company can respond

  - All circumstances reviewed

- Decision

  - Fine amount calculated

  - Company notified

  - Appeal rights explained

- Payment

  - Company fines → National budget

  - EU institution fines → EU budget

  - Annual reporting to Commission

Quick Reference Table

| Violation | Company Fine | EU Institution Fine |
|---|---|---|
| Banned AI | €35M or 7% | €1.5M |
| Other violations | €15M or 3% | €750K |
| False info | €7.5M or 1% | N/A |
| GPAI violations | €15M or 3% | N/A |

For companies: Always the HIGHER of the fixed amount or the percentage. For SMEs: the LOWER of the two.
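The "higher of" and "lower of" rules can be made concrete with a short calculation. The sketch below is illustrative only: the tier names, the `max_fine_cap` function, and the `is_sme` flag are hypothetical names introduced here, and the figures are the caps summarized in this guide. It computes the ceiling of a possible fine, not the fine an authority would actually impose, which depends on the factors listed under How Fines Are Calculated.

```python
# Caps as summarized in this guide: (fixed amount in EUR, percent of annual turnover).
# Tier names are hypothetical labels chosen for this sketch.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_violation": (15_000_000, 3),
    "false_information": (7_500_000, 1),
}


def max_fine_cap(tier: str, annual_turnover_eur: int, is_sme: bool = False) -> float:
    """Return the maximum possible fine (the cap) for a violation tier.

    Companies face the higher of the fixed amount and the turnover
    percentage; SMEs face the lower of the two.
    """
    fixed_amount, percent = FINE_TIERS[tier]
    turnover_based = annual_turnover_eur * percent / 100
    if is_sme:
        return min(fixed_amount, turnover_based)
    return max(fixed_amount, turnover_based)


# Large company, EUR 1 billion turnover: 7% exceeds the fixed EUR 35M.
print(max_fine_cap("prohibited_practice", 1_000_000_000))
# SME, EUR 10 million turnover: 7% is lower than the fixed EUR 35M.
print(max_fine_cap("prohibited_practice", 10_000_000, is_sme=True))
```

Storing the percentage as a whole number and dividing by 100 keeps the arithmetic exact for round turnover figures, which avoids small floating-point surprises when comparing against the fixed amounts.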

Key Points

- Double punishment avoided – Can’t be fined twice for same violation

- Appeal rights – All fines can be challenged in court

- Proportionality – Fines match the severity and company size

- Annual reporting – All fines tracked and reported to EU Commission

EU AI ACT Key Dates & Timeline

Before 2002:

- Prior to 2002/58/EC: Existing Union law protects private life and communications, providing conditions for data storage and access from terminal equipment (Recital 10).

2002:

- July 12, 2002: Directive 2002/58/EC concerning the processing of personal data and the protection of privacy in the electronic communications sector is adopted (Recital 10).

- June 13, 2002: Council Framework Decision 2002/584/JHA on the European arrest warrant and surrender procedures between Member States is adopted, serving as a basis for criminal offences listed in Annex II of the AI Act (Recital 33).

2003:

- May 6, 2003: Commission Recommendation 2003/361/EC concerning the definition of micro, small and medium-sized enterprises is adopted (Recital 143, 146).

2005:

- May 11, 2005: Directive 2005/29/EC concerning unfair business-to-consumer commercial practices is adopted, with its prohibitions being complementary to those in the AI Act (Recital 29).

2006:

- May 17, 2006: Directive 2006/42/EC on machinery is adopted (Recital 46, Annex I.A.1).

2008:

- July 9, 2008: Regulation (EC) No 765/2008 setting out requirements for accreditation and market surveillance is adopted (Recital 9, 28).

- July 9, 2008: Decision No 768/2008/EC on a common framework for the marketing of products is adopted (Recital 9, 126).

- March 11, 2008: Regulation (EC) No 300/2008 on common rules in the field of civil aviation security is adopted (Recital 49, Annex I.B.13).

- April 23, 2008: Directive 2008/48/EC on credit agreements for consumers is adopted (Recital 158).

2009:

- June 18, 2009: Directive 2009/48/EC on the safety of toys is adopted (Annex I.A.2).

- July 13, 2009: Regulation (EC) No 810/2009 establishing a Community Code on Visas is adopted (Recital 60).

- November 25, 2009: Directive 2009/138/EC on the taking-up and pursuit of the business of Insurance and Reinsurance (Solvency II) is adopted (Recital 158).

2012:

- October 25, 2012: Regulation (EU) No 1025/2012 on European standardisation is adopted (Recital 121, 40, 41).

2013:

- February 5, 2013: Regulation (EU) No 167/2013 on the approval and market surveillance of agricultural and forestry vehicles is adopted (Recital 49, Annex I.B.15).

- January 15, 2013: Regulation (EU) No 168/2013 on the approval and market surveillance of two- or three-wheel vehicles and quadricycles is adopted (Recital 49, Annex I.B.14).

- June 26, 2013: Regulation (EU) No 575/2013 on prudential requirements for credit institutions is adopted (Recital 158).

- June 26, 2013: Directive 2013/32/EU on common procedures for granting and withdrawing international protection is adopted (Recital 60).

- June 26, 2013: Directive 2013/36/EU on access to the activity of credit institutions is adopted (Recital 158).

2014:

- July 23, 2014: Directive 2014/90/EU on marine equipment is adopted (Recital 49, Annex I.B.16).

- February 4, 2014: Directive 2014/17/EU on credit agreements for consumers relating to residential immovable property is adopted (Recital 158).

- February 26, 2014: Directive 2014/31/EU on the harmonisation of laws relating to non-automatic weighing instruments is adopted (Recital 74).

- February 26, 2014: Directive 2014/32/EU on the harmonisation of laws relating to measuring instruments is adopted (Recital 74).

- February 26, 2014: Directive 2014/33/EU on lifts and safety components for lifts is adopted (Annex I.A.4).

- February 26, 2014: Directive 2014/34/EU on equipment and protective systems intended for use in potentially explosive atmospheres is adopted (Annex I.A.5).

- April 16, 2014: Directive 2014/53/EU on the harmonisation of laws relating to radio equipment is adopted (Annex I.A.6).

- May 15, 2014: Directive 2014/68/EU on pressure equipment is adopted (Annex I.A.7).

2016:

- April 27, 2016: Regulation (EU) 2016/679 (General Data Protection Regulation – GDPR) is adopted (Recital 10, 14, 70, 94).

- April 27, 2016: Directive (EU) 2016/680 on the protection of natural persons with regard to the processing of personal data by competent authorities for law enforcement purposes is adopted (Recital 10, 14, 38, 94).

- March 9, 2016: Regulation (EU) 2016/424 on cableway installations is adopted (Annex I.A.8).

- March 9, 2016: Regulation (EU) 2016/425 on personal protective equipment is adopted (Annex I.A.9).

- March 9, 2016: Regulation (EU) 2016/426 on appliances burning gaseous fuels is adopted (Annex I.A.10).

- January 20, 2016: Directive (EU) 2016/97 on insurance distribution is adopted (Recital 158).

- May 11, 2016: Directive (EU) 2016/797 on the interoperability of the rail system within the European Union is adopted (Recital 49, Annex I.B.17).

- October 26, 2016: Directive (EU) 2016/2102 on the accessibility of websites and mobile applications of public sector bodies is adopted (Recital 80).

2017:

- April 5, 2017: Regulation (EU) 2017/745 on medical devices is adopted (Recital 46, 51, 84, 147, Annex I.A.11).

- April 5, 2017: Regulation (EU) 2017/746 on in vitro diagnostic medical devices is adopted (Recital 46, 51, 147, Annex I.A.12).

- November 30, 2017: Regulation (EU) 2017/2226 establishing an Entry/Exit System (EES) is adopted (Annex X.4).

2018:

- May 30, 2018: Regulation (EU) 2018/858 on the approval and market surveillance of motor vehicles and their trailers is adopted (Recital 49, Annex I.B.18).

- July 4, 2018: Regulation (EU) 2018/1139 on common rules in the field of civil aviation and establishing a European Union Aviation Safety Agency is adopted (Recital 49, Annex I.B.20).

- October 23, 2018: Regulation (EU) 2018/1725 on the protection of natural persons with regard to the processing of personal data by the Union institutions, bodies, offices and agencies is adopted (Recital 10, 14, 70).

- September 12, 2018: Regulation (EU) 2018/1240 establishing a European Travel Information and Authorisation System (ETIAS) is adopted (Annex X.5.a).

- September 12, 2018: Regulation (EU) 2018/1241 amending Regulation (EU) 2016/794 for ETIAS is adopted (Annex X.5.b).

- November 28, 2018: Regulation (EU) 2018/1860 on the use of SIS for return of illegally staying third-country nationals is adopted (Annex X.1.a).

- November 28, 2018: Regulation (EU) 2018/1861 on the establishment, operation and use of SIS in border checks is adopted (Annex X.1.b).

- November 28, 2018: Regulation (EU) 2018/1862 on the establishment, operation and use of SIS in police and judicial cooperation is adopted (Annex X.1.c).

2019:

- April 17, 2019: Regulation (EU) 2019/881 on ENISA (the European Union Agency for Cybersecurity) and on information and communications technology cybersecurity certification (Cybersecurity Act) is adopted (Recital 78, 122).

- April 17, 2019: Directive (EU) 2019/790 on copyright and related rights in the Digital Single Market is adopted (Recital 104, 105, 106).

- April 17, 2019: Regulation (EU) 2019/816 establishing a centralised system for the identification of Member States holding conviction information on third-country nationals and stateless persons (ECRIS-TCN) is adopted (Annex X.6).

- April 17, 2019: Directive (EU) 2019/882 on the accessibility requirements for products and services is adopted (Recital 29, 80, 131).

- May 20, 2019: Regulation (EU) 2019/817 establishing a framework for interoperability between EU information systems in the field of borders and visa is adopted (Annex X.7.a).

- May 20, 2019: Regulation (EU) 2019/818 establishing a framework for interoperability between EU information systems in the field of police and judicial cooperation, asylum and migration is adopted (Annex X.7.b).

- June 20, 2019: Regulation (EU) 2019/1020 on market surveillance and compliance of products is adopted (Recital 9, 74, 75, 79, 84, 149, 156, 158, 161, 164, 166, 170).

- October 23, 2019: Directive (EU) 2019/1937 on the protection of persons who report breaches of Union law (Whistleblower Directive) is adopted (Recital 172).

- November 27, 2019: Regulation (EU) 2019/2144 on type-approval requirements for motor vehicles and their trailers is adopted (Recital 49, Annex I.B.19).

2020:

- October 1, 2020: European Council Special meeting conclusions (EUCO 13/20) state the objective of promoting a human-centric approach to AI and being a global leader in secure, trustworthy, and ethical AI (Recital 8).

- October 20, 2020: European Parliament resolution 2020/2012(INL) with recommendations to the Commission on a framework of ethical aspects of AI, robotics, and related technologies is adopted (Recital 8).

- November 25, 2020: Directive (EU) 2020/1828 on representative actions for the protection of the collective interests of consumers is adopted (Article 110).

2021:

- July 7, 2021: Regulation (EU) 2021/1133 amending several regulations regarding the Visa Information System is adopted (Annex X.2.a).

- July 7, 2021: Regulation (EU) 2021/1134 amending several regulations for the purpose of reforming the Visa Information System is adopted (Annex X.2.b).

- December 22, 2021: European Economic and Social Committee opinion on the AI Act (OJ C 517) is published (Recital 3).

- 2021: UNCRC General Comment No 25 on children’s rights in relation to the digital environment is developed (Recital 9, 48).

2022:

- February 28, 2022: Committee of the Regions opinion on the AI Act (OJ C 97) is published (Recital 3).

- March 11, 2022: European Central Bank opinion on the AI Act (OJ C 115) is published (Recital 3).

- May 30, 2022: Regulation (EU) 2022/868 on European data governance is adopted (Recital 141).

- June 29, 2022: Commission notice ‘The “Blue Guide” on the implementation of EU product rules 2022’ is published (Recital 46, 64).

- October 19, 2022: Regulation (EU) 2022/2065 on a Single Market For Digital Services (Digital Services Act) is adopted (Recital 11, 118, 120, 136).

- December 14, 2022: Directive (EU) 2022/2557 on the resilience of critical entities is adopted (Recital 33, 55).

2023:

- May 10, 2023: Regulation (EU) 2023/988 on general product safety is adopted (Recital 166).

- December 13, 2023: Regulation (EU) 2023/2854 on harmonised rules on fair access to and use of data (Data Act) is adopted (Recital 141).

2024:

- January 24, 2024: Commission Decision C(2024) 390 establishing the European Artificial Intelligence Office (AI Office) is adopted (Recital 148, 64).

- March 13, 2024: Position of the European Parliament on the AI Act (not yet published in the Official Journal) is established (Recital 3).

- March 13, 2024: Regulation (EU) 2024/900 on the transparency and targeting of political advertising is adopted (Recital 62).

- May 14, 2024: Regulation (EU) 2024/1358 on the establishment of ‘Eurodac’ for biometric data comparison is adopted (Annex X.3).

- May 21, 2024: Decision of the Council on the AI Act is established (Recital 3).

- June 13, 2024: REGULATION (EU) 2024/1689 of the European Parliament and of the Council (Artificial Intelligence Act) is adopted (Document Title).

- July 12, 2024: The Artificial Intelligence Act is published in the Official Journal of the European Union (Publication Date).

- August 1, 2024: The power to adopt delegated acts referred to in various articles is conferred on the Commission for a period of five years (Article 97(2)).

- November 2, 2024: Member States must identify and make publicly available a list of national public authorities or bodies that supervise or enforce Union law protecting fundamental rights in relation to high-risk AI systems (Article 77(2)).

2025:

- February 2, 2025: Chapters I (General Provisions) and II (Prohibited AI Practices) of the AI Act begin to apply (Article 113(a)).

- May 2, 2025: Codes of practice for general-purpose AI models should be ready (Article 56(9)).

- August 2, 2025: Chapter III Section 4 (Notifying Authorities and Notified Bodies), Chapter V (General-Purpose AI Models), Chapter VII (Governance), Chapter XII (Penalties), and Article 78 (Confidentiality) begin to apply, with the exception of Article 101 (Fines for providers of general-purpose AI models) (Article 113(b)).

- August 2, 2025: Provisions on penalties begin to apply (Recital 179).

- August 2, 2025: Member States must make publicly available information on how competent authorities and single points of contact can be contacted (Article 70(2)).

- August 2, 2025: Member States must lay down and notify to the Commission the rules on penalties, including administrative fines (Recital 179).

- August 2, 2025: Obligations for providers of general-purpose AI models begin to apply (Recital 179).

- August 2, 2025: Commission guidance to facilitate compliance with serious incident reporting obligations is to be issued (Article 73(7)).

2026:

- February 2, 2026: The Commission, after consulting the Board, is to provide guidelines specifying the practical implementation of Article 6 (classification of high-risk AI systems) with examples (Article 6(5)).

- February 2, 2026: The Commission is to adopt an implementing act laying down detailed provisions and a template for the post-market monitoring plan (Article 72(3)).

- August 2, 2026: Member States must ensure that their competent authorities establish at least one AI regulatory sandbox at national level, which must be operational (Article 57(1)).

- August 2, 2026: The remaining provisions of the AI Act begin to apply, except Article 6(1) and its corresponding obligations, which apply from August 2, 2027 (Article 113).

2027:

- August 2, 2027: Providers of general-purpose AI models that were placed on the market before August 2, 2025, must comply with the obligations of the Regulation (Article 111(3)).

2028:

- August 2, 2028 and every four years thereafter: The Commission is to evaluate and report to the European Parliament and Council on the need for amendments to Annex III (high-risk areas), Article 50 (transparency measures), and the effectiveness of the supervision and governance system (Article 112(2)).

- August 2, 2028 and every four years thereafter: The Commission is to report on the review of progress on the energy-efficient development of general-purpose AI models (Article 112(6)).

- August 2, 2028 and every three years thereafter: The Commission is to evaluate the impact and effectiveness of voluntary codes of conduct for non-high-risk AI systems (Article 112(7)).

- August 2, 2028: The Commission is to evaluate the functioning of the AI Office and report to the European Parliament and Council (Article 112(5)).

2029:

- August 2, 2029 and every four years thereafter: The Commission is to submit a report on the evaluation and review of the entire Regulation to the European Parliament and Council, potentially including proposals for amendment (Article 112(3)).

2030:

- August 2, 2030: Providers and deployers of high-risk AI systems intended to be used by public authorities must comply with the requirements and obligations of the Regulation (Article 111(2)).

- December 31, 2030: AI systems that are components of large-scale IT systems listed in Annex X, placed on the market or put into service before August 2, 2027, must be brought into compliance (Article 111(1)).

2031:

- August 2, 2031: The Commission is to carry out an assessment of the enforcement of the Regulation and report to the European Parliament, the Council, and the European Economic and Social Committee (Article 112(13)).

Ongoing:

- Annually (until end of delegation of power): The Commission assesses the need for amendment of Annex III and the list of prohibited AI practices (Article 112(1)).

- Annually: National market surveillance authorities and data protection authorities submit reports to the Commission on the use of ‘real-time’ remote biometric identification systems (Article 5(6)).

- Annually: The Commission publishes reports on the use of ‘real-time’ remote biometric identification systems based on aggregated data (Article 5(7)).

- As needed: The Commission can adopt delegated acts to amend conditions for high-risk AI systems, lists of high-risk AI systems, technical documentation, EU declaration of conformity, conformity assessment procedures, thresholds for general-purpose AI models with systemic risk, and transparency information for general-purpose AI models (Article 97).

- As needed: The Commission, acting through the AI Office, can take actions to monitor and enforce compliance by providers of general-purpose AI models (Articles 88 and 89).

- As needed: The scientific panel may provide qualified alerts to the AI Office regarding general-purpose AI models (Article 90).

- Every two years: Member States report to the Commission on the status of the financial and human resources of national competent authorities (Article 70(6)).
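The application dates in the timeline above can be kept as plain data and queried against a reference date. The following is a minimal Python sketch, not a compliance tool: the dates and article references are copied from the timeline, while the shortened labels and the names `MILESTONES`, `split_milestones`, and `recurring_dates` are assumptions introduced for illustration.

```python
from datetime import date

# Application dates copied from the timeline above. The labels are shorthand
# chosen for this sketch, not official terminology.
MILESTONES = [
    (date(2025, 2, 2), "Chapters I and II apply (Article 113(a))"),
    (date(2025, 8, 2), "GPAI, governance and penalty provisions apply (Article 113(b))"),
    (date(2026, 8, 2), "Main body of the Act applies (Article 113)"),
    (date(2027, 8, 2), "Pre-existing GPAI models must comply (Article 111(3))"),
    (date(2030, 8, 2), "High-risk systems used by public authorities must comply (Article 111(2))"),
    (date(2030, 12, 31), "Annex X large-scale IT system components must comply (Article 111(1))"),
]


def split_milestones(today):
    """Return (already_applicable, upcoming); upcoming holds (days_remaining, label)."""
    applicable = [label for when, label in MILESTONES if when <= today]
    upcoming = [((when - today).days, label) for when, label in MILESTONES if when > today]
    return applicable, upcoming


def recurring_dates(first, every_years, count):
    """List the first `count` due dates of a review repeated every `every_years` years."""
    return [first.replace(year=first.year + i * every_years) for i in range(count)]
```

For example, `recurring_dates(date(2028, 8, 2), 4, 3)` lists the first three due dates of the Article 112(2) evaluation, and `split_milestones(date.today())` separates the milestones already in force from those still ahead.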


EU AI Act Risk Assessment Checklist & Template

Document Version: 1.0

Based on: EU AI Act (Regulation (EU) 2024/1689)

Last Updated: [Date]

Implementation Timeline & Stage Gates

| Stage | When to Perform | Key Deliverables |
| --- | --- | --- |
| Stage 1: Conception | During initial AI system design | Initial Classification Report |
| Stage 2: Development | Throughout development phase | Risk Management Documentation, Data Governance Plan |
| Stage 3: Pre-Market | Before market placement | Technical Documentation, Conformity Assessment, CE Marking |
| Stage 4: Pre-Deployment | Before operational use | FRIA (if applicable), User Instructions |
| Stage 5: Post-Market | Continuous monitoring | Incident Reports, Monitoring Logs |
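The stage gates in the table above lend themselves to a simple tracker that reports which deliverables are still outstanding before a stage can be signed off. The sketch below is one possible shape for this, assuming Python: the stage names and deliverables mirror the table, while `StageGateTracker`, its methods, and the `fria_applicable` flag are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field

# Stage names and deliverables mirror the table above. Treating the FRIA as
# optional through a flag is an assumption made for this sketch.
STAGE_DELIVERABLES = {
    "Conception": ["Initial Classification Report"],
    "Development": ["Risk Management Documentation", "Data Governance Plan"],
    "Pre-Market": ["Technical Documentation", "Conformity Assessment", "CE Marking"],
    "Pre-Deployment": ["FRIA", "User Instructions"],
    "Post-Market": ["Incident Reports", "Monitoring Logs"],
}


@dataclass
class StageGateTracker:
    fria_applicable: bool = True
    completed: set = field(default_factory=set)

    def required(self, stage):
        """Deliverables needed for a stage, dropping the FRIA when it does not apply."""
        items = STAGE_DELIVERABLES[stage]
        if not self.fria_applicable:
            items = [item for item in items if item != "FRIA"]
        return items

    def missing(self, stage):
        """Deliverables for the stage that have not been marked complete."""
        return [item for item in self.required(stage) if item not in self.completed]

    def gate_passed(self, stage):
        """True when every required deliverable for the stage is complete."""
        return not self.missing(stage)
```

A team would add each finished deliverable to `completed` and call `missing("Pre-Market")` before market placement to see what remains. Post-market items are continuous, so that gate is better read as a recurring check than a one-time sign-off.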

Section 1: Initial Classification Assessment

Stage: Conception

Deliverable: Classification Determination Report

1.1 System Identification

| Field | Information Required | Response |
| --- | --- | --- |
| AI System Name | Official designation | _________ |
| Version | Current version number | _________ |
| Provider Name | Legal entity name | _________ |
| Provider Registration | Company registration number | _________ |
| Intended Purpose | Clear description of primary use | _________ |
| Target Users | Who will deploy/use the system | _________ |

1.2 High-Risk Classification Screening

[Reference: Article 6]

Safety Component Check

Is your AI system a safety component of, or itself, a product covered by the Union harmonisation legislation listed in Annex I, in any of these categories?

- Machinery

- Toys

- Lifts

- Medical Devices

- In Vitro Diagnostic Medical Devices

- Radio Equipment

- Pressure Equipment

- Recreational Craft/Personal Watercraft

- Cableway Installations

- Gas Appliances

- Personal Protective Equipment

- Equipment for Explosive Atmospheres

- Civil Aviation Systems

- Two/Three-Wheel Vehicles

- Agricultural/Forestry Vehicles

- Marine Equipment

- Rail System Components

- Motor Vehicles

- None of the above

Requires third-party conformity assessment? [ ] Yes [ ] No
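The safety component check combines two answers: whether the system falls into one of the Annex I product categories, and whether that product requires third-party conformity assessment. Under Article 6(1) both conditions must hold for the system to be high-risk on this route. A minimal Python sketch of that logic follows; the class and function names are hypothetical, and a negative result here does not settle the classification, because the Annex III category check still has to be completed.

```python
from dataclasses import dataclass


@dataclass
class SafetyComponentScreening:
    # True if the system is a safety component of, or is itself, a product
    # in one of the Annex I categories listed above.
    annex_i_product: bool
    # True if that product must undergo third-party conformity assessment.
    third_party_assessment: bool


def high_risk_via_annex_i(screening):
    """Both conditions are cumulative: a 'yes' to only one is not enough."""
    return screening.annex_i_product and screening.third_party_assessment
```

For instance, a system embedded in a medical device that requires notified-body assessment would be screened as `SafetyComponentScreening(True, True)` and flagged as high-risk on this route, while ticking "None of the above" corresponds to `annex_i_product=False`.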

Annex III Category Check

[Reference: Annex III]

Check all that apply:

1. Biometric Systems

- Remote biometric identification (real-time or post)

- Biometric categorization based on sensitive attributes

- Emotion recognition systems

2. Critical Infrastructure

- Safety components for road traffic management

- Safety components for water/gas/heating/electricity supply

- Safety components for critical digital infrastructure

3. Education and Vocational Training

- Determining access/admission to educational institutions

- Assigning persons to educational institutions

- Evaluating learning outcomes/student assessment

- Monitoring prohibited behavior during tests