AI Governance Committee


AI Governance Committee Hub: Your Central Resource for AI Ethics, Regulation, and Oversight

Beyond Ethics Boards That Nobody Listens To

Think your AI ethics board has real power? It probably doesn’t.

An AI Governance Committee is different. It’s a cross-functional team with actual authority to approve, modify, or terminate AI projects. Unlike ethics boards, which mostly just give advice, these committees make binding decisions that stick.

This isn’t just semantics. Early ethics boards failed spectacularly when companies ignored their recommendations. Most members of Axon’s AI ethics board resigned in 2022 after being overruled. Modern committees learned from these failures. They don’t advise. They govern.

The shift reflects AI’s evolution “from a technology to be considered to a business function to be managed”. You can’t manage what you can’t control.

Real Authority Means Real Results

The key difference? Decision-making power. Effective committees have “unambiguous authority to make binding decisions,” including the power for “initiation, continuation, or termination of AI projects”.

Without these teeth, you’re back to advisory theater.

This authority spans the entire AI lifecycle. Data governance through deployment. Model training through retirement. Development through monitoring. It’s comprehensive oversight, not spot checks.

Cross-Functional by Necessity

AI touches everything. So should your committee.

Effective committees are cross-functional and multidisciplinary because AI risks don’t respect departmental boundaries. You need technical expertise (data science, IT security), legal knowledge (compliance, risk management), and business context (operations, strategy).

One department can’t govern what affects every department.

From IT Project to Board Priority

Here’s what changed: AI governance moved upstairs. Committees now “elevate AI from a siloed IT concern to a board-level strategic priority”.

The numbers prove it. Board-level AI oversight disclosures jumped 150% since 2022 among S&P 500 companies. When lawyers start writing proxy statements about your AI governance, you know it matters.

Why Does Your Organization Need an AI Governance Committee

The AI Discovery Problem

Most organizations don’t know what AI they actually have. This creates immediate governance challenges because you can’t manage what you can’t see.

The discovery process typically reveals AI usage across departments that was never formally approved or documented. Employee tools, embedded vendor AI, and algorithmic decision-making systems operate without oversight or risk assessment.

Risk Mitigation Became Business Critical

Immature risk management is a key contributor to the failure of AI projects. But project failure isn’t just about poor performance anymore. It creates legal exposure, regulatory penalties, and reputation damage.

The research identifies specific scenarios that governance prevents: “biased AI models leading to discriminatory hiring or lending practices, privacy breaches resulting from mishandling sensitive data, and performance degradation from unmonitored model drift”.

These aren’t hypothetical risks. They’re documented incidents creating real business liability. Effective governance prevents costly and damaging outcomes before they escalate into legal or regulatory problems.

Trust as Competitive Advantage

Smart companies recognized something: 46% of executives identify responsible AI as a top objective for achieving a competitive advantage.

The business logic is clear. A lack of trust in AI systems is a growing barrier to enterprise adoption. Organizations that demonstrate responsible AI practices differentiate themselves as trustworthy providers.

This trust translates into tangible business benefits: customer confidence, partner relationships, and investor appeal. Companies with governance structures deploy AI systems faster because clear guidelines reduce uncertainty for development teams and foster a predictable environment where innovation can thrive.

Regulatory Mandates, Not Suggestions

The regulatory landscape shifted from voluntary to mandatory. The European Union’s AI Act, the world’s first comprehensive legal framework for AI, imposes stringent, non-negotiable obligations on any organization providing or deploying “high-risk” AI systems within the EU market.

The financial stakes are severe. The EU AI Act’s provision for fines up to 7% of global annual turnover for serious violations transforms a compliance mandate into a potentially catastrophic financial risk.

Even existing regulations gained new significance. Data protection laws such as GDPR and CCPA already impose significant obligations that AI amplifies. Under GDPR, fines can reach the higher of €20m or 4% of global turnover (Art. 83), which can amount to billions of dollars for large corporations. Under California’s CCPA/CPRA, fines are per-violation (e.g., up to $2,663–$7,988 after 2024 CPI adjustment).
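To make the scale concrete, here is a minimal sketch of the arithmetic behind the ceilings quoted above. The function names are hypothetical, the figures are only the ones cited in this section, and the AI Act function models just the 7% turnover-based figure. Treat it as a back-of-the-envelope illustration, not legal advice.

```python
def gdpr_max_fine_eur(global_turnover_eur: float) -> float:
    """Ceiling quoted above for GDPR (Art. 83): the higher of EUR 20m or 4% of turnover."""
    return max(20_000_000.0, 0.04 * global_turnover_eur)


def ai_act_max_fine_eur(global_turnover_eur: float) -> float:
    """Turnover-based ceiling quoted above for serious EU AI Act violations (7%).

    Assumption: only the percentage figure cited in this section is modeled.
    """
    return 0.07 * global_turnover_eur


# Example: compare a mid-sized firm with a large multinational.
for turnover in (100_000_000, 50_000_000_000):
    print(
        f"turnover EUR {turnover:,}: "
        f"GDPR ceiling EUR {gdpr_max_fine_eur(turnover):,.0f}, "
        f"AI Act ceiling EUR {ai_act_max_fine_eur(turnover):,.0f}"
    )
```

For smaller organizations the fixed GDPR amount dominates. For large ones the percentage does, which is why the exposure scales into the billions.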

Strategic Repositioning Signal

The governance trend reflects fundamental business evolution. The increasing disclosure of board-level oversight of AI in corporate proxy statements—a practice that has soared by over 150% since 2022 among S&P 500 companies—is a clear indicator of this strategic repositioning.

This isn’t just regulatory theater. It represents AI’s elevation from departmental IT concern to board-level strategic priority that affects enterprise risk, competitive positioning, and shareholder value.

Need to discover what AI systems you actually have? Start with our comprehensive AI inventory methodology to map your organization’s AI landscape.


What Does an AI Governance Committee Actually Do?

The Use Case Gatekeeper Function

Every AI project starts with a question: “Should we do this?”

The committee answers definitively through a formal intake and review process for all proposed AI use cases and tools. It is responsible for the approval, rejection, or conditional approval of all AI projects and third-party vendor procurements.

Projects get submitted through standardized channels. Risk assessments happen before development begins. Business justification gets scrutinized carefully. Technical feasibility gets verified. Each proposal faces the same rigorous review regardless of who sponsors it.

Approve as-is. Require modifications. Kill entirely.

That’s real authority.
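The three outcomes above can be sketched as a small intake check. This is a hypothetical illustration: the `Proposal` fields, the `review` function, and the decision rule are assumptions made for the example, not a prescribed process.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Decision(Enum):
    APPROVE = "approve as-is"
    MODIFY = "require modifications"
    REJECT = "kill entirely"


@dataclass
class Proposal:
    name: str
    sponsor: str                  # recorded, but deliberately ignored by review()
    risk_assessed: bool           # risk assessment completed before development
    business_case_ok: bool        # business justification passed scrutiny
    technically_feasible: bool    # technical feasibility verified
    open_issues: List[str] = field(default_factory=list)


def review(p: Proposal) -> Decision:
    """Apply the same gates to every proposal, whoever sponsors it.

    Assumed rule: a failed gate rejects the proposal; unresolved issues
    lead to conditional approval; otherwise it is approved as-is.
    """
    if not (p.risk_assessed and p.business_case_ok and p.technically_feasible):
        return Decision.REJECT
    if p.open_issues:
        return Decision.MODIFY
    return Decision.APPROVE
```

For example, `review(Proposal("resume screener", "CEO", True, True, True, ["add bias audit"]))` returns `Decision.MODIFY`. The sponsor field never enters the decision, which mirrors the "same rigorous review" principle.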

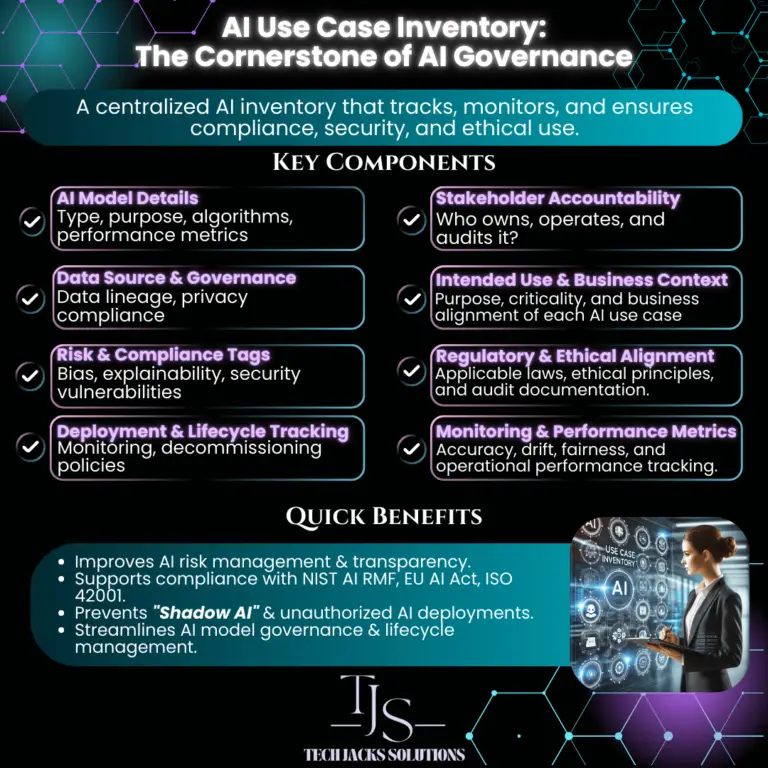

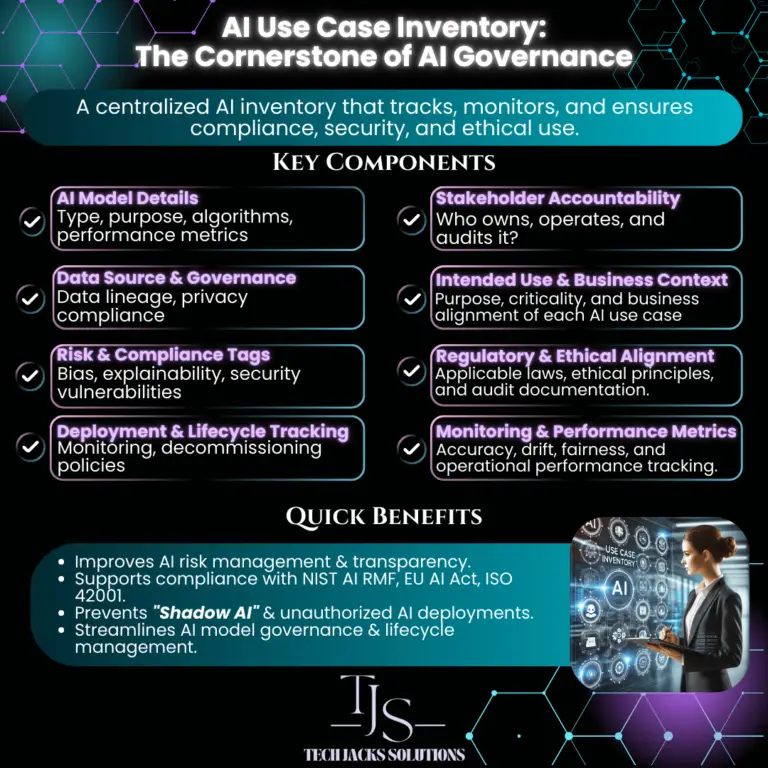

AI System Discovery and Inventory Management

You can’t govern what you don’t know exists. Simple truth.

The committee oversees the creation and maintenance of a centralized, comprehensive inventory of all AI systems used within the organization, including those embedded in third-party products. That covers employee tools, vendor solutions, algorithmic decision-making, and embedded AI in purchased software.

The discovery process typically reveals AI usage across departments that was never formally approved: sales scoring tools, HR screening algorithms, finance automation, customer service chatbots.

Once identified, each system gets classified by risk level, business impact, and regulatory requirements. This inventory becomes the foundation for all subsequent governance activities.
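As a rough sketch of what one inventory entry might hold, the record below captures the three classification dimensions named above. The class names, field names, and three-level risk scale are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystem:
    name: str
    owner_department: str
    source: str                    # e.g. "internal", "vendor", "embedded", "employee tool"
    risk_level: RiskLevel
    business_impact: str
    regulatory_requirements: List[str] = field(default_factory=list)
    formally_approved: bool = False


class Inventory:
    """Centralized register of AI systems, keyed by system name."""

    def __init__(self) -> None:
        self._systems: Dict[str, AISystem] = {}

    def register(self, system: AISystem) -> None:
        if system.name in self._systems:
            raise ValueError(f"{system.name!r} is already in the inventory")
        self._systems[system.name] = system

    def unapproved(self) -> List[AISystem]:
        """Systems found during discovery that never went through approval."""
        return [s for s in self._systems.values() if not s.formally_approved]

    def by_risk(self, minimum: RiskLevel) -> List[AISystem]:
        """Systems at or above a risk level, highest risk first."""
        hits = [s for s in self._systems.values() if s.risk_level >= minimum]
        return sorted(hits, key=lambda s: s.risk_level, reverse=True)
```

A query such as `inventory.by_risk(RiskLevel.HIGH)` gives the committee a starting list for its review agenda.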

Need a systematic approach to AI discovery? Our comprehensive inventory methodology walks you through the entire cataloging process.


Risk Assessment and Ongoing Monitoring

Committee oversight doesn’t end at project approval. That’s when real work begins.

The committee establishes and oversees robust methodologies for identifying, analyzing, prioritizing, and mitigating AI-related risks across all stages of development and deployment. This spans initial risk evaluation, ongoing performance monitoring, and incident response coordination.

Active monitoring covers multiple dimensions:

- Model performance (accuracy degradation, drift detection, output quality)

- Bias assessment (fairness metrics across protected categories)

- Security monitoring (adversarial attacks, data poisoning, prompt injection)

- Compliance verification (regulatory requirement adherence)

The committee implements processes for the regular, ongoing auditing of AI systems in production to check for performance degradation, model drift, and continued compliance with policies and regulations. Production systems get continuous oversight.
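Two of the monitoring dimensions above, performance degradation and bias assessment, lend themselves to simple automated checks. The sketch below is a minimal illustration in plain Python. The function names and the 0.05 accuracy-drop threshold are assumptions, and the selection-rate ratio is only one of many possible fairness metrics.

```python
from typing import Dict, Hashable, Sequence


def accuracy(predictions: Sequence, labels: Sequence) -> float:
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("predictions and labels must be non-empty and equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def accuracy_degraded(baseline: float, current: float, max_drop: float = 0.05) -> bool:
    """Flag the model when accuracy falls more than max_drop below its baseline."""
    return (baseline - current) > max_drop


def selection_rates(decisions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive decisions (1) per group; decisions are 0 or 1."""
    totals: Dict[Hashable, int] = {}
    positives: Dict[Hashable, int] = {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    return {g: positives[g] / totals[g] for g in totals}


def selection_rate_ratio(decisions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest
```

Which thresholds trigger escalation is a policy decision for the committee. The code only makes the check repeatable.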

Vendor Risk and Third-Party Oversight

AI governance extends beyond internal development. External partnerships need oversight too.

Committee responsibilities include evaluating AI vendor relationships, assessing third-party tool risks, and managing supplier governance requirements. The scope runs from ChatGPT usage policies to complex AI platform integrations.

Vendor evaluation examines training data sources, security practices, compliance certifications, and contract terms. The committee determines which external AI tools employees can use and under what conditions.

For major AI vendor relationships, the committee oversees due diligence, contract negotiation, and ongoing performance monitoring.

Policy Development and Framework Management

Policies require continuous evolution. New regulatory requirements emerge, technologies evolve rapidly, and operational experience teaches lessons.

The committee ensures policies remain practical, enforceable, and aligned with business objectives. Living documents, not static rules.

Want to formalize your AI policies? Our use case policy framework provides templates and implementation guidance.


Incident Response Coordination

When AI systems fail, committees coordinate the response. No exceptions.

Incident response covers immediate containment, root cause analysis, stakeholder communication, regulatory reporting, and system remediation. The committee ensures incidents get properly documented and lessons learned get integrated into future governance practices.
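One way to make "properly documented" enforceable is to track each response step explicitly. The sketch below is a hypothetical record structure. The step names follow the list above, while the class, its methods, and the closing rule are assumptions for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Step(Enum):
    CONTAINMENT = "immediate containment"
    ROOT_CAUSE = "root cause analysis"
    COMMUNICATION = "stakeholder communication"
    REGULATORY_REPORTING = "regulatory reporting"
    REMEDIATION = "system remediation"


@dataclass
class Incident:
    system: str
    summary: str
    completed: Dict[Step, str] = field(default_factory=dict)
    lessons_learned: List[str] = field(default_factory=list)

    def complete(self, step: Step, note: str) -> None:
        """Mark a step done; an empty note is rejected so every step is documented."""
        if not note.strip():
            raise ValueError("each completed step needs a documented note")
        self.completed[step] = note

    def outstanding(self) -> List[Step]:
        """Steps not yet completed, in the order listed above."""
        return [s for s in Step if s not in self.completed]

    def can_close(self) -> bool:
        """Assumed rule: close only when all steps are done and lessons are recorded."""
        return not self.outstanding() and bool(self.lessons_learned)
```

Requiring at least one recorded lesson before closure is one simple way to feed incidents back into governance practice.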

Board and Executive Reporting

Committee work ultimately serves executive decision-making and board oversight. Regular communication up the chain.

Reporting includes governance metrics, risk assessments, incident summaries, and strategic recommendations. The committee translates technical AI issues into business language that executives and board members can understand and act upon.

This reporting function connects operational AI governance to corporate governance. AI risks and opportunities get appropriate board-level attention.

Ready to implement these functions systematically? Start with our step-by-step committee charter to formalize authority and responsibilities.

These operational activities represent the practical implementation of AI governance principles. The committee serves as the central mechanism for balancing the interests of all key stakeholders—including leadership, employees, customers, and investors—to ensure that AI is developed and deployed ethically and for the company’s good. Success depends on systematic execution of these functions rather than ad-hoc AI management approaches.

How to Get Started: Your Strategic Implementation Roadmap

Joining the Responsible AI Leadership Community

Smart organizations build AI governance committees because responsible AI creates business value. Microsoft’s Office of Responsible AI, Google’s AI Principles framework, and IBM’s Ethics Board demonstrate how governance becomes a competitive advantage.

These companies built governance structures proactively. They recognized that sustainable AI requires ethical accountability and stakeholder trust.

Multiple Strategic Benefits

Governance committees deliver value across several dimensions:

Business Acceleration: A robust governance framework enables the “faster and more reliable deployment of AI systems,” turning risk management from a compliance hurdle into a business accelerator. Clear guidelines eliminate uncertainty for development teams.

Trust and Market Position: 46% of executives identify responsible AI as a top objective for achieving competitive advantage. Organizations that embed responsible AI principles into their strategy differentiate themselves as trustworthy providers.

Regulatory Preparedness: Governance frameworks prepare organizations for evolving regulatory landscapes. Building governance proactively positions you ahead of requirements.

Structured Implementation Approach

Successful governance implementation proceeds in stages. Rather than starting from a blank page, it can follow established methodologies such as the NIST AI RMF or ISO/IEC 42001.

Committee development involves three strategic areas: establishing formal authority, developing governance frameworks, and operationalizing oversight functions. Each area builds on the previous foundation.

Committee Authority: Operational vs Advisory

Your committee needs substantive decision-making power. The fundamental distinction lies in authority and function. While an ethics board primarily advises on principles, a governance committee implements, monitors, and enforces policies.

Framework Integration for Maturity

Effective committees align with established frameworks:

Industry Standards: ISO/IEC 42001 provides a certifiable management structure for ensuring consistency and repeatability.

EU AI Act: For applicable organizations, the committee is the central body responsible for implementing stringent requirements including continuous Risk Management Systems, comprehensive Quality Management Systems, and robust Post-Market Monitoring processes.

Implementation Resource Considerations

Committee implementation requires organizational commitment across multiple areas including executive sponsorship, cross-functional team participation, training, and administrative support. The specific resource allocation varies based on organizational size, AI complexity, and governance maturity objectives.

Success Through Strategic Implementation

Ready for comprehensive implementation methodology? Our detailed 8-stage strategic framework provides regulatory alignment, framework integration, and proven implementation approaches that align with industry best practices and established governance frameworks.

The structured implementation approach reflects industry best practices for governance maturity development. Organizations investing in proactive oversight position themselves as responsible AI leaders while capturing the business advantages that come from stakeholder trust, systematic risk management, and strategic positioning within the evolving AI landscape.

Ready to build yours? Our Step-by-Step Implementation guide walks you through the setup process.


Framework Builders: Where Governance Standards Actually Come From

Beyond Corporate Examples: The Source Code

Microsoft didn’t invent responsible AI governance. Neither did Google or IBM.

They used frameworks built by standards organizations, government agencies, and research institutions. The same frameworks available to your organization. You don’t need to reverse-engineer governance from corporate case studies when you can access the original blueprints.

Smart organizations go directly to framework builders rather than copying implementations.

NIST: The Operational Foundation

The U.S. National Institute of Standards and Technology (NIST) AI Risk Management Framework provides a voluntary but highly influential and practical methodology for implementation. The AI RMF is structured around four core functions—GOVERN, MAP, MEASURE, and MANAGE—that provide a comprehensive lifecycle approach to risk management.

NIST didn’t create theory. They built operational methodology.

The framework is designed to be operationalized by a cross-functional governance team, and its activities directly align with committee mandates. Each function provides specific subcategories with actionable outcomes that committees can implement immediately.
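A committee can use the four functions as a simple coverage check on its own activities. In the sketch below, the function names GOVERN, MAP, MEASURE, and MANAGE come from the AI RMF. The assignment of activities to functions is an illustrative assumption rather than anything NIST prescribes, and a real mapping should be made against the framework's own categories.

```python
from enum import Enum
from typing import Iterable, List


class RmfFunction(Enum):
    GOVERN = "GOVERN"
    MAP = "MAP"
    MEASURE = "MEASURE"
    MANAGE = "MANAGE"


# Assumption: one plausible assignment of the committee activities
# described in this article to the four AI RMF functions.
ACTIVITY_TO_FUNCTION = {
    "policy development": RmfFunction.GOVERN,
    "board and executive reporting": RmfFunction.GOVERN,
    "AI system inventory": RmfFunction.MAP,
    "use case intake review": RmfFunction.MAP,
    "risk assessment and monitoring": RmfFunction.MEASURE,
    "incident response": RmfFunction.MANAGE,
    "vendor risk oversight": RmfFunction.MANAGE,
}


def uncovered_functions(active_activities: Iterable[str]) -> List[RmfFunction]:
    """Return the RMF functions that none of the active activities touch."""
    covered = {
        ACTIVITY_TO_FUNCTION[a]
        for a in active_activities
        if a in ACTIVITY_TO_FUNCTION
    }
    return [f for f in RmfFunction if f not in covered]
```

A committee that so far only writes policy and maintains an inventory would see MEASURE and MANAGE come back as gaps.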

The NIST approach gives you proven structure without requiring innovation. Your committee implements rather than invents.

EU AI Act: Regulatory Architecture

The European Union’s AI Act is arguably the most significant piece of AI regulation globally. But beyond compliance requirements, the Act provides governance architecture that applies broadly.

Article 9’s continuous Risk Management System requirements, Article 17’s comprehensive Quality Management System mandates, and Article 72’s robust Post-Market Monitoring processes create operational frameworks any organization can adopt.

The EU didn’t just write regulations. They defined governance processes.

Even organizations outside EU jurisdiction benefit from adopting these systematic approaches to risk management, quality control, and ongoing monitoring. The processes work regardless of regulatory scope.

ISO/IEC 42001: Certifiable Systems

ISO/IEC 42001 is the first international, certifiable standard for an AI Management System (AIMS). Adopting this standard and achieving certification provides a powerful, externally validated demonstration of an organization’s commitment to responsible AI.

ISO provides the management system architecture that turns governance principles into auditable practice.

The standard’s high-level structure mirrors other ISO management system standards, with clauses covering Context, Leadership, Planning, Support, Operation, Performance Evaluation, and Improvement. Your organization can integrate AI governance into existing management systems rather than building parallel structures.

OECD: International Consensus Building

The OECD AI Principles represent international consensus on responsible AI development and deployment. These principles inform national policies and corporate frameworks worldwide.

The OECD approach emphasizes human-centered values, fairness, transparency, and accountability. But they also provide practical guidance on implementing these principles through governance structures.

Your committee can adopt OECD principles directly rather than developing ethics frameworks from scratch. International consensus provides credibility and stakeholder acceptance.

Standards Development Organizations: Technical Specifications

IEEE, BSI, and other standards development organizations create technical specifications for AI governance implementation. These provide detailed methodologies for risk assessment, testing protocols, and documentation requirements.

Technical standards offer specificity that high-level frameworks sometimes lack. Your committee benefits from proven methodologies rather than experimental approaches.

Research Institutions: Evidence-Based Approaches

Organizations like MIT’s Computer Science and Artificial Intelligence Laboratory, Stanford’s Human-Centered AI Institute, and Cambridge’s Centre for the Future of Intelligence conduct governance research that informs framework development.

These institutions provide an evidence base for governance approaches, helping committees distinguish practices that actually work from those that sound reasonable but lack effectiveness data.

Accelerating Your Framework Development

Framework builders offer several strategic advantages:

Proven Methodology: Tested approaches rather than experimental implementations

Broad Acceptance: Stakeholder familiarity with established standards

Ongoing Updates: Frameworks evolve with regulatory changes and emerging practices

Implementation Support: Training, certification, and consulting resources available

Peer Network: Community of organizations using similar approaches

Accessing Framework Resources

Most framework builders provide implementation resources:

- NIST: Free AI RMF documentation, implementation guides, training materials

- ISO: Certification programs, auditor training, implementation consulting

- EU: AI Act guidance documents, implementation timelines, compliance checklists

- OECD: Policy guidance, country implementation examples, stakeholder resources

Your committee doesn’t need to build governance from scratch when comprehensive frameworks already exist.

Ready to formalize your framework selection and implementation? Our governance charter development guide helps you select appropriate frameworks and establish formal authority structures that align with international standards and regulatory requirements.

Need specific EU AI Act compliance guidance? Our comprehensive EU AI Act implementation resource provides detailed regulatory requirements and compliance strategies.

Framework builders provide the governance architecture that successful organizations implement rather than creating proprietary approaches. By adopting established frameworks, committees benefit from proven methodologies, stakeholder familiarity, and ongoing support rather than experimental governance that may lack effectiveness or credibility.