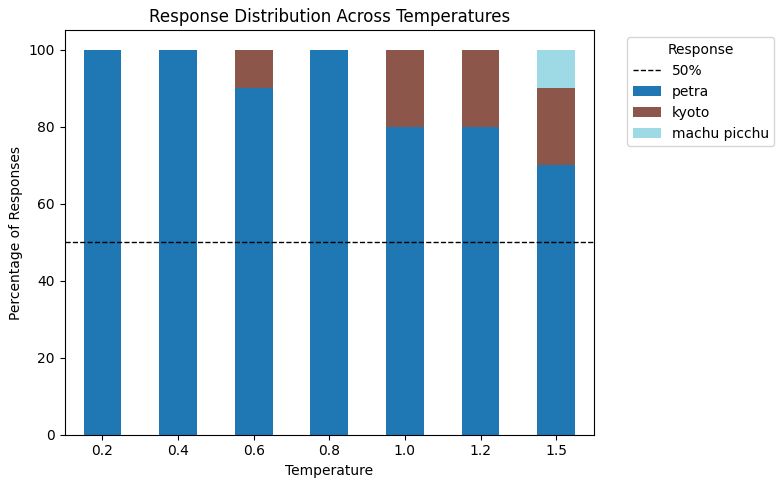

Large language models (LLMs) expose several parameters that let you tune their behavior and control how they generate responses. If a model isn’t producing the desired output, the issue often lies in how these parameters are configured. In this tutorial, we’ll explore some of the most commonly used ones: max_completion_tokens, temperature, top_p, presence_penalty, and
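To make two of the parameters named above more concrete, here is a minimal sketch of what temperature and top_p are commonly understood to do to a next-token distribution. It assumes the textbook definitions (temperature divides the logits before the softmax; top_p keeps the smallest set of most likely tokens whose cumulative probability reaches the threshold). The function names and the toy logits are hypothetical, and a given provider's implementation may differ in its details.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing them by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative mass reaches top_p,
    then renormalize the kept probabilities so they sum to 1."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


# Hypothetical scores for four candidate tokens (illustration only).
toy_logits = [2.0, 1.0, 0.5, -1.0]

sharp = softmax_with_temperature(toy_logits, 0.5)  # low temperature: peaked
flat = softmax_with_temperature(toy_logits, 2.0)   # high temperature: flatter
nucleus = top_p_filter(softmax_with_temperature(toy_logits, 1.0), 0.9)

print("T=0.5:", [round(p, 3) for p in sharp])
print("T=2.0:", [round(p, 3) for p in flat])
print("top_p=0.9 keeps token indices:", sorted(nucleus))
```

In this sketch, lowering the temperature concentrates probability on the highest-scoring token, while a top_p below 1 drops the least likely tokens from consideration before sampling.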

The post 5 Common LLM Parameters Explained with Examples appeared first on MarkTechPost.