Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

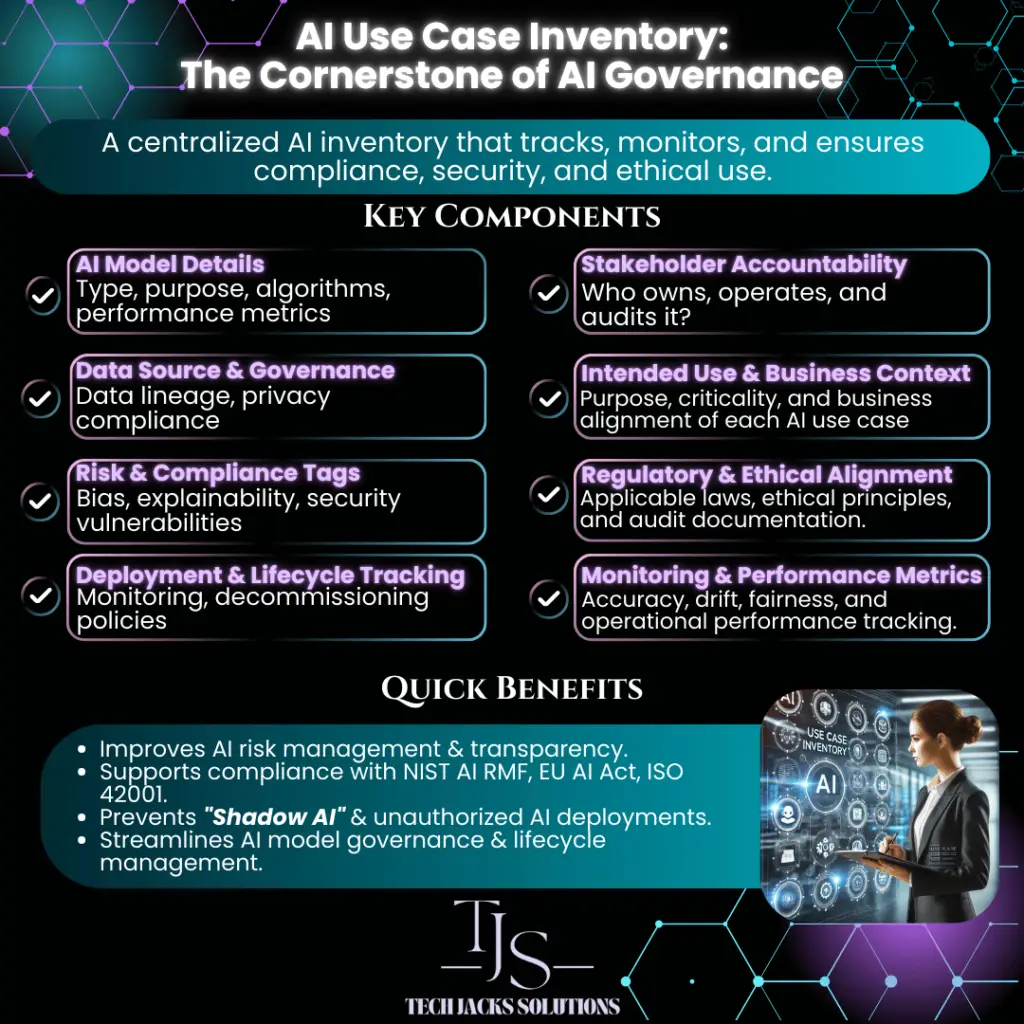

AI Use Case Trackers

You know what’s funny about AI governance? Everyone talks about it, but most organizations are flying blind. They’ve got AI systems scattered across departments, no one knows who owns what, and when regulators come knocking, it’s a scramble to find documentation.

An AI Use Case Tracker fixes this mess. Think of it as a master spreadsheet that answers the question: “What AI are we actually using, and should we be worried about any of it?”

Why Bother With an AI Use Case Tracker?

Four reasons, really.

First, regulations. The EU AI Act isn’t messing around. NIST has published its AI Risk Management Framework. Your industry probably has its own rules. Without documentation, you’re toast when auditors show up.

Second, risk. AI can discriminate against customers, leak sensitive data, or make decisions nobody can explain. You need to know which systems pose which risks.

Third, it makes operations smoother. When something breaks at 2 AM, you want to know exactly who to call and what version of the model is running.

Fourth, money. Companies pour millions into AI without tracking whether it actually works. A tracker forces you to document expected ROI and measure against it.

The 32 Things You Should Track (And Why Each Matters)

Let me break these into logical groups so your brain doesn’t melt reading through them all.

📘 Identification & Ownership

These fields answer: “What is this AI and who’s responsible for it?”

Use Case ID

Every AI system needs a unique identifier. UC-2024-001 or whatever format works for you.

Without this, you can’t track anything. Try explaining to an auditor which version of “the marketing model” had the bias issue six months ago. Good luck with that. The NIST AI RMF’s Govern function (GOVERN 1.6) calls for mechanisms to inventory AI systems, and an inventory is only as good as its identifiers.
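A minimal sketch of generating and validating IDs, assuming the UC-YYYY-NNN pattern from the example above. The regex and the `next_use_case_id` helper are hypothetical illustrations, not part of any standard:

```python
import re

# Assumed format: "UC-<four-digit year>-<three-digit sequence>", e.g. UC-2024-001.
USE_CASE_ID = re.compile(r"UC-(\d{4})-(\d{3})")


def is_valid_use_case_id(candidate: str) -> bool:
    """Return True if the whole string matches the assumed UC-YYYY-NNN format."""
    return USE_CASE_ID.fullmatch(candidate) is not None


def next_use_case_id(existing: list[str], year: int) -> str:
    """Return the next unused ID for the given year (hypothetical helper)."""
    sequences = [
        int(match.group(2))
        for match in (USE_CASE_ID.fullmatch(uid) for uid in existing)
        if match and int(match.group(1)) == year
    ]
    return f"UC-{year}-{max(sequences, default=0) + 1:03d}"


ids = ["UC-2024-001", "UC-2024-002", "UC-2023-007"]
print(next_use_case_id(ids, 2024))  # UC-2024-003
print(next_use_case_id(ids, 2025))  # UC-2025-001
```

Because IDs are never reused, an auditor can follow UC-2024-002 through every version and review it ever had.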

Use Case Name

“Customer Churn Prediction” beats “ML Model v2” every time. People need to understand what they’re talking about without a decoder ring. Clear naming is the first step toward the transparency the OECD AI Principles call for.

Business Function

Which department uses this? HR’s hiring algorithms face different regulations than Marketing’s recommendation engines. The EU AI Act cares deeply about context: its Annex III lists AI used in employment, such as screening job applications, as high-risk, while an AI suggesting products generally isn’t.

Owner(s) and Email

Someone needs to be accountable. Not a team. Not a committee. A person with a name and email address.

When your AI starts making weird decisions, you need to know exactly who to call. ISO/IEC 42001 Clause 5.3 (roles, responsibilities and authorities) hammers this point home: no accountability means no governance.

Objective

What problem does this AI solve? “Reduce customer churn by 10%” is measurable. “Improve customer experience” is meaningless fluff.

Clear objectives prevent scope creep and let you kill underperforming projects. ISO/IEC 42001 Clause 6.2 requires documented AI objectives.

🧠 Technical/Model Metadata

These fields capture the technical DNA of your AI systems.

AI Type

Specify the category: Natural Language Processing, Computer Vision, Recommender System, Time Series Forecasting, etc. Different types carry different risks.

NLP systems reading customer emails create privacy concerns. Computer vision in warehouses might track employee movements. Recommender systems can create filter bubbles. Document the type to anticipate category-specific risks, following the OECD classification framework.[1]

Model Type

Specify architecture (e.g., Decision Tree, CNN, Transformer, XGBoost). This directly impacts explainability (can a human understand decisions?) and your ability to audit, retrain, or patch models.

Example: A Random Forest can show feature importance. A deep neural network is a black box. A rules-based system shows exact logic. Regulators increasingly care about model transparency, especially in high-risk areas like hiring or credit decisions (NIST SP 1270).

Model Version

Track releases meticulously. Say version 1.2.3 had a bug that rejected qualified female candidates and version 1.2.4 fixed it. Without version tracking, you can’t identify affected users or demonstrate remediation to regulators.

Use semantic versioning (major.minor.patch) and maintain a changelog. ISO/IEC 42001 requires configuration management for AI systems.
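Here is a sketch of why semantic versions should be compared as numbers, and how a version field lets you find affected decisions. The deployment log and decision IDs are hypothetical, and the parser assumes plain `major.minor.patch` strings without pre-release tags like `-rc.1`:

```python
def parse_semver(version: str) -> tuple[int, int, int]:
    """Split 'major.minor.patch' into integers so versions compare numerically."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


# Hypothetical deployment log: (decision ID, model version that produced it).
decisions = [("D-101", "1.2.2"), ("D-102", "1.2.3"), ("D-103", "1.2.4")]

# Affected range: from the release that introduced the bug up to (not including) the fix.
bug_introduced, bug_fixed = parse_semver("1.2.3"), parse_semver("1.2.4")
affected = [d for d, v in decisions if bug_introduced <= parse_semver(v) < bug_fixed]
print(affected)  # ['D-102']

# Numeric tuples avoid the string-comparison trap where "1.10.0" sorts before "1.9.0".
print(parse_semver("1.10.0") > parse_semver("1.9.0"))  # True
print("1.10.0" > "1.9.0")                              # False
```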

🗄️ Data & Risk Attributes

The most lawsuit-prone section of your tracker

Data Source

List every source explicitly. “Customer database” isn’t enough. Try: “Salesforce CRM (2020-2024), AWS transaction logs, demographic data from InfoGroup (under MSA dated 3/15/23).”

Data provenance matters legally. Using scraped LinkedIn data for hiring? That’s a lawsuit. Using customer data beyond your privacy policy scope? Another lawsuit. NIST SP 1271 requires detailed data source documentation.

Data Sensitivity

Rate your data (Public/Internal/Confidential/Restricted). Does it include:

- PII (names, addresses, SSNs)?

- PHI (health records under HIPAA)?

- Financial data (account numbers, transactions)?

- Biometric data (fingerprints, facial recognition)?

Get this wrong and GDPR fines can reach €20 million or 4% of worldwide annual turnover, whichever is higher. NIST SP 800-53 Rev. 5 and ISO/IEC 27001 provide classification frameworks.
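One way to keep ratings consistent is to let the most sensitive data category set the floor. This is a sketch; the category-to-level mapping below is an assumption you would replace with your own classification policy:

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Ordered so that max() picks the most restrictive level."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


# Assumed minimum level per data category; set these from your own policy.
MINIMUM_LEVEL = {
    "pii": Sensitivity.CONFIDENTIAL,
    "financial": Sensitivity.CONFIDENTIAL,
    "phi": Sensitivity.RESTRICTED,
    "biometric": Sensitivity.RESTRICTED,
}


def classify(categories: set[str], baseline: Sensitivity = Sensitivity.INTERNAL) -> Sensitivity:
    """Return the highest level required by any category present in the data.

    Unknown categories raise KeyError on purpose, so nobody skips classifying them.
    """
    return max([baseline, *(MINIMUM_LEVEL[c] for c in categories)])


print(classify(set()).name)                 # INTERNAL
print(classify({"pii", "financial"}).name)  # CONFIDENTIAL
print(classify({"pii", "biometric"}).name)  # RESTRICTED
```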

Data Governance / Lineage

Document the data journey. Who approved its use? How does it flow through systems? What transformations occur?

Example: “Raw data → ETL pipeline strips PII → Feature engineering adds derived fields → Model training on anonymized set → Audit log captures all transformations”

Regulators love these diagrams. The NIST AI RMF’s Map function calls for understanding data dependencies.

Retention Period

Specify exact timeframes with justification. “Financial predictions: 7 years (IRS requirement). Training data: 3 years (model refresh cycle). User behavior data: 90 days (privacy policy limit).”

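GDPR Article 5(1)(e) sets the storage limitation principle: personal data may be kept in identifiable form no longer than necessary for its purpose. Keeping data “just in case” violates this principle. ISO/IEC 27701 provides retention guidance.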
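Retention rules only help if something computes the deletion date. A minimal sketch, assuming the illustrative periods above are stored as `(unit, amount)` pairs; the category keys are hypothetical:

```python
from datetime import date, timedelta

# Illustrative retention rules mirroring the examples above: (unit, amount).
RETENTION = {
    "financial_predictions": ("years", 7),
    "training_data": ("years", 3),
    "user_behavior": ("days", 90),
}


def add_years(start: date, years: int) -> date:
    """Shift a date by whole years; Feb 29 falls back to Feb 28 in non-leap years."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        return start.replace(year=start.year + years, day=28)


def delete_after(category: str, collected: date) -> date:
    """Date after which records of this category should be deleted."""
    unit, amount = RETENTION[category]
    if unit == "days":
        return collected + timedelta(days=amount)
    return add_years(collected, amount)


def is_overdue(category: str, collected: date, today: date) -> bool:
    """True if data collected on `collected` has outlived its retention period."""
    return today > delete_after(category, collected)


print(delete_after("user_behavior", date(2024, 1, 1)))   # 2024-03-31
print(delete_after("training_data", date(2024, 2, 29)))  # 2027-02-28
```

Whole years are added with `date.replace` instead of `timedelta(days=365 * n)`, so leap days don’t shift the deadline.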

Bias & Fairness Concerns

Document specific, measurable disparities. Vague statements don’t cut it. Better:

- “Loan model approves 72% of white applicants vs 58% of Black applicants”

- “Resume screener favors male-associated names (78% callback vs 61%)”

- “Customer service bot responds 2.3x slower to non-English names”

Include your mitigation strategies. NIST SP 1270 provides bias testing frameworks.
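A common first screen for disparities like these is the impact ratio: the disadvantaged group’s selection rate divided by the reference group’s. The 0.8 threshold comes from the “four-fifths rule” in the US EEOC Uniform Guidelines, which was written for employment selection; applying it to lending is a rough heuristic here, not a legal test. A sketch using the rates listed above:

```python
def impact_ratio(group_rate: float, reference_rate: float) -> float:
    """Selection rate of the disadvantaged group divided by the reference group's."""
    return group_rate / reference_rate


FOUR_FIFTHS = 0.8  # threshold from the EEOC four-fifths rule of thumb

# Rates taken from the example disparities above.
loan = impact_ratio(0.58, 0.72)    # approval rates
resume = impact_ratio(0.61, 0.78)  # callback rates

for name, ratio in [("loan", loan), ("resume", resume)]:
    status = "below 4/5, investigate" if ratio < FOUR_FIFTHS else "passes 4/5 screen"
    print(f"{name}: {ratio:.3f} ({status})")
```

This prints `loan: 0.806 (passes 4/5 screen)` and `resume: 0.782 (below 4/5, investigate)`. Note the loan model’s 14-point gap squeaks past the screen, which is why the ratio belongs in the tracker alongside the raw rates, not instead of them.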

AI Inherent Risk

Rate based on autonomy and impact. Consider:

- Low: Makes suggestions humans review (spell checker)

- Medium: Automates decisions with human oversight (fraud alerts)

- High: Makes autonomous decisions affecting people (loan approvals, hiring)

- Critical: Safety-critical or legally-binding decisions (medical diagnosis, autonomous vehicles)

The EU AI Act defines four risk tiers (unacceptable, high, limited, and minimal), each with different requirements.
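The tracker tiers above can be expressed as a short decision function so two reviewers rate the same system the same way. This is a sketch under assumptions: the four yes/no questions and the order they are checked in are one reading of the bullets, and these tracker tiers do not map one-to-one onto the EU AI Act’s categories:

```python
from enum import Enum


class InherentRisk(Enum):
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"
    CRITICAL = "Critical"


def inherent_risk(*, makes_decisions: bool, human_oversight: bool,
                  affects_people: bool, safety_or_legally_binding: bool) -> InherentRisk:
    """Map the tracker tiers onto yes/no questions (an assumed decision order)."""
    if safety_or_legally_binding:
        return InherentRisk.CRITICAL  # e.g. medical diagnosis, autonomous vehicles
    if not makes_decisions:
        return InherentRisk.LOW       # suggestions a human reviews, e.g. spell checker
    if affects_people and not human_oversight:
        return InherentRisk.HIGH      # e.g. autonomous loan approvals, hiring
    return InherentRisk.MEDIUM        # automated decisions with oversight, e.g. fraud alerts


fraud_alerts = inherent_risk(makes_decisions=True, human_oversight=True,
                             affects_people=True, safety_or_legally_binding=False)
print(fraud_alerts.value)  # Medium
```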

Data Sensitivity Risk

Combine data classification with volume and retention. A system processing millions of SSNs for years is riskier than one processing hundreds of email addresses temporarily.

NIST SP 800-53 provides control requirements based on data sensitivity levels.

Combined Risk

Don’t overcomplicate this. Use a simple matrix:

- High autonomy + sensitive data = High risk

- Low autonomy + public data = Low risk

- Everything else = Medium risk

Document your reasoning. ISO/IEC 23894:2023 provides holistic risk assessment methods.
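The three-rule matrix is simple enough to encode directly, which also documents the reasoning. A sketch, assuming “high autonomy” means an inherent risk of High or Critical and “sensitive data” means Confidential or Restricted; both mappings are our interpretation, not part of the matrix itself:

```python
def combined_risk(inherent: str, sensitivity: str) -> str:
    """Apply the three-rule matrix: High, Low, or Medium for everything else.

    Assumptions: high autonomy = inherent risk "High" or "Critical";
    sensitive data = "Confidential" or "Restricted".
    """
    high_autonomy = inherent in {"High", "Critical"}
    low_autonomy = inherent == "Low"
    sensitive = sensitivity in {"Confidential", "Restricted"}
    public = sensitivity == "Public"

    if high_autonomy and sensitive:
        return "High"
    if low_autonomy and public:
        return "Low"
    return "Medium"


print(combined_risk("High", "Restricted"))  # High
print(combined_risk("Low", "Public"))       # Low
print(combined_risk("Critical", "Public"))  # Medium
```

Applied literally, a Critical-tier system on public data lands at Medium, so you may want an explicit override that never rates a Critical system below High.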

Business & Compliance

Regulatory Impact

List every regulation that applies. EU AI Act, GDPR, HIPAA, industry-specific rules.

Miss one and enforcement actions get expensive. Your legal team will thank you for maintaining this list.

Security Requirements

TLS encryption? Access controls? Audit logging?

AI systems are attack targets. Model theft is real. Adversarial attacks happen. Document your defenses.

Explainability Level

Can you explain why the model made a specific decision? High/Medium/Low is fine.

Black box models in lending or hiring will get you in trouble. The EU AI Act requires transparency for high-risk applications.

Impact Assessment Completed?

Did you do a Data Protection Impact Assessment? An AI Impact Assessment?

Many regulations require these. “We forgot” doesn’t impress regulators.

Ethical Considerations

Beyond what’s legal, what’s right? Does this system respect user autonomy? Could it discriminate against vulnerable groups?

Legal compliance is the floor, not the ceiling. Document ethical reviews and decisions.

User / Stakeholder Impact

Who does this affect? Employees getting performance reviews? Customers getting loan decisions? Job applicants?

The same AI technology has different implications depending on who it affects.

KPIs & Metrics

Precision: 0.87. Recall: 0.76. Customer satisfaction: +12%. Processing time: -34%.

Without metrics, you’re guessing whether your AI works. With metrics, you know.
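Precision and recall come straight from confusion-matrix counts. A minimal sketch; the counts below are hypothetical and chosen for easy arithmetic, not the figures quoted above:

```python
def precision(tp: int, fp: int) -> float:
    """Share of positive predictions that were correct."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Share of actual positives the model caught."""
    return tp / (tp + fn)


# Hypothetical counts from a month of churn predictions.
tp, fp, fn = 90, 10, 30
print(f"precision={precision(tp, fp):.2f} recall={recall(tp, fn):.2f}")
# precision=0.90 recall=0.75
```

Recording the raw counts in the tracker, not just the ratios, lets a later reviewer recompute the metrics.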

Expected Benefits / ROI

Why did you build this? “Reduce manual review time by 50%” or “Increase conversion rate by 8%” or “Save $2M annually in operational costs.”

If you can’t articulate expected value, why are you building it?
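Measuring against the promise means computing the same metric twice: once from the expected figures in the tracker, once from actuals. A sketch using the simple ROI formula (benefit minus cost, divided by cost); all dollar amounts are hypothetical:

```python
def roi(benefit: float, cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (benefit - cost) / cost


# Hypothetical annual figures for one use case.
expected = roi(benefit=2_000_000, cost=500_000)  # what the tracker entry promised
measured = roi(benefit=1_200_000, cost=650_000)  # what was actually observed

print(f"expected {expected:.0%}, measured {measured:.0%}")  # expected 300%, measured 85%
if measured < expected:
    print("Below plan: flag for review")
```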

Oversight & Audit

Approval Status

Is this approved for production? In review? Rejected?

Deploying unapproved AI is how companies end up in headlines for all the wrong reasons.

Development Status

Planning? Testing? Production? Decommissioned?

Different stages need different oversight. Don’t apply production controls to a proof of concept.
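Treating Development Status as a small state machine stops an entry from jumping straight from Planning to Production. A sketch, assuming the four stages above; the set of allowed moves is our own choice and should follow your process:

```python
# Assumed lifecycle: forward one stage at a time, an optional step back from
# Testing to Planning, and any active stage can be decommissioned.
ALLOWED_TRANSITIONS = {
    "Planning": {"Testing", "Decommissioned"},
    "Testing": {"Planning", "Production", "Decommissioned"},
    "Production": {"Decommissioned"},
    "Decommissioned": set(),
}


def can_move(current: str, target: str) -> bool:
    """True if the tracker allows changing status from `current` to `target`."""
    return target in ALLOWED_TRANSITIONS.get(current, set())


print(can_move("Planning", "Testing"))     # True
print(can_move("Planning", "Production"))  # False
```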

Tool or Vendor Name

Using OpenAI’s API? AWS SageMaker? Document it.

Vendor dependencies create risks. What happens if they change their terms? Raise prices? Get hacked?

Audit History

When was it last reviewed? By whom? What did they find?

“Internal audit March 2024: Identified need for additional bias testing. Completed April 2024.” Shows you’re actively managing governance.

License

MIT? Apache? Proprietary? Commercial license from vendor?

Using GPL code in your proprietary system? That’s a problem. Using unlicensed code? Bigger problem.

Contracting

Master service agreement with cloud provider? Statement of work with consultants?

Contracts define who’s liable when things go wrong. You want to know this before things go wrong.

Last Updated

When did someone last verify this information is accurate?

Six-month-old information might be completely wrong. Set calendar reminders for regular updates.
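A scheduled staleness check on the Last Updated field can back up those reminders. This sketch assumes the tracker is loaded as a mapping from use case ID to last-updated date; the 90-day default mirrors a quarterly review cycle and is adjustable:

```python
from datetime import date, timedelta


def stale_entries(last_updated: dict[str, date], today: date,
                  max_age_days: int = 90) -> list[str]:
    """IDs whose 'Last Updated' date is older than max_age_days, sorted."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(uid for uid, updated in last_updated.items() if updated < cutoff)


# Hypothetical tracker snapshot.
tracker = {
    "UC-2024-001": date(2024, 1, 15),
    "UC-2024-002": date(2024, 5, 1),
    "UC-2024-003": date(2023, 11, 30),
}
print(stale_entries(tracker, today=date(2024, 6, 1)))                    # ['UC-2024-001', 'UC-2024-003']
print(stale_entries(tracker, today=date(2024, 6, 1), max_age_days=180))  # ['UC-2024-003']
```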

Governance Contacts

Who’s responsible for oversight? Include backups.

“Jane Smith (primary), John Doe (backup), Lisa Park (escalation)” beats “AI Ethics Committee” when you need decisions fast.

Making This Actually Work

An AI Use Case Tracker is only useful if it’s accurate and current. Some tips:

Start small. Don’t try to document 200 systems on day one. Pick your highest-risk systems and work down.

Use what you have. Got a ServiceNow instance? A SharePoint list? Even Excel works. Fancy tools can come later.
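If Excel is where you start, a CSV with one column per field is enough. This sketch writes the 32 field names from this article as headers using Python’s standard `csv` module; the example row and owner address are hypothetical, and unfilled fields are left blank:

```python
import csv
import io

# Column headers: the 32 fields described above, in order.
FIELDS = [
    "Use Case ID", "Use Case Name", "Business Function", "Owner(s) and Email", "Objective",
    "AI Type", "Model Type", "Model Version",
    "Data Source", "Data Sensitivity", "Data Governance / Lineage", "Retention Period",
    "Bias & Fairness Concerns", "AI Inherent Risk", "Data Sensitivity Risk", "Combined Risk",
    "Regulatory Impact", "Security Requirements", "Explainability Level",
    "Impact Assessment Completed?", "Ethical Considerations", "User / Stakeholder Impact",
    "KPIs & Metrics", "Expected Benefits / ROI",
    "Approval Status", "Development Status", "Tool or Vendor Name", "Audit History",
    "License", "Contracting", "Last Updated", "Governance Contacts",
]

# In-memory buffer for the demo; use open("ai_use_cases.csv", "w", newline="") for a file.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=FIELDS, restval="")
writer.writeheader()
writer.writerow({
    "Use Case ID": "UC-2024-001",
    "Use Case Name": "Customer Churn Prediction",
    "Owner(s) and Email": "Jane Smith <jane.smith@example.com>",  # hypothetical owner
    "Development Status": "Planning",
})
print(buffer.getvalue())
```

The resulting file opens directly in Excel or imports into a SharePoint list, so moving to a fancier tool later doesn’t mean retyping anything.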

Make it mandatory. No production deployment without tracker documentation. Period.
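“Mandatory” is easiest to enforce as an automated gate in the deployment pipeline. A sketch, assuming tracker entries arrive as dictionaries keyed by field name; the list of required fields is an assumption to adjust to your own policy:

```python
# Assumed minimum fields for go-live; adjust to your own policy.
REQUIRED_FOR_PRODUCTION = (
    "Use Case ID", "Owner(s) and Email", "Objective",
    "Model Version", "Data Sensitivity", "Combined Risk",
)


def deployment_blockers(entry: dict[str, str]) -> list[str]:
    """Reasons an entry can't go to production; an empty list means it's clear."""
    blockers = [
        f"missing: {field}"
        for field in REQUIRED_FOR_PRODUCTION
        if not entry.get(field, "").strip()
    ]
    if entry.get("Approval Status") != "Approved":
        blockers.append("Approval Status is not 'Approved'")
    return blockers


entry = {
    "Use Case ID": "UC-2024-001", "Owner(s) and Email": "jane.smith@example.com",
    "Objective": "Reduce customer churn by 10%", "Model Version": "1.2.4",
    "Data Sensitivity": "Confidential", "Combined Risk": "High",
    "Approval Status": "In Review",
}
print(deployment_blockers(entry))  # ["Approval Status is not 'Approved'"]
```

A CI job can fail the release whenever the returned list is non-empty, which turns “Period.” into something the pipeline actually checks.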

Review regularly. Quarterly reviews catch drift before it becomes a problem.

Keep it readable. If legal, technical, and business teams can’t understand it, simplify.

The Bottom Line

AI governance isn’t optional. Regulators are paying attention. Customers are asking questions. Your board wants answers.

A comprehensive AI Use Case Tracker gives you those answers. It shows regulators you’re serious about compliance. It helps you catch risks before they explode. It proves your AI investments actually pay off.

Most importantly? It means when something goes wrong (and something always goes wrong), you know exactly what happened, who’s responsible, and how to fix it.

That’s not corporate transformation or revolutionary thinking. It’s just good management. And in the world of AI, good management is surprisingly rare.

[1] OECD (2022), “OECD Framework for the Classification of AI systems”, OECD Digital Economy Papers, No. 323, OECD Publishing, Paris, https://doi.org/10.1787/cb6d9eca-en.