Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP


Safe AI Usage: The Complete Workplace Guide

Scope: This guide covers Safe AI Usage by knowledge workers in typical business applications (content creation, analysis, customer support). Organizations developing high-risk AI systems or subject to specific regulatory requirements will need compliance measures beyond this Safe AI Usage framework.

Many workers assume AI is a complex technology that requires special skills. In practice, Safe AI Usage is mostly about avoiding basic mistakes that waste time, produce unreliable answers, or expose sensitive data. After observing workplace AI implementations across various industries, we’ve identified common patterns that separate effective Safe AI Usage from problematic approaches.

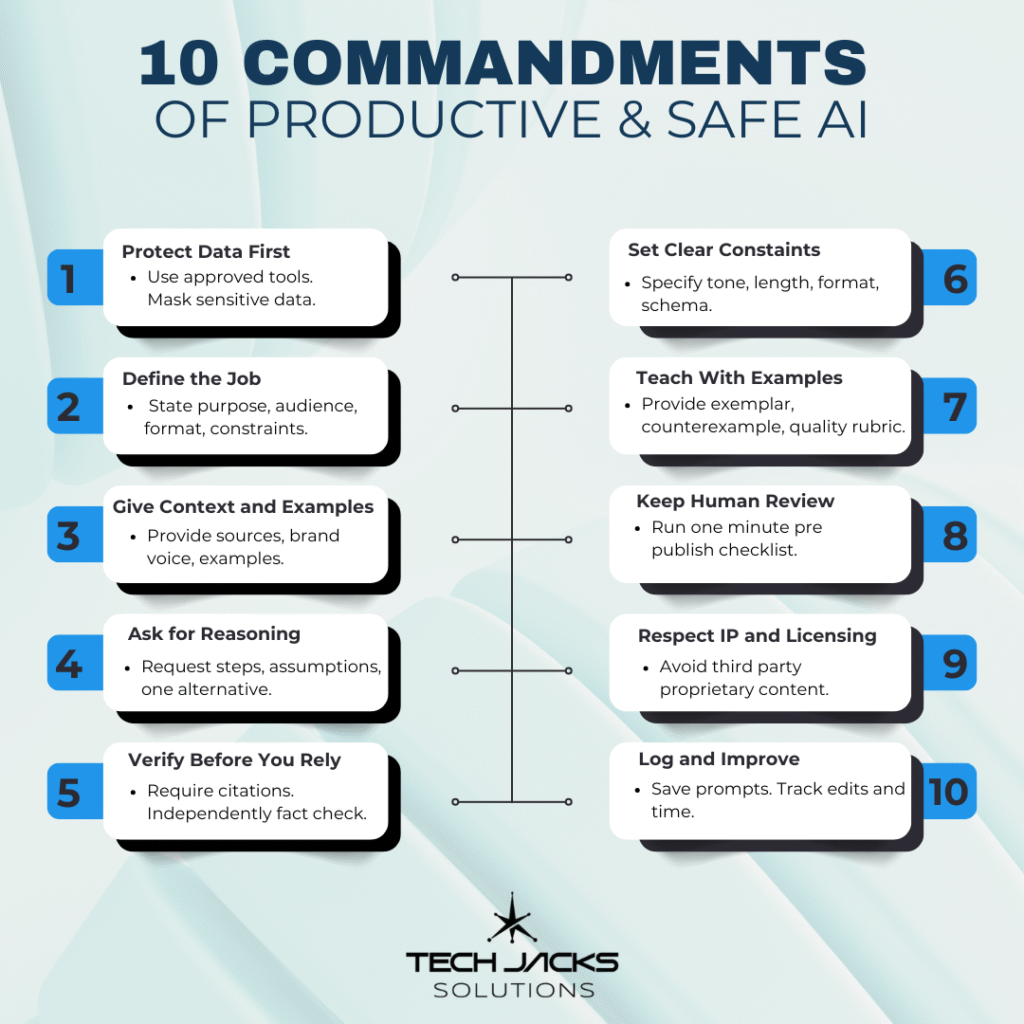

The 10 Commandments below aren’t theoretical frameworks. They’re practical Safe AI Usage habits developed from documented workplace experiences and established data management practices. Teams applying these Safe AI Usage principles consistently report fewer output errors, reduced rework time, and better alignment with organizational policies. This AI Training approach works for regular employees who need reliable results without deep technical expertise.

The 10 Commandments of Safe AI Usage

1) Protect Data First

Why it matters: Effective Safe AI Usage begins with data protection. Data exposure, privacy violations, and unauthorized access create immediate liability and erode stakeholder trust.

Use case example: A sales manager needs help drafting a proposal containing customer pricing and contact details. Instead of using a public AI tool with this sensitive information, they use their organization’s approved AI platform and replace specific client names and prices with generic placeholders like “[CLIENT_NAME]” and “[PRICE_RANGE]”. The approved tool provides session logging and data loss prevention controls. The resulting proposal draft is useful while keeping confidential information secure.

How to apply:

- Use only organization-approved AI tools for work tasks

- Replace or mask names, identifiers, and confidential data before input

- Store AI-generated drafts in approved repositories, not personal storage

- Verify your tool’s data handling practices match your organization’s requirements
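The masking step can be sketched in a few lines of Python. This is a minimal illustration that assumes you already know which client names appear in the draft and that prices are written as dollar amounts. `mask_text` and its patterns are hypothetical. Simple pattern matching like this does not replace the data loss prevention controls of an approved platform.

```python
import re

# Dollar amounts such as $48,000 or $45,500.00 (assumed price format).
PRICE_PATTERN = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")


def mask_text(text: str, client_names: list[str]) -> str:
    """Replace known client names and dollar amounts with generic placeholders.

    Hypothetical helper for illustration only; production redaction should use
    your organization's approved DLP tooling.
    """
    masked = text
    # Mask longer names first so "Acme Corp" is replaced before "Acme".
    for name in sorted(client_names, key=len, reverse=True):
        masked = re.sub(re.escape(name), "[CLIENT_NAME]", masked, flags=re.IGNORECASE)
    return PRICE_PATTERN.sub("[PRICE_RANGE]", masked)


draft = "Proposal for Acme Corp: annual license at $48,000, renewal at $45,500.00."
print(mask_text(draft, ["Acme Corp"]))
# → Proposal for [CLIENT_NAME]: annual license at [PRICE_RANGE], renewal at [PRICE_RANGE].
```

Any name the list does not contain will pass through unmasked, so a person should still review the sanitized text before it goes into the tool.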

Common pitfalls: Using consumer AI tools for business data, inputting complete datasets without sanitization, saving prompts containing unmasked sensitive information.

Measurement approach: Track the percentage of AI tasks completed with approved tools and data handling protocols.

Regulatory context: Organizations subject to GDPR, CCPA, or industry-specific regulations should ensure AI tool usage aligns with existing data protection requirements.

2) Define Clear Objectives and Success Criteria

Why it matters: Specific instructions reduce iteration cycles and improve output quality on the first attempt.

Use case example: A customer support lead needs an email template for service outages. They specify: “Draft a 200-word customer notification email about system downtime. Tone: professional but empathetic. Must include: acknowledgment of issue, estimated resolution timeframe, link to status page, contact information for urgent needs. Avoid: technical jargon, speculative timelines, promises we can’t guarantee.” The resulting draft requires minimal editing and maintains consistent messaging standards.

How to apply:

- State the specific purpose in one clear sentence

- Define audience, tone, length, and required format

- Include 3-5 specific success criteria

- Specify what to avoid or exclude
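A reusable structure makes every request state the elements above in the same way. This is a minimal Python sketch based on the customer support example. `PromptSpec` is a hypothetical helper. The audience and format values are illustrative assumptions added to complete the example.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the elements this commandment says to state."""
    purpose: str
    audience: str
    tone: str
    length: str
    output_format: str
    success_criteria: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Enforce the guide's rule of thumb of 3-5 specific success criteria.
        if not 3 <= len(self.success_criteria) <= 5:
            raise ValueError("Provide 3-5 success criteria")
        lines = [
            self.purpose,
            f"Audience: {self.audience}",
            f"Tone: {self.tone}",
            f"Length: {self.length}",
            f"Format: {self.output_format}",
            "Must include: " + "; ".join(self.success_criteria),
        ]
        if self.avoid:
            lines.append("Avoid: " + "; ".join(self.avoid))
        return "\n".join(lines)


spec = PromptSpec(
    purpose="Draft a customer notification email about system downtime.",
    audience="Customers affected by the outage",          # assumed
    tone="Professional but empathetic",
    length="About 200 words",
    output_format="Plain-text email with a subject line",  # assumed
    success_criteria=[
        "acknowledgment of the issue",
        "estimated resolution timeframe",
        "link to status page",
        "contact information for urgent needs",
    ],
    avoid=["technical jargon", "speculative timelines", "promises we can't guarantee"],
)
print(spec.render())
```

A saved structure like this also gives the team a shared starting point. That consistency is what the later commandments on templates and documentation build on.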

Common pitfalls: Vague requests like “improve this document,” omitting audience specification, failing to set length parameters.

Measurement approach: Count revision cycles needed before output meets requirements.

3) Provide Structured Context and Examples

Why it matters: AI systems pattern-match against the inputs they receive. Quality context is essential for Safe AI Usage because it steers outputs toward organizational standards.

Use case example: A marketing team member requests blog post variants and provides: current brand voice guidelines, two high-performing previous posts, a FAQ about the product, and a list of prohibited claims from legal review. They specify: “Follow the tone from Post A, avoid the technical density of Post B, and don’t make any claims not supported in the FAQ.” The output maintains brand consistency and avoids compliance issues.

How to apply:

- Include relevant source materials and brand guidelines

- Provide one strong example to emulate and one to avoid

- List prohibited terms, claims, or approaches

- Update context materials regularly to prevent outdated information

Common pitfalls: Requesting creativity without constraints, linking to outdated materials, assuming AI knows current brand standards.

Measurement approach: Score outputs for brand compliance during the review process.

4) Request Reasoning for High-Stakes Decisions

Why it matters: Understanding AI reasoning helps identify flawed assumptions and reveals alternative approaches.

Use case example: A product manager evaluating two customer onboarding approaches asks: “Compare these options with step-by-step reasoning. List key assumptions for each approach. Provide one alternative method we haven’t considered. Identify what additional data would strengthen this analysis.” The response reveals that Option A assumes integration capabilities not yet built, preventing a potentially costly implementation mistake.

How to apply:

- Request explicit reasoning and underlying assumptions

- Ask for at least one alternative approach

- Identify information gaps or dependencies

- Compare options against known constraints before deciding

Common pitfalls: Accepting first recommendations without analysis, skipping assumption validation, failing to consider alternatives.

Measurement approach: Track high-impact decisions where reasoning was documented versus those where it wasn’t.

5) Verify Before Implementation

Why it matters: AI outputs can contain inaccuracies, outdated information, or fabricated details that create reputational and legal risks.

Use case example: An analyst creating a market research summary requests citations for all statistics and claims. They verify each source independently or replace uncertain information with verified alternatives. Claims that can’t be substantiated are removed or clearly marked as estimates. The final report passes leadership review because all assertions can be traced to reliable sources.

How to apply:

- Require specific citations for factual claims

- Independently verify statistics, quotes, and references

- Remove or clearly label any unverifiable information

- Establish verification standards appropriate to content importance

Common pitfalls: Treating AI output as authoritative without checking, using broken or questionable links, accepting fabricated sources.

Measurement approach: Calculate the percentage of AI-generated claims that pass verification on review.
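This measurement is simple arithmetic: the number of claims that survive independent verification, divided by the number of claims reviewed. The minimal sketch below uses a hypothetical `Claim` record that a reviewer fills in by hand. The claim texts and sources are placeholders, not real data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    text: str
    source: Optional[str]  # citation the AI supplied, if any
    verified: bool         # True only after someone checks the source independently


def verification_pass_rate(claims: list[Claim]) -> float:
    """Percentage of claims that passed independent verification."""
    if not claims:
        return 0.0
    return 100.0 * sum(c.verified for c in claims) / len(claims)


def claims_to_remove_or_label(claims: list[Claim]) -> list[Claim]:
    """Claims that failed verification: remove them or mark them as estimates."""
    return [c for c in claims if not c.verified]


review = [
    Claim("Claim 1", "Source A", verified=True),
    Claim("Claim 2", "Source B", verified=True),
    Claim("Claim 3", None, verified=False),  # no citation supplied
    Claim("Claim 4", "Source C", verified=True),
]
print(verification_pass_rate(review))                        # 75.0
print([c.text for c in claims_to_remove_or_label(review)])   # ['Claim 3']
```

A supplied citation does not count as verification on its own. Only the reviewer's check sets `verified`, because AI tools can produce plausible-looking sources that do not exist.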

6) Set Output Constraints Matching Your Workflow

Why it matters: Specific constraints produce drafts that integrate seamlessly with existing templates and processes.

Use case example: An HR specialist needs job descriptions that fit directly into their applicant tracking system. They specify: “8th-grade reading level, exactly 5 bullet points for responsibilities, salary range in [RANGE] format, location as [CITY, STATE], required qualifications in numbered list.” The output drops into their system template without reformatting work.

How to apply:

- Specify reading level, tone, and length requirements

- Provide exact formatting specifications or templates

- Request output that’s ready for direct use in your systems

- Include technical constraints (character limits, required fields)
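You can also check constraints like these before a draft goes into your system. This minimal sketch assumes the AI returns responsibilities as lines beginning with "- " and the location as "City, ST". `check_job_description` and the 2,000-character limit are hypothetical stand-ins for whatever your applicant tracking system actually requires.

```python
import re

# Assumed location convention: city name, comma, space, two-letter state code.
LOCATION_PATTERN = re.compile(r"[A-Z][A-Za-z .'-]*, [A-Z]{2}")


def check_job_description(responsibilities: str, location: str,
                          max_chars: int = 2000) -> list[str]:
    """List constraint violations; an empty list means the draft is ready to paste."""
    problems = []
    bullets = [line for line in responsibilities.splitlines()
               if line.lstrip().startswith("- ")]
    if len(bullets) != 5:
        problems.append(f"expected exactly 5 responsibility bullets, found {len(bullets)}")
    if not LOCATION_PATTERN.fullmatch(location.strip()):
        problems.append(f"location {location!r} is not in 'City, ST' format")
    if len(responsibilities) > max_chars:
        problems.append(f"responsibilities exceed {max_chars} characters")
    return problems


draft = "\n".join(f"- Responsibility {n}" for n in range(1, 6))
print(check_job_description(draft, "Austin, TX"))      # []
print(check_job_description(draft, "Austin, Texas"))
# ["location 'Austin, Texas' is not in 'City, ST' format"]
```

The function returns a list of problems instead of failing on the first one. This lets you report every fix needed in a single follow-up prompt.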

Common pitfalls: Accepting free-form outputs when structured formats are needed, unclear formatting requirements.

Measurement approach: Time spent reformatting AI outputs before use.

7) Use Templates and Examples to Ensure Consistency

Why it matters: Consistent examples make AI Training more effective and reduce variation across team members. This Safe AI Usage approach helps maintain organizational standards.

Use case example: A legal operations team creates a contract summary template with examples of effective and poor summaries, plus scoring criteria. Team members reference these materials when requesting contract analysis. Results become more uniform and require fewer review cycles because everyone works from the same quality standards.

How to apply:

- Maintain current examples for each document type

- Provide clear quality criteria or rubrics

- Ask AI to explain significant deviations from provided examples

- Regularly update examples to reflect evolving standards
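A rubric is easier to apply consistently when each criterion carries an explicit weight. This minimal sketch assumes reviewers rate each criterion from 0 to 4. The criteria, weights, and `rubric_score` function are hypothetical examples for a contract summary, not a recommended standard.

```python
# Hypothetical contract-summary rubric; weights sum to 1.0.
RUBRIC = {
    "identifies parties and term": 0.30,
    "lists obligations and deadlines": 0.30,
    "flags non-standard clauses": 0.25,
    "uses plain language": 0.15,
}


def rubric_score(ratings: dict[str, int], max_rating: int = 4) -> float:
    """Weighted score from 0 to 100, given a 0..max_rating rating per criterion."""
    missing = RUBRIC.keys() - ratings.keys()
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    total = sum(weight * ratings[name] / max_rating for name, weight in RUBRIC.items())
    return round(100 * total, 1)


ratings = {
    "identifies parties and term": 4,
    "lists obligations and deadlines": 4,
    "flags non-standard clauses": 2,  # partially met
    "uses plain language": 4,
}
print(rubric_score(ratings))  # 87.5
```

Rejecting incomplete ratings keeps scores comparable across reviewers. Otherwise a skipped criterion would silently lower one person's results.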

Common pitfalls: Using outdated examples, inconsistent templates across team members, not updating materials as standards evolve.

Measurement approach: Count review cycles required per document type over time.

8) Implement Systematic Quality Checks

Why it matters: Standardized review processes are fundamental to Safe AI Usage because they catch common errors before content reaches stakeholders.

Use case example: Before publishing knowledge base articles, a technical writing team uses a consistent audit: verify all links function, confirm technical accuracy of procedures, check tone against style guide, review for potential bias, ensure no confidential information is included, validate formatting consistency. This 2-minute process catches errors that would otherwise require time-consuming corrections after publication.

How to apply:

- Create a standardized checklist for your content type

- Include verification of links, data accuracy, tone, bias, confidentiality, formatting

- Complete checks before any external use or publication

- Track common error types to refine checklist over time
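Tracking failures by type is what lets the checklist improve over time. This minimal sketch assumes a reviewer records a pass or fail result for each of the six checks in the knowledge base example. `run_checklist` is a hypothetical helper, and a shared spreadsheet can serve the same purpose.

```python
from collections import Counter

# Checks taken from the knowledge base example; adjust for your content type.
CHECKLIST = (
    "links function",
    "procedures technically accurate",
    "tone matches style guide",
    "reviewed for potential bias",
    "no confidential information",
    "formatting consistent",
)


def run_checklist(results: dict[str, bool], error_log: Counter) -> bool:
    """Return True if every check passed; record each failed check in error_log."""
    missing = [item for item in CHECKLIST if item not in results]
    if missing:
        raise ValueError(f"checklist incomplete: {missing}")
    failures = [item for item in CHECKLIST if not results[item]]
    error_log.update(failures)
    return not failures


error_log = Counter()
article_a = {item: True for item in CHECKLIST}
article_a["links function"] = False  # reviewer found a broken link
article_b = {item: True for item in CHECKLIST}

outcomes = [run_checklist(article_a, error_log), run_checklist(article_b, error_log)]
print(100 * sum(outcomes) / len(outcomes))  # 50.0 (first-attempt pass rate)
print(error_log.most_common(1))             # [('links function', 1)]
```

Refusing an incomplete checklist mirrors the pitfall of skipping reviews under time pressure: a check cannot pass by being left out.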

Common pitfalls: Skipping reviews under time pressure, inconsistent review standards, not updating checklists based on discovered issues.

Measurement approach: Percentage of outputs passing quality review on the first attempt.

9) Respect Intellectual Property and Licensing

Why it matters: Copyright violations and licensing issues create legal exposure and damage business relationships.

Use case example: A creative team requesting marketing assets specifies: “Generate original visual concepts, ensure all elements are created new rather than derivative, provide usage terms summary, note any attribution requirements.” They avoid incorporating existing copyrighted materials and maintain clear records of asset origins for legal review.

How to apply:

- Request original content creation rather than derivative works

- Specify licensing requirements for your intended use

- Avoid inputting third-party copyrighted materials you don’t own

- Document content origins and usage rights for compliance tracking

Common pitfalls: Using copyrighted materials without permission, unclear licensing status, inadequate usage documentation.

Measurement approach: Track intellectual property issues or takedown requests related to AI-generated content.

10) Document and Improve Systematically

Why it matters: Systematic documentation enables process improvement and knowledge sharing across teams.

Use case example: A content marketing team saves effective prompts, AI model versions, source materials, edit counts, and time invested for high-value projects. A monthly review identifies the most effective approaches. After three months, they standardize on proven prompt templates and reduce average content creation time by 35% while maintaining quality standards.

How to apply:

- Save successful prompts, versions, and source materials for important work

- Track editing time and iterations required

- Build a library of proven approaches for common tasks

- Review effectiveness data regularly to identify improvements
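A prompt library does not need special tooling to start. This minimal sketch assumes each record captures the items from the content marketing example. `PromptRecord`, `average_minutes`, and `best_prompt` are hypothetical names. The sample values are made up only to show the calculation.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class PromptRecord:
    task_type: str
    prompt: str
    model_version: str           # identifier reported by your approved AI tool
    edit_rounds: int             # revisions needed before approval
    minutes_to_approval: float   # time from first prompt to approved output
    sources: list[str] = field(default_factory=list)


def average_minutes(records: list[PromptRecord], task_type: str) -> Optional[float]:
    """Mean time to approval for one task type, or None if nothing is logged."""
    times = [r.minutes_to_approval for r in records if r.task_type == task_type]
    return mean(times) if times else None


def best_prompt(records: list[PromptRecord], task_type: str) -> Optional[PromptRecord]:
    """Record with the fewest edit rounds, breaking ties by time to approval."""
    candidates = [r for r in records if r.task_type == task_type]
    return min(candidates, key=lambda r: (r.edit_rounds, r.minutes_to_approval), default=None)


library = [
    PromptRecord("blog post", "Prompt v1", "model-a", edit_rounds=3, minutes_to_approval=90.0),
    PromptRecord("blog post", "Prompt v2", "model-a", edit_rounds=1, minutes_to_approval=60.0,
                 sources=["brand-voice-guide", "product-faq"]),
]
print(average_minutes(library, "blog post"))     # 75.0
print(best_prompt(library, "blog post").prompt)  # Prompt v2
```

Recording the model version alongside each prompt matters because the same prompt can behave differently after a tool update. Comparing averages month over month gives the trend this commandment's measurement calls for.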

Common pitfalls: No documentation system, saving everything without organization, not analyzing effectiveness data for insights.

Measurement approach: Time from initial prompt to approved final output, trending over time.

Implementation Framework

Start Small: Choose 2-3 commandments most relevant to your immediate needs rather than implementing all Safe AI Usage practices simultaneously.

Team Coordination: Ensure consistent Safe AI Usage approaches across team members to maximize effectiveness and reduce confusion.

Regular Review: Assess what’s working in your AI Training program and adjust approaches based on actual results rather than assumptions.

Documentation: Keep records of effective approaches to build organizational AI Training knowledge over time.

The Compound Effect of Safe AI Usage

Poor data quality creates cascading problems in any AI Training program. When teams skip verification steps, accept outputs without validation, or ignore data protection practices, they risk more than immediate project failure. They also contribute to information pollution that affects future AI training and organizational knowledge systems.

MIT research on machine learning applications confirms that AI model quality directly correlates with training data quality. A 2024 study published in Nature showed that when AI models are trained on AI-generated content without quality controls, output quality degrades progressively. University of Oxford researcher Ilia Shumailov, the study’s lead author, describes this as “model collapse,” where systems eventually produce incoherent results.

Organizations following systematic Safe AI Usage practices create positive feedback loops. Verified outputs become reliable knowledge base content. Consistent AI Training templates reduce training time for new team members. Documented effective approaches accelerate similar future projects.

Building Organizational AI Competency

Systematic approaches transform AI from experimental technology into reliable business capability. When teams use consistent templates, apply uniform verification standards, and follow shared quality processes, organizations build institutional knowledge that compounds over time.

These practices align with emerging regulatory frameworks. The European Union’s AI Act requires systematic data governance for high-risk systems. ISO/IEC 42001, a voluntary standard for AI management systems, emphasizes systematic approaches and measurable objectives. The NIST AI Risk Management Framework likewise stresses governance, measurement, and continuous improvement.

Important note: These regulatory frameworks primarily address organizations developing or deploying high-risk AI systems. Most workplace AI usage described in this guide falls outside these regulatory requirements, but following similar systematic approaches provides business benefits and prepares organizations for evolving compliance landscapes.

Teams that master systematic Safe AI Usage position themselves for advanced applications. Understanding prompt engineering, output validation, and quality measurement creates foundations for custom tool development, automated workflows, and specialized AI implementations.

Organizations that establish systematic Safe AI Usage approaches now avoid common pitfalls: inconsistent results across teams, repeated mistakes, inefficient processes, and quality problems that require expensive correction later. The investment in AI Training and systematic practices pays dividends as AI capabilities expand and organizational needs evolve.

For additional resources on Safe AI Usage and AI Training implementation strategies, consult your organization’s IT and compliance teams for guidance specific to your industry and regulatory requirements.


BC

August 19, 2025

Excellent framework, Derrick – especially the systematic approach to Safe AI Usage.

Your 10 Commandments really hit the mark on practical implementation. I’ve been running local AI setups with LM Studio and Ollama, and your emphasis on data protection (Commandment #1) becomes even more critical when you see how much control you actually have with local deployments.

The verification principle (#5) resonates strongly with my experience. When running local models, you can see precisely how different prompts and contexts affect output quality. It’s eye-opening to see how much “hallucination” can be reduced with proper prompt engineering and systematic verification, as you outline.

Your point about the compound effect is spot-on. I’ve noticed that maintaining consistent templates and quality checks with local AI creates much more reliable outputs. The ability to iterate quickly with local models while following your systematic approach has been game-changing for content creation and analysis workflows.

The regulatory compliance angle is valuable for organizations hesitant about AI adoption. Having a systematic documentation framework, as you describe, makes it much easier to show due diligence when compliance questions arise.

This should be required reading for any team implementing AI workflows. The practical examples make it actionable rather than just theoretical – exactly what most organizations need right now.

Tech Jacks Solutions

August 23, 2025

Always appreciate it! Thanks