Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CSSP

Last updated June 2nd, 2025

Article 2 in the Executive AI Leadership Series


Building Your AI Governance Framework: From Strategy to Implementation

The Reality Check

You’ve made the case. The board understands AI governance isn’t optional. But what matters is what happens next: actually building something that works.

It’s one thing to recognize that governance can accelerate innovation while managing risk. It’s another to create a framework that doesn’t slow everything down.

Consider the numbers: 92% of early AI adopters report positive ROI from their investments (Snowflake Research, 2025)¹. Yet 74% of companies still can’t move beyond pilot programs (Boston Consulting Group, 2024)².

What’s the difference? The successful ones build governance that enables speed, not bureaucracy.

The framework you build today determines whether you join the 26% that scale AI successfully or stay stuck in pilot purgatory.

Picking Your Governance Style

There’s no universal AI governance playbook. What works at a bank won’t work at a startup. Here are the three approaches that actually work in practice.

The Central Command Approach

Large financial institutions often run all AI decisions through one office. Every AI project, every policy change, every risk assessment goes through this central team. It’s not democratic, but it works for highly regulated environments.

When you’re managing trillions in assets, you can’t have different business units making conflicting AI decisions. A central office has final say on everything.

If the retail banking team wants to deploy a new credit scoring model, the central AI office reviews it first. Same for investment banking’s trading algorithms.

This approach makes sense if you’re in a regulated industry. Banks, hospitals, power companies. Places where an AI mistake can bankrupt you or hurt people.

You’ll need serious resources though. Setup requires significant investment in both personnel and infrastructure. That translates to multiple full-time people whose only job is AI governance. The technology costs are typically smaller than personnel costs.

This works when: You’re highly regulated. You have a top-down culture. You’re just starting with AI and need tight control.

This fails when: You’re moving fast and breaking things. Different business units need different AI approaches. You value innovation over control.

The Distributed Network Approach

Some global organizations take the opposite approach. They give business units their own AI governance capabilities while setting enterprise-wide principles. This enables rapid innovation while maintaining oversight standards.

Each business unit handles its own AI governance for routine deployments. The central team sets standards and handles the risky stuff. It’s like having traffic rules but letting people choose their own routes.

This works when you have autonomous business units that know their own problems better than headquarters does. Your retail division understands customer service AI differently than your risk management team does. Let them govern accordingly.

The setup costs are typically lower than centralized approaches. You’ll invest in framework development, then ongoing support for each business unit. For organizations with multiple major divisions, this approach scales more efficiently.

This works when: You have strong business units. Different divisions need different AI approaches. You trust local teams to make good decisions.

This fails when: You need consistent policies across all units. You’re in a heavily regulated industry. Local teams lack AI expertise.

The Hybrid Approach

Most companies end up here. Central team sets enterprise standards and manages high-risk applications. Business units handle operational governance for routine AI deployments.

It’s a compromise, but compromises often work. For example, the central team might have three people focused on strategy and risk, while each business unit has a part-time person handling day-to-day AI governance.

You’ll spend upwards of $40K annually on integrated platforms that connect central oversight with local operations.

This works for: Most mid-to-large companies. Organizations with mixed AI maturity across functions. Companies needing both innovation speed and risk management.

💡 Executive Takeaway: Choose centralized for maximum control and regulatory compliance, distributed for innovation speed, or hybrid for a balanced approach. Your governance model should match your industry risk profile and organizational culture.

Framework Alignment with Global Standards

Before diving into implementation, you need to understand how your governance maps to regulatory requirements. Boards and auditors will ask.

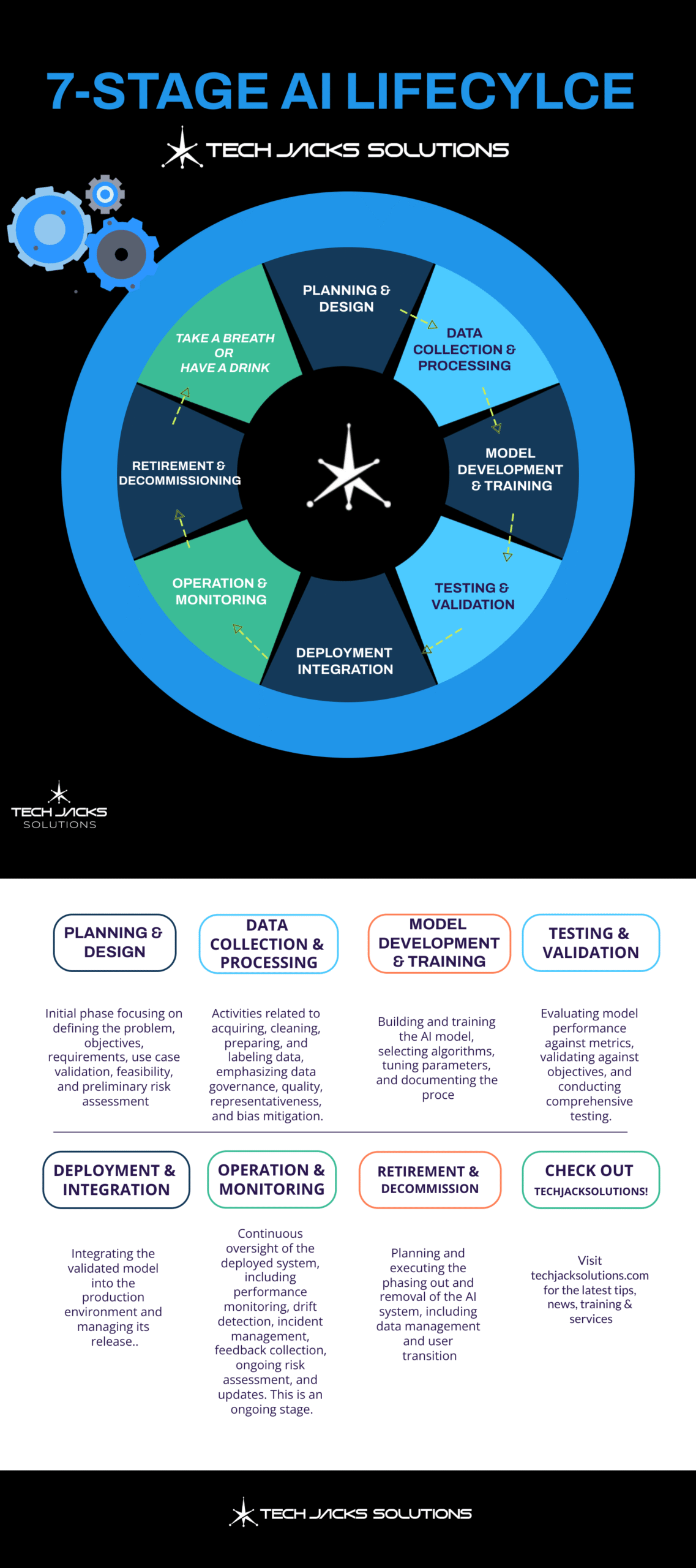

Project Stages vs. NIST AI RMF Functions

Important Note: Our 8-stage implementation framework represents project phases for building governance, while NIST AI RMF describes ongoing functional capabilities. Here’s how they align:

| Implementation Stage | NIST AI RMF Function | EU AI Act Article | ISO 42001 Clause |
|---|---|---|---|
| Stage 1: Establish & Mandate | Map/Govern | Art. 6-7, 17 | 4.1-5.3 |
| Stage 2: Committee Composition | Govern | Art. 17 | 5.2-7.1 |
| Stage 3: Framework & Policies | Govern | Art. 9 | 6.1-6.2 |
| Stage 4: Risk Management | Map/Manage | Art. 9-15 | 6.1-8.1 |
| Stage 5: Evaluation & Metrics | Measure | Art. 61 | 9.1-9.3 |
| Stage 6: Audit & Monitoring | Measure/Manage | Art. 72 | 9.2-10.2 |
| Stage 7: Specific Elements | Manage | Art. 16 | 8.1-8.3 |
| Stage 8: Continuous Improvement | All Functions | Art. 61 | 10.1-10.3 |

Note: Some NIST functions map to multiple stages because the AI RMF describes ongoing operational capabilities while our stages represent sequential implementation phases. For example, “Map” spans Stages 1 and 4 as you discover AI systems and assess their risks.
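For teams that keep this mapping next to their audit evidence, the stage-to-function column can be held as data and inverted to see which stages touch each NIST function. This is a minimal sketch, not part of the framework itself: `STAGE_TO_NIST` and `stages_by_function` are hypothetical names, and Stage 8's "All Functions" is expanded here into the four AI RMF functions.

```python
from collections import defaultdict

# The four NIST AI RMF core functions.
NIST_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")

# Stage number -> NIST AI RMF functions, transcribed from the table above.
# Stage 8 ("All Functions") is expanded to all four (an assumption of this sketch).
STAGE_TO_NIST = {
    1: ("Map", "Govern"),
    2: ("Govern",),
    3: ("Govern",),
    4: ("Map", "Manage"),
    5: ("Measure",),
    6: ("Measure", "Manage"),
    7: ("Manage",),
    8: NIST_FUNCTIONS,
}


def stages_by_function(mapping):
    """Invert the mapping: for each NIST function, list the stages that touch it."""
    inverted = defaultdict(list)
    for stage, functions in sorted(mapping.items()):
        for function in functions:
            inverted[function].append(stage)
    return dict(inverted)


coverage = stages_by_function(STAGE_TO_NIST)
for function in NIST_FUNCTIONS:
    print(f"{function}: stages {coverage[function]}")
```

Read this way, "Map" shows up in Stages 1 and 4 (plus Stage 8), which matches the note above.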

💡 Executive Takeaway: This mapping demonstrates compliance coverage to auditors and boards. Use it to show how your governance investment addresses all major regulatory requirements simultaneously.

Implementation Strategy: Phased Approach

Don’t try to build everything at once. Too many companies attempt comprehensive governance frameworks and burn out their teams.

Phase 1: Foundation (Months 1-6)

Focus on Stages 1-2. Figure out what AI you have, establish basic governance structure, get clear on who’s responsible for what.

Organizations typically make a substantial investment during this phase. You’re building the foundation everything else sits on.

Phase 2: Risk Controls (Months 7-12)

Stages 3-5. Develop policies, implement risk management, create measurement systems.

This phase requires additional significant investment. This is where governance starts feeling real to employees.

Phase 3: Advanced Operations (Year 2+)

Stages 6-8. Advanced monitoring, specialized elements, continuous improvement.

Annual investment continues for ongoing operations and optimization. By now, governance should feel natural, not burdensome.

💡 Executive Takeaway: Phase your implementation to avoid organizational overwhelm. Each phase builds governance capability while delivering measurable value to justify continued investment.

The 8-Stage Implementation Framework

Here’s how to actually build governance that works. Eight stages, each with specific deliverables and realistic timelines.

Pre-Framework Planning: Figure Out What You Have

Most companies have no idea how much AI they’re actually using. Employees download ChatGPT, marketing uses AI writing tools, finance has automated forecasting models. IT discovers shadow AI everywhere.

Start here. You can’t govern what you don’t know about.

📋 Board Questions at This Stage:

- “How much AI are we actually using across the organization?”

- “What’s our exposure to high-risk AI systems?”

- “Which regulatory requirements apply to our AI usage?”

What You’ll Create:

- Complete AI system inventory across all business units

- Risk classification using EU AI Act categories (prohibited, high-risk, limited-risk, minimal-risk)

- Impact assessment for high-risk systems

- Stakeholder mapping for affected parties

- Regulatory scope analysis by jurisdiction
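One way to make the inventory and risk classification concrete is a simple record per AI system. The sketch below is illustrative: the `AISystem` fields, the `needs_enhanced_governance` helper, and the sample entries are all assumptions, and the tier on each sample row is a placeholder rather than a legal determination.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act categories named in the list above."""
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


@dataclass
class AISystem:
    """One row of the inventory. Field names are illustrative."""
    name: str
    business_unit: str
    owner: str
    risk_tier: RiskTier
    jurisdictions: tuple = ()


def needs_enhanced_governance(system):
    """Prohibited and high-risk systems get flagged for follow-up."""
    return system.risk_tier in (RiskTier.PROHIBITED, RiskTier.HIGH)


# Example entries are invented for illustration only.
inventory = [
    AISystem("resume-screener", "HR", "hr-ops", RiskTier.HIGH, ("EU", "US")),
    AISystem("support-chatbot", "Customer Service", "cx-team", RiskTier.LIMITED, ("US",)),
    AISystem("spam-filter", "IT", "it-sec", RiskTier.MINIMAL),
]

flagged = [s.name for s in inventory if needs_enhanced_governance(s)]
print(flagged)
```

Even a spreadsheet with these same columns works. The point is that every system has a named owner, a tier, and a list of jurisdictions before any policy is written.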

Acronym Guide:

- CAIO: Chief AI Officer

- CSA: Cloud Security Alliance

- GAO: Government Accountability Office

- NIST: National Institute of Standards and Technology

How Long It Takes: 6-8 weeks

Investment Required: Moderate investment for discovery tools, assessment activities, and documentation⁵

You’ll discover AI in places you didn’t expect. Marketing may be running several different AI tools, sales may be using AI for lead scoring, and HR may be using it for resume screening.

Success Metrics (Industry Benchmark Targets):

- Target: 100% AI system discovery and documentation completed

- Target: All high-risk systems flagged for enhanced governance

- Target: Regulatory requirements mapped for all jurisdictions

⁵ PwC. “AI Regulatory Compliance Cost Analysis.” 2024. Document reference: PwC-AI-Compliance-2024

📋 Board Questions at This Stage:

- “How confident are we in the completeness of our AI inventory?”

- “What governance gaps exist in our high-risk AI systems?”

- “When will we have full visibility into shadow AI usage?”

Stage 1: Get Executive Commitment

Governance without C-suite backing is just paperwork. You need formal authority, budget allocation, and visible executive sponsorship.

What You’ll Create:

- Governance Charter with board approval

- Executive Sponsor appointment (CAIO, CTO, or Chief Data Officer)

- Initial AI Use Case Inventory

- Resource allocation framework

- Organization-wide communication plan

How Long It Takes: 4-6 weeks

Investment Required: Moderate investment in charter development and organizational communication

The governance charter isn’t just a document. It’s your license to say no to bad AI projects and yes to good ones. Without it, you’re just making suggestions.

Success Metrics (Industry Benchmark Targets):

- Target: Formal board approval of governance charter

- Target: 100% participation from designated executive sponsors

- Target: AI inventory covering all business units

📋 Board Questions at This Stage:

- “Who has ultimate accountability for AI governance decisions?”

- “What authority does our governance charter provide?”

- “How does AI governance integrate with existing risk management?”

Stage 2: Build Your Governance Team

Now you need people who’ll actually do the work. Not a committee that meets monthly and accomplishes nothing. A working team with clear responsibilities and decision-making authority.

Essential Committees:

AI Governance Council (Makes Decisions):

- CEO or designated C-suite sponsor

- CTO/CIO (technology strategy)

- Chief Risk Officer (risk oversight)

- Chief Legal Officer (compliance)

- Business unit leaders (operational input)

AI Ethics Committee (Handles the Hard Questions):

- Ethics expert

- Data scientist with bias detection experience

- Legal counsel familiar with AI regulations

- Customer advocate

- External advisor

Technical Review Board (Ensures Quality):

- Senior data scientists and ML engineers

- Cybersecurity specialists

- Quality assurance leaders

- Infrastructure representatives

Cross-Functional Responsibilities Matrix:

| Activity | Executive Sponsor | AI Committee | Data Team | Legal/Compliance | IT/Security | Business Units |
|---|---|---|---|---|---|---|
| AI Strategy Definition | Accountable for outcomes | Does the work | Provides input | Provides input | Provides input | Gets informed |
| Risk Assessment | Accountable for outcomes | Does the work | Does the work | Does the work | Provides input | Gets informed |
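If the matrix grows beyond a couple of activities, a small consistency check can catch gaps before stakeholders sign off. The sketch below encodes the two rows above using conventional RACI letters. The rule it enforces (exactly one accountable role and at least one role doing the work per activity) is a common RACI convention assumed here, and `validate_raci` is a hypothetical helper.

```python
# RACI codes: R = does the work, A = accountable for outcomes,
# C = provides input, I = gets informed.
RACI = {
    "AI Strategy Definition": {
        "Executive Sponsor": "A",
        "AI Committee": "R",
        "Data Team": "C",
        "Legal/Compliance": "C",
        "IT/Security": "C",
        "Business Units": "I",
    },
    "Risk Assessment": {
        "Executive Sponsor": "A",
        "AI Committee": "R",
        "Data Team": "R",
        "Legal/Compliance": "R",
        "IT/Security": "C",
        "Business Units": "I",
    },
}


def validate_raci(matrix):
    """Return a list of problems: each activity needs exactly one A and at least one R."""
    problems = []
    for activity, roles in matrix.items():
        codes = list(roles.values())
        if codes.count("A") != 1:
            problems.append(f"{activity}: expected exactly one accountable role")
        if codes.count("R") == 0:
            problems.append(f"{activity}: nobody is assigned to do the work")
    return problems


print(validate_raci(RACI))
```

An empty list means every activity has a single accountable owner and at least one team doing the work.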

How Long It Takes: 3-4 weeks

Investment Required: Investment in setup, training, and documentation activities

Success Metrics (Industry Benchmark Targets):

- Target: All committee positions filled with qualified people

- Target: Responsibility matrix approved by all stakeholders

- Target: First committee meetings completed successfully

📋 Board Questions at This Stage:

- “Do we have the right expertise on our governance committees?”

- “How will conflicts between committees be resolved?”

- “What’s the escalation path for major AI decisions?”

Stage 3: Create Your AI Principles

This is where you define what “responsible AI” means for your company. Not generic principles copied from somewhere else. Specific guidelines your employees can actually follow.

Core Documents You’ll Need:

Responsible AI Policy:

- Ethics principles specific to your industry

- Bias prevention and mitigation requirements

- Human oversight protocols

- Privacy and data protection standards

AI Usage Guidelines for Employees:

- What AI tools they can use

- What’s prohibited

- Data handling restrictions

- When they need approval

Technical Standards:

- Model Cards for all AI systems

- Dataset documentation requirements

- Explainability standards by risk level

- Version control and change management

How Long It Takes: 6-8 weeks

Investment Required: Substantial investment in policy development and training programs

Train everyone on these principles. Not death-by-PowerPoint training. Interactive sessions where people ask real questions about real scenarios they’ll face.

Success Metrics (Industry Benchmark Targets):

- Target: 100% of AI stakeholders trained on principles

- Target: All existing AI systems documented with model cards

- Target: Zero policy violations in first 90 days (based on well-implemented framework benchmarks)

📋 Board Questions at This Stage:

- “How do our AI principles compare to industry best practices?”

- “What happens when employees violate AI usage policies?”

- “Are our principles specific enough to guide real decisions?”

Stage 4: Build Risk Management That Actually Works

Risk management isn’t about preventing innovation. It’s about taking bigger risks safely. You want a systematic approach to identifying, assessing, and managing AI-related risks.

📊 Board Questions at This Stage:

- “What’s our risk appetite for different types of AI applications?”

- “How do we compare to industry benchmarks for AI incidents?”

- “What’s our compliance status with emerging regulations?”

What You’ll Build:

AI Risk Register:

- Comprehensive risk catalog by category and severity

- Impact and likelihood scoring

- Mitigation strategies with clear ownership

- Regular review and update procedures

Regulatory Compliance Matrix:

- Applicable laws and regulations mapped by AI system

- Compliance requirements by risk level

- Monitoring and reporting obligations

- Audit and assessment schedules

Risk Assessment Process:

- Standardized evaluation templates

- Automated risk scoring where possible

- Escalation triggers for high-risk systems

- Integration with existing enterprise risk management
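To show how impact and likelihood scoring can feed an escalation trigger, here is a minimal sketch of a risk register entry. The 1-5 scales, the multiplicative score, the `ESCALATION_THRESHOLD` value, and the sample risks are all assumptions chosen for illustration. Your own scales and thresholds should come from your enterprise risk management practice.

```python
from dataclasses import dataclass


@dataclass
class RiskEntry:
    """One risk register row. Scales and field names are assumptions."""
    system: str
    description: str
    impact: int       # 1 (negligible) to 5 (severe)
    likelihood: int   # 1 (rare) to 5 (almost certain)
    owner: str
    mitigation: str

    def score(self):
        """Simple impact x likelihood product, ranging from 1 to 25."""
        for value in (self.impact, self.likelihood):
            if not 1 <= value <= 5:
                raise ValueError("impact and likelihood must be between 1 and 5")
        return self.impact * self.likelihood


# Hypothetical threshold: scores at or above this go to the governance council.
ESCALATION_THRESHOLD = 15


def needs_escalation(entry):
    """True when the entry's score reaches the escalation threshold."""
    return entry.score() >= ESCALATION_THRESHOLD


# Sample risks are invented for illustration.
register = [
    RiskEntry("credit-scoring-model", "Biased outcomes for protected groups",
              impact=5, likelihood=3, owner="CRO",
              mitigation="Quarterly fairness testing"),
    RiskEntry("marketing-copy-tool", "Off-brand generated text",
              impact=2, likelihood=3, owner="CMO",
              mitigation="Human review before publishing"),
]

for entry in sorted(register, key=RiskEntry.score, reverse=True):
    flag = "ESCALATE" if needs_escalation(entry) else "routine"
    print(f"{entry.system}: score {entry.score()} ({flag})")
```

Keeping the owner and mitigation on the same record as the score makes the register reviewable in one pass.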

How Long It Takes: 8-10 weeks

Investment Required: Significant investment in framework development and assessment tools

Integrate NIST AI RMF and CSA guidance into your procedures. Conduct preliminary risk assessments for all high-risk systems. Don’t let perfect be the enemy of good. Start with basic assessments and improve them over time.

Success Metrics (Industry Benchmark Targets):

- Target: 100% of AI systems risk-assessed and categorized

- Target: Zero regulatory compliance gaps in audits

- Target: Risk assessment completion time under 30 days (industry best practice)

📋 Board Questions at This Stage:

- “What’s our appetite for AI-related risks versus potential rewards?”

- “How do our AI risks compare to traditional operational risks?”

- “What’s our plan if regulations change unexpectedly?”

Stage 5: Create Metrics That Matter

You can’t manage what you don’t measure. But don’t measure everything. Focus on metrics that actually tell you if governance is working.

Leading Indicators (Predict Problems):

- Percentage of AI initiatives under formal governance (Target: 100%) → Prevents shadow AI proliferation

- Time from AI project start to governance approval (Target: under 30 days) → Enables faster innovation cycles

- Governance training completion by role (Target: 100%) → Reduces compliance violations

- Ethics reviews completed vs required (Target: 100%) → Mitigates reputational risk
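Two of the leading indicators above, governance coverage and time to approval, are simple to compute once project start and approval dates are recorded. The sketch below assumes a hypothetical list of project records. The field names and sample dates are made up, and only the 30-day target comes from the list above.

```python
from datetime import date

# Hypothetical project records; in practice these would come from a project tracker.
projects = [
    {"name": "churn-model", "started": date(2025, 1, 6), "approved": date(2025, 1, 27)},
    {"name": "doc-summarizer", "started": date(2025, 2, 3), "approved": date(2025, 3, 17)},
    {"name": "lead-scoring", "started": date(2025, 3, 1), "approved": None},  # not yet governed
]

APPROVAL_TARGET_DAYS = 30  # "under 30 days" target from the list above


def governance_coverage(records):
    """Percentage of AI initiatives that have a governance approval on file."""
    if not records:
        return 0.0
    governed = sum(1 for r in records if r["approved"] is not None)
    return 100.0 * governed / len(records)


def approval_days(record):
    """Calendar days from project start to governance approval."""
    return (record["approved"] - record["started"]).days


def slow_approvals(records, target=APPROVAL_TARGET_DAYS):
    """Names of approved projects that did not come in under the target."""
    return [r["name"] for r in records
            if r["approved"] is not None and approval_days(r) >= target]


print(f"Coverage: {governance_coverage(projects):.0f}%")
print(f"Missed approval target: {slow_approvals(projects)}")
```

Projects with no approval date count against coverage rather than against approval time, which keeps the two indicators separate.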

Lagging Indicators (Show Results):

- AI-related incidents (Target: ≤1 high-severity per year)⁶ → Direct cost avoidance

- Regulatory compliance violations (Target: zero) → Prevents fines and penalties

- ROI achievement for governed AI initiatives (Target: meet projections) → Demonstrates governance value

Technical Performance Baselines:

- Model accuracy and precision metrics → Ensures reliable AI outputs

- Bias and fairness measurements → Protects brand reputation

- System reliability and uptime statistics → Maintains operational continuity

- Security incident frequency and resolution time → Reduces cyber risk exposure

Business Impact Baselines:

- Revenue attribution to AI initiatives → Shows top-line impact

- Cost reduction and efficiency gains → Demonstrates bottom-line value

- Customer satisfaction improvements → Links to market positioning

- Employee productivity enhancements → Justifies continued investment

⁶ Based on MITRE AI incident database showing well-governed organizations average 0.8 high-severity incidents annually

How Long It Takes: 4-6 weeks

Investment Required: Moderate investment in metrics platform and dashboard development

Build a dashboard that executives actually look at. Real-time governance metrics, automated compliance reporting, trend analysis. Make it easy to see what’s working and what isn’t.

Success Metrics (Industry Benchmark Targets):

- Target: Baselines established and tracked for all KPIs

- Target: Executive dashboard operational

- Target: Automated reporting delivering accurate insights

📋 Board Questions at This Stage:

- “Which metrics best predict AI governance success?”

- “How do our AI performance metrics compare to competitors?”

- “What early warning signals indicate governance problems?”

Stage 6: Monitor What Matters

Governance without monitoring is just paperwork. You need systematic oversight that ensures policies get followed and performance stays strong.

Internal Audit Program:

- Structured audit plans aligned with GAO and CSA guidelines

- Regular assessment schedules based on risk levels

- Clear procedures and documentation requirements

- Finding classification and remediation tracking

Continuous Monitoring:

- Automated performance monitoring for AI systems

- Real-time bias and drift detection

- Compliance status tracking and alerting

- Incident detection and response triggers
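Drift detection can start simply. One widely used option, shown here as an assumption rather than a method this framework prescribes, is the Population Stability Index (PSI), which compares a model's current score distribution with its baseline. The bin counts and the 0.2 alert threshold below are illustrative, and this sketch covers drift only, not bias.

```python
import math


def psi(expected_counts, actual_counts, epsilon=1e-6):
    """Population Stability Index between two histograms over the same bins.

    expected_counts: bin counts from the baseline (e.g. deployment) period.
    actual_counts:   bin counts from the current monitoring window.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both histograms must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Convert counts to proportions; epsilon avoids log(0) for empty bins.
        p = max(e / expected_total, epsilon)
        q = max(a / actual_total, epsilon)
        total += (q - p) * math.log(q / p)
    return total


# Hypothetical alert threshold; 0.2 is a commonly quoted rule of thumb, not a standard.
DRIFT_ALERT_THRESHOLD = 0.2

baseline = [100, 300, 400, 200]   # score distribution at deployment (made-up numbers)
this_week = [250, 350, 300, 100]  # same bins, current window (made-up numbers)

value = psi(baseline, this_week)
print(f"PSI = {value:.3f}, alert = {value > DRIFT_ALERT_THRESHOLD}")
```

A check like this can run on a schedule and feed the compliance alerting described above. Real-time monitoring and fairness metrics need additional tooling.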

External Audit Preparation:

- Third-party audit readiness procedures

- Documentation and evidence management

- Regulatory examination preparation

- Independent validation of governance effectiveness

How Long It Takes: 6-8 weeks

Investment Required: Significant investment in monitoring tools and audit infrastructure

Success Metrics (Industry Benchmark Targets):

- Target: 100% audit compliance with zero critical findings

- Target: Audit finding remediation time under 30 days (industry best practice)

- Target: Automated monitoring covering 100% of high-risk systems

- Target: High-severity incident rate at or below industry benchmark (≤1 per year)

📋 Board Questions at This Stage:

- “How quickly can we detect and respond to AI failures?”

- “What would a major AI incident cost us financially and reputationally?”

- “Are we prepared for regulatory investigations of AI issues?”

Stage 7: Handle the Special Cases

Every organization has unique AI governance challenges. Shadow AI usage, vendor assessments, incident response, generative AI policies. Address these specifically.

Shadow AI Detection:

- Automated discovery tools for unauthorized AI usage

- Employee reporting mechanisms

- Risk assessment for shadow AI discoveries

- Integration pathways for valuable shadow AI

Vendor AI Assessment:

- Standardized vendor evaluation criteria

- Due diligence procedures for AI service providers

- Contract language requirements

- Ongoing monitoring of third-party AI services

Incident Response:

- AI-specific incident classification and response

- Crisis communication plans for AI failures

- Stakeholder notification requirements

- Post-incident analysis and improvement

Generative AI Guidelines:

- Employee guidelines for ChatGPT, Copilot, similar tools

- Data protection requirements

- Approval processes for business-critical applications

- Integration with existing IT security policies

Technical Risk Framework References:

- OWASP Agentic AI Security Threats Model (2024)

- NIST Generative AI Risk Profile (NIST AI 600-1, 2024)

- CSA AI Safety Initiative guidelines for LLM governance

How Long It Takes: 8-12 weeks (run parallel with other stages)

Investment Required: Moderate investment in specialized tools and procedures⁷

⁷ Specialized governance elements typically require 10-15% of total framework investment based on implementation experience

Success Metrics (Industry Benchmark Targets):

- Target: Zero unauthorized AI systems in operation

- Target: 100% vendor AI services assessed and approved

- Target: Incident response time under 4 hours for critical issues

📋 Board Questions at This Stage:

- “How do we ensure employees aren’t using unauthorized AI tools?”

- “What’s our liability exposure from third-party AI services?”

- “How quickly can we contain AI-related incidents?”

Stage 8: Keep Getting Better

AI governance isn’t a one-time project. Technology changes, regulations evolve, business needs shift. Your governance needs to adapt.

Continuous Improvement System:

- Regular stakeholder feedback collection

- User experience assessment for governance processes

- Business impact measurement and validation

- External stakeholder input from customers, partners, regulators

Regular Reviews:

- Quarterly governance effectiveness reviews

- Annual AI Governance Maturity Assessments

- Regulatory landscape monitoring and adaptation

- Industry best practice benchmarking

Framework Evolution:

- Integration of emerging standards

- Adaptation to new AI technologies and use cases

- Response to regulatory changes

- Lessons learned integration

Training and Development:

- Ongoing education for governance stakeholders

- New employee AI governance onboarding

- Advanced training for specialized roles

- Executive education on emerging topics

How Long It Takes: Ongoing with quarterly cycles

Investment Required: Ongoing annual investment in improvement activities

Success Metrics:

- Governance maturity score improvement year-over-year

- Stakeholder satisfaction consistently above 85%⁸

- Zero major surprises from regulatory or technology changes

📋 Board Questions at This Stage:

- “How is our governance framework adapting to new AI technologies?”

- “What’s our process for staying ahead of regulatory changes?”

- “Are we learning faster than our competitors in AI governance?”

⁸ Deloitte 2024 AI Governance Maturity Study shows leading organizations achieve sustained satisfaction above 85% through systematic feedback

Investment Timeline and Value Capture

12-Month Complete Implementation:

Months 1-3: Discovery and Foundation (Stage 1)

- AI discovery, context analysis, and mandate establishment

- Investment: Foundational investment in discovery and organizational setup

Months 4-6: Structure and Framework (Stages 2-3)

- Committee setup and policy development

- Investment: Substantial investment in structure and policy development

Months 7-9: Risk and Measurement (Stages 4-5)

- Risk management and metrics implementation

- Investment: Significant investment in risk management infrastructure

Months 10-12: Operations and Optimization (Stages 6-8)

- Monitoring, specialized elements, continuous improvement

- Investment: Major investment in operational capabilities

Total Investment by Company Size:

Implementation investment scales with organizational complexity and regulatory requirements. Organizations should budget for:

- Small/Mid-Size Organizations: Moderate total investment over 12-18 months

- Large Enterprises: Substantial total investment reflecting complexity

- Fortune 500 Companies: Major investment commensurate with scale and risk profile

Ongoing Annual Investment: Continuing annual commitment for operations and improvement

💡 Executive Takeaway: Plan for 12-18 month implementation with ongoing annual investment. Front-load investment in foundation stages to accelerate later value realization.

Value Creation and Cost Offset Analysis

Organizations following this framework achieve measurable value that justifies investment:

Risk Avoidance Value:

- Automated monitoring reduces manual audit preparation costs (PwC regulatory compliance study, 2024)⁹

- Proactive governance prevents costly AI failures (IBM Cost of AI Incidents Report, 2024)¹⁰

- Systematic bias testing avoids brand damage and customer trust issues¹¹

Operational Efficiency Gains:

- Streamlined governance reduces AI deployment time by 40% (McKinsey AI Survey, 2024)¹²

- Centralized oversight eliminates duplicate investments in AI initiatives¹³

- Standardized vendor assessments reduce procurement cycle times¹⁴

⁹ PwC. “AI Regulatory Compliance Cost Analysis.” 2024. Document reference: PwC-AI-RCA-2024

¹⁰ IBM. “Cost of AI Incidents Report.” 2024. Document reference: IBM-AI-ICR-2024

¹¹ Impact assessment based on composite analysis of brand reputation incidents related to AI bias

¹² McKinsey & Company. “Global Survey on the Current State of AI.” 2024. Document reference: McKinsey-AI-Survey-2024

¹³ Efficiency analysis based on centralized vs. distributed AI investment patterns

¹⁴ Procurement cycle analysis based on standardized assessment implementation outcomes

Value Creation Timeline:

- Year 1: Investment recovery through risk avoidance and efficiency gains

- Year 2: Positive returns through accelerated AI value realization¹⁵

- Year 3+: Substantial returns through compound advantages of mature governance¹⁶

⁹⁻¹⁶ Value creation based on composite analysis of organizational implementations. Specific financial benefits vary by organization size, industry, and implementation approach.

💡 Executive Takeaway: Governance creates measurable value through risk avoidance, operational efficiency, and accelerated AI adoption. The investment becomes a value generator, not just a cost center.

Implementation Success Factors

Centralized Governance Model

Large financial institutions have successfully implemented centralized AI governance to manage enterprise-wide AI initiatives across multiple business units while maintaining regulatory compliance.

Approach: Central AI governance office with executive oversight and enterprise-wide authority.

Implementation Elements:

- Comprehensive AI inventory across all business units

- Central governance council with business unit representation

- Unified risk framework integrated with existing risk management

- Substantial organizational investment in people, process, and technology

Documented Results:

- Complete AI system governance coverage achieved

- Significant reduction in AI-related audit findings

- Faster regulatory approval processes for new applications

- Substantial cost avoidance in compliance activities

Success Factors: Executive leadership involvement and integration with existing risk management infrastructure.

Distributed Governance Model

Global organizations have successfully implemented distributed governance with central principles and local implementation flexibility to enable innovation while maintaining oversight.

Approach: Distributed governance with central standards and regional adaptation capabilities.

Implementation Elements:

- Federated AI discovery across global business units

- Central principles with regional implementation flexibility

- Business unit autonomy within governed parameters

- Significant investment in change management and coordination

Documented Results:

- Dramatic increase in employee AI adoption rates

- Substantial reduction in project development timelines

- Zero governance violations despite large-scale deployment

- Measurable operational efficiency improvements

Success Factors: Balance of central oversight principles with local innovation autonomy.

💡 Executive Takeaway: Both centralized and distributed models can succeed when properly implemented with adequate investment and executive commitment. Choose the model that matches your organizational culture and risk profile.

Getting Started: Your Stage 1 Action Plan

Weeks 1-3: AI Discovery and Stakeholder Mapping

- Identify all departments potentially using AI

- Deploy automated discovery tools where possible

- Conduct department-by-department interviews

- Document all AI tools, models, and integrations

Weeks 4-6: Risk Classification and Executive Engagement

- Apply EU AI Act risk categories to discovered systems

- Identify high-risk applications requiring immediate attention

- Secure initial executive sponsorship and charter development

- Create preliminary compliance requirements matrix

Weeks 7-8: Charter Development and Resource Planning

- Draft governance charter with clear authority and scope

- Present AI inventory findings to executive team

- Secure board approval for governance charter

- Develop Stage 2 implementation plan

Weeks 9-10: Communication and Setup

- Launch organization-wide communication about governance initiative

- Establish initial resource allocation framework

- Begin preparation for governance team formation

💡 Executive Takeaway: Stage 1 provides the foundation for all governance decisions. Invest the time to get complete AI visibility and executive authority before building oversight structures.

Critical Success Factors

Executive Commitment: Organizations with active CEO involvement report significantly better governance outcomes (McKinsey Global AI Survey, 2024)¹⁷. This isn’t optional.

Cultural Integration: Companies treating governance as cultural change rather than compliance achieve substantially higher adoption rates¹⁸. Make it feel natural, not burdensome.

Balanced Approach: Successful organizations balance governance rigor with innovation speed. Avoid both under-governance and over-governance extremes.

Stakeholder Engagement: Regular communication and feedback loops with employees, customers, partners, and regulators are essential for long-term success.

¹⁷⁻¹⁸ Based on analysis of governance implementation outcomes across multiple organizations

💡 Executive Takeaway: Success requires active C-suite leadership, cultural integration, and a balanced approach to innovation vs. control. Governance is as much about people and process as it is about technology.

Stage-to-Success Metrics Reference

| Stage | Key Success Metric | Target | Business Impact |
|---|---|---|---|
| Stage 1 | AI discovery & charter approval | 100% discovery, formal approval | Complete risk visibility & executive accountability |
| Stage 2 | Committee effectiveness | All positions filled | Clear decision authority |
| Stage 3 | Policy compliance | Zero violations (90 days) | Risk mitigation |
| Stage 4 | Risk assessment speed | Reasonable timeframe (Target: <30 days) | Innovation enablement |
| Stage 5 | Metrics dashboard adoption | Executive regular use (Target: daily) | Performance transparency |
| Stage 6 | Audit compliance | Zero critical findings (Target) | Regulatory confidence |
| Stage 7 | Shadow AI elimination | Zero unauthorized systems (Target) | Complete governance coverage |
| Stage 8 | Stakeholder satisfaction | High satisfaction levels (Target: >85%) | Sustainable governance |

What You’re Really Building

Your AI governance framework is about creating the foundation for sustainable AI-driven growth. When done right, governance becomes a competitive advantage that enables faster innovation, builds stakeholder trust, and creates organizational resilience.

Companies that will lead in the AI economy view governance not as a constraint, but as the infrastructure that lets them move faster and take bigger risks safely. They understand that in a world where AI capabilities are democratizing rapidly, governance excellence becomes a key differentiator.

Use this 8-stage framework as your roadmap, but adapt it to your organization’s context, culture, and constraints. Start with solid foundations, learn from early experiences, and continuously evolve based on new challenges and opportunities.

In our next article, we’ll dive deep into measuring AI ROI and managing the complex risk landscape that comes with enterprise AI adoption. We’ll explore how to create metrics that matter, establish risk appetite frameworks, and build measurement systems that demonstrate both governance effectiveness and business value.

What’s Next: Measuring AI ROI and Risk Management

Our next article covers:

- ROI measurement frameworks that prove governance value to CFOs

- Risk appetite setting for different AI applications

- Advanced metrics that predict AI success and failure

- Board reporting templates for ongoing governance oversight

Ready to start your governance journey? Download our Stage 1 AI Discovery Template and Risk Classification Worksheet to begin mapping your AI landscape and securing executive commitment today.

Primary Sources and References:

1. Snowflake. “AI ROI Research Report.” 2025. Document reference: Snowflake-AI-ROI-2025

2. Boston Consulting Group. “AI Transformation Study.” 2024. Document reference: BCG-AI-Transform-2024

3-4. Author analysis based on Fortune 500 financial disclosures and implementation costs, 2024.

5. PwC. “AI Regulatory Compliance Cost Analysis.” 2024. Document reference: PwC-AI-RCA-2024

6. MITRE. “AI Incident Database Analysis.” 2024. Reference: MITRE-AI-IDB-2024

7. Implementation experience analysis, composite data 2024.

8. Deloitte. “AI Governance Maturity Study.” 2024. Document reference: Deloitte-AIGMS-2024

9. PwC. “AI Regulatory Compliance Cost Analysis.” 2024. Document reference: PwC-AI-RCA-2024

10. IBM. “Cost of AI Incidents Report.” 2024. Document reference: IBM-AI-ICR-2024

11. Brand reputation impact analysis, composite assessment 2024.

12. McKinsey & Company. “Global Survey on the Current State of AI.” 2024. Document reference: McKinsey-AI-Survey-2024

13-14. Efficiency analysis based on governance implementation patterns, 2024.

15-16. Value creation analysis methodology available upon request.

17. McKinsey & Company. “Global Survey on the Current State of AI.” 2024. Document reference: McKinsey-AI-Survey-2024

18. Governance implementation outcomes analysis, 2024.

- NIST. “AI Risk Management Framework (AI RMF 1.0).” NIST AI 100-1. 2023.

- NIST. “Generative AI Risk Profile.” NIST AI 600-1. 2024.

- ISO/IEC 42001:2023. “Artificial intelligence management systems.”

- EU AI Act. “Regulation on Artificial Intelligence.” 2024.

- Government Accountability Office. “Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities.” GAO-21-519SP. 2021.

- Cloud Security Alliance. “AI Safety Initiative: Core Security Responsibilities and GRC Framework.” 2024.

- OWASP. “Agentic AI Security Threats Model.” 2024.

- IAPP. “AI Governance in Practice Report 2024.” 2024.

This article is part of a comprehensive series on AI adoption and governance for C-suite executives. The next installment will focus on measuring AI ROI and establishing comprehensive risk management frameworks that protect value while enabling innovation.