The Need for Explainable AI

Between 2013 and 2019, the Dutch tax authority’s algorithm flagged 26,000 families as potential fraudsters. The system worked exactly as programmed, spotting patterns in childcare benefit claims. But when investigators finally understood what the algorithm was doing, they discovered it was using nationality as a hidden risk factor. The result: thousands of families (many of them immigrants) wrongly accused of fraud and forced into poverty, a compensation bill of at least €500 million, and a scandal that brought down the Dutch government in January 2021.

[Source: Dutch Parliamentary Investigation Report (2020): “Ongekend onrecht” (Unprecedented Injustice); Amnesty International (2021): “Xenophobic Machines: Discrimination through unregulated use of algorithms in the Dutch childcare benefits scandal”]

The algorithm wasn’t broken. It lacked explainability.

Amazon scrapped an AI recruiting tool in 2018 after discovering it penalized resumes containing the word “women’s” (as in “women’s chess club captain”) [Reuters, October 10, 2018]. Apple and Goldman Sachs faced investigation after reports that their credit card algorithm gave men higher credit limits than women with identical finances [New York Department of Financial Services, November 2019].

These weren’t random technical glitches. They happened because nobody could look inside these systems and understand how they made decisions. Failures like these damage reputations, and they are exactly the kind of risk that explainable AI is meant to mitigate.

Today’s regulatory landscape increasingly demands explainability. The EU AI Act (Regulation (EU) 2024/1689), Article 13, requires high-risk AI systems to be designed so that their operation is “sufficiently transparent to enable deployers to interpret a system’s output and use it appropriately.” NIST’s AI Risk Management Framework (NIST AI 100-1), though voluntary, treats explainability and interpretability as core characteristics of trustworthy AI and calls on organizations to ensure AI decisions can be understood and traced. ISO/IEC 42001:2023 requires documented information throughout the AI lifecycle, so every model in scope needs clear records from development through deployment.

Explainability isn’t just a compliance exercise. According to IBM’s Cost of a Data Breach Report 2023, organizations that used security AI and automation extensively had markedly lower breach costs than those that did not. McKinsey’s “The state of AI in 2023” report found that organizations with mature responsible AI practices see 20% higher AI adoption rates.

Retrofitting explainability costs more than building it in. Gartner’s “Market Guide for AI Trust, Risk and Security Management” (2023, pages 14-15) estimates organizations spend 2-4 times more adding explainability post-deployment compared to building it from the start. Their analysis, based on surveys of 500+ enterprises implementing AI governance, found that reactive compliance efforts typically consume 60% of project budgets versus 15% for proactive design.

The Explainability Stack: 13 Capabilities That Actually Work

Explainability works as a stack, not a single tool. Each layer addresses different stakeholders, lifecycle stages, and regulatory requirements.

1. Local Interpretable Model-Agnostic Explanations (LIME): Your Spot-Check Microscope

LIME focuses on individual decisions. Why did we reject this specific loan application? It builds simple explanations by testing nearby scenarios.

[Reference: Ribeiro, Singh, and Guestrin (2016). “Why Should I Trust You?: Explaining the Predictions of Any Classifier”]

The technique randomly perturbs inputs (removing words from text, switching off superpixels in images, sampling nearby values for tabular features) and watches how your black-box model’s score changes. It then fits a simple linear model to these perturbed samples, weighting each sample by how close it is to the original input, and reads off the weights: “These three factors increased the risk score by 0.17.”
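To make the perturb-and-fit loop concrete, here is a minimal from-scratch sketch for tabular data. It is not the `lime` package’s API. It assumes that “removing” a feature means swapping in a baseline value, weights each sample with an exponential kernel on the fraction of features removed, and fits the surrogate by weighted least squares. `lime_explain`, `risk_score`, and the feature names are hypothetical.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def lime_explain(predict, instance, baseline, num_samples=500, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance.

    Returns (intercept, weights), one weight per feature.
    """
    rng = random.Random(seed)
    d = len(instance)
    rows, targets, sample_weights = [], [], []
    for _ in range(num_samples):
        # 1 keeps the instance's value; 0 "removes" the feature by using the baseline.
        mask = [rng.randint(0, 1) for _ in range(d)]
        perturbed = [x if keep else b for x, b, keep in zip(instance, baseline, mask)]
        # Samples that remove fewer features sit closer to the instance and count more.
        distance = (d - sum(mask)) / d
        sample_weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
        rows.append([1.0] + [float(k) for k in mask])  # intercept column + mask
        targets.append(predict(perturbed))
    # Weighted least squares via the normal equations: (Z^T W Z) beta = Z^T W y.
    p = d + 1
    ztwz = [[sum(w * z[i] * z[j] for z, w in zip(rows, sample_weights)) for j in range(p)]
            for i in range(p)]
    ztwy = [sum(w * z[i] * y for z, w, y in zip(rows, sample_weights, targets)) for i in range(p)]
    beta = solve_linear_system(ztwz, ztwy)
    return beta[0], beta[1:]


# Hypothetical black-box score, kept linear here so the right answer is known.
def risk_score(features):
    debt_ratio, late_payments, income_k = features
    return 0.5 * debt_ratio + 0.1 * late_payments - 0.002 * income_k


intercept, contributions = lime_explain(risk_score, instance=[0.4, 3, 50], baseline=[0.0, 0, 0])
for name, weight in zip(["debt_ratio", "late_payments", "income_k"], contributions):
    print(f"{name}: {weight:+.3f}")
```

Because `risk_score` is linear, the surrogate recovers each feature’s contribution relative to the baseline exactly. On a real non-linear model the weights are only locally faithful, which is why the sample count, kernel width, and baseline choice all deserve scrutiny.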

A peer-to-peer lender might run LIME only on borderline cases (credit scores 640-660) to keep latency down. Their ops team can pull up any rejected application and see which factors contributed most to tipping the scale. This supports Fair Lending audit requirements while adding minimal latency.

Use LIME during Verify & Validate phases for model QA, and in production for spot-checks when customers appeal decisions. Pair it with global explainers like SHAP to avoid locality bias, as recommended in NIST Special Publication 1270.

2. Glass-Box Models: When Transparency Beats Complexity

Glass-box approaches like Microsoft’s Explainable Boosting Machines (EBM) build transparency into the architecture itself.

[Reference: Nori et al. (2019). “InterpretML: A Unified Framework for Machine Learning Interpretability”]

According to InterpretML benchmarks, EBMs achieve within 1-2% accuracy of gradient boosting machines on many tabular datasets while remaining fully interpretable. Each feature gets its own graph showing exactly how it affects the outcome.
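An EBM’s prediction is an intercept plus one learned term per feature (and per selected feature pair), so its explanation is the model itself rather than an approximation. The sketch below mimics that scoring step with hand-written lookup tables. It does not use or reproduce InterpretML’s API, and the bin edges and scores are invented for illustration.

```python
import bisect
import math
from dataclasses import dataclass


@dataclass
class ShapeFunction:
    """Piecewise-constant term for one feature: bin edges plus one score per bin."""
    edges: list   # sorted bin boundaries
    scores: list  # len(edges) + 1 additive scores, in log-odds

    def __call__(self, value):
        # bisect_right puts a value equal to an edge into the higher bin.
        return self.scores[bisect.bisect_right(self.edges, value)]


def explain_additive(intercept, shapes, row):
    """Return (probability, per-feature contributions) for one row."""
    contributions = {name: term(row[name]) for name, term in shapes.items()}
    log_odds = intercept + sum(contributions.values())
    return 1.0 / (1.0 + math.exp(-log_odds)), contributions


# Hypothetical terms, standing in for what a trained glass-box model would learn.
shapes = {
    "age": ShapeFunction(edges=[25, 40, 60], scores=[0.30, 0.05, -0.10, 0.20]),
    "prior_claims": ShapeFunction(edges=[1, 3], scores=[-0.40, 0.25, 0.90]),
}
probability, parts = explain_additive(-1.2, shapes, {"age": 33, "prior_claims": 2})
print(parts)  # each feature's exact contribution to the log-odds
print(round(probability, 3))
```

Because the score is a plain sum, the contributions add up to the log-odds exactly; plotting each `scores` list against its bins gives the per-feature graph described above.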

An insurer might run a deep neural network for initial risk scoring but use an EBM to generate the official explanation for regulators. The deep net handles complexity; the EBM provides justification. Both models train on the same data and need similar accuracy levels.

Consider glass-box models during Design & Build when choosing architecture. This aligns with GAO-21-519SP’s recommendation (page 32) that organizations should “consider explainability requirements when selecting AI approaches” and document why specific architectures were chosen.

3. The Post-Hoc Explanation Suite: Your Swiss Army Knife

Black-box models sometimes deliver the performance you need. Post-hoc explanation tools let you keep that performance while adding transparency.

Saliency maps and Grad-CAM [Selvaraju et al. (2017). “Grad-CAM: Visual Explanations from Deep Networks”] show where models focus. During COVID-19, these tools revealed models detecting the disease on chest X-rays by looking at text markers and image borders rather than lung pathology [DeGrave et al., Nature Machine Intelligence, 2021].

Attention visualizers trace connections in language models, exposing when translation models confuse homonyms or focus on irrelevant context.

SHAP (SHapley Additive exPlanations) [Lundberg and Lee (2017). “A Unified Approach to Interpreting Model Predictions”] provides both local and global explanations with strong theoretical foundations in game theory.
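SHAP’s game-theory foundation is the Shapley value: a feature’s average marginal contribution across every coalition of the other features. The brute-force sketch below computes it exactly for a handful of features, assuming that features outside a coalition take a single baseline value. The SHAP library itself typically averages over a background dataset and relies on faster estimators such as KernelSHAP and TreeSHAP; `shapley_values` and the toy model are hypothetical, and the loop is exponential in the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    d = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(d)]
        return predict(x)

    phis = []
    for i in range(d):
        others = [j for j in range(d) if j != i]
        phi = 0.0
        for size in range(d):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight: |S|! (d - |S| - 1)! / d!
                weight = factorial(size) * factorial(d - size - 1) / factorial(d)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_model(x):
    # Hypothetical model with one interaction (x0 * x1) and one additive term.
    return x[0] * x[1] + 2 * x[2]


phis = shapley_values(toy_model, instance=[1, 1, 1], baseline=[0, 0, 0])
print(phis)
```

The interaction term is split evenly between its two features, and the values sum to f(instance) minus f(baseline). That efficiency property is what lets SHAP attributions add up to the prediction.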

The OECD’s “Tools for trustworthy AI” framework (2021) recommends using multiple explanation methods and requiring agreement before certifying explanations as faithful.
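One way to operationalize an agreement requirement is to compare the top-ranked features from two explainers and withhold certification when they diverge. This sketch assumes both methods return a feature-to-attribution mapping for the same prediction; the top-k cutoff and the minimum number of shared features are illustrative choices, not thresholds taken from the OECD framework.

```python
def top_k_features(attributions, k):
    """Names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def explanations_agree(attr_a, attr_b, k=3, min_shared=2):
    """True when the two explainers share at least min_shared of their top-k features."""
    shared = top_k_features(attr_a, k) & top_k_features(attr_b, k)
    return len(shared) >= min_shared, sorted(shared)


# Hypothetical attributions for one loan decision from two different explainers.
lime_attr = {"debt_ratio": 0.20, "late_payments": 0.30, "income_k": -0.10, "age": 0.01}
shap_attr = {"debt_ratio": 0.25, "late_payments": 0.28, "age": -0.12, "income_k": 0.02}
agree, shared = explanations_agree(lime_attr, shap_attr)
print(agree, shared)
```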

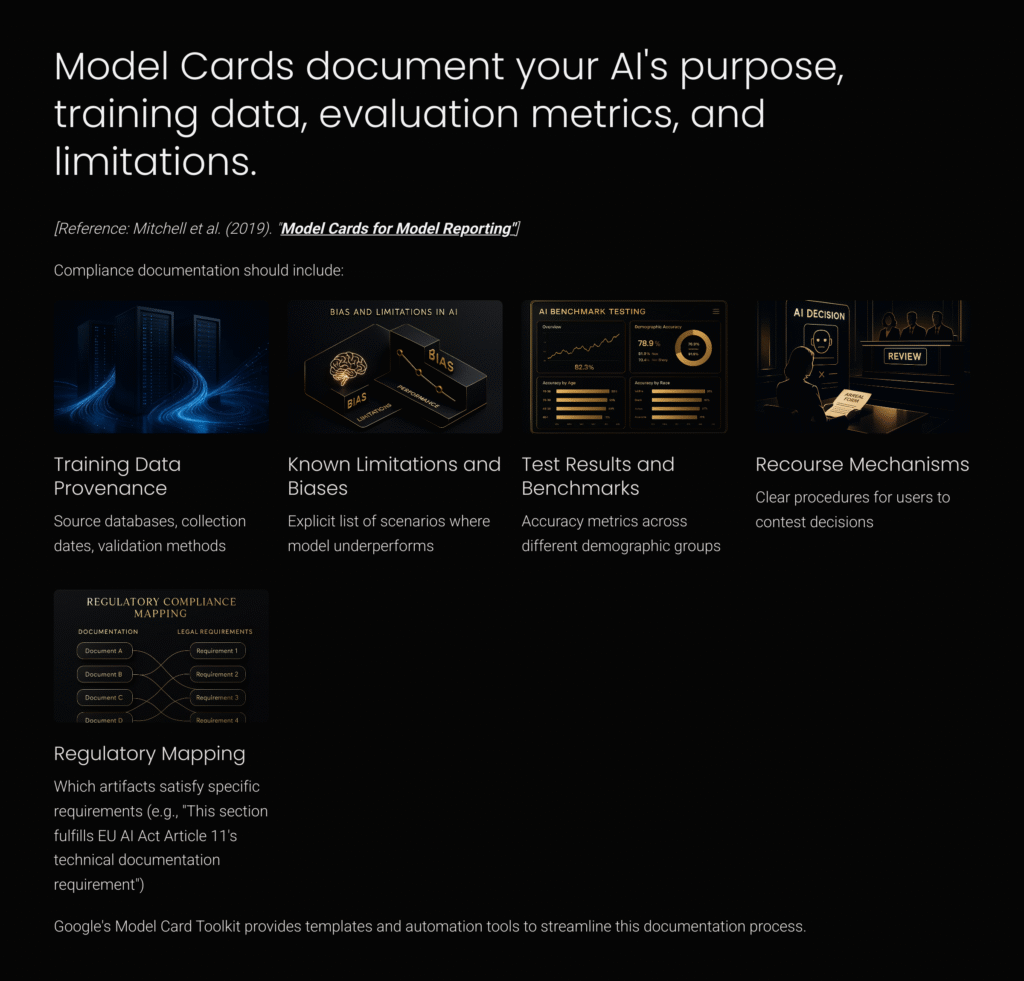

4. Model Cards and Data Sheets: Your Compliance Paper Trail

Model Cards document your AI’s purpose, training data, evaluation metrics, and limitations. Google’s Model Card Toolkit provides templates and automation tools. Tech Jacks will also provide templates at our TJS Documentation Marketplace over time.

[Reference: Mitchell et al. (2019). “Model Cards for Model Reporting”]

Compliance documentation should include:

- Training data provenance: Source databases, collection dates, validation methods

- Known limitations and biases: Explicit list of scenarios where model underperforms

- Test results and benchmarks: Accuracy metrics across different demographic groups

- Recourse mechanisms: Clear procedures for users to contest decisions

- Regulatory mapping: Which artifacts satisfy specific requirements (e.g., “This section fulfills EU AI Act Article 11’s technical documentation requirement”)
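These five items map naturally onto a structured record that tooling can check for completeness before release. The sketch below is a hypothetical minimal schema, not the Model Card Toolkit’s schema; the field names, model name, and metric values are illustrative.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    model_name: str
    version: str
    training_data_provenance: str = ""
    known_limitations: list = field(default_factory=list)
    test_results: dict = field(default_factory=dict)        # e.g. metric per demographic group
    recourse_mechanism: str = ""
    regulatory_mapping: dict = field(default_factory=dict)  # card section -> requirement

    def missing_sections(self):
        """Names of compliance sections that are still empty."""
        required = ["training_data_provenance", "known_limitations", "test_results",
                    "recourse_mechanism", "regulatory_mapping"]
        return [name for name in required if not getattr(self, name)]


# Hypothetical card with two compliance sections still unfilled.
card = ModelCard(
    model_name="credit-risk-scorer",
    version="1.4.0",
    training_data_provenance="loans_db snapshot 2024-01-31; validated with schema checks",
    known_limitations=["Underperforms for applicants with thin credit files"],
    test_results={"auc_overall": 0.81, "auc_age_under_25": 0.74},
)
print(card.missing_sections())
print(json.dumps(asdict(card), indent=2))
```

A release gate could simply refuse to publish while `missing_sections()` is non-empty.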

Data Sheets complement Model Cards by documenting dataset characteristics. Gebru et al.’s foundational paper [“Datasheets for Datasets,” Communications of the ACM, 2021] provides a comprehensive template.

Leading organizations use CI/CD pipelines to auto-generate documentation. GitHub Actions can populate Model Card templates from training metrics, supporting ISO/IEC 42001:2023 Clause 7.5, which requires organizations to create, control, and retain the documented information their AI management system depends on, including evidence of AI system development and monitoring.
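A CI step (for example, in a GitHub Actions workflow) could run a small script like this one after training. It is a sketch that assumes training writes its metrics to a JSON file with the keys shown; the template text, keys, and file name are hypothetical. `string.Template.substitute` raises `KeyError` when a placeholder has no value, so an incomplete card fails the build instead of shipping silently.

```python
import json
import sys
from string import Template

CARD_TEMPLATE = Template(
    "# Model Card: $model_name v$version\n"
    "Training data: $data_source (snapshot $data_snapshot)\n"
    "Overall accuracy: $accuracy\n"
    "Worst-group accuracy: $worst_group_accuracy\n"
)


def render_model_card(metrics):
    """Fill the card from training metrics; raise KeyError if any field is missing."""
    return CARD_TEMPLATE.substitute(metrics)


def main(metrics_path):
    with open(metrics_path) as fh:
        metrics = json.load(fh)
    try:
        print(render_model_card(metrics))
    except KeyError as missing:
        print(f"Model card incomplete, missing {missing}", file=sys.stderr)
        return 1  # nonzero exit code stops the pipeline
    return 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a workflow this might run as `python render_card.py metrics.json > MODEL_CARD.md`, with the generated file committed or attached as a build artifact.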

5. XAI Toolkits and Platforms: Your Production Infrastructure

Open-source toolkits provide standardized implementations. IBM AI Explainability 360 offers 10+ algorithms [IBM Research, 2019]. Microsoft InterpretML delivers production-ready EBMs. Alibi, an open-source Python library, provides methods for model inspection and explanation.

Commercial platforms add monitoring. Fiddler AI tracks explanation drift in real-time. Arize streams ML observability with explanation tracking.

IEEE 7001-2021 (Transparency of Autonomous Systems) defines measurable, testable levels of transparency for different stakeholder groups, so systems can demonstrate transparency appropriate to their risk profile and user needs. Monitoring explanation quality over time is how you keep that demonstration current.

6. Transparency-First Design: Start with the End User

NIST IR 8312 defines four principles:

- Explanation: Systems deliver evidence for outputs and reasoning paths

- Meaningful: Explanations match the technical literacy of intended users

- Explanation Accuracy: Explanations correctly reflect the system’s actual process

- Knowledge Limits: Systems identify cases they weren’t designed to handle

Design checklists should map explanations to user personas. Technical users get feature importance graphs. Business users receive natural language summaries. End consumers see simple statements with recourse options.
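A sketch of that persona mapping: one set of feature attributions rendered three ways. The persona names, wording, and recourse text are assumptions for illustration; a real system would take the attributions from its explainer and the recourse wording from its documented appeal process.

```python
def render_explanation(attributions, persona, decision):
    """Render the same attributions for a given audience."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if persona == "technical":
        # Full ranked list with signed values, as a feature-importance chart would show.
        return "\n".join(f"{name}: {value:+.3f}" for name, value in ranked)
    if persona == "business":
        top = [name.replace("_", " ") for name, _ in ranked[:2]]
        return f"Decision '{decision}' was driven mainly by {' and '.join(top)}."
    if persona == "consumer":
        main_factor = ranked[0][0].replace("_", " ")
        return (f"Your application was {decision}, mostly because of your {main_factor}. "
                "You can ask for a human review of this decision.")
    raise ValueError(f"unknown persona: {persona}")


# Hypothetical attributions for one declined application.
attributions = {"debt_ratio": 0.20, "late_payments": 0.30, "income_k": -0.10}
for persona in ("technical", "business", "consumer"):
    print(f"[{persona}]\n{render_explanation(attributions, persona, 'declined')}\n")
```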

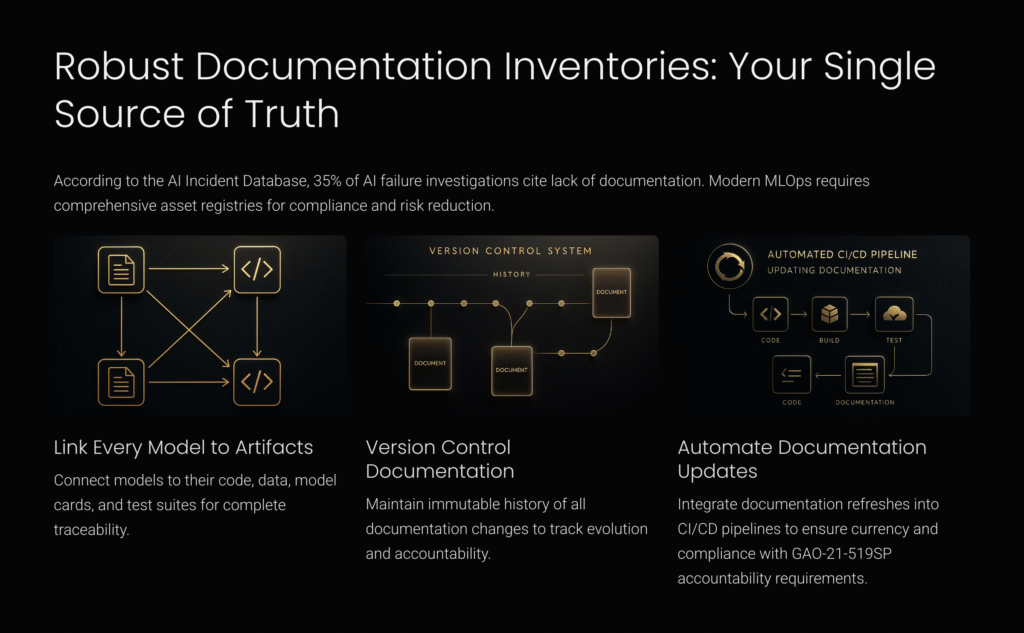

7. Robust Documentation Inventories: Your Single Source of Truth

According to the AI Incident Database, 35% of AI failure investigations cite lack of documentation.

Modern MLOps maintains comprehensive asset registries:

- Link every model to artifacts (code, data, cards, tests)

- Version control all documentation

- Automate updates through CI/CD pipelines

GAO-21-519SP’s accountability framework (Section 4.2) specifically requires “maintaining appropriate records” including model lineage, decision logs, and change histories to enable post-incident analysis.
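A minimal sketch of a registry entry that links a model version to its artifacts by content hash and keeps a change history, so an auditor can confirm the documentation on file matches what was deployed. The artifact kinds and the in-memory object are hypothetical stand-ins for a real model registry or MLOps platform.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


def fingerprint(content: bytes) -> str:
    """Content hash that ties a registry entry to an exact artifact version."""
    return hashlib.sha256(content).hexdigest()


@dataclass
class RegistryEntry:
    model_id: str
    version: str
    artifacts: dict = field(default_factory=dict)   # artifact kind -> sha256
    change_log: list = field(default_factory=list)  # (UTC timestamp, note) pairs

    def attach(self, kind, content: bytes, note):
        self.artifacts[kind] = fingerprint(content)
        self.change_log.append((datetime.now(timezone.utc).isoformat(), note))

    def missing(self, required=("code", "data", "model_card", "tests")):
        """Artifact kinds not yet linked to this model version."""
        return [kind for kind in required if kind not in self.artifacts]

    def verify(self, kind, content: bytes):
        """True if the artifact on hand matches the registered version."""
        return self.artifacts.get(kind) == fingerprint(content)


entry = RegistryEntry(model_id="credit-risk-scorer", version="1.4.0")
entry.attach("code", b"train.py contents", "initial training code")
entry.attach("model_card", b"model card v1.4.0", "card generated in CI")
print(entry.missing())
print(entry.verify("model_card", b"model card v1.4.0"))
```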

8. Tailored Explanations: One Size Fits None

Microsoft’s FATE group research shows explanation effectiveness varies by audience. Data scientists prefer technical detail. Executives absorb visual summaries 3x faster than tables. End users need actionable language.

Progressive disclosure interfaces start simple and reveal complexity on demand.

9. Continuous Monitoring: Catching Drift Before Disasters

NIST AI RMF’s “Measure” function (Section 5.3) calls for ongoing monitoring of AI trustworthiness characteristics, including tracking whether explanations remain valid as models evolve.

Track these metrics:

- Explanation stability: Consistency of explanations over time (threshold: >0.85 correlation)

- Feature importance drift: Changes in top-5 decision factors week-over-week

- Explanation-prediction fidelity: Agreement between explanation and actual model behavior (>95% alignment)
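All three metrics can be computed from data most teams already log. This sketch assumes you store the mean absolute attribution per feature for each week, plus the decisions of the model and of the surrogate behind its explanations. Pearson correlation is one reasonable reading of “correlation” here; the feature names and values are hypothetical, and the thresholds are the ones listed above.

```python
import math


def pearson(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def explanation_stability(prev, curr):
    """Correlation of per-feature importance between two periods."""
    features = sorted(set(prev) | set(curr))
    return pearson([prev.get(f, 0.0) for f in features], [curr.get(f, 0.0) for f in features])


def top_k_drift(prev, curr, k=5):
    """Features that entered or left the top-k between two periods."""
    def top(importance):
        return set(sorted(importance, key=importance.get, reverse=True)[:k])
    before, after = top(prev), top(curr)
    return {"entered": sorted(after - before), "left": sorted(before - after)}


def fidelity(model_decisions, surrogate_decisions):
    """Share of cases where the explanation's surrogate reproduces the model's decision."""
    matches = sum(m == s for m, s in zip(model_decisions, surrogate_decisions))
    return matches / len(model_decisions)


# Hypothetical weekly mean |attribution| per feature.
week_1 = {"debt_ratio": 0.50, "late_payments": 0.30, "income_k": 0.10,
          "age": 0.05, "tenure_months": 0.02, "num_accounts": 0.01}
week_2 = {"debt_ratio": 0.48, "late_payments": 0.32, "income_k": 0.09,
          "age": 0.06, "tenure_months": 0.005, "num_accounts": 0.03}
alerts = []
if explanation_stability(week_1, week_2) <= 0.85:
    alerts.append("explanation stability at or below 0.85")
drift = top_k_drift(week_1, week_2)
if drift["entered"] or drift["left"]:
    alerts.append(f"top-5 changed: {drift}")
if fidelity([1, 0, 1, 1], [1, 0, 0, 1]) <= 0.95:
    alerts.append("fidelity at or below 95%")
print(alerts)
```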

10. Clear Communication: Executive Dashboards That Drive Action

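ISO/IEC 42001:2023 Clause 7.4 requires organizations to decide what, when, with whom, and how to communicate about their AI management system, which in practice includes reporting AI system performance and characteristics to relevant stakeholders at planned intervals.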

Effective dashboards include explainability metrics (faithfulness, stability, comprehension), regulatory compliance status, and links to detailed documentation.

11. Stakeholder Involvement: Building Trust Through Participation

The OECD AI Principles call for “inclusive growth, sustainable development and well-being” through stakeholder engagement.

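ISO/IEC 42001 Clause 4.2 requires organizations to identify interested parties and their relevant requirements. Documented evidence of that stakeholder engagement typically includes: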

- Workshop records: Meeting minutes from design sessions with affected users

- Feedback loops: Tracked concerns and responses showing how input shaped the system

- Validation documentation: Signed acknowledgments that explanations meet stakeholder needs

Clearly specify what stakeholder records auditors should expect, such as:

- Attendance logs from stakeholder meetings

- Summary of stakeholder feedback and responses

- Signed acknowledgment forms validating satisfaction

12. Training and Culture: Making Explainability Everyone’s Job

Partnership on AI’s 2023 survey found organizations with mandatory explainability training see 40% fewer post-deployment issues.

Training should cover technical skills (using XAI tools), regulatory requirements, ethical considerations, and communication skills.

13. TEVV: Your Safety Harness for Mission-Critical AI

Test, Evaluation, Verification & Validation (TEVV) needs specific, measurable acceptance criteria, for example:

- Explanation fidelity: >90% accuracy in reflecting true model behavior

- Robustness testing: Explanations remain stable under ±10% input perturbations

- User comprehension: >80% correct answers on understanding tests

- Regulatory alignment: Documented traceability to the trustworthiness characteristics in NIST AI 100-1

Draw on NIST AI 100-1 and ISO/IEC 23894:2023 (AI risk management guidance) when designing detailed testing procedures.
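The robustness criterion is straightforward to automate. This sketch perturbs every input feature by a random factor within ±10%, re-runs the explainer, and counts the trial as stable when the new attributions stay correlated with the originals. The explainer (coefficient times value for a hypothetical linear model), the 0.85 correlation floor, and the trial count are assumptions, not values from NIST AI 100-1 or ISO/IEC 23894.

```python
import math
import random

COEFFICIENTS = [0.5, 0.1, -0.002]  # hypothetical linear model


def explain(x):
    """Toy explainer: per-feature contribution = coefficient * value."""
    return [c * v for c, v in zip(COEFFICIENTS, x)]


def pearson(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def robustness_test(explainer, instance, trials=200, max_change=0.10, min_corr=0.85, seed=0):
    """Fraction of random ±max_change perturbations whose explanation stays correlated."""
    rng = random.Random(seed)
    reference = explainer(instance)
    stable = 0
    for _ in range(trials):
        noisy = [v * (1 + rng.uniform(-max_change, max_change)) for v in instance]
        if pearson(reference, explainer(noisy)) >= min_corr:
            stable += 1
    return stable / trials


pass_rate = robustness_test(explain, [0.4, 3, 50])
print(f"stable under ±10% perturbation in {pass_rate:.0%} of trials")
```

A TEVV gate would then compare `pass_rate` against whatever acceptance level the team has documented for the system.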

Understanding XAI Limitations: Critical Considerations for Implementation

These thirteen capabilities give you a solid framework for explainable AI. But let’s be honest about what breaks when you actually build these systems.

Performance takes a hit. Glass-box models can lose 5-15% accuracy compared to their black-box cousins on some problems, even if EBMs come within 1-2% on many tabular datasets. That’s not a rounding error. Real-time explanations can add 50-200ms per prediction. For a credit card fraud detector processing 10,000 transactions per second, that’s a non-starter.
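A quick capacity check shows why, assuming every transaction gets an explanation and each explanation occupies one CPU core for its full 50-200ms:

```latex
10{,}000\ \frac{\text{transactions}}{\text{s}} \times (0.05\ \text{to}\ 0.2)\ \frac{\text{core-seconds}}{\text{transaction}} = 500\ \text{to}\ 2{,}000\ \text{cores}
```

That capacity goes to explanations alone, on top of scoring, and if explanations run inline, each transaction’s response time also grows by 50-200ms.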

Explanations lie sometimes. Common SHAP estimators such as KernelSHAP assume features are independent. In real data they rarely are. Saliency maps have “explained” COVID-19 diagnoses by highlighting text markers at the edges of chest X-rays rather than the lungs (DeGrave et al., Nature Machine Intelligence, 2021). Researchers also showed that shifting the input by a constant, compensated so the model’s predictions stay identical, can substantially change the attributions several saliency methods produce (Kindermans et al., “The (Un)reliability of Saliency Methods,” 2019). Makes you wonder what we’re actually explaining.

People don’t get it. Researchers at the University of Michigan and Microsoft Research studied how data scientists use interpretability tools such as GAM visualizations and SHAP (Kaur et al., CHI 2020) and found that many over-trusted and misread the outputs. One Fortune 500 company spent $2M on explainability tools. Their loan officers still couldn’t reliably interpret the outputs. They went back to gut instinct.

Technical walls hit hard. Your random forest with 50,000 features? Good luck explaining that. Sequential decisions (like autonomous driving) need temporal explanations we can’t reliably build yet. Try explaining why a vision model sees a cat AND understands the word “cat” in the same decision. No unified framework covers these cases, and the field still hasn’t agreed on what “interpretability” even means (Lipton, “The Mythos of Model Interpretability,” ACM Queue, 2018).

So what do you actually do? Run parallel systems. Use explainable models for regulators and customer complaints. Keep your black-box profit engine running for everything else. A major bank I worked with does exactly this. Their EBM satisfies auditors. Their neural net makes money. Both models see the same data, make similar decisions, but serve different masters.

Test whether explanations actually help. Many don’t. In interviews with organizations deploying explainability, Bhatt et al. found that explanations mostly served machine learning engineers debugging their own models rather than the end users they were supposedly built for (“Explainable Machine Learning in Deployment,” FAT* 2020). If your explanations don’t change user behavior or improve outcomes, you’re just burning compute cycles for show.
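One way to run that test: randomly assign reviewers to work with or without explanations, then compare how often each group reaches the correct decision. The sketch below is a standard two-sided two-proportion z-test; the counts in the example are invented for illustration, and a real study also needs randomized assignment and enough cases per group.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two success rates."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups are all-correct or all-wrong; the test is undefined")
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Hypothetical study: correct decisions by reviewers with vs. without explanations.
z, p = two_proportion_z_test(successes_a=180, n_a=200, successes_b=150, n_b=200)
print(f"z = {z:.2f}, p = {p:.5f}")
```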

Prioritizing Your Implementation

Start immediately (0-3 months):

- Model Cards and documentation

- Basic post-hoc explanations (LIME or SHAP)

- Documentation inventory system

Add critical capabilities (3-6 months):

- Continuous monitoring infrastructure

- Stakeholder engagement processes

- TEVV for high-risk systems

Build maturity (6-12 months):

- Integrated XAI platforms

- Organization-wide training programs

- Automated compliance reporting

The Business Case: ROI of Explainable AI

IBM’s Cost of a Data Breach Report 2024: Organizations that used security AI and automation extensively saved $2.22M per breach on average compared with those that did not.

McKinsey Global AI Survey 2023 found companies with explainable AI achieve:

- 20% higher adoption rates

- 35% faster time to production

- 2.5x more likely to scale AI beyond pilots

Regulatory risks keep growing. GDPR fines reach up to €20 million or 4% of global annual turnover, whichever is higher. EU AI Act fines go up to €35 million or 7% of global annual turnover for the most serious violations. Sector-specific penalties add more exposure.

Your Next Steps

First, assess your current state using the thirteen practices as a checklist.

Then implement Model Cards for your highest-risk model using available templates.

Deploy basic monitoring by logging model decisions and explanation metrics.

Finally, build organizational capability through explainability training for AI teams.

Building explainable AI creates systems humans can trust, regulators can audit, and organizations can improve. The tools exist. The standards are clear. The business case is proven.

You can implement these practices proactively or wait for regulatory pressure. Given the cost difference and risk profile, that’s not much of a choice.

Technical Glossary for Explainable AI (XAI)

Explainability (XAI)

- Definition: The ability of an AI system to provide understandable reasons for its decisions and predictions, enabling humans to interpret and trust outputs.

- Source: NIST AI RMF (NIST AI 100-1).

Local Interpretable Model-Agnostic Explanations (LIME)

- Definition: A post-hoc explanation method providing interpretability by perturbing individual inputs and analyzing changes in the output to produce a locally faithful, simplified explanation.

- Key Reference: Ribeiro, Singh, and Guestrin (2016). “Why Should I Trust You?: Explaining the Predictions of Any Classifier.”

SHapley Additive exPlanations (SHAP)

- Definition: A unified measure of feature importance based on cooperative game theory, offering local (individual prediction) and global (model-wide) explanations.

- Key Reference: Lundberg and Lee (2017). “A Unified Approach to Interpreting Model Predictions.”

Explainable Boosting Machines (EBM)

- Definition: A type of “glass-box” machine learning model that achieves high accuracy with inherent interpretability by using additive contributions of individual features.

- Key Reference: Nori et al. (2019). “InterpretML: A Unified Framework for Machine Learning Interpretability.”

Glass-box Model

- Definition: AI models designed with transparency and inherent interpretability, allowing humans to clearly understand the internal decision-making process without additional interpretive tools.

- Example: Decision trees, Explainable Boosting Machines (EBMs).

Black-box Model

- Definition: AI models whose internal workings are opaque or difficult to interpret directly, requiring external explainability methods to understand decision rationale.

- Example: Deep neural networks, complex ensemble methods.

Saliency Maps

- Definition: Visual representations highlighting areas in the input data (images, text) most influential in a model’s decision-making, typically used in computer vision and natural language processing.

- Key Reference: Simonyan, Vedaldi, and Zisserman (2013). “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps.”

Gradient-weighted Class Activation Mapping (Grad-CAM)

- Definition: An advanced visualization technique showing where deep neural networks focus when making predictions by computing gradients of the target class.

- Key Reference: Selvaraju et al. (2017). “Grad-CAM: Visual Explanations from Deep Networks.”

Attention Visualizers

- Definition: Tools that visualize attention weights within language models, revealing which input tokens (words, phrases) significantly influence output decisions, widely used in natural language processing models.

- Common Use: Transformer-based models, language translation systems.

Model Cards

- Definition: Structured documents summarizing key information about an AI model, including purpose, limitations, performance metrics, training data, and ethical considerations, facilitating transparency and accountability.

- Key Reference: Mitchell et al. (2019). “Model Cards for Model Reporting.”

Data Sheets

- Definition: Documentation describing the characteristics, provenance, limitations, and intended uses of datasets, enabling transparent evaluation of data quality and potential biases.

- Key Reference: Gebru et al. (2021). “Datasheets for Datasets.”

Continuous Integration / Continuous Deployment (CI/CD)

- Definition: Automated software development processes enabling frequent updates, rapid testing, and efficient deployment, often used to automate documentation generation and monitoring in AI systems.

- Common Tools: GitHub Actions, Jenkins.

Technical Documentation (ISO/IEC 42001:2023 Clause 7.5)

- Definition: Documented information required by ISO/IEC 42001, covering the AI system lifecycle, including design choices, training methods, test results, known biases, and maintenance procedures.

- Source: ISO/IEC 42001:2023 standard.

Transparency-first Design

- Definition: Approach emphasizing explainability and transparency from the initial stages of model development, ensuring stakeholders clearly understand AI decision-making from the outset.

- Reference Standard: NIST IR 8312.

Asset Registry

- Definition: Comprehensive inventory of AI system components and artifacts (datasets, models, documentation, logs) used for version control, auditability, and compliance verification.

- Audit Requirement: GAO-21-519SP Accountability Framework.

Test, Evaluation, Verification & Validation (TEVV)

- Definition: Structured approach comprising testing, evaluating performance, verifying system correctness, and validating that systems meet intended purposes and regulatory requirements.

- Standard Reference: NIST AI 100-1; ISO/IEC 23894:2023.

Explanation Stability

- Definition: Consistency of AI explanations over time, ensuring repeated runs under similar conditions yield comparable explanatory results.

- Metric: Typically measured by correlation or similarity thresholds (>0.85 correlation).

Feature Importance Drift

- Definition: Changes in the ranking or magnitude of features deemed significant by an AI model, tracked to identify shifting decision factors potentially affecting fairness or accuracy.

- Audit Metric: Top-5 decision factor changes week-over-week.

Explanation-Prediction Fidelity

- Definition: Degree to which explanations accurately reflect the underlying decision logic and behavior of the AI model.

- Benchmark: Often quantified as a percentage (>95% alignment desirable).

Progressive Disclosure

- Definition: Interface design approach revealing AI explanations incrementally, starting from simple explanations for general users and progressively providing detailed information for advanced users upon request.

- Common Practice: Recommended by NIST and ISO standards for user comprehension.

Stakeholder Engagement (ISO/IEC 42001 Clause 4.2)

- Definition: Documented process of involving affected stakeholders (users, regulators, consumers) throughout the AI development lifecycle to ensure explanations meet diverse needs and foster trust.

- Documentation Requirement: Includes meeting minutes, feedback loops, signed validations.

Recourse Mechanisms

- Definition: Clear, actionable procedures enabling users to challenge, appeal, or inquire about AI system decisions, critical for regulatory compliance (e.g., EU AI Act Article 86 right to explanation; GDPR Article 22).

- Common Practice: User-accessible feedback loops, transparent communication channels.


References for Audit Standards and Regulatory Documents:

- EU AI Act: Regulation (EU) 2024/1689 (Article 13, Transparency and provision of information to deployers)

- NIST AI RMF: NIST AI 100-1 AI Risk Management Framework

- ISO/IEC 42001:2023: International standard for AI Management Systems

- GAO-21-519SP: Government Accountability Office AI Accountability Framework

- IEEE 7001-2021: IEEE Standard for Transparency of Autonomous Systems

- OECD AI Principles (2019); OECD (2021), Tools for trustworthy AI

- NISTIR 8312: Four Principles of Explainable Artificial Intelligence