AI Governance Hub Guide & Resources | Tech Jacks


AI Governance HUB

From Strategy to Implementation and Management

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Hello everyone. Help us grow our community by sharing and/or supporting us on other platforms. This allows us to show that what we are doing is valued. It also helps us plan and allocate resources to improve our work, because we then know others are interested and supportive.


Welcome to our AI Governance Hub. We’re building resources for people navigating AI governance – whether you’re an executive trying to figure out AI strategy, a compliance officer dealing with new regulations, or a technical leader wondering how to make governance frameworks actually work in practice.

AI governance can feel overwhelming, but you’re not alone in figuring it out. We’re documenting what we learn: step-by-step guides for setting up governance committees, detailed walkthroughs of regulatory requirements like NIST AI RMF and the EU AI Act, and real examples from organizations implementing these frameworks.

Everything we create gets shared here. Start with our 8-stage committee setup guide, dive into implementation details, or browse case studies. We’re all learning as regulations evolve and organizations adapt.

Jump in wherever makes sense for your situation. Let’s figure out responsible AI governance together.

Additional Resources:

AI Governance Career Hub

AI Governance Committee Hub

AI Data Governance Hub

Getting Started With AI Governance: The Beginning

AI Governance Committee Implementation: 8 Basic Stages to Mitigate Risks

AI Use Case Inventories: 8 Key Components for Strong AI Tracking

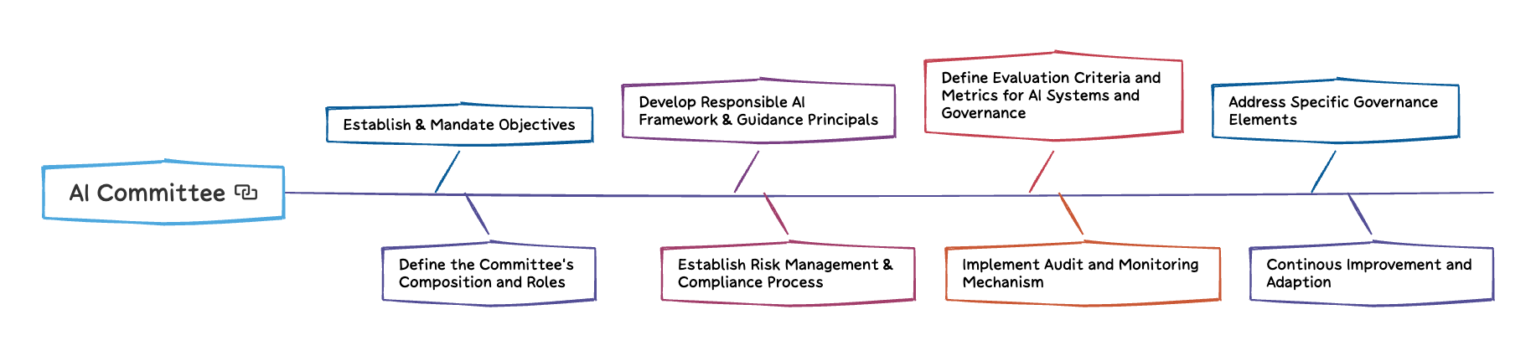

The 8 Critical Stages of AI Governance

In our article AI Governance: 8 Defined Stages to Mitigate Risks, we discussed 8 stages that organizations can implement to establish a robust AI Governance Committee tasked with providing AI governance over 64 sub-activities:

Establish & Mandate Objectives

Define the Committee’s Composition and Roles

Develop Responsible AI Framework & Guidance Principles (AI Bias, AI Acceptable Use Policy)

Establish Risk Management & Compliance Processes

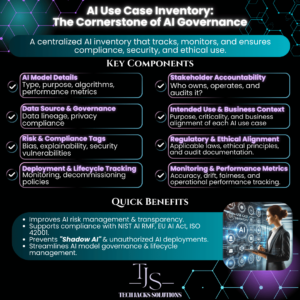

Define Evaluation Criteria and Metrics for AI Systems and Governance (AI Use Case Inventories Tracker)

Implement Audit and Monitoring Mechanisms

Address Specific Governance Elements

Continuous Improvement and Adaptation

These AI Governance Committee stages establish the visibility and activities needed to rein in AI usage and AI risk. They ensure that the committee has a comprehensive understanding of how AI is being managed and used within the organization. Our insights are always drawn from sources such as the EU AI Act, ISO/IEC 42001, NIST AI RMF, OECD, and CSA. We don’t reinvent the wheel.

Quick Start Recommendations:

Often, organizations cannot start their AI governance journey with a formal Governance Charter and AI Governance Committee. When AI is already in use (and safe, responsible AI is paramount), begin by immediately performing an inventory to gain visibility into AI usage within the organization. Our articles below provide guidance on AI inventory and use case tracking:

- Why Your Organization Needs a Comprehensive AI Use Case Tracker (And What to Track)

- AI Use Case Inventories: 8 Key Components for Strong AI Tracking
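As a rough illustration of what a first-pass inventory could look like, here is a minimal Python sketch. The field names (`owner`, `tool_or_model`, `data_categories`, `approved`, `last_reviewed`) and the `needs_attention` helper are assumptions made for this example, not the 8 components from the linked article, so adapt them to your own tracker.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class AIUseCase:
    """One row in a hypothetical AI use case inventory (field names are illustrative)."""
    name: str
    owner: str                      # team or person accountable for the use case
    tool_or_model: str              # what is actually being used
    data_categories: List[str] = field(default_factory=list)
    approved: bool = False          # has anyone formally signed off?
    last_reviewed: Optional[date] = None


def needs_attention(use_cases: List[AIUseCase]) -> List[str]:
    """Return names of use cases that are unapproved or have never been reviewed."""
    return [u.name for u in use_cases if not u.approved or u.last_reviewed is None]


# Hypothetical entries for illustration only.
inventory = [
    AIUseCase("Contract review", "Legal", "ChatGPT", ["contracts"]),
    AIUseCase("Campaign copy", "Marketing", "Generative text tool", ["public"],
              approved=True, last_reviewed=date(2025, 1, 15)),
]

print(needs_attention(inventory))
```

Even a list this small gives a committee (or whoever is acting as one) a concrete starting point: which uses exist, who owns them, and which have never been looked at.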

Next – Define Your Governance Structure & Early Objectives:

- AI Governance Charter – A Great Investment #1

- AI Governance Charter: 5 Sturdy Pillars to Consider for Absolute Control

Review our AI Governance: 8 Defined Stages to Mitigate Risks

This article helps define and set the stage for more specific AI Risk Management processes that will be needed for Responsible, Ethical and Safe AI Governance.

Define an Acceptable AI Use Policy:

- AI Acceptable Use Policy: Proven Actions for Effective Implementation

- AI Use Case Policy: 5 Essential Steps for Success

Adopt AI Lifecycle Framework

- The 7-Stage AI Lifecycle Framework: Your Playbook for Responsible and Profitable AI

- AI Lifecycle: Planning & Design – Objectives & Problem Definition

Articles & Guidance: AI Governance Planning - Strategy

Priority: AI Use Case Tracker

AI Governance Solutions by Role

C-Suite Executives

AI Leadership for the C-Suite: Navigating Governance in a Transformative Era

Boardroom conversations have shifted from “Should we adopt AI?” to “How do we use it responsibly while staying ahead?” As a C-suite leader, the pressure is real. While 78% of businesses use AI across functions, only 28% report active CEO involvement in shaping AI strategy. This leadership gap can lead to missed opportunities and underperformance.

McKinsey research shows companies with CEOs directly involved in AI governance achieve stronger EBIT results. Without solid frameworks, risks like regulatory breaches, operational breakdowns, and reputational harm increase significantly. The stakes couldn’t be higher.

Governing AI doesn’t have to feel overwhelming. Reframing AI governance as an enabler of innovation rather than a compliance burden can make all the difference. Clear frameworks and actionable roadmaps can help scale AI adoption, keep risks in check, and empower you to lead with confidence.

Whether building an AI governance council or scaling an enterprise strategy, it’s about asking the right questions and using the right tools. With the right approach, AI can drive transformation while protecting your organization. Let’s make that vision a reality.

Compliance Officers

You’ve probably seen this before. A shiny new tech comes along, promising to change everything, and compliance is left scrambling to figure out the risks nobody thought about. But AI? It’s different. It’s already here, embedded in how your organization works, whether you’re ready for it or not.

Whether it’s ready or not.

Think about it. Someone’s using ChatGPT to review contracts. Marketing is pushing out AI-generated campaigns. IT just rolled out a few “AI-powered” tools without looping anyone in.

The rules you’re trying to follow seem to change every other month, and the AI governance advice out there? It doesn’t feel connected to the day-to-day realities of compliance work. It’s one thing to talk about risk in theory. It’s another to sit in front of an auditor and explain what went wrong.

We get it. It’s frustrating. That’s why we focus on creating practical frameworks you can actually use. No fluff, no endless theory; just tools and methods that keep up with the speed of the tech. Because when someone’s asking tough questions, a good plan beats good intentions every time.

European Union Artificial Intelligence Act (EU AI Act)

Description: The EU AI Act is the first comprehensive legal framework on AI, aiming to ensure that AI systems used in the EU are safe and respect existing laws on fundamental rights and values.

Official EU Policy Page: Digital Strategy Europe

Interactive Version: Artificial Intelligence Act

Official Journal Text: EUR-Lex, Regulation (EU) 2024/1689

NIST AI Risk Management Framework (AI RMF 1.0)

Description: A voluntary framework developed by the National Institute of Standards and Technology (NIST) to help organizations manage risks associated with AI systems. It emphasizes trustworthiness, fairness, and transparency in AI.

Official Site: NIST

AI RMF Playbook: NIST

NIST AI Resource Center: NIST AI Resource Center

ISO/IEC 42001:2023 – AI Management System Standard

OECD AI Principles

IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

Description: This initiative by IEEE provides guidelines and standards to ensure ethical considerations are integrated into the design and development of autonomous and intelligent systems.

Initiative Overview: IEEE Standards Association

Ethically Aligned Design Document: IEEE Standards Association

- AI Now Institute – Algorithmic Accountability Policy Toolkit
  https://ainowinstitute.org/aap-toolkit.pdf

- Canada Directive on Automated Decision-Making
  https://www.canada.ca/en/treasury-board-secretariat/services/information-technology/artificial-intelligence/algorithmic-impact-assessment.html

- Cloud Security Alliance – AI Governance & Compliance Working Group
  https://cloudsecurityalliance.org/research/working-groups/ai-governance-compliance

- CSA – AI Model Risk Management Framework (2024)
  https://cloudsecurityalliance.org/artifacts/ai-model-risk-management-framework

- CSA – AI Organizational Responsibilities: Core Security Responsibilities
  https://cloudsecurityalliance.org/artifacts/ai-organizational-responsibilities-core-security-responsibilities

- CSA – Don’t Panic! Getting Real About AI Governance
  https://cloudsecurityalliance.org/artifacts/dont-panic-getting-real-about-ai-governance

- CSA – AI Risk Management: Thinking Beyond Regulatory Boundaries
  https://cloudsecurityalliance.org/artifacts/ai-risk-management-thinking-beyond-regulatory-boundaries

- EU AI Act – Interactive Portal
  https://artificialintelligenceact.eu/

- EU AI Act – Official Journal Text
  https://eur-lex.europa.eu/eli/reg/2024/1689/oj

- EU AI Act – Policy Overview
  https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- GAO – AI Accountability Framework
  https://www.gao.gov/products/gao-21-519sp

- IEEE – Ethically Aligned Design (EAD) v2
  https://standards.ieee.org/wp-content/uploads/import/documents/other/ead1e.pdf

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems
  https://standards.ieee.org/industry-connections/ec/autonomous-systems/

- IMDA – Singapore Model AI Governance Framework
  https://www.imda.gov.sg/resources/press-releases-factsheets-and-speeches/factsheets/2020/model-ai-governance-framework

- ISO – AI Management Systems Overview
  https://www.iso.org/artificial-intelligence/ai-management-systems.html

- ISO/IEC 23894:2023 – AI Risk Management Standard
  https://www.iso.org/standard/77304.html

- ISO/IEC 42001 – AI Management System Standard
  https://www.iso.org/standard/81230.html

- ISO/IEC JTC 1/SC 42 – AI Standards Committee
  https://www.iso.org/committee/6794475.html

- NIST – AI Resource Center
  https://airc.nist.gov/

- NIST – AI RMF Playbook
  https://www.nist.gov/itl/ai-risk-management-framework/nist-ai-rmf-playbook

- NIST – AI Risk Management Framework (AI RMF 1.0)
  https://www.nist.gov/itl/ai-risk-management-framework

- NIST SP 1270 – Managing Bias in AI
  https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.1270.pdf

- NIST SP 1271 – Towards a Standard for AI Bias
  https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.1271.pdf

- NIST SP 1272 – Proposal for Identifying AI Bias
  https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.1272.pdf

- OECD – AI Policy Observatory
  https://oecd.ai/

- OECD – State of Implementation of AI Principles
  https://www.oecd.org/publications/the-state-of-implementation-of-the-oecd-ai-principles-four-years-on-835641c9-en.htm

- OECD – Tools for Trustworthy AI
  https://www.oecd.org/publications/tools-for-trustworthy-ai-008232ec-en.htm

- OECD AI Principles
  https://oecd.ai/en/ai-principles

- UK CDEI – AI Assurance Framework
  https://www.gov.uk/government/publications/the-cdei-ai-assurance-roadmap

- UNESCO – Recommendation on the Ethics of AI
  https://unesdoc.unesco.org/ark:/48223/pf0000381137

IT Leaders & Data Scientists

Your employees are already using AI tools you don’t know about.

They’re feeding company data into ChatGPT, running models through third-party APIs, and building “quick experiments” that somehow ended up in production.

Meanwhile, you’re getting blamed when AI systems mysteriously start performing worse, and compliance is asking for explanations about models you didn’t even know existed. The last thing you need is more governance overhead that slows down legitimate AI work.

You need technical solutions that give you visibility into what’s actually running, monitoring that catches problems before users notice, and frameworks that help your data scientists build more reliable systems without drowning in paperwork.

This isn’t about committee meetings or risk assessments. It’s about tools and processes that make your AI infrastructure more observable, more reliable, and more secure without making your team’s job harder.
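To make "monitoring that catches problems before users notice" a little more concrete, here is a minimal Python sketch of one possible check: compare a model's recent scores against a baseline and flag a drop beyond a tolerance. The `degraded` function, the `max_drop` threshold of 0.05, and the sample numbers are all assumptions for illustration; a real deployment would choose its own metric, window, and alerting path.

```python
from statistics import mean


def degraded(baseline_scores, recent_scores, max_drop=0.05):
    """Return True when the recent mean score falls more than max_drop below the baseline mean."""
    if not baseline_scores or not recent_scores:
        raise ValueError("both score lists must be non-empty")
    return mean(baseline_scores) - mean(recent_scores) > max_drop


# Hypothetical weekly accuracy figures, for illustration only.
baseline = [0.91, 0.90, 0.92, 0.89]   # measured around launch
recent = [0.84, 0.82, 0.85, 0.83]     # measured over the last few weeks

if degraded(baseline, recent):
    print("ALERT: model performance dropped beyond the allowed threshold")
```

A check like this is deliberately simple. Its value comes from running on a schedule against every model in the inventory, so that a gradual decline is noticed by the team before it is noticed by users.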

Legal & Risk Teams

When your AI system makes a discriminatory hiring decision, who gets sued?

The CEO who approved the project? The data scientist who built the model? The vendor who provided the algorithm?

The honest answer is probably all of them, and good luck explaining to a jury how a neural network reached its conclusion. Legal precedent for AI liability is practically nonexistent, insurance carriers are still figuring out what they’ll actually cover, and your existing risk frameworks weren’t designed for systems that learn and change after deployment.

Every department wants to deploy AI faster, and they’re asking you questions you can’t answer with confidence.

You need more than generic “AI ethics policies” written by consultants who’ve never defended an AI-related lawsuit. You need practical frameworks (AI Strategy) for documenting decisions, clear accountability structures that will hold up in court, and risk management approaches that translate AI technical concepts into language that judges, regulators, and insurance adjusters actually understand.

Latest AI Governance Insights

Staying on top of the latest AI developments can feel overwhelming with so much happening across platforms like OpenAI, MIT Technology Review, and arXiv.

That’s why we’ve created an automated feed that pulls together relevant updates and delivers them straight to your AI governance hub, saving you from jumping between multiple sites.

Having this information right next to your governance frameworks and implementation guides creates a centralized resource for strategic planning and staying current with the evolving AI landscape. It’s like having a compass that helps you navigate AI complexities while keeping everything in context with your governance work.

AI Governance Resource Hub

Visit our Template Marketplace: Documentation Template Marketplace

The marketplace will house templates for:

- Policies

- Procedures

- Evaluations

- Assessments

- Checklists

These templates cover AI Strategy, AI Planning, AI Risk Management, AI Evaluations, AI Assessments, and more.

Derrick D Jackson

I’m the Founder of Tech Jacks Solutions and Senior Director of Cloud Security Architecture & Risk (CISSP, CRISC, CCSP), with 20+ years helping organizations, from SMBs to Fortune 500, secure their IT, navigate compliance frameworks, and build responsible AI programs.
