Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: September 10th, 2025

AI Governance Committee Implementation: 8 Basic Stages to Mitigate Risks


Pressed For Time?

Review or Download our 2-3 min Quick Slides or the 5-7 min Article Insights to gain knowledge with the time you have!


EU AI Act Compliance: The New Regulatory Reality

The European Union’s AI Act, adopted in 2024 with obligations phasing in through 2026 and beyond, fundamentally changes how organizations must approach AI governance. With potential fines reaching 7% of global annual turnover for the most serious violations, establishing a formal AI Governance Committee isn’t just best practice; it becomes a matter of regulatory survival.

For organizations deploying “high-risk” AI systems in EU markets, Article 9 (Risk Management System), Article 17 (Quality Management System), and Article 72 (Post-Market Monitoring) impose non-negotiable obligations. Your AI Governance Committee becomes the central body responsible for implementing these requirements and avoiding severe financial penalties.

Unleashing AI Potential Through Better Governance

Building an AI Governance Committee doesn’t have to be complicated. You need eight strategic stages that align with global regulatory frameworks.

Most companies struggle with AI oversight because they treat it like a technology problem. It’s not. It’s a business problem that needs people from legal, compliance, ethics, security, data science, and operations working together. Your AI Governance Committee becomes an early warning system for bias issues, privacy violations, and security gaps while ensuring compliance with NIST AI RMF guidelines, EU AI Act requirements, and ISO/IEC 42001 standards.

NIST, GAO, and CSA publish frameworks that emphasize formalizing AI oversight. Companies without an AI Governance Committee face greater exposure to regulatory and reputational risks. Those with established committees can identify and address issues during regular planning meetings.

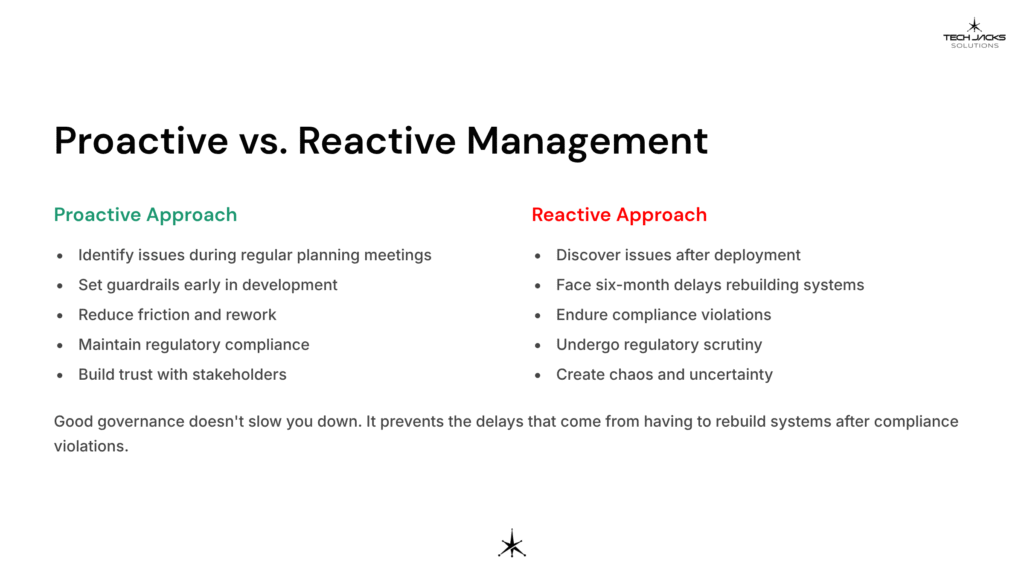

The difference comes down to proactive versus reactive management.

A well-structured AI Governance Committee with board-level authority and a defined AI lifecycle framework speeds up value creation. Setting guardrails early (especially around model training, deployment, and monitoring) reduces friction, cuts rework, and lets teams experiment with confidence while maintaining regulatory compliance.

Committee Authority Requirements: Your committee must have substantive decision-making power, including the authority to approve, modify, or terminate AI projects. Without this authority, your governance becomes performative rather than protective.

Good governance doesn’t slow you down. It prevents the six-month delays that come from having to rebuild systems after compliance violations or regulatory scrutiny.

AI offers competitive advantages, but moving too fast creates regulatory exposure. Racing ahead without oversight is like speeding past a police station. You might gain ground temporarily, but the penalties catch up. An AI Governance Committee lets you move quickly while managing risk. You build trust instead of chaos.

AI Governance Committee Implementation Timeline: 120-Day Rollout (30% Buffer Included)

Days 1-40: Foundation & Mandate (31 days + 30% buffer = ~40 days)

- Secure C-suite and board approval

- Draft governance charter with legal authority

- Assemble cross-functional committee

- Conduct initial AI system inventory

- Begin stakeholder training

Days 41-80: Framework Development (31 days + 30% buffer = ~40 days)

- Develop responsible AI policies

- Establish risk assessment methodologies

- Create compliance matrices for EU AI Act, NIST AI RMF

- Pilot governance processes on 1-2 systems

- Define metrics and KPIs

Days 81-120: Operationalization (31 days + 30% buffer = ~40 days)

- Launch formal review processes

- Implement monitoring mechanisms

- Begin regular audit cycles

- Establish reporting to leadership

- Document lessons learned and iterate
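The phase lengths above follow from simple arithmetic: a 31-day base estimate plus a 30% buffer gives roughly 40 days per phase, and three phases laid end to end give 120 days. A minimal Python sketch of that calculation follows; the function and variable names are hypothetical and exist only for illustration.

```python
# Illustrative only: reproduces the timeline arithmetic stated above.
BASE_DAYS = 31   # unbuffered estimate per phase (from the timeline)
BUFFER = 0.30    # 30% schedule buffer (from the timeline)
PHASES = ["Foundation & Mandate", "Framework Development", "Operationalization"]


def buffered_days(base_days: int, buffer: float) -> int:
    """Return the phase length after adding the buffer, rounded to whole days."""
    return round(base_days * (1 + buffer))


def build_schedule(phases, base_days=BASE_DAYS, buffer=BUFFER):
    """Return (phase name, start day, end day) tuples laid end to end."""
    length = buffered_days(base_days, buffer)
    schedule = []
    start = 1
    for name in phases:
        end = start + length - 1
        schedule.append((name, start, end))
        start = end + 1
    return schedule


schedule = build_schedule(PHASES)
for name, start, end in schedule:
    print(f"Days {start}-{end}: {name}")
```

Changing `BASE_DAYS` or `BUFFER` shows how sensitive the overall rollout length is to the initial estimate.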

The 8 Strategic Stages: Framework-Aligned Implementation

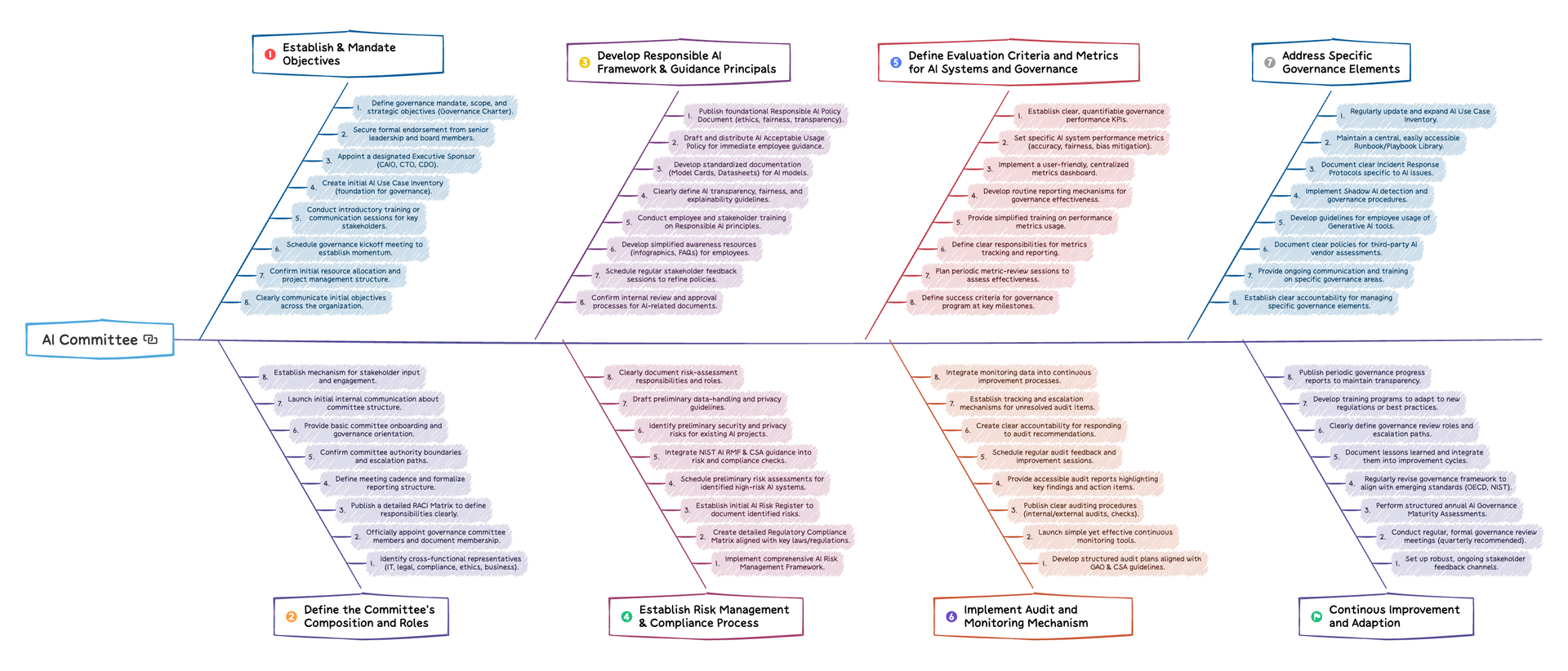

Stage 1: Establish the Mandate and Objectives

Primary Objectives: The first and most important step is to define the purpose, scope, and objectives of the AI Governance Committee. This means understanding why the committee is being formed and what it is expected to achieve in relation to the organization’s AI initiatives.

Critical Success Factors:

- Obtain formal mandate from board of directors and C-suite leadership

- Grant the committee authority to approve, modify, or terminate AI projects, as industry best practices recommend

- Establish direct reporting lines to executive leadership

- Define clear goals and objectives for AI systems that inform committee priorities

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | GOVERN-1: Policies and procedures | Establish transparent AI risk management policies with clear organizational accountability |
| EU AI Act | Article 17: Quality Management System | Document strategy for regulatory compliance and establish management responsibility framework |
| ISO/IEC 42001 | Clause 5: Leadership | Demonstrate top management commitment to AIMS and establish AI policy with clear objectives |

Key Activities:

- Establish governance mandate, scope, and strategic objectives (Governance Charter)

- Secure formal endorsement from senior leadership and board members

- Appoint designated Executive Sponsor (CAIO, CTO, CDO) with budget authority

- Create initial AI Use Case Inventory (foundation for governance)

- Conduct introductory training sessions for key stakeholders

- Schedule governance kickoff meeting to establish momentum

- Confirm resource allocation and project management structure

- Clearly communicate objectives across the organization

Stage 2: Define the Committee’s Composition and Roles

Primary Objectives: Identify and appoint members to the AI Governance Committee, ensuring multidisciplinary representation from relevant departments. The committee should include technical teams (AI Development, IT Security, Data Science), business units, legal and compliance, risk management, ethics experts, and executive leadership.

Critical Success Factors:

- Cross-functional representation with appropriate expertise mix

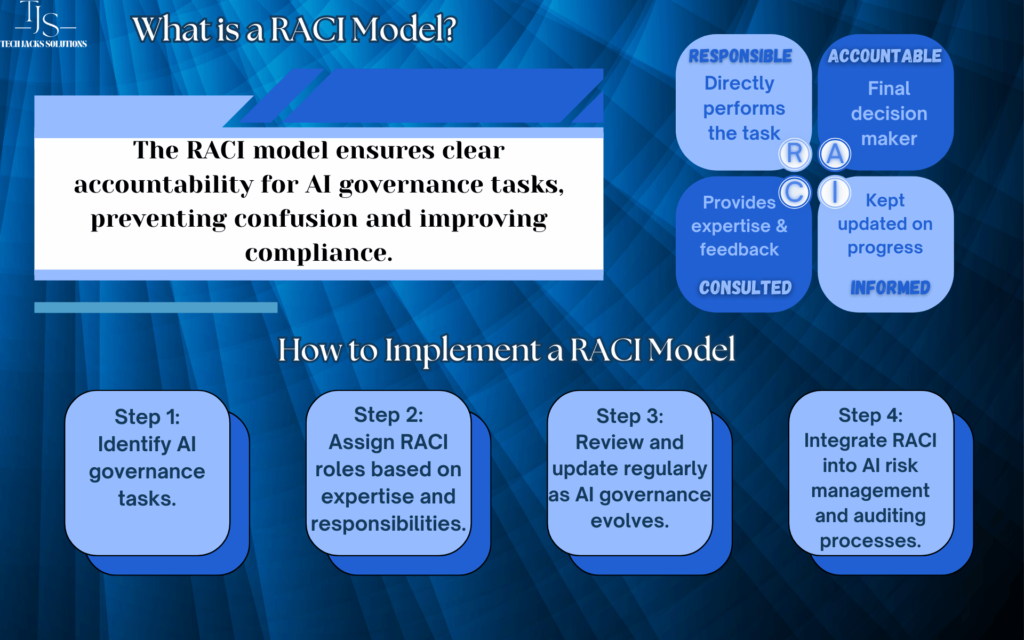

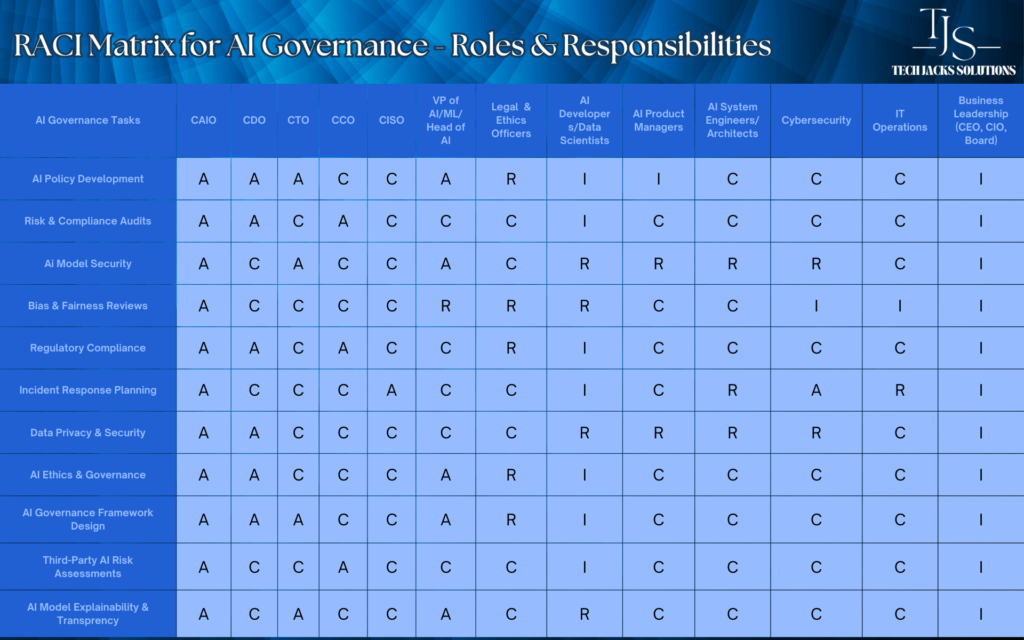

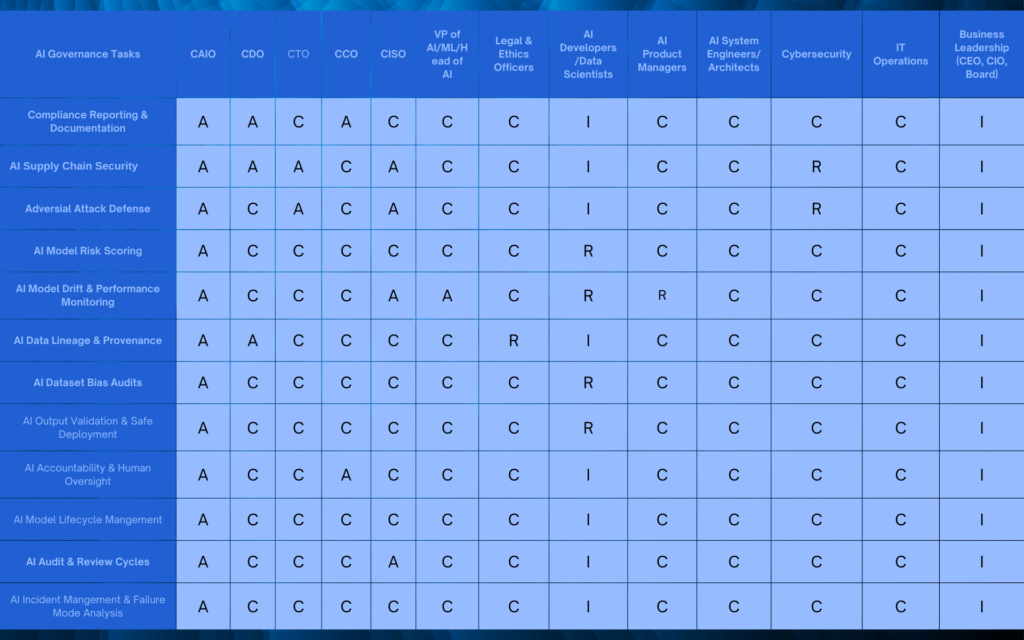

- Clear accountability through RACI matrix implementation

- Regular meeting cadence with formal documentation

- Escalation paths to board-level oversight

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | GOVERN-2: Accountability structures | Define clear roles, responsibilities, and appropriate human involvement in AI system oversight |
| EU AI Act | Article 17: QMS Personnel | Ensure committee has competent personnel with defined responsibilities and authority |
| ISO/IEC 42001 | Clause 5.3: Organizational roles | Assign responsibilities and authorities for AIMS roles relevant to AI governance |

Key Activities:

- Identify cross-functional representatives (IT, legal, compliance, ethics, business)

- Officially appoint governance committee members and document membership

- Publish detailed RACI Matrix to define responsibilities clearly

- Define meeting cadence and formalize reporting structure

- Confirm committee authority boundaries and escalation paths

- Provide comprehensive committee onboarding and governance orientation

- Launch internal communication about committee structure

- Establish stakeholder input and engagement mechanisms
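A RACI matrix is easier to keep honest when it is stored as data and checked automatically. The sketch below assumes the common RACI convention that each activity has exactly one Accountable role and at least one Responsible role; the roles, activities, and function name are hypothetical examples, not requirements of any framework cited here.

```python
# Hypothetical RACI matrix: activity -> {role: "R" | "A" | "C" | "I"}.
# Role and activity names are illustrative only.
raci = {
    "Approve AI project": {
        "Committee Chair": "A",
        "Data Science": "R",
        "Legal": "C",
        "Business Unit": "I",
    },
    "Maintain AI inventory": {
        "Committee Chair": "I",
        "IT Security": "R",
        "Data Science": "C",
    },
}


def raci_problems(matrix):
    """Return human-readable problems found in the matrix (empty if none)."""
    problems = []
    for activity, assignments in matrix.items():
        codes = list(assignments.values())
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable, found {accountable}"
            )
        if "R" not in codes:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


problems = raci_problems(raci)
for problem in problems:
    print(problem)
```

In this example the second activity is deliberately missing an Accountable role, so the check flags it before the matrix is published.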

Stage 3: Develop a Responsible AI Framework and Guiding Principles

Primary Objectives: Create and approve a comprehensive Responsible AI framework that outlines ethical principles, values, and guidelines for AI development and deployment. This framework must address fairness, bias mitigation, transparency, accountability, security, privacy, and societal impact while ensuring compliance with regulatory requirements.

Critical Success Factors:

- Alignment with OECD AI Principles and industry best practices

- Integration of EU AI Act transparency and human oversight requirements

- Measurable objectives and success criteria

- Regular review and updating mechanisms

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | GOVERN-1.2: Characteristics of trustworthy AI | Incorporate fairness, accountability, transparency, and explainability into organizational policies |
| EU AI Act | Article 13: Transparency and Information | Ensure AI systems provide clear, adequate information about capabilities, limitations, and intended purpose |
| ISO/IEC 42001 | Clause 6.2: AI objectives | Establish measurable AI objectives consistent with AI policy and applicable requirements |

Key Activities:

- Publish foundational Responsible AI Policy Document (ethics, fairness, transparency)

- Draft and distribute AI Acceptable Usage Policy for employee guidance

- Develop standardized documentation (Model Cards, Datasheets) for AI models

- Define AI transparency, fairness, and explainability guidelines

- Conduct employee and stakeholder training on Responsible AI principles

- Develop awareness resources (infographics, FAQs) for employees

- Schedule stakeholder feedback sessions to refine policies

- Confirm internal review and approval processes for AI-related documents

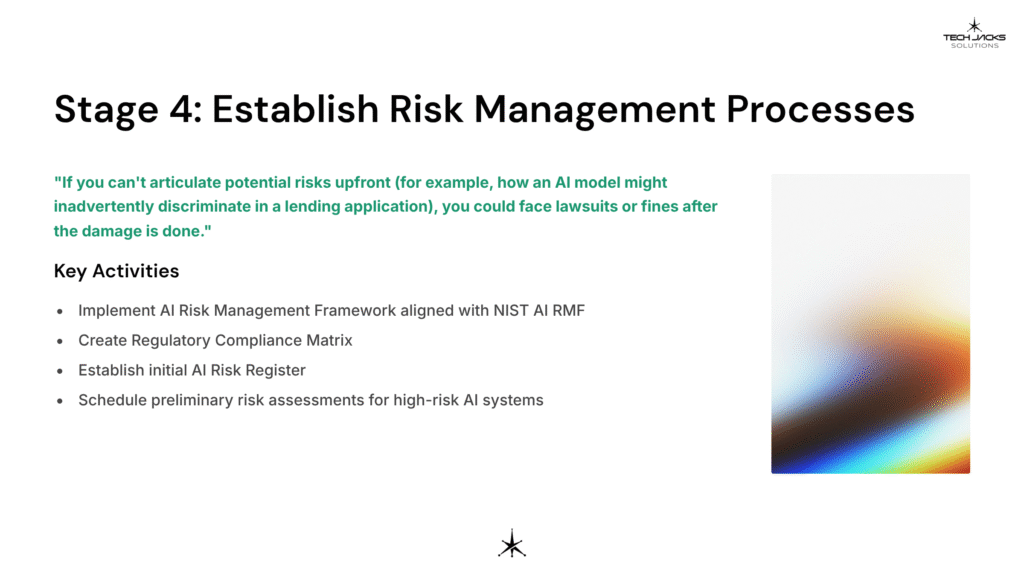

Stage 4: Establish Risk Management and Compliance Processes

Primary Objectives: Implement robust risk assessment and management methodologies specific to AI systems. This stage involves identifying, analyzing, and prioritizing AI-related risks across the AI lifecycle while ensuring compliance with EU AI Act Article 9 requirements and other regulatory frameworks.

Critical Success Factors:

- Continuous, iterative risk management throughout AI system lifecycle

- Integration with enterprise risk management (ERM) frameworks

- Documentation of risk assessment and mitigation strategies

- Regular review and updating of risk registers

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | MAP-1: Context and purpose | Document AI system context, intended purpose, and deployment settings for risk assessment |
| EU AI Act | Article 9: Risk Management System | Establish continuous, documented risk management process throughout high-risk AI system lifecycle |
| ISO/IEC 42001 | Clause 6.1: Risk and opportunity management | Identify AI-related risks and opportunities, plan actions to address them |

If you can’t articulate potential risks upfront (for example, how an AI model might inadvertently discriminate in a lending application), you could face lawsuits or fines after the damage is done.

Key Activities:

- Implement comprehensive AI Risk Management Framework aligned with NIST AI RMF

- Create detailed Regulatory Compliance Matrix for key laws/regulations

- Establish initial AI Risk Register to document identified risks

- Schedule preliminary risk assessments for high-risk AI systems

- Integrate security and privacy risk assessments

- Draft data-handling and privacy guidelines

- Document risk-assessment responsibilities and roles

- Establish risk tolerance levels and acceptance criteria
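An initial AI Risk Register with a tolerance threshold can start as a very small data structure. The sketch below assumes a simple likelihood-times-impact score on 1-5 scales and a committee-defined tolerance of 12; the scoring scheme, the threshold, and the example risks are all illustrative assumptions rather than anything prescribed by NIST AI RMF or the EU AI Act.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    risk_id: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe); scale is an assumption

    @property
    def score(self) -> int:
        """Simple likelihood x impact score."""
        return self.likelihood * self.impact


RISK_TOLERANCE = 12  # hypothetical threshold set by the committee


def needs_escalation(register, tolerance=RISK_TOLERANCE):
    """Return risks whose score exceeds the tolerance, highest score first."""
    over = [risk for risk in register if risk.score > tolerance]
    return sorted(over, key=lambda risk: risk.score, reverse=True)


register = [
    Risk("R-001", "Lending model may discriminate against a protected group", 3, 5),
    Risk("R-002", "Chatbot logs retain personal data longer than policy allows", 2, 4),
    Risk("R-003", "Unvetted third-party model used in production", 4, 4),
]

for risk in needs_escalation(register):
    print(risk.risk_id, risk.score, risk.description)
```

Risks above the tolerance line are the ones the committee would formally accept, mitigate, or escalate; everything else stays in the register for periodic review.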

Stage 5: Define Evaluation Criteria and Metrics for AI Systems and Governance

Primary Objectives: Establish quantifiable evaluation criteria and key performance indicators (KPIs) for both AI systems and governance processes. These metrics help assess performance, reliability, security, ethical compliance, and overall impact while measuring governance framework effectiveness.

Critical Success Factors:

- Quantifiable metrics for both technical performance and governance effectiveness

- Integration with existing business intelligence and reporting systems

- Regular monitoring and reporting mechanisms

- Clear accountability for metrics tracking and improvement

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | MEASURE-2: Testing and evaluation | Establish systematic approaches for measuring AI system performance and trustworthy characteristics |
| EU AI Act | Article 15: Accuracy and robustness | Ensure systems achieve appropriate levels of accuracy, robustness, and cybersecurity throughout their lifecycle |
| ISO/IEC 42001 | Clause 9.1: Monitoring and measurement | Monitor, measure, analyze and evaluate AI system performance and AIMS effectiveness |

Tracking metrics like model accuracy, false positives/negatives, or fairness scores can detect performance dips early, preventing costly rollbacks or reputational damage.

Similarly, monitor the governance process itself by measuring how long it takes to review compliance for new AI projects. This keeps your team from getting bogged down in red tape and helps maintain balance between rapid innovation and proper oversight.
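To make the model-level metrics mentioned above concrete, here is a minimal sketch that computes accuracy, false positive rate, false negative rate, and one possible fairness score for a binary classifier. The fairness measure used here, demographic parity difference (the gap in positive-prediction rates between groups), is one option among many and is an assumption of this example; the data and function names are hypothetical.

```python
def model_metrics(y_true, y_pred):
    """Return accuracy, false positive rate, and false negative rate."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


def demographic_parity_difference(y_pred, groups):
    """Return the largest gap in positive-prediction rate between groups."""
    rates = {}
    for group in set(groups):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates[group] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


# Toy data: 1 = approved, 0 = declined; groups are illustrative labels.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = model_metrics(y_true, y_pred)
parity_gap = demographic_parity_difference(y_pred, groups)
print(metrics)
print("demographic parity difference:", parity_gap)
```

Tracking these values per release, and alerting when they cross thresholds the committee has agreed on, is one way to catch the performance dips described above.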

Key Activities:

- Establish clear, quantifiable governance performance KPIs

- Set specific AI system performance metrics (accuracy, fairness, bias mitigation)

- Implement centralized metrics dashboard

- Develop routine reporting mechanisms for governance effectiveness

- Provide training on performance metrics usage

- Define responsibilities for metrics tracking and reporting

- Plan periodic metric-review sessions to assess effectiveness

- Define success criteria for governance program at key milestones

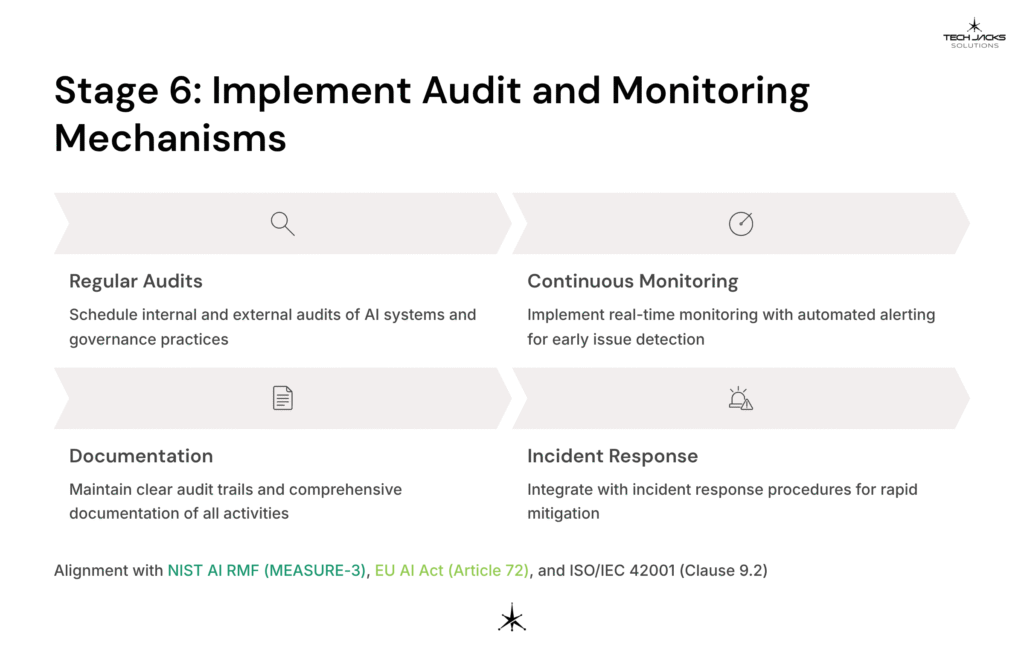

Stage 6: Implement Audit and Monitoring Mechanisms

Primary Objectives: Establish processes for regular audits of AI systems and governance practices, ensuring ongoing oversight and compliance. This includes continuous monitoring and reporting mechanisms for early detection of issues and proactive management aligned with EU AI Act Article 72 post-market monitoring requirements.

Critical Success Factors:

- Regular internal and external audit schedules

- Continuous monitoring systems with automated alerting

- Clear audit trails and documentation

- Integration with incident response procedures

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | MEASURE-3: Monitoring deployed AI systems | Track existing, unanticipated, and emergent AI risks over time through systematic monitoring |
| EU AI Act | Article 72: Post-market monitoring | Actively and systematically collect and analyze data on high-risk AI system performance in real-world deployment |
| ISO/IEC 42001 | Clause 9.2: Internal audit | Conduct regular internal audits to ensure AIMS conforms to requirements and is effectively implemented |

Without scheduled audits and transparent logging, unapproved changes or security gaps can remain hidden, making it harder to detect and address issues before they escalate. Consistent monitoring builds trust with stakeholders: when regulators or senior leadership see robust evidence of oversight, they’re more likely to support new AI initiatives.
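One way to make logging transparent enough that unapproved changes cannot stay hidden is a hash-chained audit trail, where each entry records the hash of the entry before it. The sketch below is a simplified illustration of that idea using Python's standard `hashlib` and `json` modules; the entry fields and system names are hypothetical, and a production system would also need secure storage and access control.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> None:
    """Append a payload to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {
            "payload": payload,
            "prev_hash": prev_hash,
            "hash": _entry_hash(prev_hash, payload),
        }
    )


def verify_log(log: list) -> bool:
    """Return True only if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"system": "credit-scoring-v2", "event": "model deployed"})
append_entry(audit_log, {"system": "credit-scoring-v2", "event": "threshold changed"})
intact_before = verify_log(audit_log)

# Simulate an unapproved edit to an earlier record.
audit_log[0]["payload"]["event"] = "nothing happened"
intact_after = verify_log(audit_log)
print(intact_before, intact_after)
```

Because each hash depends on everything before it, editing an old record invalidates the chain, which gives auditors a quick integrity check before they review the content itself.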

Key Activities:

- Develop structured audit plans aligned with GAO & CSA guidelines

- Launch continuous monitoring tools with automated reporting

- Publish clear auditing procedures (internal/external audits, checks)

- Provide accessible audit reports highlighting key findings and action items

- Schedule regular audit feedback and improvement sessions

- Create accountability for responding to audit recommendations

- Establish tracking and escalation mechanisms for unresolved audit items

- Integrate monitoring data into continuous improvement processes

Stage 7: Address Specific Governance Elements

Primary Objectives: Focus on specific governance elements including shadow AI prevention, data governance, model governance, access control, output evaluation, third-party risk management, employee GenAI usage, and incident response procedures.

Critical Success Factors:

- Comprehensive coverage of AI governance domains

- Integration with existing enterprise governance frameworks

- Clear policies and procedures for each governance element

- Regular review and updating of governance controls

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | MANAGE-1: Response planning | Develop and implement risk response strategies across different AI governance domains |
| EU AI Act | Article 14: Human oversight | Design systems for effective human oversight during use, including stop functions and interventions |
| ISO/IEC 42001 | Clause 8: Operation | Implement operational planning and control processes for AI system lifecycle management |

If shadow AI systems or untracked data sources crop up, your organization risks compliance breaches, ethical lapses, and wasted investment in duplicate or unauthorized projects. Clear guardrails around data usage, model oversight, and third-party risks help maintain consistent standards across teams. They ensure no one goes rogue and jeopardizes the entire AI program.

Key Activities:

- Regularly update and expand AI Use Case Inventory

- Maintain central, accessible Runbook/Playbook Library

- Document Incident Response Protocols specific to AI issues

- Implement Shadow AI detection and governance procedures

- Develop guidelines for employee usage of Generative AI tools

- Document policies for third-party AI vendor assessments

- Provide ongoing communication and training on specific governance areas

- Establish accountability for managing specific governance elements

Specific Governance Elements:

- Shadow AI Prevention: Implementing measures to identify unauthorized AI systems

- Data Governance: Focusing on data quality, security, privacy, and ethical use

- Model Governance: Policies for development, deployment, monitoring, and retirement

- Access Control: Role-based access to AI systems and data

- Output Evaluation: Mechanisms to assess and control AI system outputs

- Third-Party Management: Processes for assessing AI vendors and dependencies

- Transparency and Explainability: Ensuring appropriate transparency levels
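Shadow AI prevention, the first element in the list above, often starts with a simple comparison between the approved AI Use Case Inventory and the tools actually observed in use. The sketch below assumes usage records have already been collected from some source such as expense reports or network logs; the record format, tool names, and function name are hypothetical.

```python
# Hypothetical approved inventory and observed usage records.
approved_inventory = {"internal-chat-assistant", "fraud-scoring-model"}

observed_usage = [
    {"tool": "internal-chat-assistant", "team": "support"},
    {"tool": "public-llm-browser-plugin", "team": "marketing"},
    {"tool": "fraud-scoring-model", "team": "risk"},
    {"tool": "public-llm-browser-plugin", "team": "sales"},
]


def find_shadow_ai(observed, approved):
    """Map each unapproved tool to the sorted list of teams seen using it."""
    findings = {}
    for record in observed:
        if record["tool"] not in approved:
            findings.setdefault(record["tool"], set()).add(record["team"])
    return {tool: sorted(teams) for tool, teams in findings.items()}


shadow_ai = find_shadow_ai(observed_usage, approved_inventory)
for tool, teams in shadow_ai.items():
    print(f"Unapproved tool '{tool}' in use by: {', '.join(teams)}")
```

Each finding can then go one of two ways: the tool is reviewed and added to the inventory, or its use is stopped under the acceptable usage policy.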

Stage 8: Continuous Improvement and Adaptation

Primary Objectives: AI technology and associated risks evolve constantly. The committee must prioritize continuous monitoring, review, and updating of governance frameworks, policies, and procedures. This includes staying informed about emerging regulations, best practices, and security threats.

Critical Success Factors:

- Regular governance framework reviews and updates

- Integration of emerging regulatory requirements

- Stakeholder feedback mechanisms

- Lessons learned documentation and integration

Framework Alignment:

| Framework | Mapping | Specific Requirements |
| --- | --- | --- |
| NIST AI RMF | All functions: Continuous improvement | Regular review and update of risk management approaches based on new information and changing contexts |
| EU AI Act | Article 17: QMS continuous improvement | Regular review of QMS effectiveness and implementation of improvements to ensure continued compliance |
| ISO/IEC 42001 | Clause 10: Improvement | Continuously improve AIMS suitability, adequacy, and effectiveness through corrective actions |

Rapid AI advancements mean new vulnerabilities can emerge overnight. If you’re not iterating on policies and best practices, you’ll always be playing catch-up.

Key Activities:

- Set up robust, ongoing stakeholder feedback channels

- Conduct regular, formal governance review meetings (quarterly recommended)

- Perform structured annual AI Governance Maturity Assessments

- Regularly revise governance framework to align with emerging standards

- Document lessons learned and integrate into improvement cycles

- Define governance review roles and escalation paths

- Develop training programs for new regulations or best practices

- Publish periodic governance progress reports for transparency

Board-Level Oversight and Authority Requirements

Modern AI governance requires board-level oversight with clear reporting structures. Your committee must have:

- Executive Sponsorship: Direct reporting to C-suite (CEO, CTO, or CAIO)

- Decision Authority: Power to approve, modify, or terminate AI projects

- Budget Control: Allocated resources for governance activities

- Board Reporting: Regular updates to board of directors on governance effectiveness

- Legal Standing: Formal charter establishing committee authority and responsibilities

Without this authority structure, your governance becomes advisory rather than operational, significantly reducing effectiveness in regulatory compliance and risk mitigation.

Regulatory Penalty Awareness

Understanding the financial stakes helps justify governance investment:

- EU AI Act: Up to 7% of global annual turnover for serious violations

- GDPR: Up to 4% of annual global turnover for data protection violations

- Sector-Specific: Additional penalties under HIPAA, SOX, financial services regulations

- Reputational Costs: Often exceed direct regulatory penalties
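To translate the percentages above into a figure for a business case, the sketch below estimates the maximum exposure for a given turnover. The percentages come from the list above. The fixed amounts (EUR 35 million and EUR 20 million) and the "whichever is higher" rule are not stated in this article; they are included as assumptions about the top penalty tier of each regulation and should be confirmed against the regulatory texts. Actual fines depend on the violation and the regulator.

```python
# Percentages come from the list above. The fixed EUR amounts and the
# "whichever is higher" rule are assumptions to verify against the regulations.
PENALTY_CEILINGS = {
    "EU AI Act (highest tier)": {"percent": 7, "fixed_eur": 35_000_000},
    "GDPR (highest tier)": {"percent": 4, "fixed_eur": 20_000_000},
}


def max_exposure(turnover_eur: int, percent: int, fixed_eur: int) -> int:
    """Return the larger of the fixed amount and the turnover percentage."""
    return max(fixed_eur, turnover_eur * percent // 100)


def exposure_table(turnover_eur: int) -> dict:
    """Return the estimated penalty ceiling per regulation for one turnover."""
    return {
        name: max_exposure(turnover_eur, **rule)
        for name, rule in PENALTY_CEILINGS.items()
    }


large_company = exposure_table(2_000_000_000)  # EUR 2 billion turnover
small_company = exposure_table(100_000_000)    # EUR 100 million turnover

for name, amount in large_company.items():
    print(f"{name}: up to EUR {amount:,}")
```

For smaller organizations the fixed amount dominates under this assumption, which is why the percentage alone can understate exposure.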

Developing vs. Consuming AI

While these governance stages apply broadly, implementation specifics vary:

Organizations Developing AI Models:

- Deeper controls around data pipelines and model security

- Specialized testing for bias and fairness across training datasets

- Enhanced model lifecycle management and version control

- More extensive technical documentation requirements

Organizations Consuming AI Services:

- Focus on vendor due diligence and contractual compliance

- Data handling standards for third-party AI tools

- Integration testing and performance monitoring

- Clear terms of service and liability allocation

With these measures, your AI Governance Committee isn’t just reacting to challenges; it’s actively shaping the responsible, ethical, and compliant AI landscape your organization needs for sustainable growth.

Appendix A: EU AI Act Compliance Checklist for High-Risk Systems

Article 9: Risk Management System Requirements

- [ ] Establish continuous, iterative risk management process

- [ ] Document risk identification and analysis procedures

- [ ] Implement risk evaluation and mitigation measures

- [ ] Maintain risk management documentation throughout system lifecycle

- [ ] Regular testing to identify most appropriate risk mitigation measures

- [ ] Formal acceptance of residual risk levels

Article 17: Quality Management System Requirements

- [ ] Document QMS strategy for regulatory compliance

- [ ] Establish procedures for design, development, and quality control

- [ ] Implement examination, testing, and validation procedures

- [ ] Create data management systems and procedures

- [ ] Integrate Article 9 Risk Management System

- [ ] Establish post-market monitoring system (Article 72)

- [ ] Implement serious incident reporting procedures (Article 73)

Article 72: Post-Market Monitoring Requirements

- [ ] Establish systematic data collection on AI system performance

- [ ] Implement analysis procedures for real-world system behavior

- [ ] Create monitoring plan as part of technical documentation

- [ ] Regular review of monitoring findings

- [ ] Integration with risk management system updates

Article 73: Serious Incident Reporting Requirements

- [ ] Establish incident identification and classification procedures

- [ ] Create reporting protocols to relevant authorities

- [ ] Implement strict timeline adherence (as short as 2 days for critical infrastructure)

- [ ] Document incident investigation procedures

- [ ] Establish corrective action and follow-up processes
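Timeline adherence is easier to enforce when the reporting deadline is calculated as soon as an incident is classified. The sketch below is illustrative only: the 2-day limit for critical infrastructure comes from the checklist above, while the 10-day and 15-day limits and the category names are assumptions based on a reading of Article 73 that should be verified against the regulation and confirmed with counsel.

```python
from datetime import date, timedelta

# Outer reporting limits in days after the organization becomes aware.
REPORTING_LIMIT_DAYS = {
    "critical_infrastructure": 2,   # matches the 2-day figure in the checklist
    "death": 10,                    # assumption: verify against Article 73
    "other_serious_incident": 15,   # assumption: verify against Article 73
}


def reporting_deadline(aware_on: date, category: str) -> date:
    """Return the latest reporting date for an incident category."""
    if category not in REPORTING_LIMIT_DAYS:
        raise ValueError(f"Unknown incident category: {category}")
    return aware_on + timedelta(days=REPORTING_LIMIT_DAYS[category])


aware_on = date(2025, 9, 10)
for category in REPORTING_LIMIT_DAYS:
    deadline = reporting_deadline(aware_on, category)
    print(f"{category}: report no later than {deadline.isoformat()}")
```

These are outer limits; the incident response protocol should still aim to report as soon as the facts are established.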

Please share and/or contact us for consultation to help educate, train, and support your business with comprehensive AI governance implementation.


Resources and Citations

Regulatory and Government Sources

- European Union AI Act Official Documentation: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- EU AI Act Article 9 (Risk Management System): https://artificialintelligenceact.eu/article/9/

- EU AI Act Article 13 (Transparency Requirements): https://artificialintelligenceact.eu/article/13/

- EU AI Act Article 17 (Quality Management System): https://artificialintelligenceact.eu/article/17/

- EU AI Act Article 72 (Post-Market Monitoring): https://artificialintelligenceact.eu/article/72/

- EU AI Act Article 73 (Serious Incident Reporting): https://artificialintelligenceact.eu/article/73/

- NIST AI Risk Management Framework: https://www.nist.gov/itl/ai-risk-management-framework

- NIST AI RMF 1.0 Official Document: https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf

- GAO AI Accountability Framework: https://www.gao.gov/products/gao-21-519sp

- GDPR Penalty Information: https://gdpr.eu/fines/

International Standards and Frameworks

- ISO/IEC 42001:2023 AI Management Systems: https://www.iso.org/standard/81230.html

- OECD AI Principles: https://oecd.ai/en/ai-principles

Industry and Research Sources

- Harvard Law Corporate Governance – AI Board Oversight: https://corpgov.law.harvard.edu/2025/04/02/ai-in-focus-in-2025-boards-and-shareholders-set-their-sights-on-ai/

- Forrester AI Governance RACI Matrix Report: https://www.forrester.com/report/the-ai-governance-raci-matrix/RES181597

- Cloud Security Alliance: https://cloudsecurityalliance.org/

- CSA AI Safety Initiative Report: https://cloudsecurityalliance.org/artifacts/ai-safety-initiative-report

Related AI Governance Resources

- AI Lifecycle Framework Guide: https://techjacksolutions.com/the-7-stage-ai-lifecycle-framework/

- AI Acceptable Use Policy Template: https://techjacksolutions.com/ai-acceptable-use-policy/

- Understanding AI Bias Prevention: https://techjacksolutions.com/understanding-ai-bias/

- AI Use Case Inventory Management: https://techjacksolutions.com/ai-use-case-inventories/

- AI Use Case Tracker Implementation: https://techjacksolutions.com/why-you-need-an-ai-use-case-tracker/

- AI Governance Charter Development: https://techjacksolutions.com/5-sturdy-pillars-ai-governance-charter/

- AI Incident Response Planning: https://techjacksolutions.com/guide-to-effective-incident-response/

- Employee AI Use Case Policies: https://techjacksolutions.com/ai-use-case-policy/

- Identity and Access Management for AI: https://techjacksolutions.com/what-is-identity-and-access-management/

- Building Explainable AI Systems: https://techjacksolutions.com/guide-to-building-explainable-ai/

Get started with your AI Governance Charter. Our article covers this in detail to help you get started. A free AI Governance Charter Community Edition is available.

| Stage | Key Activity |
| --- | --- |
| Establish the Mandate and Objectives | Define governance mandate, scope, and strategic objectives (Governance Charter). |
| | Secure formal endorsement from senior leadership and board members. |
| | Appoint a designated Executive Sponsor (CAIO, CTO, CDO). |
| | Create initial AI Use Case Inventory (foundation for governance). |
| | Conduct introductory training or communication sessions for key stakeholders. |
| | Schedule governance kickoff meeting to establish momentum. |
| | Confirm initial resource allocation and project management structure. |
| | Clearly communicate initial objectives across the organization. |
| Define the Committee’s Composition and Roles | Identify cross-functional representatives (IT, legal, compliance, ethics, business). |
| | Officially appoint governance committee members and document membership. |
| | Publish a detailed RACI Matrix to define responsibilities clearly. |
| | Define meeting cadence and formalize reporting structure. |
| | Confirm committee authority boundaries and escalation paths. |
| | Provide basic committee onboarding and governance orientation. |
| | Launch initial internal communication about committee structure. |
| | Establish mechanism for stakeholder input and engagement. |
| Develop a Responsible AI Framework and Guiding Principles | Publish foundational Responsible AI Policy Document (ethics, fairness, transparency). |
| | Draft and distribute AI Acceptable Usage Policy for immediate employee guidance. |
| | Develop standardized documentation (Model Cards, Datasheets) for AI models. |
| | Clearly define AI transparency, fairness, and explainability guidelines. |
| | Conduct employee and stakeholder training on Responsible AI principles. |
| | Develop simplified awareness resources (infographics, FAQs) for employees. |
| | Schedule regular stakeholder feedback sessions to refine policies. |
| | Confirm internal review and approval processes for AI-related documents. |
| Establish Risk Management and Compliance Processes | Implement comprehensive AI Risk Management Framework. |
| | Create detailed Regulatory Compliance Matrix aligned with key laws/regulations. |
| | Establish initial AI Risk Register to document identified risks. |
| | Schedule preliminary risk assessments for identified high-risk AI systems. |
| | Integrate NIST AI RMF & CSA guidance into risk and compliance checks. |
| | Identify preliminary security and privacy risks for existing AI projects. |
| | Draft preliminary data-handling and privacy guidelines. |
| | Clearly document risk-assessment responsibilities and roles. |
| Define Evaluation Criteria and Metrics for AI Systems and Governance | Establish clear, quantifiable governance performance KPIs. |
| | Set specific AI system performance metrics (accuracy, fairness, bias mitigation). |
| | Implement a user-friendly, centralized metrics dashboard. |
| | Develop routine reporting mechanisms for governance effectiveness. |
| | Provide simplified training on performance metrics usage. |
| | Define clear responsibilities for metrics tracking and reporting. |
| | Plan periodic metric-review sessions to assess effectiveness. |
| | Define success criteria for governance program at key milestones. |
| Implement Audit and Monitoring Mechanisms | Develop structured audit plans aligned with GAO & CSA guidelines. |
| | Launch simple yet effective continuous monitoring tools. |
| | Publish clear auditing procedures (internal/external audits, checks). |
| | Provide accessible audit reports highlighting key findings and action items. |
| | Schedule regular audit feedback and improvement sessions. |
| | Create clear accountability for responding to audit recommendations. |
| | Establish tracking and escalation mechanisms for unresolved audit items. |
| | Integrate monitoring data into continuous improvement processes. |
| Address Specific Governance Elements | Regularly update and expand AI Use Case Inventory. |
| | Maintain a central, easily accessible Runbook/Playbook Library. |
| | Document clear Incident Response Protocols specific to AI issues. |
| | Implement Shadow AI detection and governance procedures. |
| | Develop guidelines for employee usage of Generative AI tools. |
| | Document clear policies for third-party AI vendor assessments. |
| | Provide ongoing communication and training on specific governance areas. |
| | Establish clear accountability for managing specific governance elements. |
| Continuous Improvement and Adaptation | Set up robust, ongoing stakeholder feedback channels. |
| | Conduct regular, formal governance review meetings (quarterly recommended). |
| | Perform structured annual AI Governance Maturity Assessments. |
| | Regularly revise governance framework to align with emerging standards (OECD, NIST). |
| | Document lessons learned and integrate them into improvement cycles. |
| | Clearly define governance review roles and escalation paths. |
| | Develop training programs to adapt to new regulations or best practices. |
| | Publish periodic governance progress reports to maintain transparency. |