Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: 08/21/2025

Pressed for Time?

Review or Download our 2-3 min Quick Slides or the 5-7 min Article Insights to gain knowledge with the time you have!

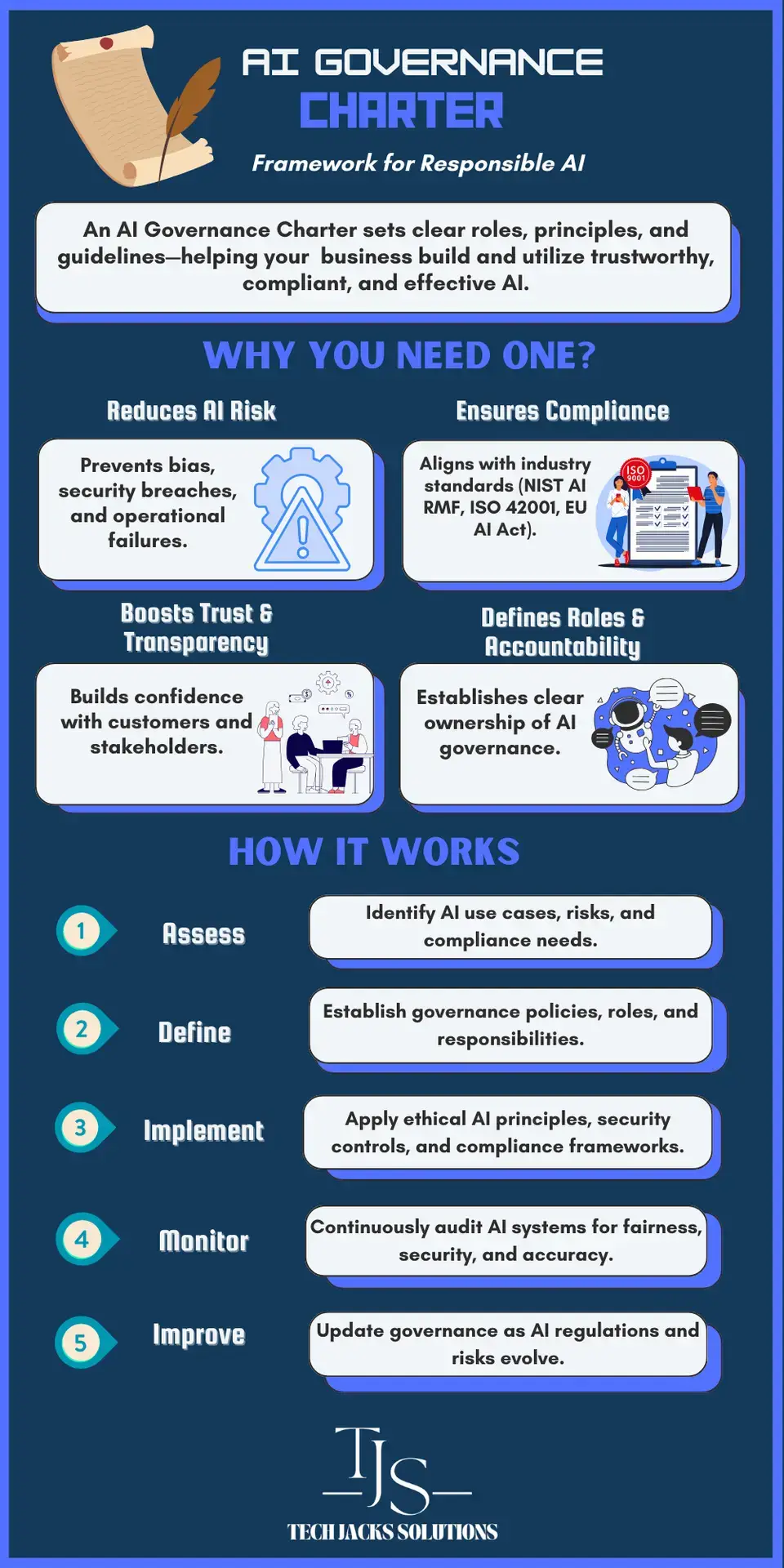

AI Governance Charter: Definition, Benefits

Lawyers file lawsuits. Regulators write penalties. Boards demand accountability.

Welcome to 2025, where AI governance isn’t optional anymore. The EU AI Act mandates robust governance for high-risk systems, and organizations worldwide are scrambling to build frameworks that actually work. Many fall short because they treat governance as a compliance checkbox rather than what it really is: the foundational constitution for how your organization manages artificial intelligence.

An AI Governance Charter is the foundational, constitutional document for an organization’s artificial intelligence program. It is not merely a policy but a strategic instrument for managing enterprise risk, ensuring regulatory compliance, and building stakeholder trust. Think of it as your organization’s AI constitution — the document that establishes who has power, how decisions get made, and what principles guide every AI initiative from concept to retirement.

This isn’t about creating another policy document that sits unopened for years in a rarely used SharePoint repository. It’s about building an operational framework that transforms abstract ethical principles into concrete, accountable actions while navigating a complex regulatory and standards landscape that now includes the European Union’s AI Act, NIST’s AI Risk Management Framework, and ISO/IEC 42001 certification requirements.

Visit our Hubs for more information on the EU AI Act, NIST AI RMF, and ISO/IEC 42001.

What is an AI Governance Charter?

Let’s go into the details. Here’s what you’re actually building.

An AI Governance Charter is a formal, foundational document that defines the mission, scope, authority, and accountability structures for managing artificial intelligence within an organization. It serves as the constitution for the AI governance program, codifying the organization’s commitment to the responsible, ethical, and compliant use of AI technologies.

The Charter isn’t just another policy. It establishes the governing bodies and their decision-making rights, which makes it the source of authority for all subsequent AI-related policies and procedures.

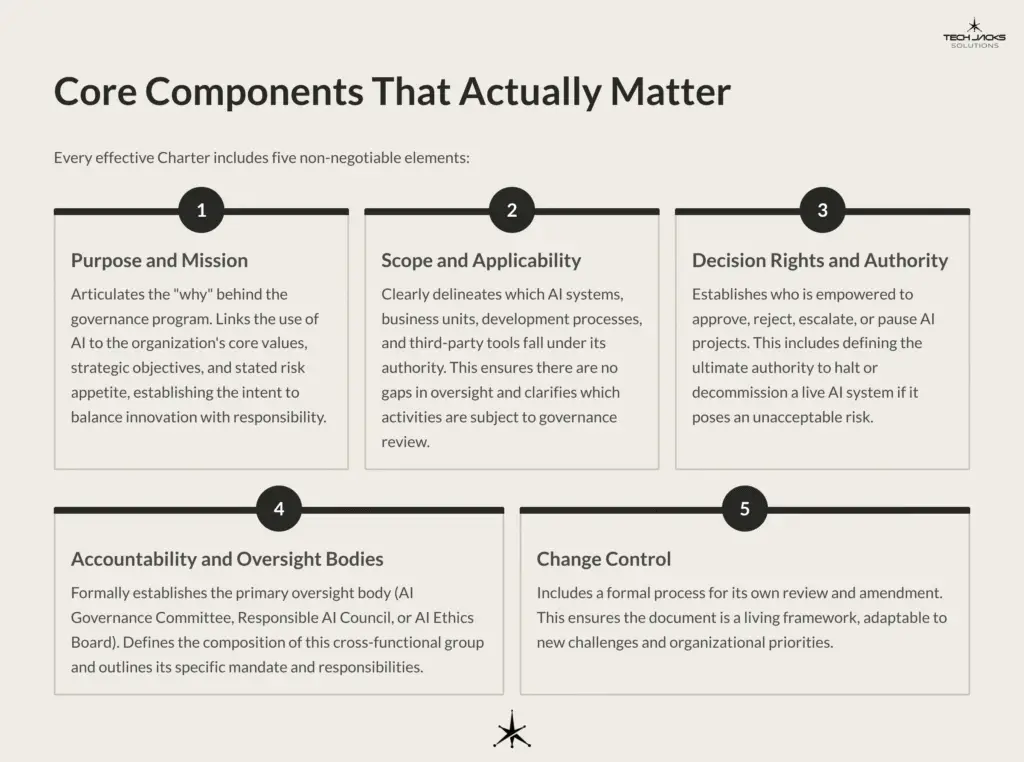

Core Components That Actually Matter

Every effective Charter includes five non-negotiable elements:

Purpose and Mission. This section articulates the “why” behind the governance program. It links the use of AI to the organization’s core values, strategic objectives, and stated risk appetite, establishing the intent to balance innovation with responsibility. You’re not building governance for governance’s sake. You’re creating a framework that enables safe innovation.

Scope and Applicability. The Charter must clearly delineate which AI systems, business units, development processes, and third-party tools fall under its authority. This ensures there are no gaps in oversight and clarifies which activities are subject to governance review. No ambiguity allowed. If your team builds it, buys it, or uses it, the Charter covers it.

Decision Rights and Authority. This is where many organizations fall short. A critical function of the Charter is to establish who is empowered to approve, reject, escalate, or pause AI projects. This includes defining the ultimate authority to halt or decommission a live AI system if it poses an unacceptable risk. Someone needs the power to pull the plug. Define who that is.
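Decision rights are easier to enforce when they are written down in a form that both people and tooling can check. The sketch below is a minimal, hypothetical illustration: the role names (`ai_governance_committee`, `chief_risk_officer`, `system_owner`) and the specific assignments are assumptions made for the example, not roles any regulation prescribes.

```python
from __future__ import annotations

from enum import Enum


class Action(Enum):
    """Governance decisions the Charter assigns to specific roles."""

    APPROVE = "approve"
    REJECT = "reject"
    ESCALATE = "escalate"
    PAUSE = "pause"
    DECOMMISSION = "decommission"


# Hypothetical decision-rights table: the roles allowed to take each action.
# Replace these role names with the bodies your own Charter establishes.
DECISION_RIGHTS: dict[Action, frozenset[str]] = {
    Action.APPROVE: frozenset({"ai_governance_committee"}),
    Action.REJECT: frozenset({"ai_governance_committee"}),
    Action.ESCALATE: frozenset({"ai_governance_committee", "system_owner"}),
    # Pausing a live system must be fast, so several roles hold this right.
    Action.PAUSE: frozenset({"ai_governance_committee", "chief_risk_officer", "system_owner"}),
    # Permanently decommissioning ("pulling the plug") is reserved for one authority.
    Action.DECOMMISSION: frozenset({"chief_risk_officer"}),
}


def is_authorized(role: str, action: Action) -> bool:
    """Return True if the role holds the decision right for the action."""
    return role in DECISION_RIGHTS.get(action, frozenset())


def authorize(role: str, action: Action) -> None:
    """Raise PermissionError when a role attempts an action it does not own."""
    if not is_authorized(role, action):
        raise PermissionError(f"{role!r} may not {action.value} this AI system")
```

In this sketch, `authorize("system_owner", Action.PAUSE)` succeeds, while `authorize("system_owner", Action.DECOMMISSION)` raises `PermissionError`. Spreading the pause right widely while reserving decommissioning for a single authority is one possible design choice; your Charter may assign these rights differently.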

Accountability and Oversight Bodies. The Charter formally establishes the primary oversight body, often named the AI Governance Committee, Responsible AI Council, or AI Ethics Board. It defines the composition of this cross-functional group (e.g., representatives from Legal, Compliance, IT, Data Science, and business units) and outlines its specific mandate and responsibilities.

Change Control. To remain relevant amid rapid technological and regulatory evolution, the Charter must include a formal process for its own review and amendment. This ensures the document is a living framework, adaptable to new challenges and organizational priorities.

Why a Charter is Non-Negotiable

The mandate comes from three converging forces: legal requirements, industry standards, and business risk. Let’s examine each.

EU AI Act Compliance

For any organization developing, deploying, or using “high-risk” AI systems within the European Union, a structured governance framework is a legal obligation under Regulation (EU) 2024/1689 (the EU AI Act). High-risk systems are defined under Article 6 and include those used in critical infrastructure, employment, education, and access to essential services, as detailed in Annex III.

The Charter directly operationalizes four critical EU AI Act requirements:

Article 9 Risk Management System. This article mandates the establishment of a “risk management system” that functions as a “continuous iterative process… throughout the entire lifecycle of a high-risk AI system”. This system must identify, analyze, evaluate, and mitigate foreseeable risks to health, safety, or fundamental rights. Your Charter mandates the specific risk assessment processes and assigns accountability for their execution.

Article 17 Quality Management System. The Act requires providers of high-risk systems to implement a Quality Management System (QMS) that is “documented in a systematic and orderly manner in the form of written policies, procedures and instructions”. The Charter serves as the top-level policy document of the QMS, authorizing and directing the creation of these subordinate procedures.

Article 72 Post-Market Monitoring. Providers must establish and maintain a post-market monitoring system to “actively and systematically collect, document and analyse” data regarding the performance of high-risk AI systems once they are on the market. Your Charter embeds this requirement in the organization’s operations by assigning responsibility for collecting and reviewing that data.

Article 73 Serious Incident Reporting. The Act mandates a formal process for providers to report any “serious incident” involving a high-risk AI system to the market surveillance authorities in the relevant Member States. The Charter establishes the internal incident management framework and assigns clear responsibility for this external reporting obligation, defining timelines and escalation paths.

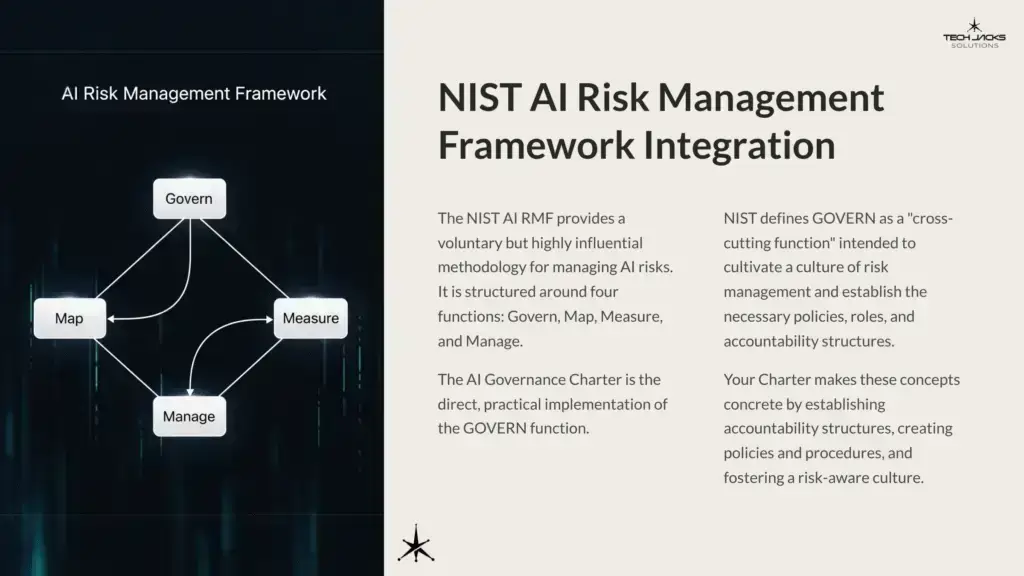

NIST AI Risk Management Framework Integration

The NIST AI RMF provides a voluntary but highly influential methodology for managing AI risks. It is structured around four functions: Govern, Map, Measure, and Manage. The AI Governance Charter is a direct, practical implementation of the GOVERN function.

NIST defines GOVERN as a “cross-cutting function” intended to cultivate a culture of risk management and establish the necessary policies, roles, and accountability structures. Your Charter makes these concepts concrete in three ways: it establishes accountability structures through a formal committee and RACI matrix; it creates policies and procedures for mapping, measuring, and managing AI risks; and it fosters a risk-aware culture by providing a clear leadership mandate and communication channels.

ISO/IEC 42001 Certification Path

ISO/IEC 42001 is the first international, certifiable standard for an AI Management System (AIMS). It provides a formal structure for organizations to “establish, implement, maintain, and continually improve” their AI governance. The Charter serves as the foundational policy document required by the standard.

The Charter directly addresses two key ISO clauses:

Clause 5 Leadership. This clause requires top management to demonstrate leadership and commitment, establish an AI policy, and ensure roles and responsibilities are assigned. The Charter, endorsed by executive leadership, is the primary evidence of meeting these requirements.

Clause 6 Planning. This clause requires the organization to plan actions to address risks and opportunities and to establish AI objectives. The Charter sets the strategic direction and mandates the risk management processes necessary to fulfill this clause.

Framework Alignment: Mapping Your Charter to Requirements

Your Charter doesn’t exist in isolation. It must explicitly align with legal and operational frameworks. Here are the direct mappings that demonstrate compliance:

EU AI Act Alignment

| Charter Component | EU AI Act Article | Implementation |
|---|---|---|
| Risk Management Policy | Article 9 (Risk management system) | The AIIA process directly implements the requirement for a “continuous iterative process” of risk identification, analysis, evaluation, and mitigation for high-risk systems throughout their lifecycle |
| Data Governance Policy | Article 10 (Data and data governance) | The policy mandates practices to ensure training, validation, and testing datasets are relevant, sufficiently representative, and, to the best extent possible, free of errors and complete, and that possible biases are examined and mitigated |
| Documentation & Records Policy | Articles 11 & 12 (Technical documentation & Record-keeping) | The AI registry and model cards serve as the core of the technical documentation, providing necessary information for authorities to assess compliance |
| Lifecycle Management Policy | Article 17 (Quality management system) | The Charter mandates a QMS with written policies and procedures for design, development, testing, and modification management, as required by the Act |

NIST AI RMF Alignment

| Charter Component | NIST Function | Category | Implementation |
|---|---|---|---|
| Roles & Bodies (AI Governance Committee) | GOVERN | GOVERN 2: Accountability structures are in place | The Charter formally establishes the AI Governance Committee, defines its cross-functional membership, and assigns it ultimate accountability for overseeing AI risk³¹ |
| Principles & Commitments | GOVERN | GOVERN 1: Policies, processes, procedures, and practices… are in place | The Charter’s principles section codifies the organization’s commitment to trustworthy AI characteristics (e.g., fairness, transparency), forming the basis for all AI-related policies³² |
| AI Impact Assessment (AIIA) Process | MAP | MAP 4: Risks and benefits are mapped for all components of the AI system | The AIIA is the structured process for identifying and documenting specific risks (e.g., bias, privacy, safety) and mapping them to potentially impacted stakeholders³³ |
| Risk Treatment & Corrective Action Plans | MANAGE | MANAGE 1: AI risks… are prioritized, responded to, and managed | Based on the AIIA’s findings, the governance process requires teams to create and implement risk treatment plans to mitigate identified risks before deployment³² |

ISO/IEC 42001 Alignment

| Charter Component | ISO Clause | Evidence of Conformance |
|---|---|---|
| Purpose, Scope, Principles | 5.2 (Policy) | The AI Governance Charter document itself serves as the top-level, leadership-endorsed AI policy required by the standard⁴⁰ |
| Roles & Bodies (RACI Matrix) | 5.3 (Organizational roles, responsibilities and authorities) | The RACI matrix, included as an appendix to the Charter, explicitly defines roles and responsibilities for the AI Management System (AIMS)⁵⁸ |
| Risk Management Policy & AIIA Process | 6.1 (Actions to address risks and opportunities); 8.2 (AI risk assessment) | The policy mandates the AI risk assessment process, and the completed AIIA documents provide evidence of risk identification, analysis, and treatment planning³⁹ |
| Training & Competency Section | 7.2 (Competence); 7.3 (Awareness) | The Charter mandates the development of an AI training and awareness program for all relevant personnel, with records of completion serving as evidence³⁹ |

Foundational Prerequisites for Success

Don’t start writing before you have these elements in place. Attempting to create a Charter without this groundwork often results in a document that is disconnected from business needs and lacks the power to enforce its own mandates.

Secure Executive Mandate. AI governance cannot be a grassroots effort. It requires an explicit, visible, and unwavering mandate from the highest levels of the organization, such as the CEO and the Board of Directors. This executive sponsorship is critical for granting the governance program the authority to make binding decisions, securing necessary resources, and signaling to the entire organization that responsible AI is a top-level priority.

Create an AI System Inventory. An organization cannot govern what it cannot see. The Charter must mandate the creation and continuous maintenance of a centralized inventory of all AI systems in use, in development, or procured from third parties. This registry is the linchpin of governance, serving as the basis for risk assessment, monitoring, and regulatory reporting.
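A minimal sketch of what an inventory entry might capture, assuming a simple in-memory registry. The field names, the `Source` and `Status` categories, and the `AIInventory` class are hypothetical; in practice the registry usually lives in a spreadsheet, database, or GRC tool, and it should cover every system the organization builds, buys, or uses.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Source(Enum):
    BUILT = "built in-house"
    PROCURED = "procured from a third party"


class Status(Enum):
    IN_DEVELOPMENT = "in development"
    IN_USE = "in use"
    RETIRED = "retired"


@dataclass
class AISystemRecord:
    system_id: str
    name: str
    owner: str                       # accountable person or team
    business_unit: str
    source: Source
    status: Status
    risk_tier: Optional[str] = None  # filled in during triage


class AIInventory:
    """Minimal in-memory registry of AI systems (hypothetical)."""

    def __init__(self) -> None:
        self._records: dict[str, AISystemRecord] = {}

    def register(self, record: AISystemRecord) -> None:
        """Add a system; IDs must be unique so each system is counted once."""
        if record.system_id in self._records:
            raise ValueError(f"duplicate system_id: {record.system_id}")
        self._records[record.system_id] = record

    def untriaged(self) -> list[AISystemRecord]:
        """Systems that still lack a risk tier and need assessment."""
        return [r for r in self._records.values() if r.risk_tier is None]

    def __len__(self) -> int:
        return len(self._records)
```

The `untriaged()` query is what feeds the risk assessment step: any registered system without a tier still needs review.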

Develop a Risk Taxonomy. Define and categorize the types of AI-related risks the organization will formally assess and manage. A typical taxonomy includes categories such as fairness and bias, security, privacy, operational reliability, legal and compliance, reputational harm, and societal impact.

Define Human Oversight Models. Articulate the organization’s default models for human interaction with AI systems. This should define different levels of oversight—such as human-in-the-loop (human must approve each action), human-on-the-loop (human can intervene), and human-out-of-the-loop (fully autonomous)—and establish clear criteria for when each model is required, particularly for high-risk applications.
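The criteria for choosing an oversight model can be written as explicit rules so that every system receives a documented default. The rules below are an assumed example policy, not requirements drawn from the EU AI Act or NIST; the low/medium/high tier names match the triage tiers used later in this article, and `required_oversight` is a hypothetical helper.

```python
from __future__ import annotations

from enum import Enum

RISK_TIERS = {"low", "medium", "high"}


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "a human must approve each action"
    HUMAN_ON_THE_LOOP = "a human monitors and can intervene"
    HUMAN_OUT_OF_THE_LOOP = "the system operates autonomously"


def required_oversight(risk_tier: str, affects_individuals: bool) -> Oversight:
    """Return the default oversight model under an assumed Charter policy.

    Assumed rules (illustrative only):
    - high-risk systems always require a human in the loop;
    - medium-risk systems, or low-risk systems whose outputs affect
      individuals, require at least a human on the loop;
    - all remaining low-risk systems may run autonomously.
    """
    tier = risk_tier.lower()
    if tier not in RISK_TIERS:
        raise ValueError(f"unknown risk tier: {risk_tier!r}")
    if tier == "high":
        return Oversight.HUMAN_IN_THE_LOOP
    if tier == "medium" or affects_individuals:
        return Oversight.HUMAN_ON_THE_LOOP
    return Oversight.HUMAN_OUT_OF_THE_LOOP
```

Validating the tier first means an unrecognized tier is rejected outright rather than silently falling through to a weaker oversight model.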

Step-by-Step Charter Creation

The process of creating the Charter is as important as the final document itself. A collaborative, inclusive process builds the necessary buy-in and ensures the resulting framework is practical and respected.

Phase 1: Discovery and Stakeholder Interviews (Days 1-30)

The process begins with information gathering, not writing. A core working group (e.g., from Legal, Risk, and IT) should conduct structured interviews with key stakeholders across the organization. This includes leaders from business units, data science and engineering teams, information security, privacy, and HR. The goal is to understand current AI usage (both official and “shadow IT”), identify perceived risks and opportunities, and align the Charter’s objectives with business needs.

Phase 2: Draft Structure and Content (Days 31-60)

Using the findings from the discovery phase and a comprehensive template, the working group drafts the initial version of the Charter. This involves translating stakeholder input and the requirements of frameworks like the EU AI Act and ISO 42001 into specific, actionable policies, principles, and process descriptions.

Phase 3: Multi-level Review and Executive Approval (Days 61-75)

The draft Charter is circulated for review and feedback among the broader group of stakeholders who were initially interviewed. This iterative review process ensures accuracy and practicality and builds consensus. Once stakeholder feedback is incorporated, the refined Charter is formally presented to the executive sponsor and any relevant senior committees (e.g., Enterprise Risk Committee, Board of Directors) for final approval and ratification.

Phase 4: Publication and Rollout (Days 76-90)

The officially approved Charter is published in a central, easily accessible location, such as the company intranet. Its publication should be accompanied by a formal communication plan to announce its launch and explain its importance to all employees.

Operationalizing Your Charter: The First 90 Days

An approved Charter is just the beginning. Success depends on operationalization.

Days 1-30: Foundation and Communication

The first month is focused on establishing the core governance infrastructure and ensuring enterprise-wide awareness.

Convene the AI Governance Committee. Formally establish the committee as defined in the Charter. Hold the inaugural meeting to ratify its operating procedures, meeting cadence, and immediate priorities⁶⁰.

Launch Enterprise Communication. Execute the communication plan developed during the Charter’s creation. This should include an announcement from the executive sponsor, all-hands meetings, and intranet articles explaining the Charter’s purpose and what it means for employees⁷³.

Initiate the AI System Inventory. Mandate and launch the effort to create the AI system inventory. Provide teams with the necessary tools (e.g., spreadsheets, dedicated software) and clear instructions for registering their AI systems⁶⁰.

Deploy Foundational Training. Roll out a mandatory, high-level awareness training module for all employees. This training should cover the organization’s AI principles, the purpose of the governance framework, and employees’ basic responsibilities (e.g., acceptable use of generative AI tools)³.

Days 31-60: Process Rollout and Integration

The second month focuses on implementing the core governance processes and beginning to assess the highest-risk systems.

Implement Intake and Risk Assessment Processes. Formally launch the AI Use Case Intake and AI Impact Assessment (AIIA) processes. Ensure the intake form and AIIA template are accessible and that relevant teams know how and when to use them⁷⁹.

Triage the AI Inventory. As inventory submissions come in, the AI Governance Committee should begin triaging systems based on their initial risk profiles. Identify the top 5-10 highest-risk systems to undergo the first formal AIIAs⁶⁵.

Develop Role-Based Training. Create and deliver more detailed, role-specific training for key stakeholders. Data scientists and engineers need training on secure coding for AI and bias testing. Product managers need training on completing AIIAs. Legal and compliance teams need training on the specifics of the EU AI Act and other relevant regulations⁷³.

Days 61-90: Monitoring and Initial Audits

The third month is about establishing monitoring capabilities and creating a feedback loop for improvement.

Establish Monitoring and KPIs. Implement the initial set of KPIs for the governance program. Create a dashboard for the AI Governance Committee to track progress on inventory completion, AIIA status, and time-to-approval metrics³.

Operationalize Incident Management. Finalize and communicate the AI incident reporting and management process. Conduct a tabletop exercise or simulation to test the process and ensure key personnel understand their roles in an AI-related incident³.

Conduct First Internal Review. At the end of the 90-day period, the AI Governance Committee should conduct its first internal review of the program’s implementation. This involves gathering feedback from teams who have used the new processes, identifying early pain points or gaps, and creating an action plan for refinement⁷⁸.

Core Governance Processes in Practice

The Charter mandates several processes that make up day-to-day governance:

AI Use Case Intake and Triage. All new AI projects must begin by submitting a standardized intake form. This form captures the business case, data requirements, and a preliminary risk profile. The AI Governance Committee or a designated subgroup reviews these submissions to assign an initial risk tier (e.g., low, medium, high), which determines the required level of subsequent review⁶⁰.
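A preliminary triage can be expressed as a transparent rule set over the intake form’s answers. This is a sketch under stated assumptions: the `IntakeForm` fields, the short list of high-risk areas (a simplified nod to Annex III), and the two-signal threshold for medium risk are all hypothetical. Because classification under Article 6 is a legal determination, a rule like this should only flag candidates for the committee, never replace its review.

```python
from __future__ import annotations

from dataclasses import dataclass

# Simplified, illustrative subset of Annex III areas (hypothetical labels).
# Real high-risk classification under Article 6 needs legal review.
HIGH_RISK_AREAS = {
    "critical_infrastructure",
    "employment",
    "education",
    "essential_services",
}


@dataclass
class IntakeForm:
    use_case: str
    business_area: str
    uses_personal_data: bool
    makes_automated_decisions: bool  # acts without human sign-off
    customer_facing: bool


def triage(form: IntakeForm) -> str:
    """Assign a preliminary risk tier: 'high', 'medium', or 'low'.

    high:   the use case falls in a listed high-risk area;
    medium: two or more of the other risk signals are present;
    low:    everything else.
    """
    if form.business_area in HIGH_RISK_AREAS:
        return "high"
    # Count how many risk signals the intake answers raise (bools sum as 0/1).
    signals = sum([form.uses_personal_data, form.makes_automated_decisions, form.customer_facing])
    return "medium" if signals >= 2 else "low"
```

Keeping the rules this explicit makes every tier assignment explainable, which matters when the committee later has to justify why a system did or did not receive a full AIIA.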

AI Impact Assessment (AIIA). For systems triaged as medium or high-risk, the project team must complete a formal AIIA. This comprehensive assessment evaluates potential risks across multiple domains, including fairness, transparency, privacy, security, and societal impact, directly supporting the risk management requirements of EU AI Act Article 9⁴².
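One common way to structure AIIA findings is to rate each risk domain on likelihood and impact and multiply the two. That scoring convention is a widely used risk-matrix technique assumed here for illustration, not a method the EU AI Act prescribes; the 1 to 5 scales, the domain keys, and the risk-appetite threshold of 9 are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Domains named in the AIIA description above, as hypothetical keys.
DOMAINS = ("fairness", "transparency", "privacy", "security", "societal_impact")


@dataclass
class DomainRating:
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("ratings must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


@dataclass
class ImpactAssessment:
    system_id: str
    ratings: dict[str, DomainRating] = field(default_factory=dict)

    def __post_init__(self) -> None:
        unknown = set(self.ratings) - set(DOMAINS)
        if unknown:
            raise ValueError(f"unknown risk domains: {sorted(unknown)}")

    def missing_domains(self) -> list[str]:
        """Domains not yet rated; a non-empty list means the AIIA is incomplete."""
        return [d for d in DOMAINS if d not in self.ratings]

    def needs_treatment(self, appetite: int = 9) -> list[str]:
        """Domains whose score exceeds the (hypothetical) risk-appetite threshold."""
        return [d for d, r in self.ratings.items() if r.score > appetite]
```

Any domain returned by `needs_treatment()` would then require a risk treatment plan before deployment.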

TEVV Gates. The governance framework should integrate formal review “gates” into the existing software development lifecycle (SDLC) or MLOps pipeline. At these gates (e.g., before model training, before deployment), the project team must present evidence of Test, Evaluation, Validation, and Verification to the AI Governance Committee for approval before proceeding to the next stage⁵⁶.
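A TEVV gate reduces to a simple check: has the team supplied every required piece of evidence, and has the committee signed off? The gate names and evidence items below are hypothetical placeholders; substitute the stages and artifacts your own SDLC or MLOps pipeline defines.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical evidence each gate expects before a project may advance.
GATE_REQUIREMENTS: dict[str, set[str]] = {
    "pre_training": {"approved_aiia", "data_provenance_review"},
    "pre_deployment": {"test_report", "bias_evaluation", "validation_signoff", "security_review"},
}


@dataclass
class GateSubmission:
    gate: str
    evidence: set[str] = field(default_factory=set)
    committee_approved: bool = False


def missing_evidence(sub: GateSubmission) -> set[str]:
    """Evidence items still required before the gate can be passed."""
    if sub.gate not in GATE_REQUIREMENTS:
        raise KeyError(f"unknown gate: {sub.gate}")
    return GATE_REQUIREMENTS[sub.gate] - sub.evidence


def can_proceed(sub: GateSubmission) -> bool:
    """Advance only with complete evidence AND committee approval."""
    return not missing_evidence(sub) and sub.committee_approved
```

Requiring both conditions prevents a project from advancing on approval alone when evidence is incomplete, or on complete evidence that nobody has reviewed.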

Post-Market Monitoring. For deployed high-risk systems, the Charter mandates an ongoing, proactive process of collecting and analyzing performance data from the real world. This fulfills the legal obligation of EU AI Act Article 72 and ensures that the organization can identify and mitigate new risks that may emerge after deployment²².
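As a minimal illustration of a single post-market monitoring check, the function below compares recent production performance with the baseline recorded before deployment and flags a drop for review. The metric, the 0.05 tolerance, and the names `check_performance` and `MonitoringResult` are assumptions; a real monitoring plan typically tracks several metrics, including fairness and drift indicators, rather than one.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class MonitoringResult:
    window_mean: float
    baseline: float
    degraded: bool


def check_performance(
    observations: list[float], baseline: float, tolerance: float = 0.05
) -> MonitoringResult:
    """Compare recent production performance with the pre-deployment baseline.

    `observations` might be daily accuracy (any higher-is-better metric)
    collected in production; `tolerance` is the largest acceptable absolute
    drop before the result is escalated. Both are hypothetical parameters.
    """
    if not observations:
        raise ValueError("no production observations collected")
    window_mean = mean(observations)
    return MonitoringResult(window_mean, baseline, baseline - window_mean > tolerance)
```

A result with `degraded=True` would feed the incident management process the Charter defines rather than being handled ad hoc by the project team.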

Measuring Success: Key Performance Indicators

Track these metrics to demonstrate value and manage performance:

Coverage Metrics:

- Governance Coverage: Percentage of all known AI systems registered in the central inventory

- Risk Assessment Coverage: Percentage of high-risk systems in the inventory that have a completed and approved AIIA

Efficiency Metrics:

- Time-to-Review: Average number of days from AI use case intake submission to final governance decision (approval/rejection)

Risk and Compliance Metrics:

- AI-Related Incidents: Number of reported AI incidents per quarter, categorized by severity

- Incident Response Time: Mean Time to Detect (MTTD) and Mean Time to Remediate (MTTR) for AI-related incidents

- Monitoring Adherence: Percentage of post-market monitoring reviews for high-risk systems completed on schedule

Training and Awareness Metrics:

- Training Completion Rate: Percentage of required employees who have completed their assigned AI governance training

Tracking these metrics provides the AI Governance Committee and executive leadership with quantitative data on the health and effectiveness of the program, enabling data-driven decisions for its continuous improvement⁹⁰.
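The KPIs above are simple ratios and averages. The helpers below sketch how they might be computed from inventory, intake, and incident records; the function names and input shapes are hypothetical.

```python
from __future__ import annotations

from datetime import date, datetime
from statistics import mean


def percentage(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage (0 when whole is 0).

    Use for Governance Coverage, Risk Assessment Coverage, Monitoring
    Adherence, and Training Completion Rate.
    """
    return 100.0 * part / whole if whole else 0.0


def average_days(pairs: list[tuple[date, date]]) -> float:
    """Mean days between (start, end) pairs, e.g. intake -> decision (Time-to-Review).

    Raises statistics.StatisticsError if `pairs` is empty.
    """
    return mean((end - start).days for start, end in pairs)


def mean_hours(pairs: list[tuple[datetime, datetime]]) -> float:
    """Mean elapsed hours, e.g. occurrence -> detection (MTTD)
    or detection -> remediation (MTTR).

    Raises statistics.StatisticsError if `pairs` is empty.
    """
    return mean((end - start).total_seconds() / 3600 for start, end in pairs)
```

For example, if 45 of 60 known AI systems are registered, `percentage(45, 60)` gives a Governance Coverage of 75.0%. These figures are illustrative only.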

Verifiable Industry Examples

Leading organizations have established AI governance frameworks, though comprehensive details typically remain internal:

Microsoft has established a Responsible AI Standard to guide its product development and publishes a Responsible AI Transparency Report detailing its governance practices and decision-making for releasing AI systems. No publicly verifiable success metric has been identified.

Google has published a set of AI Principles that guide its research and product development, focusing on applications that are socially beneficial and avoid unfair bias. No publicly verifiable success metric has been identified.

Amazon Web Services (AWS) achieved ISO/IEC 42001 certification for its AI Management System (AIMS). The certification was verified by Schellman Compliance, LLC, an ANAB-accredited certification body, validating that AWS has implemented a formal system to manage AI risks and opportunities.

Microsoft 365 Copilot has achieved ISO/IEC 42001 certification, confirming that an independent third party has validated Microsoft’s framework for managing risks associated with the development, deployment, and operation of the service.

Key Trends and Emerging Practices

Several developments are shaping AI governance evolution:

Technological Advances. TEVV practices are maturing: automated bias testing and model cards are becoming standard, and AI assurance tools are emerging to support continuous monitoring requirements.

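Regulatory Momentum. The EU AI Act’s obligations are phasing in: prohibited practices have applied since 2 February 2025, general-purpose AI model obligations since 2 August 2025, and most high-risk system requirements apply from 2 August 2026. ISO/IEC 42001 adoption is accelerating as organizations seek third-party validation of their governance maturity.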

Industry Convergence. AI governance is increasingly integrated with existing security and compliance programs rather than operating as a separate function. Organizations are building unified GRC (governance, risk, and compliance) platforms that encompass AI alongside traditional IT risks.

FAQ: Common Questions About AI Governance Charters

What is an AI Governance Charter? An AI Governance Charter is a formal, foundational document that defines the mission, scope, authority, and accountability structures for managing artificial intelligence within an organization. It serves as the constitution for the AI governance program.

Why do companies need an AI Governance Charter? For any organization developing, deploying, or using “high-risk” AI systems within the European Union, a structured governance framework is a legal obligation under the EU AI Act. Beyond compliance, it manages risks, builds stakeholder trust, and enables safe innovation.

How long does Charter implementation take? Drafting and approving the Charter takes roughly 90 days under the four-phase creation process. Activating it then follows a 30-60-90 day plan, a structured, time-bound approach that builds and maintains momentum. Full maturity typically requires 12-18 months of continuous improvement.

What regulations apply to AI governance? Key frameworks include the EU AI Act for high-risk systems, NIST AI RMF for risk management methodology, and ISO/IEC 42001 for certifiable management systems. Sector-specific regulations may also apply.

Who should lead AI governance efforts? AI governance cannot be a grassroots effort. It requires an explicit, visible, and unwavering mandate from the highest levels of the organization, such as the CEO and the Board of Directors. Day-to-day operations typically involve cross-functional teams including legal, risk, IT, and business units.

The AI Governance Charter isn’t just another policy document. It’s your organization’s constitutional framework for navigating the complex intersection of innovation, risk, and regulation in the age of artificial intelligence. Organizations that build effective governance now will have competitive advantages tomorrow. Those that don’t will find themselves scrambling to catch up while managing preventable crises.

Start with executive mandate. Build your foundation. Create your Charter. Your future depends on it.

Resources List

- EU AI Act – Regulation (EU) 2024/1689

- AI Governance Charter – A Great Investment – Tech Jacks Solutions

- NIST AI Risk Management Framework (AI RMF 1.0)

- ISO/IEC 42001:2023 Artificial Intelligence Management System Standards – Microsoft

- What is AI Governance? – IBM

- Article 6: Classification Rules for High-Risk AI Systems – EU AI Act

- Annex III: High-Risk AI Systems – EU AI Act

- Article 9: Risk Management System – EU AI Act

- High-Risk AI Systems and Requirements – WilmerHale

- Article 17: Quality Management System – EU AI Act

- Design of a Quality Management System based on the EU AI Act – arXiv

- Post-market Monitoring and Enforcement – IAPP

- Navigating New Regulations for AI in the EU – AuditBoard

- EU AI Act Obligations for High-Risk AI Systems – AO Shearman

- AI RMF Core – NIST AIRC

- NIST AI RMF Playbook

- Core Functions: Govern, Map, Measure, Manage – IS Partners

- Understanding ISO 42001 and Demonstrating Compliance – ISMS.online

- Understanding ISO 42001: The World’s First AI Management System Standard – A-LIGN

- ISO/IEC 42001: A New Standard for AI Governance – KPMG

- AI Act as a Neatly Arranged Website – Legal Text

- AI Governance Committee Implementation – Tech Jacks Solutions

- 10 Components of an Effective AI Governance Policy

- Risk Management in AI – IBM

- The Pathway to AI Governance

- Ten Steps to Creating an AI Policy – Corporate Governance Institute

- Responsible AI Principles and Approach – Microsoft

- AI Principles – Google AI

- ISO 42001 Artificial Intelligence Management System – AWS

Ready-Made Templates for Download

Need help creating your own AI Governance Charter, or want to use our Free or Professional Template? Save time, energy, and cost with our carefully crafted and vetted templates.

Thanks for checking out the article and visiting our website. All of your support is valued and appreciated! Check out our Template Marketplace!