AI Use Case Policy: 5 Essential Steps for Success

Artificial Intelligence (AI) has evolved into a powerful engine of change across nearly every industry, promising better productivity, sharper insights, and faster innovation. But integrating AI isn’t a free-for-all. You can’t just unleash it the way you’d binge-watch your favorite streaming series, with no guidelines at all. Enter the AI Use Case Policy (AUCP): your roadmap for clear, responsible, and effective AI usage.

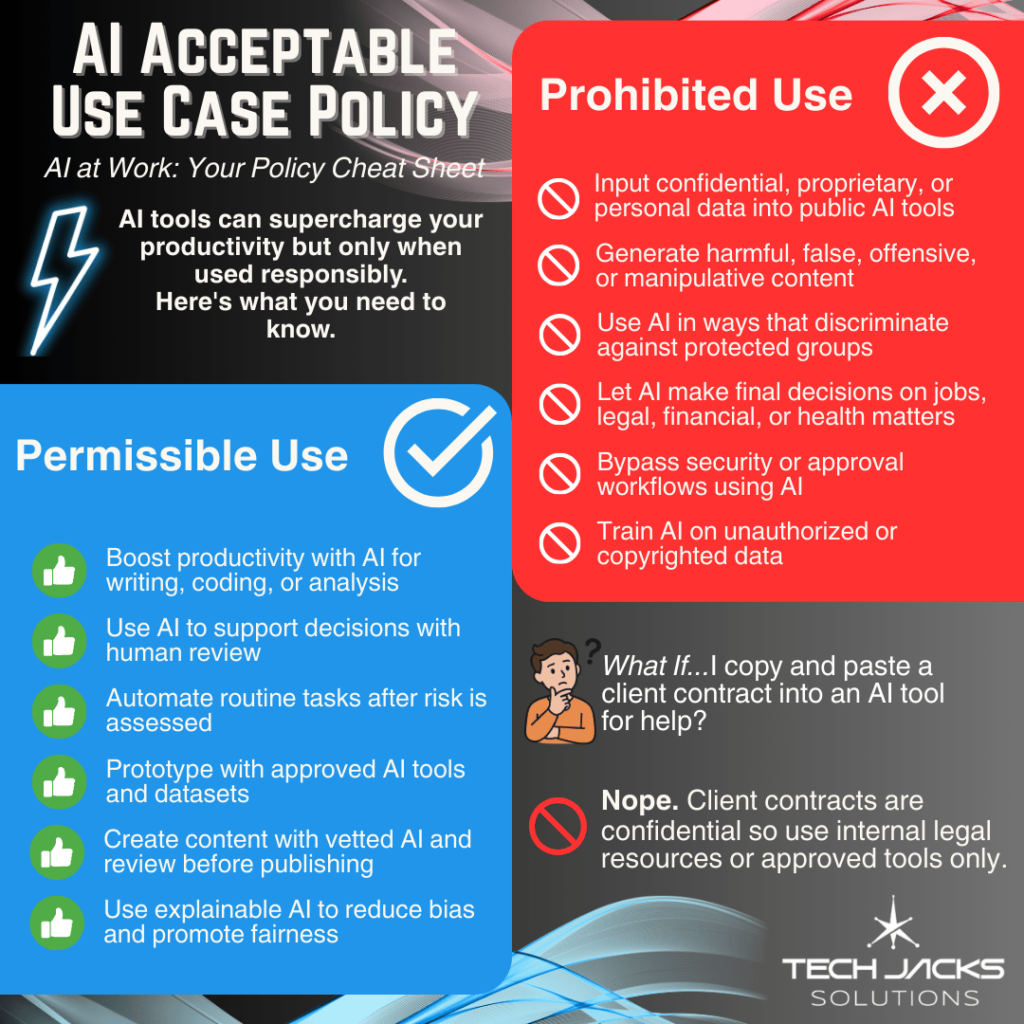

Organizations can pair their AUCP with an AI Acceptable Use Policy to establish guidelines for AI usage, ensuring that customer data and other concerns are properly managed. AI can significantly enhance productivity and decision-making, but it also poses real risks that need to be addressed through clear policies and ongoing maintenance.

Why Bother With an AI Use Case Policy?

Think of your AI Use Case Policy as the house rules for AI. Sure, there isn’t a law explicitly requiring a document with precisely this label, but guidance and frameworks from organizations like GAO, NIST, OECD, and CSA strongly encourage you to clearly document your AI purposes. It helps keep your AI compliant, transparent, and ethically sound (plus, it might keep you out of hot water with regulators and clients).

Having clear guidelines isn’t just about avoiding trouble; it’s also about enabling your team to maximize AI’s potential confidently. When everyone knows the rules, they can innovate without hesitation, experiment responsibly, and push the envelope while staying aligned with ethical norms and regulatory requirements.

What Exactly Does This Policy Do?

- Clarifies Expectations: Clearly spells out the use cases for which AI can be implemented.

- Reduces Risks: Tackles big-ticket issues like privacy breaches, algorithmic biases, and unauthorized data access head-on.

- Builds Trust: Keeps your team, customers, and stakeholders confident in your AI decisions.

- Boosts Innovation: Sets clear guardrails so your team can innovate without constantly worrying whether they’re crossing regulatory, security, or privacy lines.

- Enhances Accountability: Provides clear roles and responsibilities, making accountability straightforward and transparent.

- Supports Strategic Goals: Ensures AI implementations align with your organization’s broader strategic objectives.

Steps to Creating an Effective AI Use Case Policy

Step 1: Identify and Document Your AI Use Cases

Start by getting everyone together and mapping out exactly where you’re using AI, recording each use case in an AI Use Case Tracker that answers:

- What’s the purpose?

- Who’s involved?

- What data is being used?

- What’s the expected outcome?

- Are there existing tools or processes that will interact with AI?
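If you keep the tracker as structured data rather than a free-form document, incomplete entries are easy to spot before they reach a review. The sketch below is a minimal illustration, assuming a hypothetical `AIUseCase` record whose fields mirror the five questions above; the field names and the `missing_answers` helper are our own invention, not part of any standard or tool.

```python
from dataclasses import dataclass, field, fields

@dataclass
class AIUseCase:
    """One row in a hypothetical AI Use Case Tracker; fields mirror the Step 1 questions."""
    name: str
    purpose: str = ""                                      # What's the purpose?
    owners: list[str] = field(default_factory=list)        # Who's involved?
    data_sources: list[str] = field(default_factory=list)  # What data is being used?
    expected_outcome: str = ""                             # What's the expected outcome?
    integrations: list[str] = field(default_factory=list)  # Existing tools/processes touched

def missing_answers(case: AIUseCase) -> list[str]:
    """Return the names of fields left empty, so the entry can be sent back for completion."""
    return [f.name for f in fields(case) if not getattr(case, f.name)]

entry = AIUseCase(
    name="Support ticket summarizer",
    purpose="Summarize inbound tickets for triage",
    owners=["Customer Support", "IT Security"],
    data_sources=["ticket text"],
)
print(missing_answers(entry))  # ['expected_outcome', 'integrations']
```

In this sketch an empty answer means "not answered yet," so teams would write "none" explicitly when no existing tools are involved.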

Potential obstacle: Getting comprehensive buy-in. Some teams might resist documentation or worry about losing autonomy. Keep communication open and clearly illustrate the benefits; less ambiguity equals fewer headaches down the road. Engage teams early in the process and highlight how clear documentation can improve efficiency and collaboration. If you put a tollgate in place requiring security teams to approve any new use of AI, doing this work upfront saves time and can head off conflict when deadlines get tight.

Step 2: Risk Classification

Not all AI use cases are created equal. Just think of the implications in healthcare and finance: those industries alone make it clear that authorized use cases should be thoroughly vetted. Organizations can classify each use case as high, medium, or low risk based on:

- Data sensitivity

- Potential for harm

- Regulatory complexity

- Impact on users and stakeholders

- Client requirements

Example: AI analyzing customer purchasing trends? Low risk. AI making healthcare diagnostic recommendations? Definitely high risk. AI monitoring employee behavior? Medium to high risk, depending on context.
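To make classification repeatable, some teams rate each criterion and apply simple rules. The sketch below assumes a rating of 1 (low concern) to 3 (high concern) per criterion and made-up thresholds: any criterion rated 3 makes a use case high risk, and a total of 9 or more makes it medium. Both the ratings and the thresholds are illustrative assumptions to tune against your own tiers, not a recognized scoring method.

```python
from enum import Enum

class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

# The five Step 2 criteria; each is rated 1 (low concern) to 3 (high concern).
CRITERIA = (
    "data_sensitivity",
    "potential_for_harm",
    "regulatory_complexity",
    "user_impact",
    "client_requirements",
)

def classify(ratings: dict[str, int]) -> Tier:
    """Map criterion ratings to a tier using illustrative (non-standard) rules."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"unrated criteria: {missing}")
    scores = [ratings[c] for c in CRITERIA]
    if any(s not in (1, 2, 3) for s in scores):
        raise ValueError("each rating must be 1, 2, or 3")
    if max(scores) == 3:    # one severe concern is enough for high risk
        return Tier.HIGH
    if sum(scores) >= 9:    # several moderate concerns add up to medium risk
        return Tier.MEDIUM
    return Tier.LOW

# Hypothetical ratings for the three examples above.
purchasing_trends = dict(data_sensitivity=2, potential_for_harm=1,
                         regulatory_complexity=1, user_impact=1, client_requirements=1)
diagnostics = dict(data_sensitivity=3, potential_for_harm=3,
                   regulatory_complexity=3, user_impact=3, client_requirements=2)
employee_monitoring = dict(data_sensitivity=2, potential_for_harm=2,
                           regulatory_complexity=2, user_impact=2, client_requirements=1)

for name, r in [("purchasing trends", purchasing_trends),
                ("diagnostics", diagnostics),
                ("employee monitoring", employee_monitoring)]:
    print(name, classify(r).value)  # low, high, medium respectively
```

Raising any single employee monitoring rating to 3 (for example, covert monitoring) tips it into high risk, which reflects the "depending on context" caveat.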

There is no standardized scheme for labeling risk, so each organization should define its own risk tiers and apply them as needed. Our examples above are descriptive. The EU AI Act does define risk tiers, though they may not apply to you or may not best describe the particular risk you are evaluating:

- Unacceptable Risk

- High Risk

- Limited Risk

- Minimal Risk

Step 3: Define Clear AI Workflows and Boundaries

Lay out crystal-clear (or as clear as you can make them) workflows that state what’s allowed and what’s not:

- Define acceptable AI tools and platforms.

- Specify permissible data use and storage practices.

- Outline clear procedures for incident reporting and escalation.
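Boundaries are easiest to enforce when the approved-tool list is written down in a form that can be checked. The sketch below assumes a hypothetical four-level data classification (public, internal, confidential, restricted) and a register mapping each approved tool to the most sensitive class it may handle; the tool names are placeholders. Any request that fails the check returns a reason that could feed your escalation procedure.

```python
# Hypothetical data classification levels, least to most sensitive.
CLASS_ORDER = ["public", "internal", "confidential", "restricted"]

# Hypothetical approved-tool register: tool -> most sensitive class it may process.
APPROVED_TOOLS = {
    "internal-chat-assistant": "confidential",
    "public-code-helper": "public",
}

def check_request(tool: str, data_class: str) -> tuple[bool, str]:
    """Return (allowed, reason) for using `tool` with data of `data_class`."""
    if data_class not in CLASS_ORDER:
        return False, f"unknown data classification '{data_class}': escalate to governance"
    if tool not in APPROVED_TOOLS:
        return False, f"'{tool}' is not an approved tool: submit it for review"
    ceiling = APPROVED_TOOLS[tool]
    if CLASS_ORDER.index(data_class) > CLASS_ORDER.index(ceiling):
        return False, f"'{tool}' is approved only up to {ceiling} data"
    return True, "allowed"

print(check_request("internal-chat-assistant", "internal"))  # (True, 'allowed')
print(check_request("public-code-helper", "confidential"))
```

Keeping the ceiling per tool, rather than a flat yes/no list, lets a low-risk tool stay approved for public data without opening it up to sensitive data.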

Potential obstacle: Overcomplicating things. Make your workflows detailed enough to be clear but simple enough to follow without a Ph.D. Use visual aids or simple checklists to communicate complex processes easily. Some participants will appreciate the workflow and do everything they can to use it to speed up their own work, or help refine it so it runs without major hitches.

Others, however, will offer feedback mainly in the form of complaints that the tools they want to use are harmless and that the mere mention of an evaluation is grounds for revolt. Fortunately, governance isn’t about feelings; it protects everyone. Welcome these requestors into the process anyway, and show them how it helps protect their organization and, as a result, their own jobs.

Step 4: Integrate with Existing Governance

Don’t reinvent the wheel. Embed your AI Use Case Policy into your existing governance structure:

- Regular check-ins by an AI governance committee.

- Periodic policy updates to stay current.

- Incorporate feedback loops with stakeholders to continuously refine and improve the policy.

- Publish the policy on an internal SharePoint or Confluence site where the workforce can easily view it as needed.
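Regular check-ins are easier to keep on schedule if each inventory entry records when it was last reviewed. The sketch below flags overdue reviews; the intervals per risk tier (90, 180, and 365 days) and the entry fields are assumptions for illustration, not requirements from any framework.

```python
from datetime import date, timedelta

# Illustrative review intervals per risk tier (assumptions, not a framework requirement).
REVIEW_INTERVAL = {
    "high": timedelta(days=90),
    "medium": timedelta(days=180),
    "low": timedelta(days=365),
}

def overdue_reviews(inventory: list[dict], today: date) -> list[str]:
    """Names of use cases whose last committee review is older than their tier allows."""
    return [
        item["name"]
        for item in inventory
        if today - item["last_reviewed"] > REVIEW_INTERVAL[item["tier"]]
    ]

inventory = [
    {"name": "Fraud flagging", "tier": "high", "last_reviewed": date(2025, 1, 2)},
    {"name": "Ticket summarizer", "tier": "low", "last_reviewed": date(2024, 9, 1)},
]
print(overdue_reviews(inventory, today=date(2025, 4, 15)))  # ['Fraud flagging']
```

Tying the cadence to the risk tier means higher-risk use cases come back to the committee more often.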

Step 5: Train Your People

Let’s face it, policies only work if people actually follow them:

- Regular, role-specific training sessions.

- Accessible resources and ongoing communication.

- Make the policy easily available and visible. Use an internal SharePoint or Confluence page where the workforce can easily view it as needed.

- Reinforce training with interactive sessions, quizzes, and practical exercises.

Example: Quick, engaging training videos or casual “AI Lunch & Learns” can boost employee understanding and compliance without feeling like another tedious chore. Create opportunities for open dialogue to address questions or concerns immediately.

Selecting Approved AI Tools

When selecting approved AI tools, organizations must consider several factors to ensure the safe and responsible use of these technologies. First and foremost, evaluate the tool’s security measures and data management practices. It’s crucial to ensure that the AI tools comply with relevant laws and regulations, including those related to data privacy and protection.

Organizations should also assess the potential risks associated with the use of AI tools. This includes concerns about data privacy, potential bias in AI algorithms, and the risk of generating inappropriate content. By carefully evaluating these factors, organizations can select AI tools that align with their values and goals, while also protecting their customers’ data and maintaining compliance with applicable laws.

Additionally, it’s important to establish clear guidelines for the use of approved AI tools. This includes specifying how data classified as sensitive or personally identifiable information should be handled. By setting these guidelines, organizations can ensure that their use of AI tools is both responsible and compliant with relevant regulations.

Managing Compliance Risks

Managing compliance risks is a critical component of any organization’s AI strategy. This involves ensuring that the use of AI tools complies with relevant laws and regulations, including data protection and privacy laws. Organizations must establish clear guidelines for the use of AI tools, covering aspects such as data management, training data, and potential risks.

Compliance teams should regularly review and update the organization’s AI acceptable use policy to ensure that it remains effective and relevant. This includes addressing new risks and technological advancements as they emerge. Furthermore, organizations should exercise caution when using AI tools, particularly when dealing with sensitive information. It’s essential to have robust security measures in place to protect against potential risks, including illegal activities and inappropriate content.

By prioritizing compliance and responsible use, organizations can harness the benefits of AI while minimizing its risks. This approach not only protects the organization but also builds trust with customers and stakeholders, ensuring the ethical and responsible use of AI technologies.

Keep Track: AI Use Case and System Inventories

Maintaining updated AI use case and system inventories isn’t just good practice; it’s essential. We cover this in more depth in our separate article and infographic. These inventories help to:

- Simplify compliance and audit processes.

- Identify potential risks quickly.

- Align with best practices recommended by CSA and NIST.

- Provide a comprehensive view for strategic planning and resource allocation.

Example AI Use Case tracker: Examples are just for representational purposes – use case attributes selected are randomized.
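Auditors and committee members often want the inventory as a spreadsheet plus a quick risk breakdown. A minimal sketch using only Python’s standard library (`csv`, `io`, `collections.Counter`) might look like this; the column names and sample rows are hypothetical, not a required schema.

```python
import csv
import io
from collections import Counter

# Hypothetical inventory columns; adapt to your own tracker.
FIELDS = ["use_case", "system", "owner", "data_used", "risk_tier", "status"]

def to_csv(rows: list[dict]) -> str:
    """Serialize inventory rows to CSV text, e.g. for an audit request."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

def tier_summary(rows: list[dict]) -> Counter:
    """Count use cases per risk tier for a quick risk overview."""
    return Counter(row["risk_tier"] for row in rows)

inventory = [
    {"use_case": "Fraud flagging", "system": "Transaction monitor", "owner": "Finance Ops",
     "data_used": "transaction records", "risk_tier": "high", "status": "approved"},
    {"use_case": "Ticket summaries", "system": "Support chatbot", "owner": "Customer Service",
     "data_used": "ticket text", "risk_tier": "low", "status": "approved"},
    {"use_case": "Threat triage", "system": "SOC assistant", "owner": "Security",
     "data_used": "security logs", "risk_tier": "medium", "status": "pilot"},
]

print(to_csv(inventory))
print(tier_summary(inventory))
```

`csv.DictWriter` raises an error if a row contains a key outside `FIELDS`, which is a cheap way to catch tracker entries that drift from the agreed columns.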

Real-Life AI Acceptable Use Case Examples

- Healthcare: AI-powered diagnostic tools specifically approved for radiology and pathology, ensuring accuracy and ethical standards.

- Finance: AI systems for transaction monitoring to flag fraudulent activity, safeguarding assets and enhancing customer trust.

- Technology: AI-based cybersecurity threat detection tools that continuously monitor and respond to threats, ensuring robust protection.

- Customer Service: Generative AI chatbots handling non-sensitive customer queries, improving service response times and customer satisfaction.

- Marketing: AI-driven analytics to personalize content and predict consumer behavior responsibly.

- Human Resources: AI systems to streamline recruitment processes, designed and monitored to support fair, unbiased decision-making.

Remember, your organization’s specifics will vary depending on your industry, size, and regulatory landscape. Customizing your AUCP is key to effectively managing AI use.

Wrapping it Up

An AI Use Case Policy isn’t just paperwork. It could be your organization’s championship playbook for responsible AI. Done right, it simplifies life for your team, protects your organization, and ensures your AI tools become powerful allies, not unpredictable headaches. Stay proactive, stay compliant, and keep innovating (and winning) responsibly.

To ensure compliance with data protection laws like GDPR and CCPA, clear measures must be established for data collection and usage, coupled with security protocols that protect personal information and bolster the integrity of AI systems.

Need help getting your AI Use Case Policy and inventory system established? Contact us for advisory or hands-on services. Take care.
