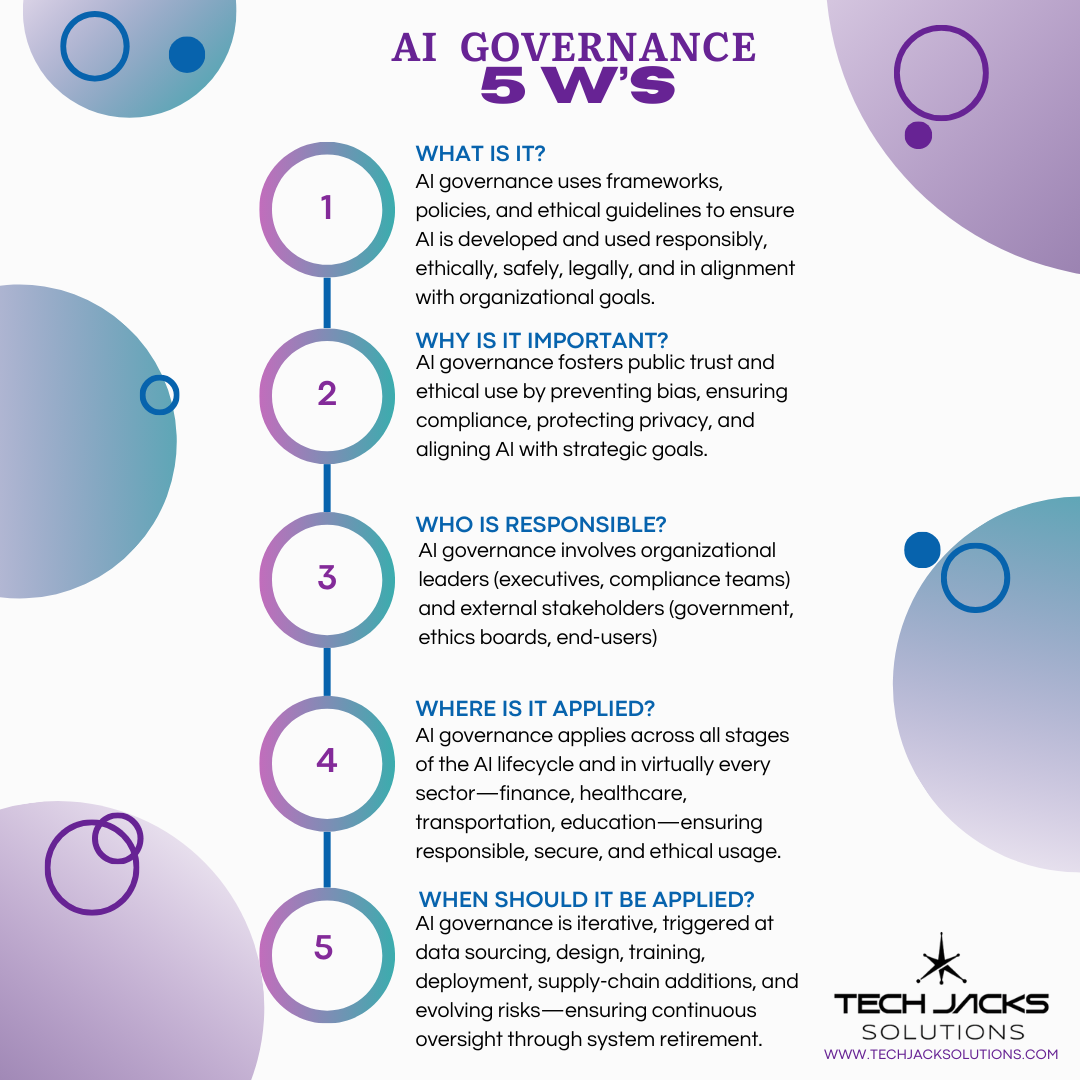


The 5 Ws of Great AI Governance

AI governance isn’t optional anymore. It’s the foundation that separates organizations using AI successfully from those creating expensive disasters.

Companies deploying AI without proper governance face a brutal reality. When algorithms fail, they don’t just crash. They discriminate, leak data, or get life-changing decisions wrong. These failures happen regularly.

What Is AI Governance?

AI governance is your organization’s system for keeping artificial intelligence safe, legal, ethical, and strategically aligned. It’s the management layer ensuring your AI does what it should (and doesn’t do what it shouldn’t).

The U.S. National Institute of Standards and Technology (NIST) makes governance the “GOVERN” function of its AI Risk Management Framework (AI RMF), one of four core functions alongside MAP, MEASURE, and MANAGE. GOVERN is cross-cutting: it sets the policies, roles, and oversight that shape every AI decision your organization makes. ISO/IEC 42001 treats AI governance as a certifiable management system, much as ISO/IEC 27001 does for information security.

Governance covers six core areas:

- Strategy alignment (does this AI project serve business goals?)

- Risk assessment and mitigation

- Security controls

- Privacy protection

- Fairness testing

- Ongoing monitoring and assurance

Organizations with mature governance implement dozens or even hundreds of specific controls across these areas. Why? Fixing problems after deployment costs significantly more than preventing them through proactive governance.

Why Is AI Governance Important?

Ungoverned AI creates five categories of damage:

Regulatory penalties. The EU AI Act sets fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, for the most serious violations, such as using prohibited AI practices. This is not theoretical. The Act bans certain uses outright (social scoring, for example) and heavily regulates high-risk applications affecting health, safety, or fundamental rights.
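To make the top penalty tier concrete: under Article 99 of the Act, the ceiling for most companies is the higher of the two figures, while SMEs and start-ups face the lower. The sketch below assumes the “whichever is higher” rule and only computes the legal maximum; actual fines are set case by case and can be far lower.

```python
def max_eu_ai_act_fine(worldwide_turnover_eur: float) -> float:
    """Ceiling of the EU AI Act's top fine tier for an undertaking.

    Sketch only: assumes the "whichever is higher" rule; SMEs and start-ups
    fall under "whichever is lower", and real fines are set case by case.
    """
    fixed_cap_eur = 35_000_000
    turnover_cap_eur = worldwide_turnover_eur * 7 / 100  # 7% of turnover
    return max(fixed_cap_eur, turnover_cap_eur)


# €2 billion turnover: 7% (€140M) exceeds €35M, so €140M is the ceiling.
print(f"{max_eu_ai_act_fine(2_000_000_000):,.0f}")  # 140,000,000
# €100 million turnover: 7% is only €7M, so the €35M figure applies.
print(f"{max_eu_ai_act_fine(100_000_000):,.0f}")  # 35,000,000
```

The fixed figure acts as a floor on the ceiling, which is why smaller companies can face a maximum that is a much larger share of their turnover.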

Reputational damage. When AI systems exhibit bias or make controversial decisions, public backlash follows swiftly. Organizations have abandoned major AI initiatives after discovering discriminatory patterns in their algorithms.

Security breaches. NIST’s adversarial machine learning taxonomy (NIST AI 100-2) documents how unprotected models can be poisoned, extracted, or manipulated. Researchers have demonstrated attacks that compromise model integrity and extract sensitive training data.

Privacy violations. Employees can inadvertently share proprietary or personal information with public AI systems. Companies that discover such leaks often respond with immediate policy changes and access restrictions.

Biased outcomes. Healthcare algorithms have been found to systematically provide different care recommendations for different demographic groups, affecting patient care and requiring extensive remediation efforts.

Good governance converts these threats into competitive advantages. Organizations with mature AI governance report faster compliance audits and fewer incidents during AI deployments.

Who Is Responsible for AI Governance?

Governance requires a coalition, not a czar.

The typical AI Governance Committee includes:

- Chief Data/AI Officer (strategy and standards)

- Chief Information Security Officer (security controls)

- General Counsel (legal compliance)

- Chief Risk Officer (risk assessment)

- Domain executives (business alignment)

The Cloud Security Alliance’s AI Organizational Responsibilities framework provides detailed RACI (Responsible, Accountable, Consulted, Informed) charts showing how different teams collaborate on data security, model monitoring, and fairness. Examples from the framework:

- Data scientists: responsible for bias testing

- MLOps engineers: accountable for model monitoring

- Legal team: consulted on regulatory requirements

- Business owners: informed of deployment decisions
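A RACI chart is, in effect, a small data structure that can be checked automatically. The sketch below encodes the four example pairings above in a hypothetical matrix (the other teams and cells are illustrative assumptions, not taken from the CSA framework) and enforces the usual RACI convention that each activity has exactly one Accountable party.

```python
from enum import Enum


class Role(Enum):
    RESPONSIBLE = "R"
    ACCOUNTABLE = "A"
    CONSULTED = "C"
    INFORMED = "I"


# Hypothetical RACI matrix: activity -> {team: role}. The four example
# pairings above are included; every other cell is an illustrative assumption.
RACI = {
    "bias testing": {
        "data_scientists": Role.RESPONSIBLE,
        "chief_ai_officer": Role.ACCOUNTABLE,
    },
    "model monitoring": {
        "mlops_engineers": Role.ACCOUNTABLE,
        "data_scientists": Role.CONSULTED,
    },
    "regulatory requirements": {
        "compliance": Role.RESPONSIBLE,
        "chief_risk_officer": Role.ACCOUNTABLE,
        "legal_team": Role.CONSULTED,
    },
    "deployment decision": {
        "mlops_engineers": Role.RESPONSIBLE,
        "chief_ai_officer": Role.ACCOUNTABLE,
        "business_owners": Role.INFORMED,
    },
}


def validate_raci(matrix):
    """Return problems found; RACI convention allows exactly one Accountable per activity."""
    problems = []
    for activity, assignments in matrix.items():
        accountable = [t for t, r in assignments.items() if r is Role.ACCOUNTABLE]
        if len(accountable) != 1:
            problems.append(f"{activity}: {len(accountable)} accountable teams, expected 1")
    return problems


def teams_with(matrix, activity, role):
    """Teams holding `role` for `activity`."""
    return [t for t, r in matrix[activity].items() if r is role]


print(validate_raci(RACI))  # []
print(teams_with(RACI, "model monitoring", Role.ACCOUNTABLE))  # ['mlops_engineers']
```

Running a check like this whenever the chart changes catches the two most common RACI mistakes: activities nobody owns and activities with competing owners.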

Major financial institutions structure governance through dedicated AI divisions with specialists across trading, risk, compliance, and technology. Their governance boards bring together diverse perspectives to address AI challenges.

External stakeholders matter too. Auditors verify controls. Regulators enforce standards. Affected users deserve transparency about AI’s impact on them.

Where Is AI Governance Applied?

Governance follows AI everywhere. No exceptions.

Healthcare. Clinical decision support and diagnostic AI require governance for validation, performance monitoring, and clinician training. The FDA regulates many AI-based medical devices as Software as a Medical Device (SaMD).

Finance. AI processing loan applications must comply with fair lending laws, monitor for discriminatory patterns, and maintain audit trails for regulators.

Critical infrastructure. Smart grids and autonomous transport systems require governance focused on reliability and safety to prevent service disruptions.

Employment and education. Hiring algorithms and grading systems need oversight to ensure fairness and transparency.

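The EU AI Act classifies many AI systems in these domains as “high-risk,” requiring, among other obligations:

- Automatic logging of events (record-keeping)

- Human oversight measures

- Data governance, including examination of training data for possible biases

- Transparency and instructions for use for deployers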

ISO/IEC 42001 and the NIST AI RMF offer comparable, voluntary safeguards for organizations operating globally.
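Decision logging is the most directly technical of the high-risk requirements above. The Act requires high-risk systems to support automatic record-keeping but does not prescribe a log format, so the sketch below assumes a hypothetical append-only JSON Lines file with one record per decision; the function names and fields are illustrative.

```python
import json
import os
import tempfile
import uuid
from datetime import datetime, timezone


def log_decision(path, model_id, model_version, inputs, output, human_reviewer=None):
    """Append one decision as a JSON line and return the record.

    Hypothetical schema: these field names are illustrative, not mandated by the Act.
    """
    record = {
        "decision_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "human_reviewer": human_reviewer,  # who reviewed or could override, if anyone
    }
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return record


def read_decisions(path):
    """Load every logged decision, e.g. to answer an auditor's request."""
    with open(path, encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]


log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
log_decision(
    log_path, "loan-scorer", "1.4.2",
    inputs={"applicant_id": "A-123", "income": 52000},
    output={"approved": False, "score": 0.41},
    human_reviewer="analyst-7",
)
print(len(read_decisions(log_path)))  # 1
```

Recording the model version alongside each decision is what lets an auditor tie a contested outcome back to the exact model that produced it.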

Cloud deployments need special attention. Major cloud providers offer built-in governance features for drift detection and explainability. Organizations still need policies for deployment authorization, testing protocols, and retirement procedures.
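Whether drift detection comes from a cloud service or an in-house job, it often works by comparing a feature’s production distribution with its training-time baseline. One widely used statistic is the Population Stability Index (PSI). The standard-library sketch below assumes fixed bin edges and a 0.25 alert threshold, a commonly cited rule of thumb rather than a value any provider mandates.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Share of values in each bin; `edges` are sorted interior bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline, production, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (p - b) * ln(p / b)."""
    b = [max(s, eps) for s in bin_shares(baseline, edges)]  # clamp empty bins to avoid log(0)
    p = [max(s, eps) for s in bin_shares(production, edges)]
    return sum((pi - bi) * math.log(pi / bi) for bi, pi in zip(b, p))


DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cut-off for "significant" drift

edges = [0.2, 0.4, 0.6, 0.8]
baseline = [i / 100 for i in range(100)]              # training-time scores
production = [min(s + 0.3, 0.99) for s in baseline]   # scores shifted upward

score = psi(baseline, production, edges)
print(f"PSI = {score:.2f}", "-> alert operators" if score > DRIFT_THRESHOLD else "-> stable")
```

Every term in the sum is non-negative, so PSI is zero only when the binned distributions match and grows as they diverge.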

When Should AI Governance Be Applied?

Always. Governance runs continuously through five lifecycle stages:

Plan & Design. Before writing code, governance committees review project proposals against responsible AI principles. Projects failing ethical review don’t proceed.

Build & Validate. Organizations document each model’s intended use, limitations, and test results. Testing tools help teams probe models for bias before deployment.
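The documentation step can double as a release gate. The sketch below assumes a hypothetical `ModelCard` record (loosely in the spirit of published “model cards”) and blocks deployment until intended use, limitations, and passing test results are all recorded; the field names and rules are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical documentation record; fields are illustrative, not a standard schema."""
    name: str
    version: str
    intended_use: str
    limitations: list[str] = field(default_factory=list)
    test_results: dict[str, bool] = field(default_factory=dict)  # test name -> passed?


def release_blockers(card: ModelCard) -> list[str]:
    """Reasons the model is not ready to deploy; an empty list means the gate passes."""
    blockers = []
    if not card.intended_use.strip():
        blockers.append("intended use not documented")
    if not card.limitations:
        blockers.append("no limitations recorded")
    if not card.test_results:
        blockers.append("no test results recorded")
    blockers += [f"failed test: {name}" for name, passed in card.test_results.items() if not passed]
    return blockers


card = ModelCard(
    name="ticket-triage",
    version="0.3.0",
    intended_use="Prioritize support tickets; not for decisions about individuals.",
    limitations=["English-language tickets only"],
    test_results={"accuracy >= 0.90": True, "bias probe: parity gap <= 0.05": False},
)
print(release_blockers(card))  # ['failed test: bias probe: parity gap <= 0.05']
```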

Deploy & Operate. Companies use gradual rollouts for algorithm changes, starting with small user percentages. Automatic rollback capabilities activate if metrics decline. Human oversight remains available.
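A staged rollout with automatic rollback can be sketched in a few lines. The stage percentages, the 0.02 tolerance, and the `measure` hook are assumptions for illustration; hashing user IDs into stable buckets keeps each user in the same cohort as exposure widens.

```python
import hashlib
from typing import Callable

ROLLOUT_STAGES = [1, 5, 25, 50, 100]  # percent of users; hypothetical schedule


def in_rollout(user_id: str, percent: int) -> bool:
    """Hash each user to a stable bucket 0-99, so a user admitted at 5% stays in at 25%."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < percent


def staged_rollout(measure: Callable[[int], float], baseline: float,
                   max_drop: float = 0.02) -> tuple[str, int]:
    """Widen exposure stage by stage; stop and roll back if the metric drops too far.

    `measure(percent)` is a hypothetical hook returning the new model's metric
    observed while `percent` of users see it.
    """
    for percent in ROLLOUT_STAGES:
        if measure(percent) < baseline - max_drop:
            # A real system would also revert traffic and notify a human reviewer here.
            return ("rolled_back", percent)
    return ("fully_deployed", 100)


def healthy(percent: int) -> float:
    return 0.91


def degrades_at_scale(percent: int) -> float:
    return 0.91 if percent < 25 else 0.85


print(staged_rollout(healthy, baseline=0.90))            # ('fully_deployed', 100)
print(staged_rollout(degrades_at_scale, baseline=0.90))  # ('rolled_back', 25)
```

Returning a status rather than acting directly keeps the rollback decision visible, so the human oversight this stage calls for can confirm or override it.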

Monitor & Improve. Fairness toolkits run regular bias checks on production algorithms. When drift is detected, models retrain automatically or alert operators.
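One common production bias check compares selection rates across groups. The sketch below computes the disparate impact ratio (lowest group rate divided by highest) and flags values under 0.8, the “four-fifths” heuristic from US employment-selection guidelines; applying that threshold to other domains such as lending is an assumption, and toolkits such as Fairlearn and AIF360 offer comparable metrics. The decision data is made up.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # heuristic threshold from US employment-selection guidance


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: share selected}."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical week of production loan approvals for two groups.
decisions = ([("group_a", True)] * 50 + [("group_a", False)] * 50
             + [("group_b", True)] * 30 + [("group_b", False)] * 70)

ratio = disparate_impact_ratio(decisions)
print(f"ratio = {ratio:.2f}", "-> bias alert" if ratio < FOUR_FIFTHS else "-> ok")  # ratio = 0.60 -> bias alert
```

A ratio is only a screening signal: a flagged result should trigger investigation by the people accountable for the model, not an automatic verdict of discrimination.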

Retire. Old models require secure deletion, especially those trained on personal data. Teams document lessons learned for future projects.

NIST’s GOVERN, MAP, MEASURE, and MANAGE functions and ISO/IEC 42001’s Plan-Do-Check-Act loop reinforce that governance is evergreen, not a one-time checklist.

Building Your Governance Foundation

Start with these five actions based on established frameworks:

Define your program. Document policies aligned with NIST AI RMF, ISO 42001, and relevant regulations. Leading organizations publish governance frameworks to demonstrate transparency.

Align objectives. Connect governance to business goals: link each risk control to the business metrics it protects, while still preventing harmful outcomes.

Assign clear roles. Everyone from the board to junior engineers needs defined responsibilities. Use RACI charts. Update job descriptions. Create escalation paths.

Apply consistently. Governance covers every AI touchpoint, including third-party models. When using external AI services, organizations must validate them against their governance standards.

Adopt continuous improvement. Schedule monthly governance reviews, quarterly policy updates, and annual external audits. Governance frameworks evolve through multiple iterations as organizations learn.

Implementation Patterns

Leading organizations build governance around existing risk management frameworks:

Financial services typically establish AI Review Boards combining technology and ethics perspectives. Models receive risk tier ratings determining testing requirements. Higher-risk models require more frequent performance reporting.
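Risk tiering is essentially a lookup from a few model attributes to a set of obligations. The questions, tier names, required tests, and reporting intervals below are hypothetical, chosen only to show how one tier rating can drive both testing requirements and reporting frequency.

```python
from dataclasses import dataclass

# Hypothetical tier policy: required validation and reporting cadence per tier.
TIER_POLICY = {
    "high": {"tests": ["bias", "robustness", "explainability", "independent validation"],
             "report_every_days": 30},
    "medium": {"tests": ["bias", "robustness"], "report_every_days": 90},
    "low": {"tests": ["performance"], "report_every_days": 365},
}


@dataclass
class ModelProfile:
    affects_individuals: bool  # e.g. credit or employment decisions about people
    fully_automated: bool      # no human reviews outputs before they take effect
    customer_facing: bool


def risk_tier(profile: ModelProfile) -> str:
    """Assign a tier from a few yes/no questions (illustrative rules, not a standard)."""
    if profile.affects_individuals and profile.fully_automated:
        return "high"
    if profile.affects_individuals or profile.customer_facing:
        return "medium"
    return "low"


credit_scorer = ModelProfile(affects_individuals=True, fully_automated=True, customer_facing=True)
tier = risk_tier(credit_scorer)
print(tier, TIER_POLICY[tier]["report_every_days"])  # high 30
```

Keeping the policy in a single table means a review board can tighten a tier’s requirements without touching the classification rules.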

Healthcare organizations often create AI Centers of Excellence governing clinical algorithms. These frameworks mandate clinical review for AI touching patient care. Deployment requires appropriate medical oversight.

Manufacturing companies governing AI in autonomous equipment apply safety-critical systems standards. This includes testing protocols, simulations, and operator override capabilities. Human confirmation remains essential.

These patterns show governance accelerates innovation rather than blocking it. Standardized processes reduce deployment times by eliminating uncertainty and rework. Clear rules enable faster decisions.

Companies treating AI governance as overhead miss the point. It’s infrastructure. You wouldn’t run production systems without monitoring, backups, or security. AI needs the same operational discipline.

The alternative? Learning through catastrophe. And AI failures don’t just crash. They discriminate, violate privacy at scale, or make life-altering mistakes. Governance keeps your AI from becoming a cautionary tale.

Organizations embedding governance from day zero transform AI from a compliance headache into a competitive, trustworthy, and scalable advantage.

Learn more about AI governance in our article:

8 Definitive Steps for AI Governance Success—Don’t Let Your AI Become a Liability
