AI Explainability

Your smartphone can recognize your face in milliseconds. But ask it why it thinks that’s you instead of your twin, and you’ll get digital silence. Or a confabulation (let’s keep it real).

This isn’t a small problem. We’re building AI systems that make medical diagnoses, approve loans, and control autonomous vehicles. Yet most of these systems operate like magic tricks to the user. They work, but nobody can explain how.

Here are 13 problems that make AI explainability harder than it should be.

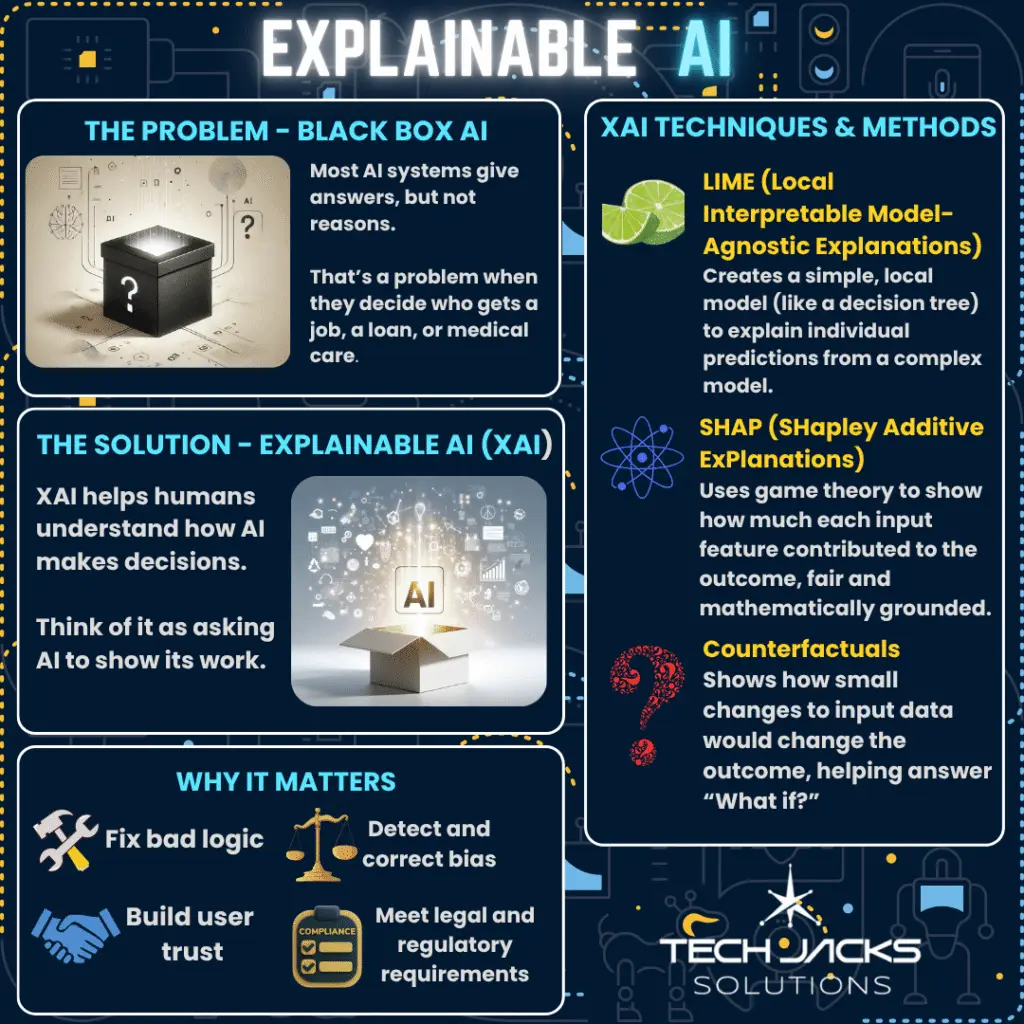

1. Black Box Mystery

Modern AI models contain billions of parameters. Billions.

GPT-4 reportedly has over 1.7 trillion parameters. That’s like trying to understand the behavior of every person in a city with 200 times the population of Earth. Even the engineers who build these systems can’t predict what they’ll do in specific situations. (Oi!)
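For anyone who wants to check the comparison, here is the back-of-the-envelope arithmetic as a small sketch. Both inputs are rough: the parameter count is the unconfirmed figure quoted above, and the world population is rounded to 8 billion, which is an assumption added here.

```python
# Rough arithmetic behind the "200 times the population of Earth" comparison.
# Both inputs are approximations (see the lead-in): an unconfirmed parameter
# count and a world population rounded to 8 billion.
reported_parameters = 1.7e12
world_population = 8.0e9

ratio = reported_parameters / world_population
print(f"Parameters per person on Earth: roughly {ratio:.0f}")
```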

Maps to: NIST AI RMF Measure/Manage functions, EU AI Act Article 13 (transparency requirements), ISO 42001 clause 8.2 (design & development)

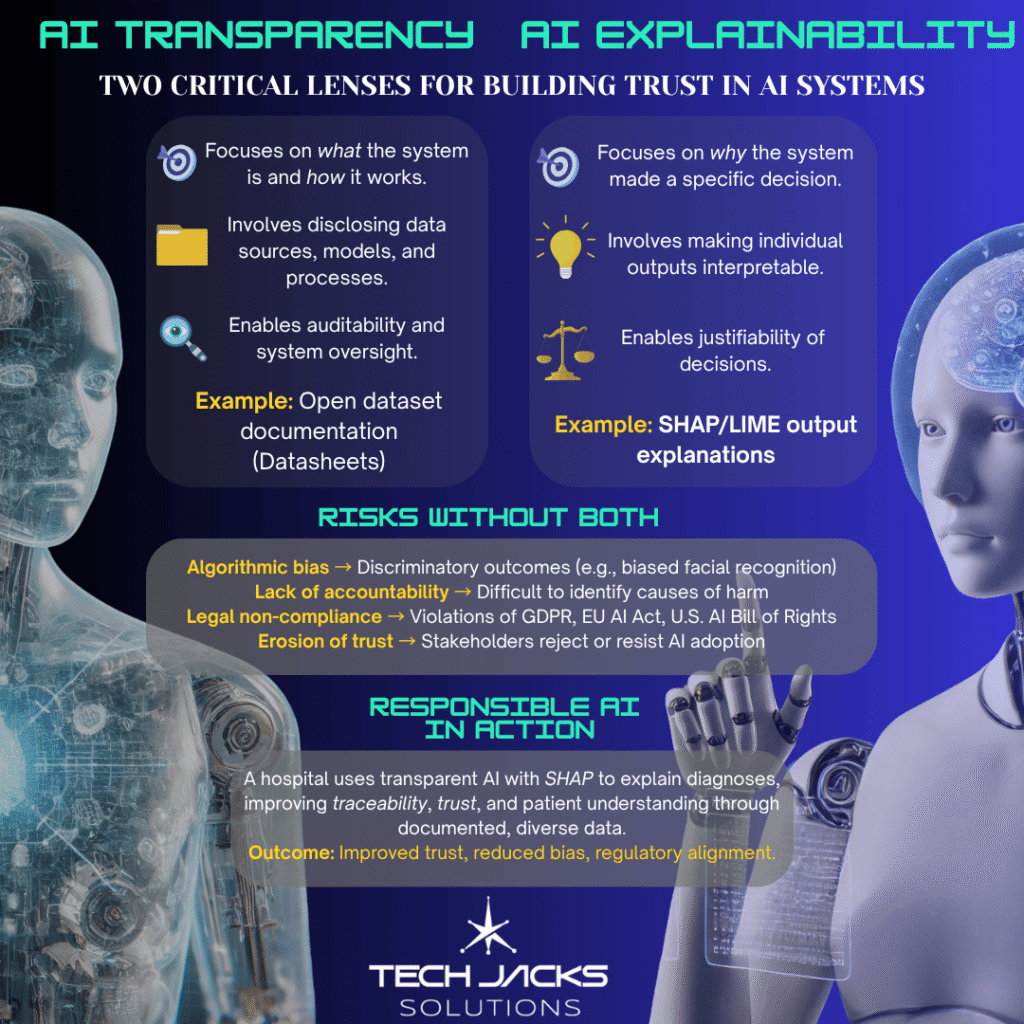

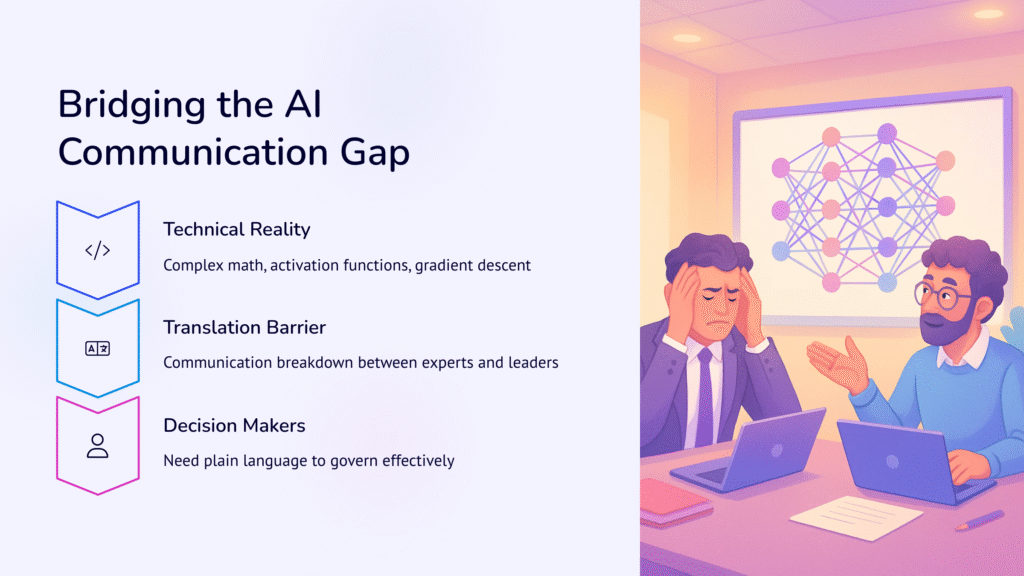

2. Lost in Translation

I once watched a data scientist try to explain a neural network to a friend of mine. The scientist talked about activation functions and gradient descent. My friend’s eyes glazed over. My eyes glazed over.

The gap between AI’s mathematical reality and human language creates a permanent communication breakdown. Technical people understand the mechanics but can’t translate them into the plain English that decision-makers need.

Maps to: GAO AI Accountability Framework People/Process pillar, NIST AI RMF Govern function (risk communication)

3. Audit Nightmare

Auditors hate what they can’t verify.

When PwC audits a bank’s loan decisions, they can review the underwriter’s notes and decision criteria. But how do you audit an AI model that processes 847 variables through 12 hidden layers? You don’t. You cross your fingers and hope.

Maps to: EU AI Act Articles 16-23 (high-risk AI obligations), ISO 42001 clause 9.2 (internal audit)

4. Trust Issues

Trust requires understanding. Always has.

The 2020 Edelman Trust Barometer found that 68% of people won’t use AI services they can’t understand. This isn’t technophobia. It’s rational skepticism about systems that won’t explain themselves.

Maps to: NIST AI RMF Map function (context & impact assessment), GAO Governance pillar

5. Healthcare & Finance Roadblocks

Doctors and financial advisors share one thing: they must justify every decision.

A radiologist using AI to read mammograms needs to explain suspicious findings to patients and colleagues. “The AI said so” doesn’t work in a malpractice lawsuit. This forces many medical professionals to avoid AI tools that could genuinely help patients. AI explainability, anyone?

Maps to: EU AI Act high-risk sector annexes, ISO 42001 clause 4.1 (context of the organization)

6. Hidden Bias Problems

Amazon’s recruiting AI favored male candidates. For years.

The system learned from historical hiring data where men dominated tech roles. It downgraded resumes with words like “women’s” (as in “women’s chess club captain”). Nobody noticed because nobody could see inside the decision process. Amazon eventually scrapped the entire project.
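You don't always need to see inside a model to spot this kind of skew. One possible outside-in check is to compare how often each group gets a positive outcome. The sketch below is not how Amazon found or fixed its problem. The sample data is invented, the function names are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb, used here as an assumption.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is a list of (group, selected) pairs, where `selected`
    is True when the model advanced the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest one."""
    return min(rates.values()) / max(rates.values())

# Hypothetical audit sample, invented purely for illustration.
sample = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # "four-fifths" rule of thumb, an assumption in this sketch
    print("Selection rates differ enough to warrant a closer look.")
```

A check like this only tells you that outcomes differ. It doesn't tell you why, which is where explainability comes back in.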

Maps to: NIST AI RMF Measure function, model risk management frameworks

7. Smart vs. Understandable

Here’s the trade-off: the most accurate AI models are usually the least explainable.

A simple decision tree might be 78% accurate and perfectly transparent. A deep neural network might hit 94% accuracy but operate as a complete black box. Organizations constantly face this choice between performance and comprehension.
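To make "perfectly transparent" concrete, here is a minimal sketch of a hand-written decision tree that returns its decision along with the exact rules it followed. The loan scenario, the function name `approve_loan`, and both thresholds are made up for illustration.

```python
def approve_loan(income, debt_ratio):
    """Tiny hand-written decision tree that reports the rules it followed.

    The thresholds are invented for illustration, not taken from any real
    underwriting policy.
    """
    path = []
    if debt_ratio > 0.45:
        path.append("debt_ratio > 0.45")
        return False, path
    path.append("debt_ratio <= 0.45")
    if income >= 40_000:
        path.append("income >= 40000")
        return True, path
    path.append("income < 40000")
    return False, path

decision, reasons = approve_loan(income=52_000, debt_ratio=0.30)
print("approved" if decision else "declined", "because", " and ".join(reasons))
```

Every outcome comes with a complete, checkable reason. A deep network offers no equivalent of that `path` list out of the box.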

Maps to: GAO Performance pillar, NIST AI RMF Manage function (risk treatment)

8. Broken Tools

LIME and SHAP are the two most popular AI explanation tools. They’re also sometimes unreliable.

LIME can give different explanations for identical inputs from one run to the next, because it builds each explanation from randomly sampled perturbations. SHAP sometimes highlights features that don’t actually influence decisions. It’s like having a translator who occasionally makes up words but speaks with complete confidence.
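The run-to-run wobble is easy to reproduce with a toy version of the idea. The sketch below is not the LIME library. It is a stripped-down local linear surrogate: sample random points around one input, query a stand-in model, and fit a plain least-squares line. Real LIME also weights samples by proximity, which is skipped here. The `black_box` function, the sample count, and the noise scale are all assumptions.

```python
import math
import random

def black_box(x1, x2):
    """Stand-in nonlinear model; any opaque scoring function would do."""
    return math.sin(3 * x1) + x1 * x2

def solve_3x3(a, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]

def local_surrogate(point, seed, n_samples=30, scale=0.5):
    """Fit y ~ b0 + b1*x1 + b2*x2 on random perturbations around `point`."""
    rng = random.Random(seed)
    rows, ys = [], []
    for _ in range(n_samples):
        x1 = point[0] + rng.gauss(0, scale)
        x2 = point[1] + rng.gauss(0, scale)
        rows.append((1.0, x1, x2))
        ys.append(black_box(x1, x2))
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    _, w1, w2 = solve_3x3(xtx, xty)
    return w1, w2

point = (0.4, 1.0)  # the single input being "explained"
for seed in (0, 1, 2):
    w1, w2 = local_surrogate(point, seed)
    print(f"seed={seed}: weight(x1)={w1:+.3f}, weight(x2)={w2:+.3f}")
```

Same input, same model, different seed, different feature weights. In this sketch, pinning the seed makes a run repeatable, and raising `n_samples` should narrow the spread, but neither tells you which explanation is the right one.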

Maps to: ISO 42001 clause 8.3 (validation), NIST AI RMF Measure function

9. Beyond Human Limits

AlphaFold predicts protein structures better than any human scientist. But its reasoning involves relationships between thousands of amino acids that no human brain can fully process.

Should we accept AI decisions that exceed human understanding? And if we do, how do we maintain meaningful oversight?

Maps to: EU AI Act Article 14 (human oversight requirements), GAO Governance pillar

10. Security vs. Transparency

Detailed explanations can backfire spectacularly.

In 2019, researchers showed how explanation data could be used to reverse-engineer proprietary AI models. Attackers can use explanation outputs to steal trade secrets or fool security systems. More transparency sometimes means less security.
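Here is the simplest possible version of that leak, as a hedged sketch rather than a reconstruction of the 2019 research. It assumes the "proprietary" model is linear and that the service exposes a sensitivity-style explanation. Under those assumptions, a single query gives away every parameter. The weights, the function names, and the query are all hypothetical.

```python
# Hypothetical proprietary parameters the vendor wants to keep private.
SECRET_WEIGHTS = [0.8, -1.5, 0.3]
SECRET_BIAS = 0.25

def predict(x):
    """Public scoring endpoint."""
    return sum(w * v for w, v in zip(SECRET_WEIGHTS, x)) + SECRET_BIAS

def explain(x, eps=1e-6):
    """Public explanation endpoint: score change per unit change in each feature.

    Implemented as a finite difference, so it only calls `predict`.
    """
    base = predict(x)
    sensitivities = []
    for i in range(len(x)):
        bumped = list(x)
        bumped[i] += eps
        sensitivities.append((predict(bumped) - base) / eps)
    return sensitivities

# Attacker side: one call to each public endpoint.
query = [1.0, 2.0, 3.0]
stolen_weights = explain(query)
stolen_bias = predict(query) - sum(w * v for w, v in zip(stolen_weights, query))

print("recovered weights:", [round(w, 4) for w in stolen_weights])
print("recovered bias:   ", round(stolen_bias, 4))
```

Deep models don't fall over this easily, and a real attack would need far more queries. The sketch only shows why each explanation hands an attacker more information than a bare prediction does.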

Maps to: NIST AI RMF Manage function (privacy risk mitigation), OWASP ML Top-10 (ML03 – Model Inversion)

11. Fake Explanations

AI explanations might be completely wrong about how the system actually works.

Think of it like asking someone why they chose chocolate ice cream. They might say “I love sweet flavors” when they actually chose it because it was closest to the register. AI explanations can have the same problem. They describe plausible reasoning, not actual reasoning.
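One way to catch a plausible-but-wrong explanation is to test it against the model's actual behavior: knock out each feature and see whether the output moves. The sketch below borrows the ice cream scenario. The model, the claimed importance scores, and the baseline "feature absent" values are all invented for illustration.

```python
def model(features):
    """Hypothetical model that in fact relies only on `distance`."""
    return 1.0 if features["distance"] < 2.0 else 0.0

# A plausible-sounding explanation (invented here) that credits the wrong feature.
claimed_importance = {"sweetness": 0.9, "distance": 0.1}

def ablation_effect(model, features, name, baseline):
    """Change in model output when one feature is replaced by a baseline value."""
    altered = dict(features)
    altered[name] = baseline
    return abs(model(features) - model(altered))

example = {"sweetness": 8.0, "distance": 1.0}
baselines = {"sweetness": 0.0, "distance": 10.0}  # assumed "feature absent" values

measured = {
    name: ablation_effect(model, example, name, baselines[name])
    for name in example
}
top_claimed = max(claimed_importance, key=claimed_importance.get)
top_measured = max(measured, key=measured.get)

print("explanation credits:", top_claimed)
print("ablation credits:   ", top_measured)
```

When the two disagree, the explanation is telling a nice story about the wrong cause. The check itself depends on the baseline values you pick, so treat it as a smoke test, not proof.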

Maps to: ISO 42001 clause 8.3 (verification), NIST AI RMF Measure function

12. Moving Legal Targets

The EU AI Act requires high-risk systems to be “sufficiently transparent” and promises affected people “clear and meaningful explanations.” What does that mean exactly? Nobody knows yet.

Companies are building compliance strategies around vague requirements that regulators are still figuring out. IEEE P7001 offers some guidance, but legal interpretation continues shifting as courts handle their first AI cases.

Maps to: EU AI Act Chapters II-III obligations, GAO Data pillar

13. Information Overload

Sometimes too much explanation is worse than none.

Emergency room doctors using AI diagnostic tools don’t want 15-minute explanations. They want clear, actionable insights in 30 seconds. Overwhelming users with technical details can actually increase medical errors and decision time.
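A common way to trim an explanation for a time-pressed user is to show only the few factors that matter most. The sketch below does that with a hypothetical set of attribution scores. The feature names, the numbers, and the `summarize` helper are invented for illustration and are not drawn from any real diagnostic tool.

```python
def summarize(attributions, k=3):
    """Keep the k attributions with the largest absolute value, as short phrases."""
    ranked = sorted(attributions.items(), key=lambda item: abs(item[1]), reverse=True)
    lines = []
    for name, value in ranked[:k]:
        direction = "raises" if value > 0 else "lowers"
        lines.append(f"{name} {direction} risk")
    return lines

# Hypothetical attribution scores for one patient, invented for illustration.
scores = {
    "troponin": 0.42, "age": 0.08, "heart_rate": -0.03,
    "st_elevation": 0.31, "bmi": 0.01, "blood_pressure": -0.12,
}

for line in summarize(scores):
    print(line)
```

Three short lines instead of six numbers. The full breakdown can still sit one click away for the audit trail.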

Maps to: NIST AI RMF Map function (context & audience), GAO People/Process pillar

The Real Challenge with AI Explainability

These aren’t just technical problems. They’re trust problems.

Every unexplainable AI decision erodes public confidence. Every biased algorithm that goes undetected damages AI’s reputation. Every regulatory mismatch slows innovation in areas where AI could genuinely help.

The solution isn’t perfect explanations. It’s better ones. Companies that figure out how to make their AI systems more transparent will win more customers, pass more audits, and avoid more lawsuits.

That’s not a prediction. It’s already happening.

Key Frameworks Referenced:

- NIST AI Risk Management Framework (AI RMF)

- EU AI Act

- ISO/IEC 42001 AI Management Systems

- GAO AI Accountability Framework

- IEEE P7001 Transparency Standards

- OWASP ML Security Top-10